Just-In-Time compilation using GCC (libgccjit.so)

GNU Tools Cauldron 2014

David Malcolm <dmalcolm@redhat.com>

Experimental branch of GCC

Branch "dmalcolm/jit" within git

Building GCC as a shared library

Suitable for embedding inside other programs and libraries

In-process code-generation, at runtime

See http://gcc.gnu.org/wiki/JIT

Prebuilt RPM packages available for Fedora and RHEL:

JIT compilation use cases:

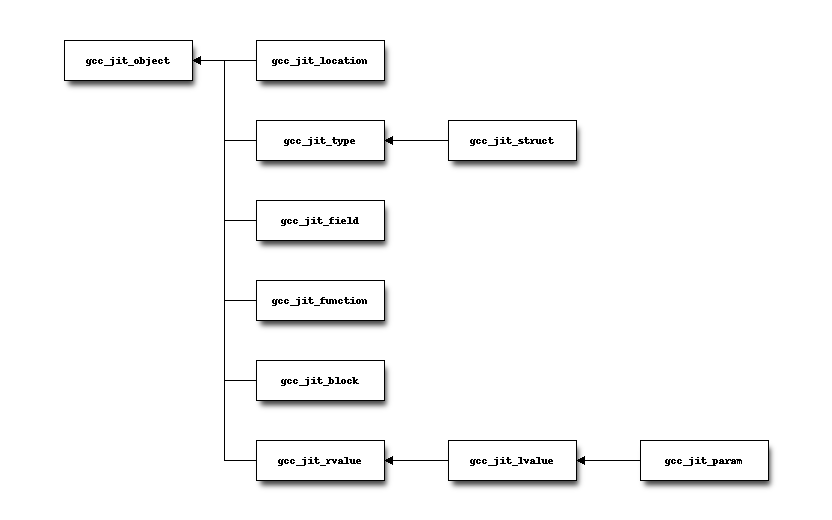

A C header file; currently with:

- 72 function prototypes

- 12 opaque types

- 8 enums

All state is hung off of context objects:

typedef struct gcc_jit_context gcc_jit_context;

extern gcc_jit_context *
gcc_jit_context_acquire (void);

extern void
gcc_jit_context_release (gcc_jit_context *ctxt);

Simple memory management for client code

Everything that's created from a context is cleaned up when the context is released.
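The ownership rule above can be illustrated with a small stand-alone sketch. It is not libgccjit's implementation: every name here (`sketch_context`, `sketch_context_alloc`, and so on) is hypothetical, and the linked list is just one simple way to let a context remember what it created so that a single release call can clean everything up.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical names throughout; this is NOT libgccjit's implementation.  */

/* One object owned by a context, chained into a singly linked list.  */
struct sketch_node
{
  struct sketch_node *next;
  void *payload;
};

typedef struct sketch_context
{
  struct sketch_node *objects; /* everything created from this context */
  size_t num_objects;
} sketch_context;

/* Create an empty context; returns NULL if out of memory.  */
sketch_context *
sketch_context_acquire (void)
{
  return calloc (1, sizeof (sketch_context));
}

/* Allocate a zeroed object of SIZE bytes (SIZE > 0) owned by CTXT.
   The caller never frees it directly.  */
void *
sketch_context_alloc (sketch_context *ctxt, size_t size)
{
  assert (ctxt != NULL && size > 0);
  struct sketch_node *node = malloc (sizeof *node);
  if (!node)
    return NULL;
  node->payload = calloc (1, size);
  if (!node->payload)
    {
      free (node);
      return NULL;
    }
  node->next = ctxt->objects;
  ctxt->objects = node;
  ctxt->num_objects++;
  return node->payload;
}

/* Free every object created from CTXT, then CTXT itself.
   Returns the number of objects that were cleaned up.  */
size_t
sketch_context_release (sketch_context *ctxt)
{
  assert (ctxt != NULL);
  size_t freed = 0;
  struct sketch_node *node = ctxt->objects;
  while (node)
    {
      struct sketch_node *next = node->next;
      free (node->payload);
      free (node);
      freed++;
      node = next;
    }
  free (ctxt);
  return freed;
}
```

Client code in this sketch only ever pairs one acquire with one release; the objects in between need no individual cleanup.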

extern const char *
gcc_jit_object_get_debug_string (gcc_jit_object *obj);

Optional, but useful to end-users

/* Use this to create locations: */
extern gcc_jit_location *
gcc_jit_context_new_location (gcc_jit_context *ctxt,
                              const char *filename,
                              int line,
                              int column);

/* Need to turn on generation of debuginfo: */
gcc_jit_context_set_bool_option (
  ctxt, GCC_JIT_BOOL_OPTION_DEBUGINFO, 1);

With debuginfo turned on, we can single-step in gdb through the generated machine code, e.g. through code generated from bytecode:

(gdb) break fibonacci
(gdb) run
Breakpoint 1, fibonacci (input=8) at main.cc:43
43        DUP,
(gdb) next
47        PUSH_INT_CONST, 2,
(gdb) next
51        BINARY_INT_COMPARE_LT,
(gdb) next
55        JUMP_ABS_IF_TRUE, 17,
(gdb) next
59        DUP,
(gdb) next
63        PUSH_INT_CONST, 1,
(gdb) next
67        BINARY_INT_SUBTRACT,

Access to simple C types:

gcc_jit_type *int_type =
  gcc_jit_context_get_type (ctxt, GCC_JIT_TYPE_INT);

gcc_jit_type *double_type =
  gcc_jit_context_get_type (ctxt, GCC_JIT_TYPE_DOUBLE);

/* etc */

A common pattern:

(1) one-time setup: the client code maps its own API into the JIT world:

- create gcc_jit_type instances representing the structs and other types of interest

- similar for globals, functions, etc

(2) repeated reuse: as each method becomes "hot", use the setup from (1) to compile that method to machine code

Seen e.g. in GNU Octave's JIT compiler.

How to handle this?

If we do it all in one context, we'll have a slow leak due to all of the per-method state never going away.

Solution: nested contexts:

extern gcc_jit_context *
gcc_jit_context_new_child_context (gcc_jit_context *parent_ctxt);
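To show why nesting avoids the slow leak, here is a stand-alone sketch of the idea rather than of libgccjit itself. All names (`nest_context`, `nest_context_define`, `nest_context_lookup`) are hypothetical, and the "state" is reduced to a small table of named integers. A child can see everything in its parent, but per-method entries live only in the child and vanish when the child is released.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical names throughout; this is NOT libgccjit's implementation.  */

#define NEST_MAX_ENTRIES 16

typedef struct nest_context
{
  struct nest_context *parent;         /* NULL for a top-level context */
  const char *names[NEST_MAX_ENTRIES]; /* not copied: must outlive the context */
  int values[NEST_MAX_ENTRIES];
  size_t num_entries;
} nest_context;

/* Create an empty top-level context; returns NULL if out of memory.  */
nest_context *
nest_context_acquire (void)
{
  return calloc (1, sizeof (nest_context));
}

/* Create an empty context that can see everything defined in PARENT.  */
nest_context *
nest_context_new_child_context (nest_context *parent)
{
  nest_context *child = nest_context_acquire ();
  if (child)
    child->parent = parent;
  return child;
}

/* Record NAME -> VALUE in CTXT only.  Returns 1 on success, 0 if full.  */
int
nest_context_define (nest_context *ctxt, const char *name, int value)
{
  assert (ctxt != NULL && name != NULL);
  if (ctxt->num_entries == NEST_MAX_ENTRIES)
    return 0;
  ctxt->names[ctxt->num_entries] = name;
  ctxt->values[ctxt->num_entries] = value;
  ctxt->num_entries++;
  return 1;
}

/* Look NAME up in CTXT, then in its ancestors.  Returns NULL if absent.  */
const int *
nest_context_lookup (const nest_context *ctxt, const char *name)
{
  for (; ctxt != NULL; ctxt = ctxt->parent)
    for (size_t i = 0; i < ctxt->num_entries; i++)
      if (strcmp (ctxt->names[i], name) == 0)
        return &ctxt->values[i];
  return NULL;
}

/* Release CTXT and its own entries; the parent is left untouched.  */
void
nest_context_release (nest_context *ctxt)
{
  free (ctxt);
}
```

In this sketch the one-time setup goes into the parent, each hot method gets a short-lived child, and the parent's table never grows.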

How to generate the equivalent of:

const char *
test_string_literal (void)
{
  return "hello world";
}

gcc_jit_type *const_char_ptr_type =
  gcc_jit_context_get_type (ctxt, GCC_JIT_TYPE_CONST_CHAR_PTR);

/* Build the test_fn. */
gcc_jit_function *test_fn =
  gcc_jit_context_new_function (ctxt, NULL,
                                GCC_JIT_FUNCTION_EXPORTED,
                                const_char_ptr_type,
                                "test_string_literal",
                                0, NULL,
                                0);

gcc_jit_block *block = gcc_jit_function_new_block (test_fn, NULL);

gcc_jit_block_end_with_return (
  block, NULL,
  gcc_jit_context_new_string_literal (ctxt, "hello world"));

Example of a conditional:

/* if (i >= n) */
gcc_jit_block_end_with_conditional (
  loop_cond, NULL,
  gcc_jit_context_new_comparison (
    ctxt, NULL,
    GCC_JIT_COMPARISON_GE,
    gcc_jit_lvalue_as_rvalue (i),
    gcc_jit_param_as_rvalue (n)),
  after_loop,
  loop_body);

/* sum += i * i */
gcc_jit_block_add_assignment_op (
  loop_body, NULL,
  sum, /* lvalue */
  GCC_JIT_BINARY_OP_PLUS,
  gcc_jit_context_new_binary_op ( /* rvalue */
    ctxt, NULL,
    GCC_JIT_BINARY_OP_MULT, the_type,
    gcc_jit_lvalue_as_rvalue (i),
    gcc_jit_lvalue_as_rvalue (i)));
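The two fragments above read most naturally as pieces of one loop. As a rough C analogue, each block becomes a label and each block ends with an explicit jump or return. The block names loop_cond, loop_body and after_loop come from the fragments; the function name `loop_test`, the initial block, the `i++` step and the helper functions are assumptions added to make the sketch complete.

```c
#include <assert.h>

/* Block-structured form: one label per block, and every block ends
   with an explicit jump or a return.  */
int
loop_test (int n)
{
  int i;
  int sum;

  /* initial block (assumed; not shown in the fragments) */
  sum = 0;
  i = 0;
  goto loop_cond;

loop_cond:
  /* if (i >= n) */
  if (i >= n)
    goto after_loop;
  else
    goto loop_body;

loop_body:
  /* sum += i * i */
  sum += i * i;
  i++; /* assumed increment; not shown in the fragments */
  goto loop_cond;

after_loop:
  return sum;
}

/* The same function in ordinary structured C, for comparison.  */
int
loop_test_structured (int n)
{
  int sum = 0;
  for (int i = 0; i < n; i++)
    sum += i * i;
  return sum;
}

/* Sanity check that the two forms agree for small inputs.  */
void
check_forms_agree (void)
{
  for (int n = 0; n < 20; n++)
    assert (loop_test (n) == loop_test_structured (n));
}
```

Note that the conditional names its "true" target first, so the `i >= n` test exits the loop when true and falls into the body otherwise.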

extern void
gcc_jit_block_add_comment (gcc_jit_block *block,
                           gcc_jit_location *loc,
                           const char *text);

Very useful for debugging

e.g.

gcc_jit_block_add_comment (b_entry, NULL,
                           "for i in 0 to (ARRAY_SIZE - 1):");

Internally, comments are implemented as dummy labels.

They shouldn't affect optimization.

They are visible in dumps of the initial tree and of gimple.

Inspired by OpenGL:

- errors are recorded on the context

- compilation fails if an error has occurred

- API calls fail gracefully when called after an error

Client code only has to check for errors once.

extern const char *
gcc_jit_context_get_first_error (gcc_jit_context *ctxt);

etc
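The "check once" style can be sketched without libgccjit. Everything below is hypothetical (`err_context`, `err_context_new_int`, `err_context_new_add`, `err_context_compile`) and only mimics the shape of the approach. A failing call records the first error and returns NULL, later calls accept NULL inputs without crashing, and the final step refuses to proceed if anything went wrong.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical names throughout; this is NOT libgccjit's implementation.  */

#define ERR_POOL_SIZE 32

typedef struct err_value
{
  int n;
} err_value;

typedef struct err_context
{
  const char *first_error; /* NULL until something goes wrong */
  err_value pool[ERR_POOL_SIZE];
  size_t used;
} err_context;

/* Remember only the first error; later ones are usually knock-on effects.  */
static void
record_error (err_context *ctxt, const char *msg)
{
  if (ctxt->first_error == NULL)
    ctxt->first_error = msg;
}

static err_value *
alloc_value (err_context *ctxt, int n)
{
  if (ctxt->used == ERR_POOL_SIZE)
    {
      record_error (ctxt, "alloc_value: pool exhausted");
      return NULL;
    }
  ctxt->pool[ctxt->used].n = n;
  return &ctxt->pool[ctxt->used++];
}

/* Make a constant; negative values are treated as an API misuse here.  */
err_value *
err_context_new_int (err_context *ctxt, int n)
{
  if (n < 0)
    {
      record_error (ctxt, "new_int: negative value");
      return NULL;
    }
  return alloc_value (ctxt, n);
}

/* Fails gracefully (no crash) when handed the NULL from an earlier failure.  */
err_value *
err_context_new_add (err_context *ctxt, const err_value *a, const err_value *b)
{
  if (a == NULL || b == NULL)
    {
      record_error (ctxt, "new_add: NULL operand");
      return NULL;
    }
  return alloc_value (ctxt, a->n + b->n);
}

/* The single place the client checks: returns 1 and sets *OUT on success,
   0 if any error was recorded along the way.  */
int
err_context_compile (err_context *ctxt, const err_value *v, int *out)
{
  assert (ctxt != NULL && out != NULL);
  if (v == NULL)
    record_error (ctxt, "compile: NULL value");
  if (ctxt->first_error != NULL)
    return 0;
  *out = v->n;
  return 1;
}

const char *
err_context_get_first_error (const err_context *ctxt)
{
  return ctxt->first_error;
}
```

The client can nest calls freely and inspect the result of the final step only, then ask for the first recorded error if that step failed.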

Methods, and (optionally) operator overloading:

struct quadratic
{
  double a;
  double b;
  double c;
  double discriminant;
};

gccjit::rvalue q_a = param_q.dereference_field (field_a);
gccjit::rvalue q_b = param_q.dereference_field (field_b);
gccjit::rvalue q_c = param_q.dereference_field (field_c);

gccjit::rvalue four =
  ctxt.new_rvalue (double_type, 4);

gccjit::block block = calc_discriminant.new_block ();
block.add_comment ("(b^2 - 4ac)");
block.add_assignment (
  /* q->discriminant =... */
  param_q.dereference_field (field_discriminant),
  /* (q->b * q->b) - (4 * q->a * q->c) */
  (q_b * q_b) - (four * q_a * q_c));
block.end_with_return ();
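For orientation, the function being built above should behave roughly like the following plain C. This is an assumption based on the comments in the snippet: the slides do not show the exported function's name or signature, so `calc_discriminant` taking a `struct quadratic *` and returning void is a guess from the variable names and the empty `end_with_return ()`.

```c
#include <assert.h>
#include <stddef.h>

struct quadratic
{
  double a;
  double b;
  double c;
  double discriminant;
};

/* Assumed C equivalent of the JIT-built function: stores the
   discriminant of a*x^2 + b*x + c into the struct.  */
void
calc_discriminant (struct quadratic *q)
{
  assert (q != NULL);
  /* (b^2 - 4ac) */
  q->discriminant = (q->b * q->b) - (4 * q->a * q->c);
}
```

The overloaded `*` and `-` in the C++ snippet build expression nodes for later compilation, whereas here they compute values directly.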

See https://github.com/davidmalcolm/pygccjit:

# Create parameter "i":
param_i = ctxt.new_param(int_type, b'i')

# Create the function:
fn = ctxt.new_function(gccjit.FunctionKind.EXPORTED,
                       int_type,
                       b"square",
                       [param_i])

# Create a basic block within the function:
block = fn.new_block(b'entry')

# This basic block is relatively simple:
block.end_with_return(
    ctxt.new_binary_op(gccjit.BinaryOp.MULT,
                       int_type,
                       param_i, param_i))

# Having populated the context, compile it.
jit_result = ctxt.compile()

# This is what you get back from ctxt.compile():
assert isinstance(jit_result, gccjit.Result)

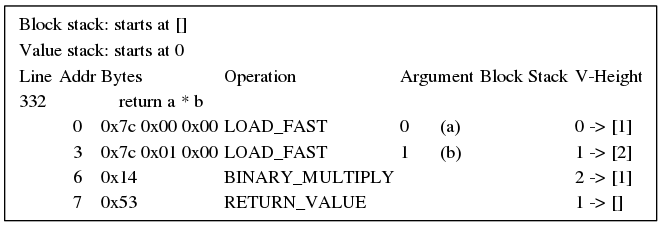

https://github.com/davidmalcolm/coconut

(not to be confused with "Unladen Swallow")

Compiles CPython bytecode to machine code

Uses the Python bindings to libgccjit

def f(a, b):
    return a * b

One basic block:

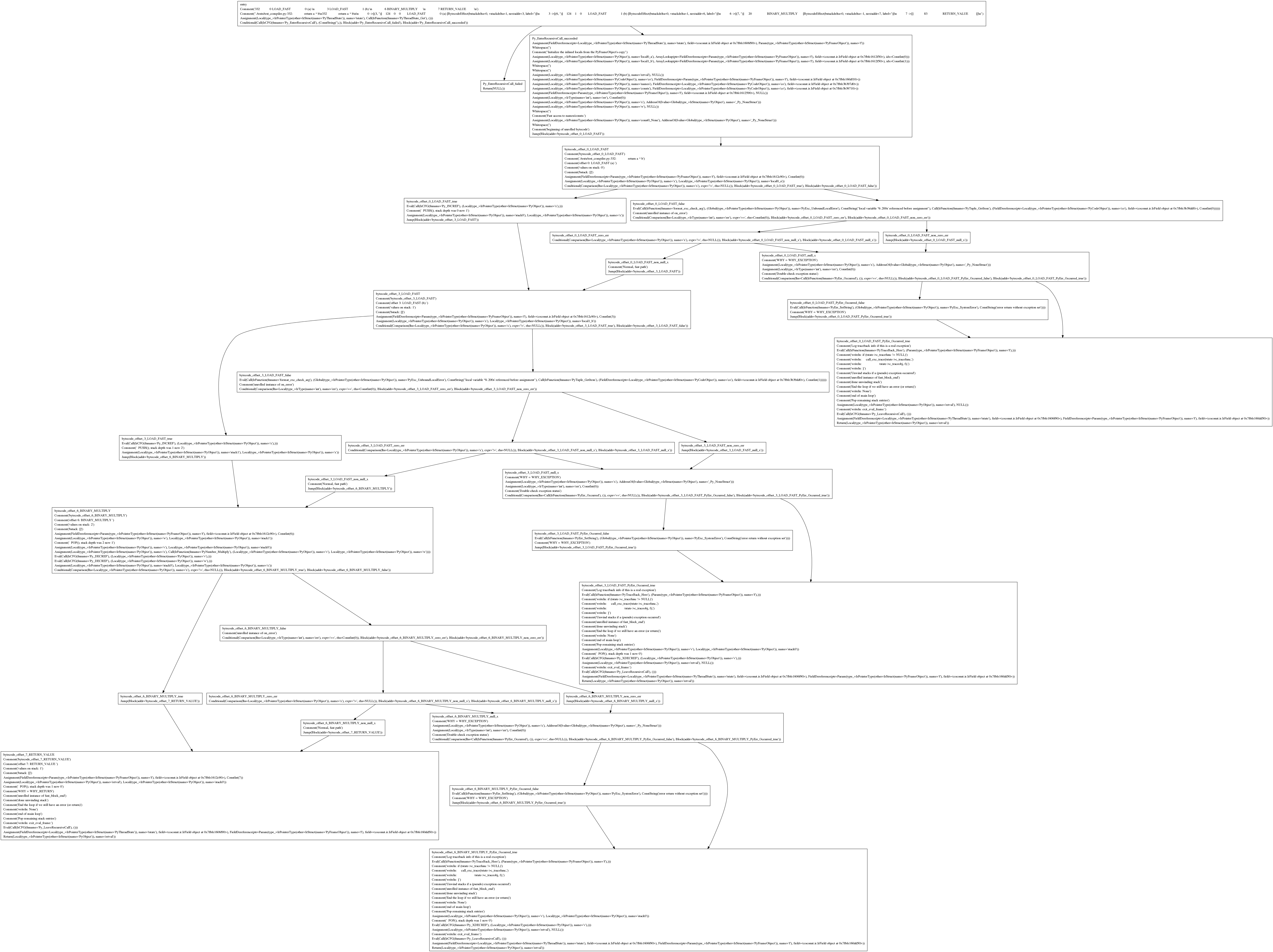

31 basic blocks:

Status: an experiment:

Yes please!

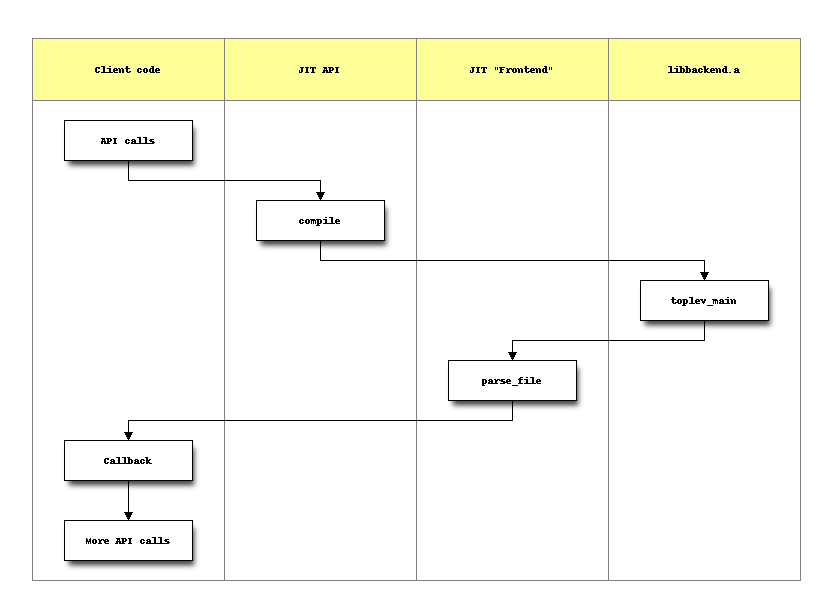

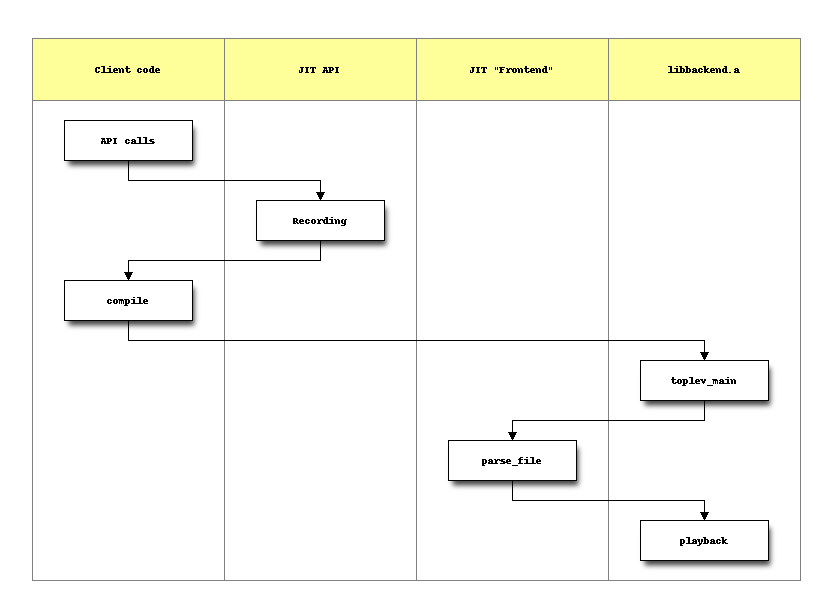

The original way it worked:

gcc::context::context ()
{
  m_dumps = new gcc::dump_manager ();
  m_passes = new gcc::pass_manager (this);
}

Add a big mutex and...

/* For those that want to, this function aims to clean up enough
   state that you can call toplev::main again. */
void
toplev::finalize (void)
{
  cgraph_c_finalize ();
  cgraphbuild_c_finalize ();
  cgraphunit_c_finalize ();
  dwarf2out_c_finalize ();
  /* etc */
}

void cgraph_c_finalize (void)
{
  x_cgraph_nodes_queue = NULL;
  cgraph_n_nodes = 0;
  cgraph_max_uid = 0;
  cgraph_edge_max_uid = 0;
  cgraph_global_info_ready = false;
  cgraph_state = CGRAPH_STATE_PARSING;
  cgraph_function_flags_ready = false;
  /* etc */
}

The testsuite for JIT now runs at the equivalent of -O3

(with each test running in-process 5 times, to shake out state issues)
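The reset-then-rerun idea can be shown in miniature. This is a toy, not GCC code: the globals, `toy_module_finalize`, `toy_compile` and `toy_run_repeatedly` are all hypothetical stand-ins. One function resets a module's file-scope state, and a driver repeats the same "compilation" in-process to confirm that every run sees a clean slate.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical names throughout; a toy stand-in, not GCC code.  */

/* File-scope state, standing in for a compiler's global variables.  */
static int toy_n_nodes;
static int toy_max_uid;
static int toy_info_ready;

/* Reset this module's globals to their start-of-run values.  */
void
toy_module_finalize (void)
{
  toy_n_nodes = 0;
  toy_max_uid = 0;
  toy_info_ready = 0;
}

/* One "compilation": creates NUM_FUNCTIONS nodes and returns the
   highest uid handed out.  Expects to start from clean state.  */
int
toy_compile (int num_functions)
{
  /* A stale flag left over from an earlier run is the kind of bug
     that repeated in-process runs are meant to expose.  */
  assert (!toy_info_ready);
  for (int i = 0; i < num_functions; i++)
    {
      toy_n_nodes++;
      toy_max_uid++;
    }
  toy_info_ready = 1;
  return toy_max_uid;
}

/* Run the same compilation ITERATIONS times, finalizing in between.
   Returns 1 if every run produced the same result, else 0.  */
int
toy_run_repeatedly (int num_functions, int iterations)
{
  int first = 0;
  for (int i = 0; i < iterations; i++)
    {
      int result = toy_compile (num_functions);
      toy_module_finalize ();
      if (i == 0)
        first = result;
      else if (result != first)
        return 0;
    }
  return 1;
}
```

If `toy_module_finalize` forgot to reset `toy_max_uid`, the second iteration would return a different uid and the driver would report a mismatch.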

Currently the library:

This shows up as a significant part of the profile.

I would prefer to do this all in-process.

Are there shared libraries for these stages in our toolchain?

OK if I factor out the spec language from the gcc harness?

Thanks for listening!