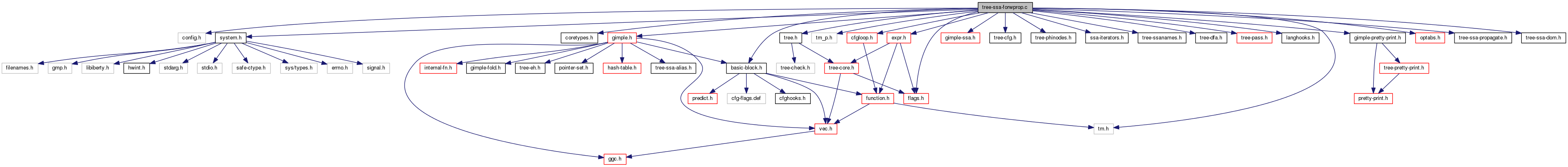

#include "config.h"#include "system.h"#include "coretypes.h"#include "tm.h"#include "tree.h"#include "tm_p.h"#include "basic-block.h"#include "gimple-pretty-print.h"#include "gimple.h"#include "gimple-ssa.h"#include "tree-cfg.h"#include "tree-phinodes.h"#include "ssa-iterators.h"#include "tree-ssanames.h"#include "tree-dfa.h"#include "tree-pass.h"#include "langhooks.h"#include "flags.h"#include "expr.h"#include "cfgloop.h"#include "optabs.h"#include "tree-ssa-propagate.h"#include "tree-ssa-dom.h"

Macros | |

| #define | CPD_ITERATIONS 5 |

Variables | |

| static bool | cfg_changed |

Macro Definition Documentation

| #define CPD_ITERATIONS 5 |

Function Documentation

|

static |

Perform re-associations of the plus or minus statement STMT that are always permitted. Returns true if the CFG was changed.

We can't reassociate at all for saturating types.

First contract negates.

A +- (-B) -> A -+ B.

(-A) + B -> B - A.

We can't reassociate floating-point or fixed-point plus or minus because of saturation to +-Inf.

Second match patterns that allow contracting a plus-minus pair

irrespective of overflow issues.

(A +- B) - A -> +- B

(A +- B) -+ B -> A

(CST +- A) +- CST -> CST +- A

(A +- CST) +- CST -> A +- CST

~A + A -> -1

~A + 1 -> -A

A - (A +- B) -> -+ B

A +- (B +- A) -> +- B

CST +- (CST +- A) -> CST +- A

CST +- (A +- CST) -> CST +- A

A + ~A -> -1

via commutating the addition and contracting operations to zero

by reassociation. (A +- B) - A -> +- B.

(A +- B) -+ B -> A.

(CST +- A) +- CST -> CST +- A.

(A +- CST) +- CST -> A +- CST.

~A + A -> -1.

~A + 1 -> -A.

A - (A +- B) -> -+ B.

A +- (B +- A) -> +- B.

CST +- (CST +- A) -> CST +- A.

CST +- (A +- CST) -> CST +- A.

A + ~A -> -1.

|

static |

Associate operands of a POINTER_PLUS_EXPR assignmen at *GSI. Returns true if anything changed, false otherwise.

Pattern match tem = (sizetype) ptr; tem = tem & algn; tem = -tem; ... = ptr p+ tem; and produce the simpler and easier to analyze with respect to alignment ... = ptr & ~algn;

References gimple_assign_set_rhs1(), remove_prop_source_from_use(), and update_stmt().

|

static |

Checks if the destination ssa name in DEF_STMT can be used as propagation source. Returns true if so, otherwise false.

If the rhs has side-effects we cannot propagate from it.

If the rhs is a load we cannot propagate from it.

Constants can be always propagated.

We cannot propagate ssa names that occur in abnormal phi nodes.

If the definition is a conversion of a pointer to a function type, then we can not apply optimizations as some targets require function pointers to be canonicalized and in this case this optimization could eliminate a necessary canonicalization.

References gimple_assign_rhs1(), POINTER_TYPE_P, TREE_CODE, and TREE_TYPE.

Referenced by forward_propagate_into_comparison_1().

|

static |

Combine OP0 CODE OP1 in the context of a COND_EXPR. Returns the folded result in a form suitable for COND_EXPR_COND or NULL_TREE, if there is no suitable simplified form. If INVARIANT_ONLY is true only gimple_min_invariant results are considered simplified.

Require that we got a boolean type out if we put one in.

Canonicalize the combined condition for use in a COND_EXPR.

Bail out if we required an invariant but didn't get one.

Referenced by forward_propagate_into_comparison_1().

|

static |

Propagate from the ssa name definition statements of COND_EXPR values in the rhs of statement STMT into the conditional arms if that simplifies it. Returns true if the stmt was changed.

References gimple_assign_set_rhs_from_tree(), and gsi_stmt().

|

static |

Combine two conversions in a row for the second conversion at *GSI. Returns 1 if there were any changes made, 2 if cfg-cleanup needs to run. Else it returns 0.

Don't propagate ssa names that occur in abnormal phis.

In addition to the cases of two conversions in a row

handled below, if we are converting something to its own

type via an object of identical or wider precision, neither

conversion is needed. Likewise, if the intermediate and initial types are either both

float or both integer, we don't need the middle conversion if the

former is wider than the latter and doesn't change the signedness

(for integers). Avoid this if the final type is a pointer since

then we sometimes need the middle conversion. Likewise if the

final type has a precision not equal to the size of its mode. If we have a sign-extension of a zero-extended value, we can

replace that by a single zero-extension. Likewise if the

final conversion does not change precision we can drop the

intermediate conversion. Two conversions in a row are not needed unless:

- some conversion is floating-point (overstrict for now), or

- some conversion is a vector (overstrict for now), or

- the intermediate type is narrower than both initial and

final, or

- the intermediate type and innermost type differ in signedness,

and the outermost type is wider than the intermediate, or

- the initial type is a pointer type and the precisions of the

intermediate and final types differ, or

- the final type is a pointer type and the precisions of the

initial and intermediate types differ. A truncation to an unsigned type should be canonicalized as

bitwise and of a mask. If we are converting an integer to a floating-point that can

represent it exactly and back to an integer, we can skip the

floating-point conversion.

References gimple_assign_set_rhs1(), gimple_assign_set_rhs_code(), remove_prop_source_from_use(), and update_stmt().

|

static |

For pointers p2 and p1 return p2 - p1 if the difference is known and constant, otherwise return NULL.

For each of p1 and p2 we need to iterate at least twice, to handle ADDR_EXPR directly in p1/p2, SSA_NAME with ADDR_EXPR or POINTER_PLUS_EXPR etc. on definition's stmt RHS. Iterate a few extra times.

References BITS_PER_UNIT, build_fold_addr_expr, BUILT_IN_NORMAL, CHAR_BIT, char_type_node, compare_tree_int(), DECL_BUILT_IN_CLASS, DECL_FUNCTION_CODE, gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_single_p(), gimple_call_arg(), gimple_call_fndecl(), gimple_call_lhs(), gimple_call_num_args(), gimple_vuse(), gsi_stmt(), host_integerp(), HOST_WIDE_INT, is_gimple_call(), NULL, NULL_TREE, size_one_node, size_zero_node, SSA_NAME_DEF_STMT, string_constant(), TREE_CODE, tree_low_cst(), TREE_STRING_LENGTH, TREE_TYPE, and TYPE_MODE.

|

inlinestatic |

Given a ssa_name in NAME see if it was defined by an assignment and set CODE to be the code and ARG1 to the first operand on the rhs and ARG2 to the second operand on the rhs.

Ignore arg3 currently.

Referenced by simplify_rotate().

Forward propagation of expressions for single use variables. Copyright (C) 2004-2013 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see http://www.gnu.org/licenses/. This pass propagates the RHS of assignment statements into use sites of the LHS of the assignment. It's basically a specialized form of tree combination. It is hoped all of this can disappear when we have a generalized tree combiner.

One class of common cases we handle is forward propagating a single use variable into a COND_EXPR.

bb0: x = a COND b; if (x) goto ... else goto ...

Will be transformed into:

bb0: if (a COND b) goto ... else goto ...

Similarly for the tests (x == 0), (x != 0), (x == 1) and (x != 1).

Or (assuming c1 and c2 are constants):

bb0: x = a + c1; if (x EQ/NEQ c2) goto ... else goto ...

Will be transformed into:

bb0: if (a EQ/NEQ (c2 - c1)) goto ... else goto ...

Similarly for x = a - c1.

Or

bb0: x = !a if (x) goto ... else goto ...

Will be transformed into:

bb0: if (a == 0) goto ... else goto ...

Similarly for the tests (x == 0), (x != 0), (x == 1) and (x != 1). For these cases, we propagate A into all, possibly more than one, COND_EXPRs that use X.

Or

bb0: x = (typecast) a if (x) goto ... else goto ...

Will be transformed into:

bb0: if (a != 0) goto ... else goto ...

(Assuming a is an integral type and x is a boolean or x is an integral and a is a boolean.)

Similarly for the tests (x == 0), (x != 0), (x == 1) and (x != 1). For these cases, we propagate A into all, possibly more than one, COND_EXPRs that use X.

In addition to eliminating the variable and the statement which assigns a value to the variable, we may be able to later thread the jump without adding insane complexity in the dominator optimizer.

Also note these transformations can cascade. We handle this by having a worklist of COND_EXPR statements to examine. As we make a change to a statement, we put it back on the worklist to examine on the next iteration of the main loop.

A second class of propagation opportunities arises for ADDR_EXPR nodes.

ptr = &x->y->z; res = *ptr;

Will get turned into

res = x->y->z;

Or ptr = (type1*) res = *ptr

Will get turned into (if type1 and type2 are the same size and neither have volatile on them): res = VIEW_CONVERT_EXPR<type1>(type2var)

Or

ptr = &x[0]; ptr2 = ptr + <constant>;

Will get turned into

ptr2 = &x[constant/elementsize];

Or

ptr = &x[0]; offset = index * element_size; offset_p = (pointer) offset; ptr2 = ptr + offset_p

Will get turned into:

ptr2 = &x[index];

Or ssa = (int) decl res = ssa & 1

Provided that decl has known alignment >= 2, will get turned into

res = 0

We also propagate casts into SWITCH_EXPR and COND_EXPR conditions to allow us to remove the cast and {NOT_EXPR,NEG_EXPR} into a subsequent {NOT_EXPR,NEG_EXPR}.

This will (of course) be extended as other needs arise.

|

static |

STMT is a statement of the form SSA_NAME = ADDR_EXPR <whatever>.

Try to forward propagate the ADDR_EXPR into all uses of the SSA_NAME. Often this will allow for removal of an ADDR_EXPR and INDIRECT_REF node or for recovery of array indexing from pointer arithmetic.

PARENT_SINGLE_USE_P tells if, when in a recursive invocation, NAME was the single use in the previous invocation. Pass true when calling this as toplevel.

Returns true, if all uses have been propagated into.

If the use is not in a simple assignment statement, then

there is nothing we can do.

If the use has moved to a different statement adjust

the update machinery for the old statement too. Remove intermediate now unused copy and conversion chains.

References build2, dump_file, dump_flags, get_prop_dest_stmt(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_assign_set_rhs_from_tree(), gsi_for_stmt(), gsi_stmt(), HONOR_NANS, integer_onep(), INTEGRAL_TYPE_P, invert_tree_comparison(), is_gimple_assign(), NULL_TREE, print_gimple_expr(), SSA_NAME_OCCURS_IN_ABNORMAL_PHI, TDF_DETAILS, TREE_CODE, TREE_TYPE, TYPE_MODE, TYPE_PRECISION, unshare_expr(), and update_stmt().

|

static |

NAME is a SSA_NAME representing DEF_RHS which is of the form ADDR_EXPR <whatever>.

Try to forward propagate the ADDR_EXPR into the use USE_STMT. Often this will allow for removal of an ADDR_EXPR and INDIRECT_REF node or for recovery of array indexing from pointer arithmetic.

Return true if the propagation was successful (the propagation can be not totally successful, yet things may have been changed).

Do not perform copy-propagation but recurse through copy chains.

The use statement could be a conversion. Recurse to the uses of the lhs as copyprop does not copy through pointer to integer to pointer conversions and FRE does not catch all cases either. Treat the case of a single-use name and a conversion to def_rhs type separate, though.

If there is a point in a conversion chain where the types match

so we can remove a conversion re-materialize the address here

and stop. Else recurse if the conversion preserves the address value.

If this isn't a conversion chain from this on we only can propagate into compatible pointer contexts.

Propagate through constant pointer adjustments.

As we come here with non-invariant addresses in def_rhs we need

to make sure we can build a valid constant offsetted address

for further propagation. Simply rely on fold building that

and check after the fact. Recurse. If we could propagate into all uses of lhs do not

bother to replace into the current use but just pretend we did. Now strip away any outer COMPONENT_REF/ARRAY_REF nodes from the LHS. ADDR_EXPR will not appear on the LHS.

Now see if the LHS node is a MEM_REF using NAME. If so, propagate the ADDR_EXPR into the use of NAME and fold the result.

If the address is invariant we can always fold it.

Continue propagating into the RHS if this was not the only use.

If the LHS is a plain dereference and the value type is the same as

that of the pointed-to type of the address we can put the

dereferenced address on the LHS preserving the original alias-type. Don't forward anything into clobber stmts if it would result

in the lhs no longer being a MEM_REF. Continue propagating into the RHS if this was not the

only use. We can have a struct assignment dereferencing our name twice.

Note that we didn't propagate into the lhs to not falsely

claim we did when propagating into the rhs. Strip away any outer COMPONENT_REF, ARRAY_REF or ADDR_EXPR nodes from the RHS.

Now see if the RHS node is a MEM_REF using NAME. If so, propagate the ADDR_EXPR into the use of NAME and fold the result.

If the RHS is a plain dereference and the value type is the same as

that of the pointed-to type of the address we can put the

dereferenced address on the RHS preserving the original alias-type. If the use of the ADDR_EXPR is not a POINTER_PLUS_EXPR, there is nothing to do.

The remaining cases are all for turning pointer arithmetic into array indexing. They only apply when we have the address of element zero in an array. If that is not the case then there is nothing to do.

Optimize &x[C1] p+ C2 to &x p+ C3 with C3 = C1 * element_size + C2.

|

static |

Forward propagate the comparison defined in *DEFGSI like cond_1 = x CMP y to uses of the form a_1 = (T')cond_1 a_1 = !cond_1 a_1 = cond_1 != 0 Returns true if stmt is now unused. Advance DEFGSI to the next statement.

Don't propagate ssa names that occur in abnormal phis.

Do not un-cse comparisons. But propagate through copies.

We can propagate the condition into a statement that computes the logical negation of the comparison result.

When we remove stmt now the iterator defgsi goes off it's current sequence, hence advance it now.

Remove defining statements.

|

static |

Propagate from the ssa name definition statements of the assignment from a comparison at *GSI into the conditional if that simplifies it. Returns 1 if the stmt was modified and 2 if the CFG needs cleanup, otherwise returns 0.

Combine the comparison with defining statements.

|

static |

Combine the comparison OP0 CODE OP1 at LOC with the defining statements of its operand. Return a new comparison tree or NULL_TREE if there were no simplifying combines.

For comparisons use the first operand, that is likely to simplify comparisons against constants.

If that wasn't successful, try the second operand.

If that wasn't successful either, try both operands.

References can_propagate_from(), combine_cond_expr_cond(), get_prop_source_stmt(), rhs_to_tree(), and TREE_TYPE.

|

static |

Propagate from the ssa name definition statements of COND_EXPR in the rhs of statement STMT into the conditional if that simplifies it. Returns true zero if the stmt was changed.

We can do tree combining on SSA_NAME and comparison expressions.

References gimple_assign_rhs1().

|

static |

Propagate from the ssa name definition statements of COND_EXPR in GIMPLE_COND statement STMT into the conditional if that simplifies it. Returns zero if no statement was changed, one if there were changes and two if cfg_cleanup needs to run.

This must be kept in sync with forward_propagate_into_cond.

We can do tree combining on SSA_NAME and comparison expressions.

Canonicalize _Bool == 0 and _Bool != 1 to _Bool != 0 by swapping edges.

|

static |

|

static |

Get the next statement we can propagate NAME's value into skipping trivial copies. Returns the statement that is suitable as a propagation destination or NULL_TREE if there is no such one. This only returns destinations in a single-use chain. FINAL_NAME_P if non-NULL is written to the ssa name that represents the use.

If name has multiple uses, bail out.

If this is not a trivial copy, we found it.

Continue searching uses of the copy destination.

References gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_ssa_name_copy_p(), NULL, and single_imm_use().

Referenced by forward_propagate_addr_expr().

|

static |

Get the statement we can propagate from into NAME skipping trivial copies. Returns the statement which defines the propagation source or NULL_TREE if there is no such one. If SINGLE_USE_ONLY is set considers only sources which have a single use chain up to NAME. If SINGLE_USE_P is non-null, it is set to whether the chain to NAME is a single use chain or not. SINGLE_USE_P is not written to if SINGLE_USE_ONLY is set.

If name is defined by a PHI node or is the default def, bail out.

If def_stmt is a simple copy, continue looking.

References NULL.

Referenced by forward_propagate_into_comparison_1().

|

static |

Return true if a conversion of an operand from type FROM to type TO should be applied after performing the operation instead.

That's a good idea if the conversion widens the operand, thus after hoisting the conversion the operation will be narrower.

It's also a good idea if the conversion is to a non-integer mode.

Or if the precision of TO is not the same as the precision of its mode.

|

static |

Determine whether applying the 2 permutations (mask1 then mask2) gives back one of the input.

|

static |

Helper routine for simplify_bitwise_binary_1 function. Return for the SSA name NAME the expression X if it mets condition NAME = !X. Otherwise return NULL_TREE. Detected patterns for NAME = !X are: !X and X == 0 for X with integral type. X ^ 1, X != 1,or ~X for X with integral type with precision of one.

If name has none-intergal type, or isn't a SSA_NAME, then return.

Get for EQ_EXPR or BIT_XOR_EXPR operation the second operand. If CODE isn't an EQ_EXPR, BIT_XOR_EXPR, or BIT_NOT_EXPR, then return.

Check if we have X == 0 and X has an integral type.

Check if we have X != 1 and X is a truth-valued.

Check if we have X ^ 1 and X is truth valued.

| gimple_opt_pass* make_pass_forwprop | ( | ) |

|

static |

Remove a chain of dead statements starting at the definition of NAME. The chain is linked via the first operand of the defining statements. If NAME was replaced in its only use then this function can be used to clean up dead stmts. The function handles already released SSA names gracefully. Returns true if cleanup-cfg has to run.

Referenced by associate_pointerplus(), and combine_conversions().

Referenced by forward_propagate_into_comparison_1().

|

static |

Return the rhs of a gimple_assign STMT in a form of a single tree, converted to type TYPE.

This should disappear, but is needed so we can combine expressions and use the fold() interfaces. Long term, we need to develop folding and combine routines that deal with gimple exclusively .

References fold_binary_loc(), fold_defer_overflow_warnings(), fold_undefer_overflow_warnings(), gcc_assert, gimple_location(), NULL, NULL_TREE, tcc_comparison, TREE_CODE, TREE_CODE_CLASS, and TREE_TYPE.

|

static |

Combine an element access with a shuffle. Returns true if there were any changes made, else it returns false.

|

static |

Simplify bitwise binary operations. Return true if a transformation applied, otherwise return false.

Try to fold (type) X op CST -> (type) (X op ((type-x) CST)) when profitable.

For bitwise binary operations apply operand conversions to the binary operation result instead of to the operands. This allows to combine successive conversions and bitwise binary operations.

Simplify (A & B) OP0 (C & B) to (A OP0 C) & B.

If A OP0 C (this usually means C is the same as A) is 0

then fold it down correctly. If A OP0 C (this usually means C is the same as A) is a ssa_name

then fold it down correctly. Make sure to re-process the new stmt as it's walking upwards.

(a | CST1) & CST2 -> (a & CST2) | (CST1 & CST2).

Make sure to re-process the new stmt as it's walking upwards.

Combine successive equal operations with constants.

Canonicalize X ^ ~0 to ~X.

Try simple folding for X op !X, and X op X.

( X | Y) & X -> X

( X & Y) | X -> X

(~X | Y) & X -> X & Y

(~X & Y) | X -> X | Y

(Y | ~X) & X -> X & Y

(Y & ~X) | X -> X | Y

X & ( X | Y) -> X

X | ( X & Y) -> X

(~X | Y) & X -> X & Y

(~X & Y) | X -> X | Y

(Y | ~X) & X -> X & Y

(Y & ~X) | X -> X | Y

If arg1 and arg2 are booleans (or any single bit type)

then try to simplify:

(~X & Y) -> X < Y

(X & ~Y) -> Y < X

(~X | Y) -> X <= Y

(X | ~Y) -> Y <= X

But only do this if our result feeds into a comparison as

this transformation is not always a win, particularly on

targets with and-not instructions.

|

static |

Optimize ARG1 CODE ARG2 to a constant for bitwise binary operations CODE, if one operand has the logically inverted value of the other.

If CODE isn't a bitwise binary operation, return NULL_TREE.

First check if operands ARG1 and ARG2 are equal. If so return NULL_TREE as this optimization is handled fold_stmt.

See if we have in arguments logical-not patterns.

X & !X -> 0.

X | !X -> 1 and X ^ !X -> 1, if X is truth-valued.

??? Otherwise result is (X != 0 ? X : 1). not handled.

References gimple_assign_rhs1(), gimple_assign_rhs_code(), gimple_assign_set_rhs1(), gimple_assign_set_rhs2(), gimple_assign_set_rhs_code(), gsi_stmt(), INTEGRAL_TYPE_P, is_gimple_assign(), SSA_NAME_DEF_STMT, TREE_CODE, TREE_TYPE, TYPE_PRECISION, TYPE_UNSIGNED, and update_stmt().

|

static |

GSI points to a statement of the form

result = OP0 CODE OP1

Where OP0 and OP1 are single bit SSA_NAMEs and CODE is either BIT_AND_EXPR or BIT_IOR_EXPR.

If OP0 is fed by a bitwise negation of another single bit SSA_NAME, then we can simplify the two statements into a single LT_EXPR or LE_EXPR when code is BIT_AND_EXPR and BIT_IOR_EXPR respectively.

If a simplification is made, return TRUE, else return FALSE.

References gimple_assign_set_rhs1(), gimple_assign_set_rhs2(), gimple_assign_set_rhs_code(), gimple_build_assign_with_ops(), gimple_location(), gimple_set_location(), gsi_insert_before(), GSI_NEW_STMT, make_ssa_name(), NULL, TREE_TYPE, and update_stmt().

|

static |

*GSI_P is a GIMPLE_CALL to a builtin function. Optimize memcpy (p, "abcd", 4); memset (p + 4, ' ', 3); into memcpy (p, "abcd ", 7); call if the latter can be stored by pieces during expansion.

If first stmt is a call, it needs to be memcpy

or mempcpy, with string literal as second argument and

constant length.

Otherwise look for length 1 memcpy optimized into

assignment. If the difference between the second and first destination pointer

is not constant, or is bigger than memcpy length, bail out. Use maximum of difference plus memset length and memcpy length

as the new memcpy length, if it is too big, bail out. If mempcpy value is used elsewhere, bail out, as mempcpy

with bigger length will return different result. If anything reads memory in between memcpy and memset

call, the modified memcpy call might change it. Construct the new source string literal.

Neither builtin_strncpy_read_str nor builtin_memcpy_read_str

handle embedded '\0's. If the new memcpy wouldn't be emitted by storing the literal

by pieces, this optimization might enlarge .rodata too much,

as commonly used string literals couldn't be shared any

longer. If STMT1 is a mem{,p}cpy call, adjust it and remove

memset call. Otherwise, if STMT1 is length 1 memcpy optimized into

assignment, remove STMT1 and change memset call into

memcpy call.

|

static |

GSI_P points to a statement which performs a narrowing integral conversion.

Look for cases like:

t = x & c; y = (T) t;

Turn them into:

t = x & c; y = (T) x;

If T is narrower than X's type and C merely masks off bits outside of (T) and nothing else.

Normally we'd let DCE remove the dead statement. But no DCE runs after the last forwprop/combine pass, so we remove the obviously dead code ourselves.

Return TRUE if a change was made, FALSE otherwise.

See if the input for the conversion was set via a BIT_AND_EXPR and the only use of the BIT_AND_EXPR result is the conversion.

Now verify suitability of the BIT_AND_EXPR's operands.

The first must be an SSA_NAME that we can propagate and the

second must be an integer constant that masks out all the

bits outside the final result's type, but nothing else. This is an optimizable case. Replace the source operand

in the conversion with the first source operand of the

BIT_AND_EXPR. There is no DCE after the last forwprop pass. It's

easy to clean up the first order effects here.

References gimple_assign_rhs1(), gimple_assign_set_rhs_from_tree(), gsi_stmt(), SSA_NAME_OCCURS_IN_ABNORMAL_PHI, TREE_CODE, and update_stmt().

|

static |

STMT is a SWITCH_EXPR for which we attempt to find equivalent forms of the condition which we may be able to optimize better.

The optimization that we really care about is removing unnecessary casts. That will let us do much better in propagating the inferred constant at the switch target.

If we have an extension that preserves value, then we

can copy the source value into the switch.

|

static |

Helper function for simplify_gimple_switch. Remove case labels that have values outside the range of the new type.

Collect the existing case labels in a VEC, and preprocess it as if we are gimplifying a GENERIC SWITCH_EXPR.

If any labels were removed, replace the existing case labels in the GIMPLE_SWITCH statement with the correct ones. Note that the type updates were done in-place on the case labels, so we only have to replace the case labels in the GIMPLE_SWITCH if the number of labels changed.

Corner case: *all* case labels have been removed as being

out-of-range for INDEX_TYPE. Push one label and let the

CFG cleanups deal with this further. Cleanup any edges that are now dead.

References gimple_assign_rhs1(), gimple_assign_rhs_code(), gimple_switch_set_index(), INTEGRAL_TYPE_P, is_gimple_assign(), SSA_NAME_DEF_STMT, TREE_TYPE, TYPE_PRECISION, TYPE_UNSIGNED, and update_stmt().

|

static |

If we have lhs = ~x (STMT), look and see if earlier we had x = ~y. If so, we can change STMT into lhs = y which can later be copy propagated. Similarly for negation.

This could trivially be formulated as a forward propagation to immediate uses. However, we already had an implementation from DOM which used backward propagation via the use-def links.

It turns out that backward propagation is actually faster as there's less work to do for each NOT/NEG expression we find. Backwards propagation needs to look at the statement in a single backlink. Forward propagation needs to look at potentially more than one forward link.

Returns true when the statement was changed.

See if the RHS_DEF_STMT has the same form as our statement.

Verify that RHS_DEF_OPERAND is a suitable SSA_NAME.

|

static |

Combine a shuffle with its arguments. Returns 1 if there were any changes made, 2 if cfg-cleanup needs to run. Else it returns 0.

Two consecutive shuffles.

Shuffle of a constructor.

Already used twice in this statement.

|

static |

Recognize rotation patterns. Return true if a transformation applied, otherwise return false.

We are looking for X with unsigned type T with bitsize B, OP being +, | or ^, some type T2 wider than T and (X << CNT1) OP (X >> CNT2) iff CNT1 + CNT2 == B ((T) ((T2) X << CNT1)) OP ((T) ((T2) X >> CNT2)) iff CNT1 + CNT2 == B (X << Y) OP (X >> (B - Y)) (X << (int) Y) OP (X >> (int) (B - Y)) ((T) ((T2) X << Y)) OP ((T) ((T2) X >> (B - Y))) ((T) ((T2) X << (int) Y)) OP ((T) ((T2) X >> (int) (B - Y))) (X << Y) | (X >> ((-Y) & (B - 1))) (X << (int) Y) | (X >> (int) ((-Y) & (B - 1))) ((T) ((T2) X << Y)) | ((T) ((T2) X >> ((-Y) & (B - 1)))) ((T) ((T2) X << (int) Y)) | ((T) ((T2) X >> (int) ((-Y) & (B - 1))))

and transform these into: X r<< CNT1 X r<< Y

Note, in the patterns with T2 type, the type of OP operands might be even a signed type, but should have precision B.

Only create rotates in complete modes. Other cases are not expanded properly.

Look through narrowing conversions.

One operand has to be LSHIFT_EXPR and one RSHIFT_EXPR.

If we've looked through narrowing conversions before, look through widening conversions from unsigned type with the same precision as rtype here.

Both shifts have to use the same first operand.

CNT1 + CNT2 == B case above.

Look through conversion of the shift count argument.

The C/C++ FE cast any shift count argument to integer_type_node.

The only problem might be if the shift count type maximum value

is equal or smaller than number of bits in rtype. Check for one shift count being Y and the other B - Y,

with optional casts. The above sequence isn't safe for Y being 0,

because then one of the shifts triggers undefined behavior.

This alternative is safe even for rotation count of 0.

One shift count is Y and the other (-Y) & (B - 1).

References CONVERT_EXPR_CODE_P, defcodefor_name(), floor_log2(), GET_MODE_PRECISION, INTEGRAL_TYPE_P, NULL, TREE_TYPE, TYPE_MODE, and TYPE_PRECISION.

|

static |

Recognize a VEC_PERM_EXPR. Returns true if there were any changes.

|

static |

Main entry point for the forward propagation and statement combine optimizer.

Apply forward propagation to all stmts in the basic-block.

Note we update GSI within the loop as necessary.

If this statement sets an SSA_NAME to an address,

try to propagate the address into the uses of the SSA_NAME. Handle pointer conversions on invariant addresses

as well, as this is valid gimple. ??? Better adjust the interface to that function

instead of building new trees here. Make sure to fold &a[0] + off_1 here.

Combine stmts with the stmts defining their operands.

Note we update GSI within the loop as necessary. Mark stmt as potentially needing revisiting.

In this case the entire COND_EXPR is in rhs1.

If we have a narrowing conversion to an integral

type that is fed by a BIT_AND_EXPR, we might be

able to remove the BIT_AND_EXPR if it merely

masks off bits outside the final type (and nothing

else. If the stmt changed then re-visit it and the statements

inserted before it. Stmt no longer needs to be revisited.

|

static |

We've just substituted an ADDR_EXPR into stmt. Update all the relevant data structures to match.

We may have turned a trapping insn into a non-trapping insn.

|

static |

Checks if expression has type of one-bit precision, or is a known truth-valued expression.

Don't check here for BOOLEAN_TYPE as the precision isn't necessarily one and so ~X is not equal to !X.

Variable Documentation

|

static |

Set to true if we delete dead edges during the optimization.