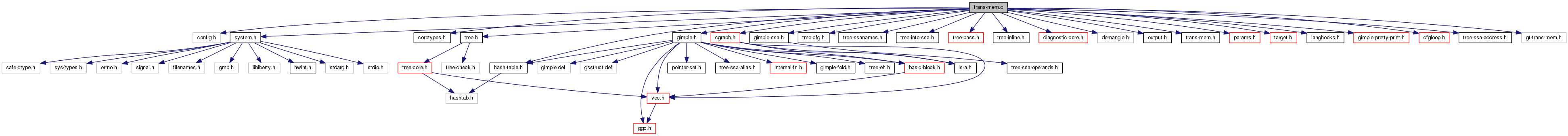

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "hash-table.h"
#include "tree.h"
#include "gimple.h"
#include "gimple-ssa.h"
#include "cgraph.h"
#include "tree-cfg.h"
#include "tree-ssanames.h"
#include "tree-into-ssa.h"
#include "tree-pass.h"
#include "tree-inline.h"
#include "diagnostic-core.h"
#include "demangle.h"
#include "output.h"
#include "trans-mem.h"
#include "params.h"
#include "target.h"
#include "langhooks.h"
#include "gimple-pretty-print.h"
#include "cfgloop.h"
#include "tree-ssa-address.h"
#include "gt-trans-mem.h"

Data Structures | |

| struct | diagnose_tm |

| struct | tm_log_entry |

| struct | log_entry_hasher |

| struct | tm_new_mem_map |

| struct | tm_mem_map_hasher |

| struct | tm_region |

| struct | bb2reg_stuff |

| struct | tm_memop |

| struct | tm_memop_hasher |

| struct | tm_memopt_bitmaps |

| struct | tm_ipa_cg_data |

| struct | create_version_alias_info |

Macros | |

| #define | PROB_VERY_UNLIKELY (REG_BR_PROB_BASE / 2000 - 1) |

| #define | PROB_VERY_LIKELY (PROB_ALWAYS - PROB_VERY_UNLIKELY) |

| #define | PROB_UNLIKELY (REG_BR_PROB_BASE / 5 - 1) |

| #define | PROB_LIKELY (PROB_ALWAYS - PROB_VERY_LIKELY) |

| #define | PROB_ALWAYS (REG_BR_PROB_BASE) |

| #define | A_RUNINSTRUMENTEDCODE 0x0001 |

| #define | A_RUNUNINSTRUMENTEDCODE 0x0002 |

| #define | A_SAVELIVEVARIABLES 0x0004 |

| #define | A_RESTORELIVEVARIABLES 0x0008 |

| #define | A_ABORTTRANSACTION 0x0010 |

| #define | AR_USERABORT 0x0001 |

| #define | AR_USERRETRY 0x0002 |

| #define | AR_TMCONFLICT 0x0004 |

| #define | AR_EXCEPTIONBLOCKABORT 0x0008 |

| #define | AR_OUTERABORT 0x0010 |

| #define | MODE_SERIALIRREVOCABLE 0x0000 |

| #define | DIAG_TM_OUTER 1 |

| #define | DIAG_TM_SAFE 2 |

| #define | DIAG_TM_RELAXED 4 |

| #define | STORE_AVAIL_IN(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_avail_in |

| #define | STORE_AVAIL_OUT(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_avail_out |

| #define | STORE_ANTIC_IN(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_antic_in |

| #define | STORE_ANTIC_OUT(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_antic_out |

| #define | READ_AVAIL_IN(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->read_avail_in |

| #define | READ_AVAIL_OUT(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->read_avail_out |

| #define | READ_LOCAL(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->read_local |

| #define | STORE_LOCAL(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_local |

| #define | AVAIL_IN_WORKLIST_P(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->avail_in_worklist_p |

| #define | BB_VISITED_P(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->visited_p |

| #define | TRANSFORM_RAR 1 |

| #define | TRANSFORM_RAW 2 |

| #define | TRANSFORM_RFW 3 |

| #define | TRANSFORM_WAR 1 |

| #define | TRANSFORM_WAW 2 |

Typedefs | |

| typedef struct tm_log_entry * | tm_log_entry_t |

| typedef struct tm_new_mem_map | tm_new_mem_map_t |

| typedef struct tm_region * | tm_region_p |

| typedef struct tm_memop * | tm_memop_t |

| typedef vec< cgraph_node_ptr > | cgraph_node_queue |

Enumerations | |

| enum | thread_memory_type { mem_non_local = 0, mem_thread_local, mem_transaction_local, mem_max } |

Variables | |

| static htab_t | tm_wrap_map |

| static hash_table < log_entry_hasher > | tm_log |

| static vec< tree > | tm_log_save_addresses |

| static hash_table < tm_mem_map_hasher > | tm_new_mem_hash |

| bool | pending_edge_inserts_p |

| static struct tm_region * | all_tm_regions |

| static bitmap_obstack | tm_obstack |

| static bitmap_obstack | tm_memopt_obstack |

| static unsigned int | tm_memopt_value_id |

| static hash_table < tm_memop_hasher > | tm_memopt_value_numbers |

Macro Definition Documentation

| #define A_ABORTTRANSACTION 0x0010 |

| #define A_RESTORELIVEVARIABLES 0x0008 |

| #define A_RUNINSTRUMENTEDCODE 0x0001 |

| #define A_RUNUNINSTRUMENTEDCODE 0x0002 |

Referenced by expand_transaction().

| #define A_SAVELIVEVARIABLES 0x0004 |

| #define AR_EXCEPTIONBLOCKABORT 0x0008 |

| #define AR_OUTERABORT 0x0010 |

| #define AR_TMCONFLICT 0x0004 |

| #define AR_USERABORT 0x0001 |

| #define AR_USERRETRY 0x0002 |

| #define AVAIL_IN_WORKLIST_P(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->avail_in_worklist_p |

Referenced by dump_tm_memopt_sets(), and tm_memopt_accumulate_memops().

| #define BB_VISITED_P(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->visited_p |

| #define DIAG_TM_OUTER 1 |

Diagnostics for tm_safe functions/regions. Called by the front end once we've lowered the function to high-gimple. Subroutine of diagnose_tm_safe_errors, called through walk_gimple_seq. Process exactly one statement. WI->INFO is set to non-null when in the context of a tm_safe function, and null for a __transaction block.

Referenced by diagnose_tm_1_op().

| #define DIAG_TM_RELAXED 4 |

| #define DIAG_TM_SAFE 2 |

Referenced by diagnose_tm_1_op().

| #define MODE_SERIALIRREVOCABLE 0x0000 |

Referenced by ipa_tm_diagnose_tm_safe().

| #define PROB_ALWAYS (REG_BR_PROB_BASE) |

| #define PROB_LIKELY (PROB_ALWAYS - PROB_VERY_LIKELY) |

| #define PROB_UNLIKELY (REG_BR_PROB_BASE / 5 - 1) |

| #define PROB_VERY_LIKELY (PROB_ALWAYS - PROB_VERY_UNLIKELY) |

| #define PROB_VERY_UNLIKELY (REG_BR_PROB_BASE / 2000 - 1) |

Passes for transactional memory support. Copyright (C) 2008-2013 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see http://www.gnu.org/licenses/.

| #define READ_AVAIL_IN(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->read_avail_in |

Referenced by tm_memop_hasher::equal(), and tm_memopt_accumulate_memops().

| #define READ_AVAIL_OUT(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->read_avail_out |

Referenced by tm_memop_hasher::equal(), and tm_memopt_accumulate_memops().

| #define READ_LOCAL(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->read_local |

| #define STORE_ANTIC_IN(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_antic_in |

| #define STORE_ANTIC_OUT(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_antic_out |

Referenced by dump_tm_memopt_sets().

| #define STORE_AVAIL_IN(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_avail_in |

Referenced by tm_memop_hasher::equal(), and tm_memopt_accumulate_memops().

| #define STORE_AVAIL_OUT(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_avail_out |

Referenced by tm_memop_hasher::equal(), and tm_memopt_accumulate_memops().

| #define STORE_LOCAL(BB) ((struct tm_memopt_bitmaps *) ((BB)->aux))->store_local |

Referenced by dump_tm_memopt_sets().

| #define TRANSFORM_RAR 1 |

Offsets of load variants from TM_LOAD. For example, BUILT_IN_TM_LOAD_RAR* is an offset of 1 from BUILT_IN_TM_LOAD*. See gtm-builtins.def.

| #define TRANSFORM_RAW 2 |

| #define TRANSFORM_RFW 3 |

| #define TRANSFORM_WAR 1 |

Offsets of store variants from TM_STORE.

| #define TRANSFORM_WAW 2 |

Typedef Documentation

| typedef vec<cgraph_node_ptr> cgraph_node_queue |

| typedef struct tm_log_entry * tm_log_entry_t |

Instead of instrumenting thread private memory, we save the addresses in a log which we later use to save/restore the addresses upon transaction start/restart.

The log is keyed by address, where each element contains individual statements among different code paths that perform the store.

This log is later used to generate either plain save/restore of the addresses upon transaction start/restart, or calls to the ITM_L* logging functions.

So for something like:

    struct large { int x[1000]; };
    struct large lala = { 0 };
    __transaction {
      lala.x[i] = 123;
      ...
    }

We can either save/restore:

    lala = { 0 };
    trxn = _ITM_startTransaction ();
    if (trxn & a_saveLiveVariables)
      tmp_lala1 = lala.x[i];
    else if (trxn & a_restoreLiveVariables)
      lala.x[i] = tmp_lala1;

or use the logging functions:

    lala = { 0 };
    trxn = _ITM_startTransaction ();
    _ITM_LU4 (&lala.x[i]);

Obviously, if we use _ITM_L* to log, we prefer to call _ITM_L* as far up the dominator tree as possible, to shadow all of the writes to a given location (thus reducing the total number of logging calls), but not so high as to be called on a path that does not perform a write.

One individual log entry. We may have multiple statements for the same location if neither dominates the other (on different execution paths).

| typedef struct tm_memop * tm_memop_t |

A unique TM memory operation.

| typedef struct tm_new_mem_map tm_new_mem_map_t |

| typedef struct tm_region* tm_region_p |

Enumeration Type Documentation

| enum thread_memory_type |

Function Documentation

| tree build_tm_abort_call | ( | ) |

Build a GENERIC tree for a user abort. This is called by front ends while transforming the __tm_abort statement.

|

static |

Construct a memory load in a transactional context. Return the gimple statement performing the load, or NULL if there is no TM_LOAD builtin of the appropriate size to do the load.

LOC is the location to use for the new statement(s).

|

static |

Similarly for storing TYPE in a transactional context.

Handle the easy initialization to zero. ...otherwise punt to the caller and probably use BUILT_IN_TM_MEMMOVE, because we can't wrap a VIEW_CONVERT_EXPR around a CONSTRUCTOR (below) and produce valid gimple.

References gimple_build_assign, gsi_insert_before(), and GSI_SAME_STMT.

|

static |

| void compute_transaction_bits (void) |

Set the IN_TRANSACTION for all gimple statements that appear in a transaction.

?? Perhaps we need to abstract gate_tm_init further, because we certainly don't need it to calculate CDI_DOMINATOR info.

|

static |

Save stmt for use in leaf analysis.

A function explicitly marked transaction_callable, as opposed to transaction_safe, is being defined to be unsafe as part of its ABI, regardless of its contents.

??? At present we've been considering replacements merely transaction_callable, and therefore might enter irrevocable. The tm_wrap attribute has not yet made it into the new language spec.

??? Diagnostics for unmarked direct calls moved into the IPA pass. Section 3.2 of the spec details how functions not marked should be considered "implicitly safe" based on having examined the function body.

An unmarked indirect call. Consider it unsafe even though optimization may yet figure out how to inline.

??? We ought to come up with a way to add attributes to asm statements, and then add "transaction_safe" to it. Either that or get the language spec to resurrect __tm_waiver.

Tree callback function for diagnose_tm pass.

References DIAG_TM_OUTER, DIAG_TM_SAFE, error_at(), gimple_call_fn(), gimple_location(), is_tm_may_cancel_outer(), diagnose_tm::summary_flags, TREE_CODE, and TREE_OPERAND.

|

static |

Prettily dump one of the memopt sets. BITS is the bitmap to dump.

Yeah, yeah, yeah. Whatever. This is just for debugging.

|

static |

Prettily dump all of the memopt sets in BLOCKS.

References AVAIL_IN_WORKLIST_P, bitmap_bit_p, bitmap_ior_into(), tm_region::exit_blocks, basic_block_def::index, STORE_ANTIC_OUT, and STORE_LOCAL.

|

static |

Inform about a load/store optimization.

|

static |

Mark the GIMPLE_ASSIGN statement as appropriate for being inside a transaction region.

References create_artificial_label(), gimple_build_label(), gimple_transaction_set_label(), GSI_CONTINUE_LINKING, gsi_insert_after(), and UNKNOWN_LOCATION.

Referenced by examine_call_tm().

|

static |

Mark a GIMPLE_CALL as appropriate for being inside a transaction.

Check if this call is a transaction abort.

Note that something may happen.

References examine_assign_tm(), gimple_assign_single_p(), gsi_stmt(), and walk_stmt_info::info.

|

static |

Main entry point for flattening GIMPLE_TRANSACTION constructs. After this, GIMPLE_TRANSACTION nodes still exist, but the nested body has been moved out, and all the data required for constructing a proper CFG has been recorded.

Transactional clones aren't created until a later pass.

References all_tm_regions, BITMAP_ALLOC, tm_region::entry_block, tm_region::exit_blocks, FALLTHRU_EDGE, tm_region::inner, tm_region::irr_blocks, tm_region::next, NULL, bitmap_obstack::obstack, tm_region::original_transaction_was_outer, tm_region::outer, tm_region::tm_state, and tm_region::transaction_stmt.

|

static |

Entry point to the final expansion of transactional nodes.

We've got to release the dominance info now, to indicate that it must be rebuilt completely. Otherwise we'll crash trying to update the SSA web in the TODO section following this pass.

Referenced by make_pass_tm_mark().

|

static |

Entry point to the MARK phase of TM expansion. Here we replace transactional memory statements with calls to builtins, and function calls with their transactional clones (if available). But we don't yet lower GIMPLE_TRANSACTION or add the transaction restart back-edges.

If we're sure to go irrevocable, there won't be anything to expand, since the run-time will go irrevocable right away.

References split_bb_make_tm_edge().

|

static |

Replace TM load/stores with hints for the runtime. We handle things like read-after-write, write-after-read, read-after-read, read-for-write, etc.

All the TM stores/loads in the current region.

Save all BBs for the current region.

Collect all the memory operations.

Solve data flow equations and transform each block accordingly.

|

static |

Expand an assignment statement into transactional builtins.

Add thread private addresses to log if applicable.

??? Figure out if there's any possible overlap between the LHS and the RHS and if not, use MEMCPY.

Now that we have the load/store in its instrumented form, add thread private addresses to the log if applicable.

References cgraph_create_node(), expand_call_tm(), gimple_call_set_fndecl(), cgraph_node::local, cgraph_local_info::tm_may_enter_irr, and update_stmt().

Referenced by expand_call_tm().

|

static |

Split block BB as necessary for every builtin function we added, and wire up the abnormal back edges implied by the transaction restart.

References expand_regions_1(), tm_region::next, and NULL.

|

static |

Expand all statements in BB as appropriate for being inside a transaction.

Only memory reads/writes need to be instrumented.

|

static |

Expand a call statement as appropriate for a transaction. That is, either verify that the call does not affect the transaction, or redirect the call to a clone that handles transactions, or change the transaction state to IRREVOCABLE. Return true if the call is one of the builtins that end a transaction.

Assume all non-const/pure calls write to memory, except transaction ending builtins.

For indirect calls, we already generated a call into the runtime.

We are guaranteed never to go irrevocable on a safe or pure call, and the pure call was handled above.

All calls should have cgraph here. We can have a nodeless call here if some pass after IPA-tm added uninstrumented calls. For example, loop distribution can transform certain loop constructs into __builtin_mem* calls. In this case, see if we have a suitable TM replacement and fill in the gaps.

Instrument the store if needed. If the assignment happens inside the function call (return slot optimization), there is no instrumentation to be done, since the callee should have done the right thing.

Remember if the call was going to throw.

We cannot throw in the middle of a BB. If the call was going to throw, place the instrumentation on the fallthru edge, so the call remains the last statement in the block.

References expand_assign_tm().

Referenced by expand_assign_tm().

|

static |

The representation of a transaction changes several times during the lowering process. In the beginning, in the front-end we have the GENERIC tree TRANSACTION_EXPR. For example,

    __transaction {
      local++;
      if (++global == 10)
        __tm_abort;
    }

During initial gimplification (gimplify.c) the TRANSACTION_EXPR node is trivially replaced with a GIMPLE_TRANSACTION node.

During pass_lower_tm, we examine the body of transactions looking for aborts. Transactions that do not contain an abort may be merged into an outer transaction. We also add a TRY-FINALLY node to arrange for the transaction to be committed on any exit.

[??? Think about how this arrangement affects throw-with-commit and throw-with-abort operations. In this case we want the TRY to handle gotos, but not to catch any exceptions because the transaction will already be closed.]

    GIMPLE_TRANSACTION [label=NULL] {
      try {
        local = local + 1;
        t0 = global;
        t1 = t0 + 1;
        global = t1;
        if (t1 == 10)
          __builtin___tm_abort ();
      } finally {
        __builtin___tm_commit ();
      }
    }

During pass_lower_eh, we create EH regions for the transactions, intermixed with the regular EH stuff. This gives us a nice persistent mapping (all the way through rtl) from transactional memory operation back to the transaction, which allows us to get the abnormal edges correct to model transaction aborts and restarts:

    GIMPLE_TRANSACTION [label=over]
    local = local + 1;
    t0 = global;
    t1 = t0 + 1;
    global = t1;
    if (t1 == 10)
      __builtin___tm_abort ();
    __builtin___tm_commit ();
    over:

This is the end of all_lowering_passes, and so is what is present during the IPA passes, and through all of the optimization passes.

During pass_ipa_tm, we examine all GIMPLE_TRANSACTION blocks in all functions and mark functions for cloning.

At the end of gimple optimization, before exiting SSA form, pass_tm_edges replaces statements that perform transactional memory operations with the appropriate TM builtins, and swaps out function calls with their transactional clones. At this point we introduce the abnormal transaction restart edges and complete lowering of the GIMPLE_TRANSACTION node.

    x = __builtin___tm_start (MAY_ABORT);
    eh_label:
    if (x & abort_transaction)
      goto over;
    local = local + 1;
    t0 = __builtin___tm_load (global);
    t1 = t0 + 1;
    __builtin___tm_store (&global, t1);
    if (t1 == 10)
      __builtin___tm_abort ();
    __builtin___tm_commit ();
    over:

Traverse the regions enclosed by and including REGION. Execute CALLBACK for each region, passing DATA. CALLBACK returns NULL to continue the traversal, otherwise a non-null value which this function will return as well. TRAVERSE_CLONES is true if we should traverse transactional clones.

|

static |

Helper function for expand_regions. Expand REGION and recurse to the inner region. Call CALLBACK on each region. CALLBACK returns NULL to continue the traversal, otherwise a non-null value which this function will return as well. TRAVERSE_CLONES is true if we should traverse transactional clones.

Referenced by expand_block_edges().

|

static |

Replace the GIMPLE_TRANSACTION in this region with the corresponding call to BUILT_IN_TM_START.

??? There are plenty of bits here we're not computing.

If the transaction does not have an abort in lexical scope and is not marked as an outer transaction, then it will never abort.

References A_RUNUNINSTRUMENTEDCODE, add_bb_to_loop(), apply_probability(), build_int_cst(), edge_def::count, basic_block_def::count, create_empty_bb(), create_tmp_reg(), current_loops, EDGE_FREQUENCY, tm_region::entry_block, edge_def::flags, basic_block_def::frequency, gimple_build_assign_with_ops(), gimple_build_cond(), GSI_CONTINUE_LINKING, gsi_insert_after(), gsi_last_bb(), basic_block_def::loop_father, make_edge(), NULL, edge_def::probability, redirect_edge_pred(), REG_BR_PROB_BASE, and tm_region::restart_block.

|

static |

Return a TM-aware replacement function for DECL.

??? We may well want TM versions of most of the common <string.h> functions. For now, we already have these two defined.

Adjust expand_call_tm() attributes as necessary for the cases handled here.

|

static |

Common gating function for several of the TM passes.

Referenced by diagnose_tm_blocks().

|

static |

The "gate" function for all transactional memory expansion and optimization passes. We collect region information for each top-level transaction, and if we don't find any, we skip all of the TM passes. Each region will have all of the exit blocks recorded, and the originating statement.

If the function is a TM_CLONE, then the entire function is the region.

For a clone, the entire function is the region. But even if we don't need to record any exit blocks, we may need to record irrevocable blocks.

If we didn't find any regions, clean up and skip the whole tree of tm-related optimizations.

|

static |

References all_tm_regions, tm_ipa_cg_data::all_tm_regions, BITMAP_ALLOC, tm_region::entry_block, tm_region::exit_blocks, FOR_EACH_VEC_ELT, free_dominance_info(), get_tm_region_blocks(), ipa_tm_scan_calls_block(), ipa_uninstrument_transaction(), tm_region::next, rebuild_cgraph_edges(), and tm_ipa_cg_data::transaction_blocks_normal.

|

static |

Generate the temporary to be used for the return value of BUILT_IN_TM_START.

|

static |

Return the attributes we want to examine for X, or NULL if it's not something we examine. We look at function types, but allow pointers to function types and function decls and peek through.

FALLTHRU

FALLTHRU

References NULL, TREE_CODE, TREE_TYPE, TYPE_ATTRIBUTES, and TYPE_P.

|

static |

|

staticread |

Return the ipa data associated with NODE, allocating zeroed memory if necessary. TRAVERSE_ALIASES is true if we must traverse aliases and set *NODE accordingly.

Referenced by ipa_tm_transform_calls_1().

|

static |

Return the list of basic-blocks in REGION.

STOP_AT_IRREVOCABLE_P is true if caller is uninterested in blocks following a TM_IRREVOCABLE call.

INCLUDE_UNINSTRUMENTED_P is TRUE if we should include the uninstrumented code path blocks in the list of basic blocks returned, false otherwise.

References build_int_cst(), builtin_decl_explicit(), edge_def::flags, FOR_EACH_EDGE, gimple_bb(), gimple_build_call(), gimple_transaction_subcode(), GTMA_DOES_GO_IRREVOCABLE, GTMA_HAS_NO_INSTRUMENTATION, GTMA_HAVE_STORE, GTMA_MAY_ENTER_IRREVOCABLE, NULL, tm_region::original_transaction_was_outer, PR_DOESGOIRREVOCABLE, PR_HASNOABORT, PR_HASNOIRREVOCABLE, PR_INSTRUMENTEDCODE, PR_READONLY, PR_UNINSTRUMENTEDCODE, basic_block_def::succs, tm_region::tm_state, tm_region::transaction_stmt, and TREE_TYPE.

Referenced by gate_tm_memopt().

|

static |

Gimplify the address of a TARGET_MEM_REF. Return the SSA_NAME result, insert the new statements before GSI.

References dump_file, and FOR_EACH_HASH_TABLE_ELEMENT.

|

static |

Create a copy of the function (possibly declaration only) of OLD_NODE, appropriate for the transactional clone.

DECL_ASSEMBLER_NAME needs to be set before we call cgraph_copy_node_for_versioning below, because cgraph_node will fill the assembler_name_hash.

Perform the same remapping to the comdat group.

Remap extern inline to static inline.

??? Is it worth trying to use make_decl_one_only?

Do the same thing, but for any aliases of the original node.

References BITMAP_ALLOC, bitmap_bit_p, BITMAP_FREE, bitmap_set_bit, edge_def::dest, tm_region::exit_blocks, FOR_EACH_EDGE, basic_block_def::index, ipa_tm_transform_calls_1(), basic_block_def::succs, and vNULL.

|

static |

A subroutine of ipa_tm_create_version, called via cgraph_for_node_and_aliases. Create new tm clones for each of the existing aliases.

Based loosely on C++'s make_alias_for().

Perform the same remapping to the comdat group.

?? Do not traverse aliases here.

|

static |

|

static |

Diagnose calls from transaction_safe functions to unmarked functions that are determined to not be safe.

References build_int_cst(), builtin_decl_explicit(), cgraph_create_edge(), cgraph_get_create_node(), g, gimple_build_call(), gsi_after_labels(), gsi_insert_before(), GSI_SAME_STMT, MODE_SERIALIRREVOCABLE, NULL_TREE, split_block_after_labels(), and transaction_subcode_ior().

|

static |

Diagnose calls from atomic transactions to unmarked functions that are determined to not be safe.

Atomic transactions can be nested inside relaxed.

Indirect function calls have been diagnosed already.

Stop at the end of the transaction.

Marked functions have been diagnosed already.

|

static |

Main entry point for the transactional memory IPA pass.

List of functions that will go irrevocable.

For all local functions marked tm_callable, queue them.

For all local reachable functions...

...marked tm_pure, record that fact for the runtime by indicating that the pure function is its own tm_callable. No need to do this if the function's address can't be taken.

Scan for calls that are in each transaction, and generate the uninstrumented code path.

Put it in the worklist so we can scan the function later (ipa_tm_scan_irr_function) and mark the irrevocable blocks.

For every local function on the callee list, scan as if we will be creating a transactional clone, queueing all new functions we find along the way.

Put it in the worklist so we can scan the function later (ipa_tm_scan_irr_function) and mark the irrevocable blocks.

Some callees cannot be arbitrarily cloned. These will always be irrevocable. Mark these now, so that we need not scan them.

If this is an alias, make sure its base is queued as well. We need not scan the callees now, as the base will do.

Add all nodes called by this function into tm_callees as well.

Iterate scans until there is no more work to be done. Prefer not to use vec::pop because the worklist tends to follow a breadth-first search of the callgraph, which should allow convergence with a minimum number of scans. But we also don't want the worklist array to grow without bound, so we shift the array up periodically.

For every function on the callee list, collect the tm_may_enter_irr bit on the node.

Propagate the tm_may_enter_irr bit to callers until stable.

Propagate back to normal callers.

Propagate back to referring aliases as well.

?? Do not traverse aliases here.

Now validate all tm_safe functions, and all atomic regions in other functions.

Create clones. Do those that are not irrevocable and have a positive call count. Do those publicly visible functions that the user directed us to clone.

Redirect calls to the new clones, and insert irrevocable marks.

Free and clear all data structures.

|

static |

Construct a call to TM_GETTMCLONE and insert it before GSI.

By transforming the call into a TM_GETTMCLONE, we are technically taking the address of the original function and its clone. Explain this so inlining will know this function is needed.

Discard OBJ_TYPE_REF, since we weren't able to fold it. Cast return value from tm_gettmclone* into appropriate function pointer.

??? This is a hack to preserve the NOTHROW bit on the call, which we would have derived from the decl. Failure to save this bit means we might have to split the basic block.

Discarding OBJ_TYPE_REF above may produce incompatible LHS and RHS for a call statement. Fix it.

|

static |

Construct a call to TM_IRREVOCABLE and insert it at the beginning of BB.

|

inline static |

References cgraph_get_create_node().

|

inline static |

|

static |

Return true if, for the transactional clone of NODE, any call may enter irrevocable mode.

Handle some TM builtins. Ordinarily these aren't actually generated at this point, but handling these functions when written in by the user makes it easier to build unit tests.

Filter out all functions that are marked.

If we aren't seeing the final version of the function we don't know what it will contain at runtime.

If the function must go irrevocable, then of course true.

If there are any blocks marked irrevocable, then the function as a whole may enter irrevocable.

We may have previously marked this function as tm_may_enter_irr; see pass_diagnose_tm_blocks.

Recurse on the main body for aliases. In general, this will result in one of the bits above being set so that we will not have to recurse next time.

What remains is unmarked local functions without items that force the function to go irrevocable.

|

static |

The function NODE has been detected to be irrevocable. Push all of its callers onto WORKLIST for the purpose of re-scanning them.

Don't examine recursive calls.

Even if we think we can go irrevocable, believe the user above all.

Check if the callee is in a transactional region. If so, schedule the function for normal re-scan as well.

|

static |

Propagate the irrevocable property both up and down the dominator tree. BB is the current block being scanned; EXIT_BLOCKS are the edges of the TM regions; OLD_IRR are the results of a previous scan of the dominator tree which has been fully propagated; NEW_IRR is the set of new blocks which are gaining the irrevocable property during the current scan.

If this block is in the old set, no need to rescan.

Propagate up. If my children are, I am too, but we must have at least one child that is. Add block to new_irr if it hasn't already been processed.

Propagate down to everyone we immediately dominate.

Make sure block is actually in a TM region, and it isn't already in old_irr.

Referenced by ipa_tm_scan_irr_block().

|

static |

A subroutine of ipa_tm_scan_calls_transaction and ipa_tm_scan_calls_clone. Queue all callees within block BB.

Referenced by gate_tm_memopt().

|

static |

Scan all calls in NODE as if this is the transactional clone, and push the destinations into the callee queue.

|

static |

Scan all calls in NODE that are within a transaction region, and push the resulting nodes into the callee queue.

References bitmap_bit_p, bitmap_set_bit, edge_def::dest, FOR_EACH_EDGE, basic_block_def::index, and basic_block_def::succs.

|

static |

A subroutine of ipa_tm_scan_irr_blocks; return true iff any statement within the block is irrevocable.

Functions with the attribute are by definition irrevocable.

For direct function calls, go ahead and check for replacement functions, or transitive irrevocable functions. For indirect functions, we'll ask the runtime.

Return true if irrevocable, but above all, believe the user.

??? The Approved Method of indicating that an inline assembly statement is not relevant to the transaction is to wrap it in a __tm_waiver block. This is not yet implemented, so we can't check for it.

References bitmap_bit_p, ENTRY_BLOCK_PTR, ipa_tm_propagate_irr(), ipa_tm_scan_irr_blocks(), tm_ipa_cg_data::irrevocable_blocks_clone, and single_succ().

Referenced by ipa_uninstrument_transaction().

|

static |

For each of the blocks seeded within PQUEUE, walk the CFG looking for new irrevocable blocks, marking them in NEW_IRR. Don't bother scanning past OLD_IRR or EXIT_BLOCKS.

Don't re-scan blocks we know already are irrevocable.

Referenced by ipa_tm_scan_irr_block().

|

static |

(Re-)Scan the transaction blocks in NODE for calls to irrevocable functions, as well as other irrevocable actions such as inline assembly. Mark all such blocks as irrevocable and decrement the number of calls to transactional clones. Return true if, for the transactional clone, the entire function is irrevocable.

Builtin operators (operator new, and such).

Scan each tm region, propagating irrevocable status through the tree.

If we found any new irrevocable blocks, reduce the call count for transactional clones within the irrevocable blocks. Save the new set of irrevocable blocks for next time.

|

static |

Walk the CFG for REGION, beginning at BB. Install calls to tm_irrevocable when IRR_BLOCKS are reached, redirect other calls to the generated transactional clone.

static

Helper function for ipa_tm_transform_calls. For a given BB, install calls to tm_irrevocable when IRR_BLOCKS are reached, redirect other calls to the generated transactional clone.

Redirect edges to the appropriate replacement or clone.

References get_cg_data(), tm_ipa_cg_data::in_worklist, and maybe_push_queue().

Referenced by ipa_tm_create_version().

static

Helper function for ipa_tm_transform_calls*. Given a call statement in GSI which resides inside transaction REGION, redirect the call to either its wrapper function or its clone.

For indirect calls, pass the address through the runtime.

Handle some TM builtins. Ordinarily these aren't actually generated at this point, but handling these functions when written in by the user makes it easier to build unit tests.

Fixup recursive calls inside clones.

??? Why did cgraph_copy_node_for_versioning update the call edges for recursion but not update the call statements themselves?

If there is a replacement, use it.

??? Mark all transaction_wrap functions tm_may_enter_irr. We can't do this earlier in record_tm_replacement because cgraph_remove_unreachable_nodes is called before we inject references to the node. Further, we can't do this in some nice central place in ipa_tm_execute because we don't have the exact list of wrapper functions that would be used. Marking more wrappers than necessary results in the creation of unnecessary cgraph_nodes, which can cause some of the other IPA passes to crash.

We do need to mark these nodes so that we get the proper result in expand_call_tm.

??? This seems broken. How is it that we're marking the CALLEE as may_enter_irr? Surely we should be marking the CALLER. Also note that find_tm_replacement_function also contains mappings into the TM runtime, e.g. memcpy. These we know won't go irrevocable.

As we've already skipped pure calls and appropriate builtins, and we've already marked irrevocable blocks, if we can't come up with a static replacement, then ask the runtime.

static

Transform the calls within the transactional clone of NODE.

If this function makes no calls and has no irrevocable blocks, then there's nothing to do.

??? Remove non-aborting top-level transactions.

static

Transform the calls within the TM regions within NODE.

If we're sure to go irrevocable, don't transform anything.

static

Duplicate the basic blocks in QUEUE for use in the uninstrumented code path. QUEUE holds the basic blocks inside the transaction represented by REGION.

Later in split_code_paths() we will add the conditional to choose between the two alternatives.

References BITMAP_ALLOC, bitmap_bit_p, bitmap_set_bit, edge_def::dest, FOR_EACH_EDGE, basic_block_def::index, ipa_tm_scan_irr_block(), and basic_block_def::succs.

Referenced by gate_tm_memopt().

static

Return true if FNDECL is BUILT_IN_TM_ABORT.

static

Return true if X has been marked TM_CALLABLE.

bool is_tm_ending_fndecl ()

Return true for built-in functions that "end" a transaction.

static

Return true if X has been marked TM_IRREVOCABLE.

A call to the irrevocable builtin is, by definition, irrevocable.

static

Return true if STMT is a TM load.

References BUILT_IN_NORMAL, DECL_BUILT_IN_CLASS, DECL_FUNCTION_CODE, and gimple_call_fndecl().

bool is_tm_may_cancel_outer ()

Return true if X has been marked TRANSACTION_MAY_CANCEL_OUTER.

Referenced by diagnose_tm_1_op().

bool is_tm_pure ()

Return true if X has been marked TM_PURE.

FALLTHRU

static

Return true if CALL is const, or tm_pure.

bool is_tm_safe ()

Return true if X has been marked TM_SAFE.

static

Same as above, but for simple TM loads, that is, not the after-write, after-read, etc. optimized variants.

static

Same as above, but for simple TM stores, that is, not the after-write, after-read, etc. optimized variants.

static

Return true if STMT is a TM store.

References BUILT_IN_NORMAL, DECL_BUILT_IN_CLASS, DECL_FUNCTION_CODE, and gimple_call_fndecl().

static

Iterate through the statements in the sequence, lowering them all as appropriate for being outside of a transaction.

static

Iterate through the statements in the sequence, lowering them all as appropriate for being in a transaction.

Only memory reads/writes need to be instrumented.

static

Lower a GIMPLE_TRANSACTION statement.

First, lower the body. The scanning that we do inside gives us some idea of what we're dealing with.

If there was absolutely nothing transaction related inside the transaction, we may elide it. Likewise if this is a nested transaction and does not contain an abort.

Wrap the body of the transaction in a try-finally node so that the commit call is always properly called.

If the transaction calls abort or if this is an outer transaction, add an "over" label afterwards.

Record the set of operations found for use later.

gimple_opt_pass* make_pass_diagnose_tm_blocks ()

References tm_log_entry::addr, tm_log_entry::entry_block, tm_log_entry::save_var, and tm_log_entry::stmts.

simple_ipa_opt_pass* make_pass_ipa_tm ()

gimple_opt_pass* make_pass_lower_tm ()

gimple_opt_pass* make_pass_tm_edges ()

gimple_opt_pass* make_pass_tm_init ()

References build_int_cst(), and CONSTRUCTOR_ELTS.

gimple_opt_pass* make_pass_tm_mark ()

References execute(), and execute_tm_edges().

gimple_opt_pass* make_pass_tm_memopt ()

static

Add NODE to the end of QUEUE, unless IN_QUEUE_P indicates that it is already present.

Referenced by ipa_tm_transform_calls_1().
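A minimal sketch of this queue helper, assuming a fixed-capacity array in place of GCC's vector type. `toy_node`, `toy_queue` and `toy_maybe_push_queue` are hypothetical names chosen for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_QUEUE_CAP 16

/* Hypothetical stand-in for a call-graph node.  */
struct toy_node
{
  int id;
  bool in_worklist;     /* one possible IN_QUEUE_P flag */
};

struct toy_queue
{
  struct toy_node *items[TOY_QUEUE_CAP];
  size_t len;
};

/* Append NODE to the end of QUEUE unless *IN_QUEUE_P says it is already
   present.  The flag is set so that repeated calls are no-ops.  */
static void
toy_maybe_push_queue (struct toy_node *node, struct toy_queue *queue,
                      bool *in_queue_p)
{
  if (!*in_queue_p)
    {
      *in_queue_p = true;
      assert (queue->len < TOY_QUEUE_CAP);
      queue->items[queue->len++] = node;
    }
}
```

Passing the flag by pointer lets the caller decide which per-node field acts as the membership marker. In this sketch, the code that pops a node would be expected to clear that flag so the node can be queued again later.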

static

void record_tm_replacement ()

static

Determine whether X has to be instrumented using a read or write barrier.

ENTRY_BLOCK is the entry block for the region in which STMT resides, or NULL if unknown.

STMT is the statement in which X occurs. It is used for thread-private memory instrumentation. If no TPM instrumentation is desired, STMT should be NULL.

?? Should we pass `orig', or the INDIRECT_REF X. ??

Transaction-locals require nothing at all. For malloc, a transaction restart frees the memory and we reallocate. For alloca, the stack pointer gets reset by the retry and we reallocate.

FALLTHRU

??? This value is a pointer, but aggregate_value_p has been jigged to return true, which confuses needs_to_live_in_memory. This ought to be cleaned up generically.

FIXME: Verify this still happens after the next mainline merge. Testcase: g++.dg/tm/pr47554.C.

For local memory that doesn't escape (aka thread-private memory), we can either save the value at the beginning of the transaction and restore it on restart, or call a TM function to dynamically save and restore it on restart (ITM_L*).

inline static

Create an abnormal edge from STMT at iter, splitting the block as necessary. Adjust *PNEXT as needed for the split block.

Referenced by execute_tm_mark().

static

Evaluate an address X being dereferenced and determine whether it originally points to a non-aliased new chunk of memory (malloc, alloca, etc.).

Return MEM_THREAD_LOCAL if it points to a thread-local address. Return MEM_TRANSACTION_LOCAL if it points to a transaction-local address. Return MEM_NON_LOCAL otherwise.

ENTRY_BLOCK is the entry block to the transaction containing the dereference of X.

Possible uninitialized use, or a function argument. In either case, we don't care.

Look in the cache first. Optimistically assume the memory is transaction-local during processing. This catches recursion into this variable.

Search the DEF chain to find the original definition of this address.

Address escapes. This is not thread-private.

If the malloc call is outside the transaction, this is thread-local.

x = foo ==> foo

x = foo + n ==> foo

x = (cast*) foo ==> foo

x = c ? op1 : op2 ==> op1 or op2, just like a PHI

If any of the ancestors are non-local, we are sure to be non-local. Otherwise we can avoid doing anything and inherit what has already been generated. Exclude self-assignment.

Thread-local or transaction-local.

Referenced by tm_log_emit_restores().
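The classification above can be sketched over a toy table of SSA definitions. Everything here is hypothetical. `toy_def` collapses `x = foo`, `x = foo + n` and `x = (cast*) foo` into a single COPY kind, the `escapes` field stands in for the real alias query, and the enum ordering (least to most local, so that a minimum picks the less local answer) is an assumption consistent with the MIN entry in the reference lists on this page.

```c
#include <assert.h>

/* Ordered from least to most local: the minimum over PHI arguments
   yields the least local classification (assumed ordering).  */
enum toy_mem_type
{
  TOY_MEM_NON_LOCAL,
  TOY_MEM_THREAD_LOCAL,
  TOY_MEM_TRANSACTION_LOCAL,
  TOY_MEM_UNKNOWN            /* cache marker: not computed yet */
};

enum toy_def_kind { TOY_DEF_PARAM, TOY_DEF_MALLOC, TOY_DEF_COPY, TOY_DEF_PHI };

/* One hypothetical SSA definition of a pointer.  */
struct toy_def
{
  enum toy_def_kind kind;
  int in_txn;    /* TOY_DEF_MALLOC: allocation happens inside the transaction */
  int ops[2];    /* TOY_DEF_COPY uses ops[0]; TOY_DEF_PHI uses both */
  int escapes;   /* nonzero if the address escapes */
};

#define TOY_MAX_DEFS 16
static enum toy_mem_type toy_cache[TOY_MAX_DEFS];

static void
toy_reset_cache (void)
{
  for (int i = 0; i < TOY_MAX_DEFS; i++)
    toy_cache[i] = TOY_MEM_UNKNOWN;
}

static enum toy_mem_type
toy_classify (const struct toy_def *defs, int x)
{
  const struct toy_def *d = &defs[x];
  enum toy_mem_type ret;

  /* Function arguments (or uninitialized uses) are simply non-local.  */
  if (d->kind == TOY_DEF_PARAM)
    return TOY_MEM_NON_LOCAL;

  /* Look in the cache first, then optimistically assume transaction-local
     so that a cycle through a PHI terminates.  */
  assert (x < TOY_MAX_DEFS);
  if (toy_cache[x] != TOY_MEM_UNKNOWN)
    return toy_cache[x];
  toy_cache[x] = TOY_MEM_TRANSACTION_LOCAL;

  if (d->escapes)
    ret = TOY_MEM_NON_LOCAL;
  else if (d->kind == TOY_DEF_MALLOC)
    ret = d->in_txn ? TOY_MEM_TRANSACTION_LOCAL : TOY_MEM_THREAD_LOCAL;
  else if (d->kind == TOY_DEF_COPY)
    ret = toy_classify (defs, d->ops[0]);
  else
    {
      /* PHI: any non-local argument makes the result non-local.  */
      ret = TOY_MEM_TRANSACTION_LOCAL;
      for (int i = 0; i < 2 && ret != TOY_MEM_NON_LOCAL; i++)
        {
          if (d->ops[i] == x)          /* exclude self-assignment */
            continue;
          enum toy_mem_type m = toy_classify (defs, d->ops[i]);
          if (m < ret)
            ret = m;
        }
    }

  toy_cache[x] = ret;
  return ret;
}
```

As in the description, the optimistic cache entry only guards against recursion. When a cycle is involved, an intermediate entry can remain more optimistic than a full fixed-point analysis would leave it.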

static

Given an address ADDR in STMT, find it in the memory log or add it, making sure to keep only the addresses highest in the dominator tree.

ENTRY_BLOCK is the entry_block for the transaction.

If we find the address in the log, make sure it's either the same address, or an equivalent one that dominates ADDR.

If we find the address, but ADDR does not dominate the found address and the found one does not dominate ADDR, we're on different execution paths. Add it.

If known, ENTRY_BLOCK is the entry block for the region, otherwise NULL.

Small invariant addresses can be handled as save/restores.

We must be able to copy this type normally, i.e., no special constructors and the like.

Save addresses separately in dominator order so we don't get confused by overlapping addresses in the save/restore sequence.

Use the logging functions.

If we're generating a save/restore sequence, we don't care about statements.

We already have a store to the same address, higher up the dominator tree. Nothing to do.

We should be processing blocks in dominator tree order.

Store is on a different code path.

static

Free logging data structures.

static

Go through the log and instrument addresses that must be instrumented with the logging functions. Leave the save/restore addresses for later.

References tm_log_entry::addr, tm_log_entry::entry_block, hash_table< Descriptor, Allocator >::find_slot(), gcc_assert, gimple_build_assign, GSI_CONTINUE_LINKING, gsi_insert_after(), gsi_start_bb(), NULL, tm_log_entry::save_var, and unshare_expr().

static

Emit the restore sequence for the corresponding addresses in the log. ENTRY_BLOCK is the entry block for the transaction. BB is the basic block to insert the code in.

We only care about variables in the current transaction.

Restores are in LIFO order from the saves in case we have overlaps.

References CDI_DOMINATORS, dominated_by_p(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs3(), gimple_assign_rhs_code(), is_gimple_assign(), mem_non_local, mem_thread_local, MIN, ptr_deref_may_alias_global_p(), SSA_NAME_DEF_STMT, and thread_private_new_memory().

static

Emit the save sequence for the corresponding addresses in the log. ENTRY_BLOCK is the entry block for the transaction. BB is the basic block to insert the code in.

We only care about variables in the current transaction.

Make sure we can create an SSA_NAME for this type. For instance, aggregates aren't allowed, in which case the system will create a VOP for us and everything will just work.

References hash_table< Descriptor, Allocator >::find_slot(), tm_new_mem_map::local_new_memory, mem_non_local, mem_transaction_local, NULL, SSA_NAME_IS_DEFAULT_DEF, TREE_CODE, and tm_new_mem_map::val.

static

Instrument one address with the logging functions. ADDR is the address to save. STMT is the statement before which to place it.

References tm_log_entry::addr, dump_file, and print_generic_expr().

static

Initialize logging data structures.

References tm_log_entry::addr, and TREE_TYPE.

void tm_malloc_replacement ()

When appropriate, record TM replacement for memory allocation functions.

FROM is the FNDECL to wrap.

If we have a previous replacement, the user must be explicitly wrapping malloc/calloc/free. They had better know what they're doing...

static

Return a transactional mangled name for the DECL_ASSEMBLER_NAME in OLD_DECL. The returned value is a freshly malloced pointer that should be freed by the caller.

Determine if the symbol is already a valid C++ mangled name. Do this even for C, which might be interfacing with C++ code via appropriately ugly identifiers.

??? We could probably do just as well checking for "_Z" and be done.

Don't play silly games, you!

I'd really like to know if we can ever be passed one of these from the C++ front end. The Logical Thing would seem that hidden-alias should be outer-most, so that we get hidden-alias of a transaction-clone and not vice-versa.

References create_tmp_reg(), fold_build1, gimple_build_assign, gimple_call_set_lhs(), gsi_insert_after(), GSI_SAME_STMT, and TREE_TYPE.
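A sketch of the name construction, under two assumptions that are not taken from this page. First, the transactional clone name uses the Itanium C++ ABI special-name prefix `_ZGTt`. Second, it uses the cheap "_Z" prefix test mused about above instead of running the demangler. `toy_tm_mangle` is a hypothetical name. As the description requires, the result is freshly malloced and must be freed by the caller.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Return a malloced transactional clone name for ASM_NAME (caller frees),
   or NULL if allocation fails.  Assumes the "_ZGTt" prefix.  */
static char *
toy_tm_mangle (const char *asm_name)
{
  size_t len = strlen (asm_name);
  char *tm_name;

  if (len > 2 && strncmp (asm_name, "_Z", 2) == 0)
    {
      /* Already C++-mangled: splice "GTt" in after the leading "_Z".  */
      tm_name = malloc (len + 4);       /* "_ZGTt" + (len - 2) chars + NUL */
      if (tm_name)
        sprintf (tm_name, "_ZGTt%s", asm_name + 2);
    }
  else
    {
      /* Plain C identifier: encode it as <length><name>.  */
      tm_name = malloc (len + 32);      /* room for prefix, digits and NUL */
      if (tm_name)
        sprintf (tm_name, "_ZGTt%zu%s", len, asm_name);
    }
  return tm_name;
}
```

Under these assumptions, a C function `foo` would map to `_ZGTt3foo`, and a C++ symbol `_Z3barv` would map to `_ZGTt3barv`.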

static

Accumulate TM memory operations in BB into STORE_LOCAL and READ_LOCAL.

References AVAIL_IN_WORKLIST_P, bitmap_bit_p, bitmap_ior_into(), edge_def::dest, EXIT_BLOCK_PTR, tm_region::exit_blocks, FOR_EACH_EDGE, basic_block_def::index, READ_AVAIL_IN, READ_AVAIL_OUT, STORE_AVAIL_IN, STORE_AVAIL_OUT, basic_block_def::succs, tm_memopt_compute_avin(), and worklist.

static

Clear the visited bit for every basic block in BLOCKS.

References add_phi_args_after_copy(), copy_bbs(), gimple_bb(), make_edge(), NULL, and tm_region::transaction_stmt.

static

Compute ANTIC sets for every basic block in BLOCKS.

We compute STORE_ANTIC_OUT as follows:

STORE_ANTIC_OUT[bb] = union(STORE_ANTIC_IN[bb], STORE_LOCAL[bb])

STORE_ANTIC_IN[bb] = intersect(STORE_ANTIC_OUT[successors])

REGION is the TM region. BLOCKS are the basic blocks in the region.

Allocate a worklist array/queue. Entries are only added to the list if they were not already on the list, so the size is bounded by the number of basic blocks in the region.

Seed ANTIC_OUT with the LOCAL set.

Put every block in the region on the worklist.

No need to insert exit blocks, since their ANTIC_IN is NULL and their ANTIC_OUT has already been seeded in. The exit blocks have been initialized with the local sets.

Iterate until the worklist is empty.

Take the first entry off the worklist.

This block can be added to the worklist again if necessary.

Note: We do not add the LOCAL sets here because we already seeded the ANTIC_OUT sets with them.

If the out state of this block changed, then we need to add its predecessors to the worklist if they are not already in.
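The backward dataflow above can be sketched on a toy region using 32-bit masks, where bit i stands for "a TM store with value number i". This is an illustration, not GCC's code. `toy_region`, `toy_compute_antic`, and the convention that exit blocks are exactly the blocks with no successors are assumptions. As in the description, ANTIC_OUT is seeded with LOCAL, exit blocks stay off the worklist, and predecessors are re-queued whenever a block's out state changes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define N_MAX 32

/* Hypothetical toy region: up to two successors per block (-1 = none).  */
struct toy_region
{
  int n;
  int succ[N_MAX][2];
  uint32_t store_local[N_MAX];
};

static void
toy_compute_antic (const struct toy_region *r,
                   uint32_t antic_in[N_MAX], uint32_t antic_out[N_MAX])
{
  int queue[N_MAX];
  bool in_list[N_MAX] = { false };
  int head = 0, count = 0;

  for (int b = 0; b < r->n; b++)
    {
      antic_in[b] = 0;
      antic_out[b] = r->store_local[b];   /* seed ANTIC_OUT with LOCAL */
    }

  /* Exit blocks (no successors here) keep ANTIC_IN empty and stay off.  */
  for (int b = 0; b < r->n; b++)
    if (r->succ[b][0] >= 0 || r->succ[b][1] >= 0)
      {
        queue[count++] = b;
        in_list[b] = true;
      }

  while (count > 0)
    {
      int b = queue[head];                /* take the first entry off */
      head = (head + 1) % N_MAX;
      count--;
      in_list[b] = false;

      uint32_t in = UINT32_MAX;           /* intersect over successors */
      for (int i = 0; i < 2; i++)
        if (r->succ[b][i] >= 0)
          in &= antic_out[r->succ[b][i]];
      antic_in[b] = in;

      uint32_t out = antic_out[b] | in;   /* LOCAL is already included */
      if (out != antic_out[b])
        {
          antic_out[b] = out;
          /* Re-queue predecessors that are not already on the list.  */
          for (int p = 0; p < r->n; p++)
            if (!in_list[p] && (r->succ[p][0] == b || r->succ[p][1] == b))
              {
                assert (count < N_MAX);
                queue[(head + count++) % N_MAX] = p;
                in_list[p] = true;
              }
        }
    }
}
```

On a diamond 0 -> {1, 2} -> 3 where both arms store value 0, bit 0 ends up in ANTIC_IN of block 0. A store that only one arm performs does not.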

static

Compute the STORE_ANTIC_IN for the basic block BB.

Seed with the ANTIC_OUT of any successor.

Make sure we have already visited this BB, and that it is thus initialized.

static

Compute the AVAIL sets for every basic block in BLOCKS.

We compute {STORE,READ}_AVAIL_{OUT,IN} as follows:

AVAIL_OUT[bb] = union (AVAIL_IN[bb], LOCAL[bb])

AVAIL_IN[bb] = intersect (AVAIL_OUT[predecessors])

This is basically what we do in lcm's compute_available(), but here we calculate two sets of sets (one for STOREs and one for READs), and we work on a region instead of the entire CFG.

REGION is the TM region. BLOCKS are the basic blocks in the region.

Allocate a worklist array/queue. Entries are only added to the list if they were not already on the list, so the size is bounded by the number of basic blocks in the region.

Put every block in the region on the worklist.

Seed AVAIL_OUT with the LOCAL set.

No need to insert the entry block, since it has an AVIN of null and an AVOUT that has already been seeded in. The entry block has been initialized with the local sets.

Iterate until the worklist is empty.

Take the first entry off the worklist.

This block can be added to the worklist again if necessary.

Note: We do not add the LOCAL sets here because we already seeded the AVAIL_OUT sets with them.

If the out state of this block changed, then we need to add its successors to the worklist if they are not already in.
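The forward counterpart can be sketched the same way, keeping the STORE and READ sets side by side as the description says. This is a toy illustration under assumptions, not GCC's code. `toy_avail_region` and `toy_compute_avail` are hypothetical, block 0 is taken to be the region entry, and predecessors outside the region are simply not listed, in the spirit of ignoring blocks of an enclosing transaction.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NB 32

/* Hypothetical toy region; block 0 is the entry block.  */
struct toy_avail_region
{
  int n;
  int pred[NB][2];          /* predecessors inside the region, -1 = none */
  uint32_t local[NB][2];    /* [b][0] = STORE_LOCAL, [b][1] = READ_LOCAL */
};

static void
toy_compute_avail (const struct toy_avail_region *r,
                   uint32_t in[][2], uint32_t out[][2])
{
  int queue[NB];
  bool in_list[NB] = { false };
  int head = 0, count = 0;

  for (int b = 0; b < r->n; b++)
    for (int k = 0; k < 2; k++)
      {
        in[b][k] = 0;
        out[b][k] = r->local[b][k];     /* seed AVAIL_OUT with LOCAL */
      }

  /* Every block except the entry goes on the worklist.  */
  for (int b = 1; b < r->n; b++)
    {
      queue[count++] = b;
      in_list[b] = true;
    }

  while (count > 0)
    {
      int b = queue[head];
      head = (head + 1) % NB;
      count--;
      in_list[b] = false;

      bool changed = false;
      for (int k = 0; k < 2; k++)       /* k = 0: stores, k = 1: reads */
        {
          uint32_t avin = UINT32_MAX;
          int npred = 0;
          for (int i = 0; i < 2; i++)
            if (r->pred[b][i] >= 0)
              {
                avin &= out[r->pred[b][i]][k];
                npred++;
              }
          if (npred == 0)
            avin = 0;                   /* nothing flows in */
          in[b][k] = avin;

          uint32_t avout = out[b][k] | avin;
          if (avout != out[b][k])
            {
              out[b][k] = avout;
              changed = true;
            }
        }

      if (changed)
        for (int s = 0; s < r->n; s++)  /* queue successors not yet listed */
          if (!in_list[s] && (r->pred[s][0] == b || r->pred[s][1] == b))
            {
              assert (count < NB);
              queue[(head + count++) % NB] = s;
              in_list[s] = true;
            }
    }
}
```

Sharing one worklist for both kinds of set means a block is revisited if either its STORE or its READ out state changes. Running two separate passes would be an equally valid design.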

static

Compute {STORE,READ}_AVAIL_IN for the basic block BB.

Seed with the AVOUT of any predecessor.

Make sure we have already visited this BB, and that it is thus initialized.

If e->src->aux is NULL, this predecessor is actually on an enclosing transaction. We only care about the current transaction, so ignore it.

Referenced by tm_memopt_accumulate_memops().

static

Free sets computed for each BB.

static read

Return a new set of bitmaps for a BB.

static

Perform the actual TM memory optimization transformations in the basic blocks in BLOCKS.

References all_tm_regions, and cgraph_node::clone.

static

Perform a read/write optimization. Replaces the TM builtin in STMT by a builtin that is OFFSET entries down in the builtins table in gtm-builtins.def.
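The offset trick only works if each plain load or store entry is immediately followed by its optimized variants. The sketch below assumes such a layout. The toy enum is hypothetical and does not reproduce the real contents of gtm-builtins.def, and the `TOY_OFF_*` offsets are illustrative names.

```c
#include <assert.h>

/* Hypothetical table layout: each plain entry is followed by its
   optimized variants, so a variant is "plain entry + offset".  */
enum toy_builtin
{
  TOY_TM_LOAD_4,        /* plain 4-byte load */
  TOY_TM_LOAD_RAR_4,    /* read-after-read */
  TOY_TM_LOAD_RAW_4,    /* read-after-write */
  TOY_TM_LOAD_RFW_4,    /* read-for-write */
  TOY_TM_STORE_4,       /* plain 4-byte store */
  TOY_TM_STORE_WAR_4,   /* write-after-read */
  TOY_TM_STORE_WAW_4,   /* write-after-write */
  TOY_TM_BUILTIN_MAX
};

/* Offsets from a plain load/store to its optimized variants.  */
enum
{
  TOY_OFF_RAR = 1, TOY_OFF_RAW = 2, TOY_OFF_RFW = 3,
  TOY_OFF_WAR = 1, TOY_OFF_WAW = 2
};

/* Return the builtin OFFSET entries below CODE.  Only meaningful when
   CODE is a plain entry; variants must not be offset again.  */
static enum toy_builtin
toy_transform_builtin (enum toy_builtin code, int offset)
{
  int next = (int) code + offset;
  assert (offset >= 0 && next < TOY_TM_BUILTIN_MAX);
  return (enum toy_builtin) next;
}
```

The appeal of this layout is that the optimizer needs no lookup table. The cost is that the builtin definitions must keep this exact ordering.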

static

Given a TM load/store in STMT, return the value number for the address it accesses.
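A minimal value-numbering sketch, consistent with the variable documentation below ("Loads and stores of the same address get the same ID"). Assumptions: addresses are represented by their source spelling and compared with `strcmp` in a linear table. The real pass hashes GCC address trees instead. `toy_value_number` and its storage are hypothetical.

```c
#include <assert.h>
#include <string.h>

#define MAX_MEMOPS 64

/* Hypothetical stand-in for the memop hash table and its counter.  */
static const char *memop_addr[MAX_MEMOPS];
static unsigned memop_id[MAX_MEMOPS];
static unsigned n_memops;
static unsigned memop_value_counter;

/* Return the value number for ADDR, allocating a fresh one on first use,
   so that every load or store of the same address gets the same ID.  */
static unsigned
toy_value_number (const char *addr)
{
  for (unsigned i = 0; i < n_memops; i++)
    if (strcmp (memop_addr[i], addr) == 0)
      return memop_id[i];

  assert (n_memops < MAX_MEMOPS);
  memop_addr[n_memops] = addr;
  memop_id[n_memops] = memop_value_counter++;
  return memop_id[n_memops++];
}
```

These dense IDs are what make the bitmap dataflow above practical, since each ID can serve directly as a bit index in the STORE/READ sets.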

static

Collect all of the transaction regions within the current function and record them in ALL_TM_REGIONS. The REGION parameter may specify an "outermost" region for use by tm clones.

We could store this information in bb->aux, but we may get called through get_all_tm_blocks() from another pass that may be already using bb->aux.

Record exit and irrevocable blocks.

Check for the last statement in the block beginning a new region.

Process subsequent blocks.

If the current block started a new region, make sure that only the entry block of the new region is associated with this region. Other successors are still part of the old region.

Referenced by tm_region_init_1().

static read

A subroutine of tm_region_init. Record the existence of the GIMPLE_TRANSACTION statement in a tree of tm_region elements.

There are either one or two edges out of the block containing the GIMPLE_TRANSACTION, one to the actual region and one to the "over" label if the region contains an abort. The former will always be the one marked FALLTHRU.

static read

A subroutine of tm_region_init. Record all the exit and irrevocable blocks in BB into the region's exit_blocks and irr_blocks bitmaps. Returns the new region being scanned.

Check to see if this is the end of a region by seeing if it contains a call to __builtin_tm_commit{,_eh}. Note that the outermost region for DECL_IS_TM_CLONE need not collect this.

References bitmap_obstack_release(), NULL, and tm_region_init().

static

Return true if MEM is a transaction invariant memory for the TM region starting at REGION_ENTRY_BLOCK.

inline static

Add FLAGS to the GIMPLE_TRANSACTION subcode for the transaction region represented by STATE.

Referenced by ipa_tm_diagnose_tm_safe().

static

Return true if T is a volatile variable of some kind.

References gsi_stmt(), walk_stmt_info::info, and diagnose_tm::stmt.

Variable Documentation

static

Referenced by execute_lower_tm(), gate_tm_memopt(), and tm_memopt_transform_blocks().

bool pending_edge_inserts_p

True if there are pending edge statements to be committed for the current function being scanned in the tmmark pass.

static

The actual log.

Addresses to log with a save/restore sequence. These should be in dominator order.

static

static

Unique counter for TM loads and stores. Loads and stores of the same address get the same ID.

static

static

Map for an SSA_NAME originally pointing to a non-aliased new piece of memory (malloc, alloca, etc.).

static

static

Map for arbitrary function replacement under TM, as created by the tm_wrap attribute.