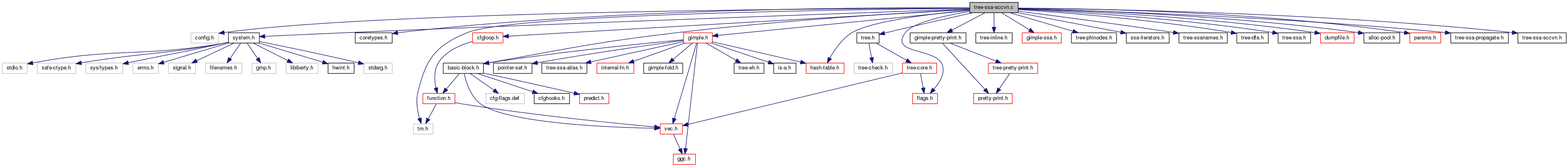

#include "config.h"#include "system.h"#include "coretypes.h"#include "tm.h"#include "tree.h"#include "basic-block.h"#include "gimple-pretty-print.h"#include "tree-inline.h"#include "gimple.h"#include "gimple-ssa.h"#include "tree-phinodes.h"#include "ssa-iterators.h"#include "tree-ssanames.h"#include "tree-dfa.h"#include "tree-ssa.h"#include "dumpfile.h"#include "hash-table.h"#include "alloc-pool.h"#include "flags.h"#include "cfgloop.h"#include "params.h"#include "tree-ssa-propagate.h"#include "tree-ssa-sccvn.h"

Data Structures | |

| struct | vn_nary_op_hasher |

| struct | vn_phi_hasher |

| struct | vn_reference_hasher |

| struct | vn_tables_s |

| struct | vn_constant_hasher |

Macros | |

| #define | SSA_VAL(x) (VN_INFO ((x))->valnum) |

Typedefs | |

| typedef hash_table < vn_nary_op_hasher > | vn_nary_op_table_type |

| typedef vn_nary_op_table_type::iterator | vn_nary_op_iterator_type |

| typedef hash_table< vn_phi_hasher > | vn_phi_table_type |

| typedef vn_phi_table_type::iterator | vn_phi_iterator_type |

| typedef hash_table < vn_reference_hasher > | vn_reference_table_type |

| typedef vn_reference_table_type::iterator | vn_reference_iterator_type |

| typedef struct vn_tables_s * | vn_tables_t |

Variables | |

| static hash_table < vn_constant_hasher > | constant_to_value_id |

| static bitmap | constant_value_ids |

| static vn_tables_t | valid_info |

| static vn_tables_t | optimistic_info |

| static vn_tables_t | current_info |

| static int * | rpo_numbers |

| tree | VN_TOP |

| static unsigned int | next_value_id |

| static unsigned int | next_dfs_num |

| static vec< tree > | sccstack |

| static vec< vn_ssa_aux_t > | vn_ssa_aux_table |

| static struct obstack | vn_ssa_aux_obstack |

| static vec< vn_reference_op_s > | shared_lookup_references |

| static tree * | last_vuse_ptr |

| static vn_lookup_kind | vn_walk_kind |

| static vn_lookup_kind | default_vn_walk_kind |

| static vec< tree > | shared_lookup_phiargs |

Macro Definition Documentation

| #define SSA_VAL | ( | x | ) | (VN_INFO ((x))->valnum) |

Referenced by vn_nary_op_insert_into(), vn_nary_op_insert_stmt(), and vn_reference_lookup().

Typedef Documentation

| typedef vn_nary_op_table_type::iterator vn_nary_op_iterator_type |

| typedef vn_phi_table_type::iterator vn_phi_iterator_type |

| typedef hash_table<vn_phi_hasher> vn_phi_table_type |

| typedef vn_reference_table_type::iterator vn_reference_iterator_type |

| typedef struct vn_tables_s * vn_tables_t |

The set of hashtables and alloc_pool's for their items.

Function Documentation

|

static |

Allocate and initialize a vn_nary_op_t on CURRENT_INFO's obstack.

|

static |

Allocate a vn_nary_op_t with LENGTH operands on STACK.

|

static |

Allocate a value number table.

References OEP_PURE_SAME, operand_equal_p(), and TREE_CODE.

| bool ao_ref_init_from_vn_reference | ( | ao_ref * | ref, |

| alias_set_type | set, | ||

| tree | type, | ||

| vec< vn_reference_op_s > | ops | ||

| ) |

Build a alias-oracle reference abstraction in *REF from the vn_reference operands in *OPS, the reference alias set SET and the reference type TYPE. Return true if something useful was produced.

First get the final access size from just the outermost expression.

Initially, maxsize is the same as the accessed element size. In the following it will only grow (or become -1).

Compute cumulative bit-offset for nested component-refs and array-refs, and find the ultimate containing object.

These may be in the reference ops, but we cannot do anything

sensible with them here. Apart from ADDR_EXPR arguments to MEM_REF.

Fallthru.

Record the base objects.

And now the usual component-reference style ops.

We do not have a complete COMPONENT_REF tree here so we

cannot use component_ref_field_offset. Do the interesting

parts manually. We recorded the lower bound and the element size.

We discount volatiles from value-numbering elsewhere.

|

static |

Compare two operands by reverse postorder index

|

static |

|

static |

Insert the no longer used phi OPHI to the hash INFO.

|

static |

Insert the no longer used reference OREF to the hash INFO.

References hash_table< Descriptor, Allocator >::create(), create_alloc_pool(), gcc_obstack_init, vn_tables_s::nary, vn_tables_s::nary_obstack, vn_tables_s::phis, vn_tables_s::phis_pool, vn_tables_s::references, and vn_tables_s::references_pool.

| void copy_reference_ops_from_call | ( | gimple | call, |

| vec< vn_reference_op_s > * | result | ||

| ) |

Copy the operations present in load/store/call REF into RESULT, a vector of vn_reference_op_s's.

If 2 calls have a different non-ssa lhs, vdef value numbers should be different. By adding the lhs here in the vector, we ensure that the hashcode is different, guaranteeing a different value number.

Copy the type, opcode, function being called and static chain.

Copy the call arguments. As they can be references as well, just chain them together.

Referenced by insert_aux().

| void copy_reference_ops_from_ref | ( | ) |

Copy the operations present in load/store REF into RESULT, a vector of vn_reference_op_s's.

For non-calls, store the information that makes up the address.

The base address gets its own vn_reference_op_s structure.

Record bits and position.

The field decl is enough to unambiguously specify the field,

a matching type is not necessary and a mismatching type

is always a spurious difference. Record index as operand.

Always record lower bounds and element size.

Fallthru.

Canonicalize decls to MEM[&decl] which is what we end up with

when valueizing MEM[ptr] with ptr = &decl. Fallthrough.

These are only interesting for their operands, their

existence, and their type. They will never be the last

ref in the chain of references (IE they require an

operand), so we don't have to put anything

for op* as it will be handled by the iteration This is only interesting for its constant offset.

|

static |

Create a vector of vn_reference_op_s structures from CALL, a call statement. The vector is not shared.

|

static |

Set all definitions in STMT to value number to themselves. Return true if a value number changed.

|

static |

Depth first search on NAME to discover and process SCC's in the SSA graph. Execution of this algorithm relies on the fact that the SCC's are popped off the stack in topological order. Returns true if successful, false if we stopped processing SCC's due to resource constraints.

SCC info

Recursively DFS on our operands, looking for SCC's.

Push a new iterator.

If we are done processing uses of a name, go up the stack

of iterators and process SCCs as we found them. See if we found an SCC.

Check if we are done.

Restore the last use walker and continue walking there.

Since we handle phi nodes, we will sometimes get

invariants in the use expression. Recurse by pushing the current use walking state on

the stack and starting over.

References DECL_ARGUMENTS, DECL_CHAIN, dump_file, dump_flags, free_scc_vn(), get_next_value_id(), get_or_alloc_constant_value_id(), has_zero_uses(), init_scc_vn(), is_gimple_min_invariant(), num_ssa_names, print_generic_expr(), set_hashtable_value_ids(), ssa_default_def(), ssa_name, TREE_CODE, valid_info, vn_ssa_aux::valnum, vn_ssa_aux::value_id, visited, and VN_INFO().

Referenced by simplify_binary_expression().

|

static |

Return true if EXPR contains constants.

Constants inside reference ops are rarely interesting, but it can take a lot of looking to find them.

References fold_ternary, gimple_assign_rhs1(), TREE_OPERAND, and TREE_TYPE.

| bool expressions_equal_p | ( | ) |

Compare two expressions E1 and E2 and return true if they are equal.

The obvious case.

If only one of them is null, they cannot be equal.

Now perform the actual comparison.

Referenced by vn_nary_op_lookup(), and vn_reference_compute_hash().

|

static |

Pop the components of the found SCC for NAME off the SCC stack and process them. Returns true if all went well, false if we run into resource limits.

Found an SCC, pop the components off the SCC stack and process them.

Bail out of SCCVN in case a SCC turns out to be incredibly large.

References release_ssa_name(), ssa_name, and VN_INFO().

|

inlinestatic |

Free a reference operation structure VP.

| void free_scc_vn | ( | void | ) |

Referenced by DFS().

|

static |

Free a value number table.

| tree fully_constant_vn_reference_p | ( | ) |

Optimize the reference REF to a constant if possible or return NULL_TREE if not.

Try to simplify the translated expression if it is a call to a builtin function with at most two arguments.

Simplify reads from constant strings.

| unsigned int get_constant_value_id | ( | ) |

Lookup a value id for CONSTANT and return it. If it does not exist returns 0.

References bitmap_bit_p.

| unsigned int get_max_value_id | ( | void | ) |

Return the maximum value id we have ever seen.

| unsigned int get_next_value_id | ( | void | ) |

Return the next unique value id.

Referenced by create_component_ref_by_pieces(), and DFS().

| unsigned int get_or_alloc_constant_value_id | ( | ) |

Lookup a value id for CONSTANT, and if it does not exist, create a new one and return it. If it does exist, return it.

References FOR_EACH_VEC_ELT, HOST_WIDE_INT, vn_reference_op_struct::off, vn_reference_op_struct::opcode, and vn_reference_s::operands.

Referenced by DFS().

|

static |

VEC_alloc doesn't actually grow it to the right size, it just preallocates the space to do so.

RPO numbers is an array of rpo ordering, rpo[i] = bb means that the i'th block in RPO order is bb. We want to map bb's to RPO numbers, so we need to rearrange this array.

Create the VN_INFO structures, and initialize value numbers to TOP.

Create the valid and optimistic value numbering tables.

References FLOAT_TYPE_P, INTEGRAL_TYPE_P, vn_nary_op_s::type, and TYPE_OVERFLOW_TRAPS.

Referenced by DFS().

|

static |

Initialize VNO from OP.

References sizeof_vn_nary_op().

|

static |

Initialize VNO from the pieces provided.

References init_vn_nary_op_from_stmt(), sizeof_vn_nary_op(), vn_nary_length_from_stmt(), and vn_nary_op_lookup_1().

Referenced by vn_nary_op_eq().

|

static |

Initialize VNO from STMT.

Referenced by init_vn_nary_op_from_pieces().

|

static |

Mark as processed all the definitions in the defining stmt of USE, or the USE itself.

|

static |

Print set of components in strongly connected component SCC to OUT.

|

static |

Process a strongly connected component in the SSA graph.

If the SCC has a single member, just visit it.

We need to make sure it doesn't form a cycle itself, which can

happen for self-referential PHI nodes. In that case we would

end up inserting an expression with VN_TOP operands into the

valid table which makes us derive bogus equivalences later.

The cheapest way to check this is to assume it for all PHI nodes. Fallthru to iteration.

Iterate over the SCC with the optimistic table until it stops changing.

As we are value-numbering optimistically we have to

clear the expression tables and the simplified expressions

in each iteration until we converge. Finally, copy the contents of the no longer used optimistic table to the valid table.

References hash_table< Descriptor, Allocator >::dispose(), free_alloc_pool(), vn_tables_s::nary, vn_tables_s::nary_obstack, vn_tables_s::phis, vn_tables_s::phis_pool, vn_tables_s::references, and vn_tables_s::references_pool.

| bool run_scc_vn | ( | ) |

Do SCCVN. Returns true if it finished, false if we bailed out due to resource constraints. DEFAULT_VN_WALK_KIND_ specifies how we use the alias oracle walking during the VN process.

Initialize the value ids.

Propagate.

|

static |

Set the value ids in the valid hash tables.

Now set the value ids of the things we had put in the hash table.

Referenced by DFS().

|

inlinestatic |

Set the value number of FROM to TO, return true if it has changed as a result.

The only thing we allow as value numbers are VN_TOP, ssa_names and invariants. So assert that here.

??? For addresses involving volatile objects or types operand_equal_p

does not reliably detect ADDR_EXPRs as equal. We know we are only

getting invariant gimple addresses here, so can use

get_addr_base_and_unit_offset to do this comparison.

Referenced by simplify_binary_expression(), visit_nary_op(), and visit_reference_op_load().

|

static |

Set *ID according to RESULT.

|

static |

Simplify the binary expression RHS, and return the result if simplified.

This will not catch every single case we could combine, but will catch those with constants. The goal here is to simultaneously combine constants between expressions, but avoid infinite expansion of expressions during simplification.

Pointer plus constant can be represented as invariant address. Do so to allow further propatation, see also tree forwprop.

Avoid folding if nothing changed.

Make sure result is not a complex expression consisting of operators of operators (IE (a + b) + (a + c)) Otherwise, we will end up with unbounded expressions if fold does anything at all.

References dump_file, dump_flags, vn_ssa_aux::expr, expr_has_constants(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs_code(), vn_ssa_aux::has_constants, is_gimple_min_invariant(), NULL_TREE, print_generic_expr(), print_gimple_expr(), set_ssa_val_to(), stmt_has_constants(), TREE_CODE, try_to_simplify(), unshare_expr(), visit_copy(), and VN_INFO().

|

static |

Simplify the unary expression RHS, and return the result if simplified.

We handle some tcc_reference codes here that are all GIMPLE_ASSIGN_SINGLE codes.

We want to do tree-combining on conversion-like expressions.

Make sure we feed only SSA_NAMEs or constants to fold though. Avoid folding if nothing changed, but remember the expression.

|

static |

Sort an array containing members of a strongly connected component SCC so that the members are ordered by RPO number. This means that when the sort is complete, iterating through the array will give you the members in RPO order.

|

static |

Return true if STMT contains constants.

Fallthru.

Fallthru.

Constants inside reference ops are rarely interesting, but

it can take a lot of looking to find them.

Referenced by simplify_binary_expression().

|

static |

Try to simplify RHS using equivalences and constant folding.

For stores we can end up simplifying a SSA_NAME rhs. Just return in this case, there is no point in doing extra work.

First try constant folding based on our current lattice.

If that didn't work try combining multiple statements.

Fallthrough for some unary codes that can operate on registers.

We could do a little more with unary ops, if they expand

into binary ops, but it's debatable whether it is worth it.

Referenced by simplify_binary_expression().

| bool value_id_constant_p | ( | ) |

Return true if V is a value id for a constant.

References iterative_hash_hashval_t(), vn_reference_op_struct::op0, and vn_reference_op_struct::opcode.

Referenced by bitmap_set_and(), bitmap_set_contains_expr(), and bitmap_set_new().

|

static |

Replace SSA_NAMES in expr with their value numbers, and return the result. This is performed in place.

Fallthru.

|

static |

|

static |

Transform any SSA_NAME's in a vector of vn_reference_op_s structures into their value numbers. This is done in-place, and the vector passed in is returned. *VALUEIZED_ANYTHING will specify whether any operands were valueized.

If it transforms from an SSA_NAME to a constant, update the opcode.

If it transforms from an SSA_NAME to an address, fold with

a preceding indirect reference.

If it transforms a non-constant ARRAY_REF into a constant

one, adjust the constant offset.

|

static |

Create a vector of vn_reference_op_s structures from CALL, a call statement. The vector is shared among all callers of this function.

|

static |

Create a vector of vn_reference_op_s structures from REF, a REFERENCE_CLASS_P tree. The vector is shared among all callers of this function. *VALUEIZED_ANYTHING will specify whether any operands were valueized.

References vn_reference_s::operands, vn_reference_s::result, vn_reference_s::value_id, and vn_reference_s::vuse.

|

static |

Visit a copy between LHS and RHS, return true if the value number changed.

The copy may have a more interesting constant filled expression (we don't, since we know our RHS is just an SSA name).

And finally valueize.

References dump_file, and print_generic_expr().

Referenced by simplify_binary_expression().

|

static |

Visit a nary operator RHS, value number it, and return true if the value number of LHS has changed as a result.

References NULL_TREE, set_ssa_val_to(), and vn_reference_insert().

|

static |

Visit and value number PHI, return true if the value number changed.

TODO: We could check for this in init_sccvn, and replace this with a gcc_assert.

See if all non-TOP arguments have the same value. TOP is equivalent to everything, so we can ignore it.

If all value numbered to the same value, the phi node has that value.

Otherwise, see if it is equivalent to a phi node in this block.

|

static |

Visit a call STMT storing into LHS. Return true if the value number of the LHS has changed as a result.

Non-ssa lhs is handled in copy_reference_ops_from_call.

|

static |

Visit a load from a reference operator RHS, part of STMT, value number it, and return true if the value number of the LHS has changed as a result.

If we have a VCE, try looking up its operand as it might be stored in a different type.

We handle type-punning through unions by value-numbering based on offset and size of the access. Be prepared to handle a type-mismatch here via creating a VIEW_CONVERT_EXPR.

We will be setting the value number of lhs to the value number

of VIEW_CONVERT_EXPR <TREE_TYPE (result)> (result).

So first simplify and lookup this expression to see if it

is already available. If the expression is not yet available, value-number lhs to

a new SSA_NAME we create. Initialize value-number information properly.

As all "inserted" statements are singleton SCCs, insert

to the valid table. This is strictly needed to

avoid re-generating new value SSA_NAMEs for the same

expression during SCC iteration over and over (the

optimistic table gets cleared after each iteration).

We do not need to insert into the optimistic table, as

lookups there will fall back to the valid table.

References dump_file, dump_flags, and set_ssa_val_to().

|

static |

Visit a store to a reference operator LHS, part of STMT, value number it, and return true if the value number of the LHS has changed as a result.

First we want to lookup using the <em>vuses</em> from the store and see if there the last store to this location with the same address had the same value. The vuses represent the memory state before the store. If the memory state, address, and value of the store is the same as the last store to this location, then this store will produce the same memory state as that store. In this case the vdef versions for this store are value numbered to those vuse versions, since they represent the same memory state after this store. Otherwise, the vdefs for the store are used when inserting into the table, since the store generates a new memory state.

Have to set value numbers before insert, since insert is

going to valueize the references in-place. Do not insert structure copies into the tables.

We had a match, so value number the vdef to have the value

number of the vuse it came from.

|

static |

Visit and value number USE, return true if the value number changed.

Handle uninitialized uses.

Shortcut for copies. Simplifying copies is pointless,

since we copy the expression and value they represent. Setting value numbers to constants will occasionally

screw up phi congruence because constants are not

uniquely associated with a single ssa name that can be

looked up. We have to unshare the expression or else

valuizing may change the IL stream. We reset expr and constantness here because we may

have been value numbering optimistically, and

iterating. They may become non-constant in this case,

even if they were optimistically constant. We can substitute SSA_NAMEs that are live over

abnormal edges with their constant value. Stores or copies from SSA_NAMEs that are live over

abnormal edges are a problem. First try to lookup the simplified expression.

Otherwise visit the original statement.

??? We could try to simplify calls.

We reset expr and constantness here because we may

have been value numbering optimistically, and

iterating. They may become non-constant in this case,

even if they were optimistically constant. If calls have a vdef, subsequent calls won't have

the same incoming vuse. So, if 2 calls with vdef have the

same vuse, we know they're not subsequent.

We can value number 2 calls to the same function with the

same vuse and the same operands which are not subsequent

the same, because there is no code in the program that can

compare the 2 values... ... unless the call returns a pointer which does

not alias with anything else. In which case the

information that the values are distinct are encoded

in the IL.

| tree vn_get_expr_for | ( | ) |

Get the representative expression for the SSA_NAME NAME. Returns the representative SSA_NAME if there is no expression associated with it.

If the value-number is a constant it is the representative expression.

Get to the information of the value of this SSA_NAME.

If the value-number is a constant it is the representative expression.

Else if we have an expression, return it.

Otherwise use the defining statement to build the expression.

If the value number is not an assignment use it directly.

FIXME tuples. This is incomplete and likely will miss some simplifications.

Cache the expression.

| enum vn_kind vn_get_stmt_kind | ( | ) |

Return the vn_kind the expression computed by the stmt should be associated with.

VOP-less references can go through unary case.

Fallthrough.

| vn_ssa_aux_t VN_INFO | ( | ) |

Return the value numbering information for a given SSA name.

Referenced by bitmap_find_leader(), copy_nary(), create_component_ref_by_pieces(), DFS(), extract_and_process_scc_for_name(), get_expr_type(), phi_translate_1(), simplify_binary_expression(), vn_nary_op_insert_into(), and vn_phi_eq().

| vn_ssa_aux_t VN_INFO_GET | ( | ) |

Initialize the value numbering info for a given SSA name. This should be called just once for every SSA name.

Referenced by create_component_ref_by_pieces().

|

inlinestatic |

Set the value numbering info for a given SSA name to a given value.

|

static |

Return the number of operands for a vn_nary ops structure from STMT.

Referenced by init_vn_nary_op_from_pieces().

| bool vn_nary_may_trap | ( | ) |

Return true if the nary operation NARY may trap. This is a copy of stmt_could_throw_1_p adjusted to the SCCVN IL.

| hashval_t vn_nary_op_compute_hash | ( | ) |

Compute and return the hash value for nary operation VBO1.

References hash_table< Descriptor, Allocator >::find_slot_with_hash(), vn_nary_op_s::hashcode, vn_tables_s::nary, NULL, NULL_TREE, and vn_nary_op_s::result.

| bool vn_nary_op_eq | ( | ) |

Compare nary operations VNO1 and VNO2 and return true if they are equivalent.

References init_vn_nary_op_from_pieces(), sizeof_vn_nary_op(), and vn_nary_op_lookup_1().

Referenced by vn_nary_op_hasher::hash().

| vn_nary_op_t vn_nary_op_insert | ( | ) |

Insert OP into the current hash table with a value number of RESULT. Return the vn_nary_op_t structure we created and put in the hashtable.

|

static |

Insert VNO into TABLE. If COMPUTE_HASH is true, then compute VNO->HASHCODE first.

References vn_phi_s::block, gimple_bb(), gimple_phi_num_args(), gimple_phi_result(), vn_phi_s::hashcode, PHI_ARG_DEF, vn_phi_s::phiargs, vn_tables_s::phis_pool, pool_alloc(), vn_phi_s::result, SSA_VAL, TREE_CODE, TREE_TYPE, vn_phi_s::type, vn_phi_s::value_id, vn_ssa_aux::value_id, VN_INFO(), vn_phi_compute_hash(), and vNULL.

| vn_nary_op_t vn_nary_op_insert_pieces | ( | unsigned int | length, |

| enum tree_code | code, | ||

| tree | type, | ||

| tree * | ops, | ||

| tree | result, | ||

| unsigned int | value_id | ||

| ) |

Insert a n-ary operation into the current hash table using it's pieces. Return the vn_nary_op_t structure we created and put in the hashtable.

| vn_nary_op_t vn_nary_op_insert_stmt | ( | ) |

Insert the rhs of STMT into the current hash table with a value number of RESULT.

References dump_file, dump_flags, SSA_VAL, and TDF_DETAILS.

| tree vn_nary_op_lookup | ( | ) |

Lookup OP in the current hash table, and return the resulting value number if it exists in the hash table. Return NULL_TREE if it does not exist in the hash table or if the result field of the operation is NULL. VNRESULT will contain the vn_nary_op_t from the hashtable if it exists.

References expressions_equal_p(), and vn_phi_s::phiargs.

|

static |

Compute the hashcode for VNO and look for it in the hash table; return the resulting value number if it exists in the hash table. Return NULL_TREE if it does not exist in the hash table or if the result field of the operation is NULL. VNRESULT will contain the vn_nary_op_t from the hashtable if it exists.

Referenced by init_vn_nary_op_from_pieces(), and vn_nary_op_eq().

| tree vn_nary_op_lookup_pieces | ( | unsigned int | length, |

| enum tree_code | code, | ||

| tree | type, | ||

| tree * | ops, | ||

| vn_nary_op_t * | vnresult | ||

| ) |

Lookup a n-ary operation by its pieces and return the resulting value number if it exists in the hash table. Return NULL_TREE if it does not exist in the hash table or if the result field of the operation is NULL. VNRESULT will contain the vn_nary_op_t from the hashtable if it exists.

| tree vn_nary_op_lookup_stmt | ( | ) |

Lookup the rhs of STMT in the current hash table, and return the resulting value number if it exists in the hash table. Return NULL_TREE if it does not exist in the hash table. VNRESULT will contain the vn_nary_op_t from the hashtable if it exists.

|

inlinestatic |

Compute a hashcode for PHI operation VP1 and return it.

If all PHI arguments are constants we need to distinguish the PHI node via its type.

Referenced by vn_nary_op_insert_into().

|

static |

vn_phi hashtable helpers.

Referenced by vn_phi_hasher::hash().

|

static |

Compare two phi entries for equality, ignoring VN_TOP arguments.

If the PHI nodes do not have compatible types they are not the same.

Any phi in the same block will have it's arguments in the

same edge order, because of how we store phi nodes.

References dump_file, dump_flags, vn_ssa_aux::valnum, and VN_INFO().

|

static |

Insert PHI into the current hash table with a value number of RESULT.

Canonicalize the SSA_NAME's to their value number.

Because we iterate over phi operations more than once, it's possible the slot might already exist here, hence no assert.

|

static |

Lookup PHI in the current hash table, and return the resulting value number if it exists in the hash table. Return NULL_TREE if it does not exist in the hash table.

Canonicalize the SSA_NAME's to their value number.

| hashval_t vn_reference_compute_hash | ( | ) |

Compute a hash for the reference operation VR1 and return it.

References expressions_equal_p(), vn_reference_s::hashcode, HOST_WIDE_INT, INTEGRAL_TYPE_P, vn_reference_s::operands, TREE_INT_CST_LOW, vn_reference_s::type, TYPE_PRECISION, TYPE_SIZE, and vn_reference_s::vuse.

| bool vn_reference_eq | ( | ) |

Return true if reference operations VR1 and VR2 are equivalent. This means they have the same set of operands and vuses.

Early out if this is not a hash collision.

The VOP needs to be the same.

If the operands are the same we are done.

| void vn_reference_fold_indirect | ( | vec< vn_reference_op_s > * | ops, |

| unsigned int * | i_p | ||

| ) |

Fold *& at position *I_P in a vn_reference_op_s vector *OPS. Updates *I_P to point to the last element of the replacement.

The only thing we have to do is from &OBJ.foo.bar add the offset from .foo.bar to the preceding MEM_REF offset and replace the address with &OBJ.

| vn_reference_t vn_reference_insert | ( | ) |

Insert OP into the current hash table with a value number of RESULT, and return the resulting reference structure we created.

Because we lookup stores using vuses, and value number failures using the vdefs (see visit_reference_op_store for how and why), it's possible that on failure we may try to insert an already inserted store. This is not wrong, there is no ssa name for a store that we could use as a differentiator anyway. Thus, unlike the other lookup functions, you cannot gcc_assert (!*slot) here.

But free the old slot in case of a collision.

Referenced by visit_nary_op().

| vn_reference_t vn_reference_insert_pieces | ( | tree | vuse, |

| alias_set_type | set, | ||

| tree | type, | ||

| vec< vn_reference_op_s > | operands, | ||

| tree | result, | ||

| unsigned int | value_id | ||

| ) |

Insert a reference by it's pieces into the current hash table with a value number of RESULT. Return the resulting reference structure we created.

At this point we should have all the things inserted that we have seen before, and we should never try inserting something that already exists.

| tree vn_reference_lookup | ( | tree | op, |

| tree | vuse, | ||

| vn_lookup_kind | kind, | ||

| vn_reference_t * | vnresult | ||

| ) |

Lookup OP in the current hash table, and return the resulting value number if it exists in the hash table. Return NULL_TREE if it does not exist in the hash table or if the result field of the structure was NULL.. VNRESULT will be filled in with the vn_reference_t stored in the hashtable if one exists.

Make sure to use a valueized reference if we valueized anything. Otherwise preserve the full reference for advanced TBAA.

References commutative_tree_code(), iterative_hash_expr(), iterative_hash_hashval_t(), vn_nary_op_s::length, vn_nary_op_s::op, SSA_VAL, TREE_CODE, and tree_swap_operands_p().

|

static |

Lookup a SCCVN reference operation VR in the current hash table. Returns the resulting value number if it exists in the hash table, NULL_TREE otherwise. VNRESULT will be filled in with the actual vn_reference_t stored in the hashtable if something is found.

References gimple_assign_lhs(), HOST_WIDE_INT, is_gimple_assign(), offset, SSA_NAME_DEF_STMT, and vNULL.

Callback for walk_non_aliased_vuses. Adjusts the vn_reference_t VR_ with the current VUSE and performs the expression lookup.

This bounds the stmt walks we perform on reference lookups to O(1) instead of O(N) where N is the number of dominating stores.

Fixup vuse and hash.

|

static |

Callback for walk_non_aliased_vuses. Tries to perform a lookup from the statement defining VUSE and if not successful tries to translate *REFP and VR_ through an aggregate copy at the definition of VUSE.

First try to disambiguate after value-replacing in the definitions LHS.

Avoid re-allocation overhead.

If we cannot constrain the size of the reference we cannot test if anything kills it.

We can't deduce anything useful from clobbers.

def_stmt may-defs *ref. See if we can derive a value for *ref from that definition. 1) Memset.

2) Assignment from an empty CONSTRUCTOR.

3) Assignment from a constant. We can use folds native encode/interpret routines to extract the assigned bits.

We support up to 512-bit values (for V8DFmode).

4) Assignment from an SSA name which definition we may be able to access pieces from.

5) For aggregate copies translate the reference through them if the copy kills ref.

See if the assignment kills REF.

Find the common base of ref and the lhs. lhs_ops already

contains valueized operands for the lhs. ??? The innermost op should always be a MEM_REF and we already

checked that the assignment to the lhs kills vr. Thus for

aggregate copies using char[] types the vn_reference_op_eq

may fail when comparing types for compatibility. But we really

don't care here - further lookups with the rewritten operands

will simply fail if we messed up types too badly. i now points to the first additional op.

??? LHS may not be completely contained in VR, one or more

VIEW_CONVERT_EXPRs could be in its way. We could at least

try handling outermost VIEW_CONVERT_EXPRs. Now re-write REF to be based on the rhs of the assignment.

We need to pre-pend vr->operands[0..i] to rhs.

Adjust *ref from the new operands.

This can happen with bitfields.

Do not update last seen VUSE after translating.

Keep looking for the adjusted *REF / VR pair.

6) For memcpy copies translate the reference through them if the copy kills ref.

??? Handle BCOPY as well.

Only handle non-variable, addressable refs.

Extract a pointer base and an offset for the destination.

Extract a pointer base and an offset for the source.

The bases of the destination and the references have to agree.

And the access has to be contained within the memcpy destination.

Make room for 2 operands in the new reference.

The looked-through reference is a simple MEM_REF.

Adjust *ref from the new operands.

This can happen with bitfields.

Do not update last seen VUSE after translating.

Keep looking for the adjusted *REF / VR pair.

Bail out and stop walking.

References BITS_PER_UNIT, buffer, get_ref_base_and_extent(), gimple_assign_lhs(), gimple_assign_rhs1(), native_encode_expr(), native_interpret_expr(), operand_equal_p(), vn_reference_s::operands, vn_reference_s::set, ao_ref_s::size, vn_reference_s::type, and vn_reference_lookup_or_insert_for_pieces().

|

static |

Lookup an existing or insert a new vn_reference entry into the value table for the VUSE, SET, TYPE, OPERANDS reference which has the value VALUE which is either a constant or an SSA name.

References build_zero_cst(), vn_reference_s::operands, vn_reference_s::set, and vn_reference_s::type.

Referenced by vn_reference_lookup_3().

| tree vn_reference_lookup_pieces | ( | tree | vuse, |

| alias_set_type | set, | ||

| tree | type, | ||

| vec< vn_reference_op_s > | operands, | ||

| vn_reference_t * | vnresult, | ||

| vn_lookup_kind | kind | ||

| ) |

Lookup a reference operation by it's parts, in the current hash table. Returns the resulting value number if it exists in the hash table, NULL_TREE otherwise. VNRESULT will be filled in with the actual vn_reference_t stored in the hashtable if something is found.

Referenced by insert_aux().

|

static |

Fold *& at position *I_P in a vn_reference_op_s vector *OPS. Updates *I_P to point to the last element of the replacement.

The only thing we have to do is from &OBJ.foo.bar add the offset from .foo.bar to the preceding MEM_REF offset and replace the address with &OBJ.

And recurse.

References build_call_expr(), is_gimple_min_invariant(), NULL, vn_reference_op_struct::op0, vn_reference_op_struct::opcode, tcc_constant, TREE_CODE, TREE_CODE_CLASS, and TREE_OPERAND.

|

static |

Compute the hash for a reference operand VRO1.

References iterative_hash_expr(), iterative_hash_hashval_t(), vn_reference_op_struct::op0, TREE_CODE, and TREE_OPERAND.

|

static |

Compare two reference operands P1 and P2 for equality. Return true if they are equal, and false otherwise.

We do not care for differences in type qualification.

Variable Documentation

|

static |

|

static |

|

static |

Pointer to the set of hashtables that is currently being used. Should always point to either the optimistic_info, or the valid_info.

|

static |

|

static |

|

static |

Next DFS number and the stack for strongly connected component detection.

|

static |

Unique counter for our value ids.

|

static |

Optimistic hashtables storing information we are making assumptions about during iterations.

|

static |

Reverse post order index for each basic block.

|

static |

|

static |

Valid hashtables storing information we have proven to be correct.

Referenced by DFS().

|

static |

|

static |

Table of vn_ssa_aux_t's, one per ssa_name. The vn_ssa_aux_t objects are allocated on an obstack for locality reasons, and to free them without looping over the vec.

| tree VN_TOP |

This represents the top of the VN lattice, which is the universal value.

|

static |