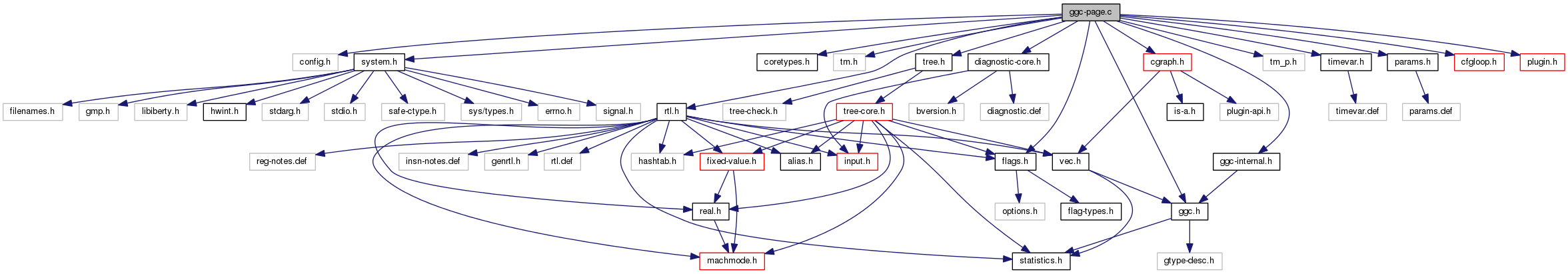

#include "config.h"#include "system.h"#include "coretypes.h"#include "tm.h"#include "tree.h"#include "rtl.h"#include "tm_p.h"#include "diagnostic-core.h"#include "flags.h"#include "ggc.h"#include "ggc-internal.h"#include "timevar.h"#include "params.h"#include "cgraph.h"#include "cfgloop.h"#include "plugin.h"

Data Structures | |

| struct | max_alignment |

| struct | page_entry |

| struct | page_group |

| struct | globals |

| struct | ggc_pch_ondisk |

| struct | ggc_pch_data |

Macros | |

| #define | USING_MALLOC_PAGE_GROUPS |

| #define | GGC_DEBUG_LEVEL (0) |

| #define | HOST_BITS_PER_PTR HOST_BITS_PER_LONG |

| #define | PAGE_L1_BITS (8) |

| #define | PAGE_L2_BITS (32 - PAGE_L1_BITS - G.lg_pagesize) |

| #define | PAGE_L1_SIZE ((uintptr_t) 1 << PAGE_L1_BITS) |

| #define | PAGE_L2_SIZE ((uintptr_t) 1 << PAGE_L2_BITS) |

| #define | LOOKUP_L1(p) (((uintptr_t) (p) >> (32 - PAGE_L1_BITS)) & ((1 << PAGE_L1_BITS) - 1)) |

| #define | LOOKUP_L2(p) (((uintptr_t) (p) >> G.lg_pagesize) & ((1 << PAGE_L2_BITS) - 1)) |

| #define | OBJECTS_PER_PAGE(ORDER) objects_per_page_table[ORDER] |

| #define | OBJECTS_IN_PAGE(P) ((P)->bytes / OBJECT_SIZE ((P)->order)) |

| #define | OBJECT_SIZE(ORDER) object_size_table[ORDER] |

| #define | DIV_MULT(ORDER) inverse_table[ORDER].mult |

| #define | DIV_SHIFT(ORDER) inverse_table[ORDER].shift |

| #define | OFFSET_TO_BIT(OFFSET, ORDER) (((OFFSET) * DIV_MULT (ORDER)) >> DIV_SHIFT (ORDER)) |

| #define | MAX_ALIGNMENT (offsetof (struct max_alignment, u)) |

| #define | NUM_EXTRA_ORDERS ARRAY_SIZE (extra_order_size_table) |

| #define | RTL_SIZE(NSLOTS) (RTX_HDR_SIZE + (NSLOTS) * sizeof (rtunion)) |

| #define | TREE_EXP_SIZE(OPS) (sizeof (struct tree_exp) + ((OPS) - 1) * sizeof (tree)) |

| #define | NUM_ORDERS (HOST_BITS_PER_PTR + NUM_EXTRA_ORDERS) |

| #define | ROUND_UP_VALUE(x, f) ((f) - 1 - ((f) - 1 + (x)) % (f)) |

| #define | ROUND_UP(x, f) (CEIL (x, f) * (f)) |

| #define | PAGE_ALIGN(x) (((x) + G.pagesize - 1) & ~(G.pagesize - 1)) |

| #define | BITMAP_SIZE(Num_objects) (CEIL ((Num_objects), HOST_BITS_PER_LONG) * sizeof (long)) |

| #define | GGC_QUIRE_SIZE 16 |

| #define | INITIAL_PTE_COUNT 128 |

| #define | prefetch(X) ((void) X) |

| #define | save_in_use_p_i(__i) (G.save_in_use[__i]) |

| #define | save_in_use_p(__p) (save_in_use_p_i (__p->index_by_depth)) |

| #define | NUM_SIZE_LOOKUP 512 |

| #define | poison_pages() |

| #define | validate_free_objects() |

| #define | SCALE(x) |

| #define | STAT_LABEL(x) ((x) < 1024*10 ? ' ' : ((x) < 1024*1024*10 ? 'k' : 'M')) |

Typedefs | |

| typedef struct page_entry | page_entry |

| typedef struct page_group | page_group |

| typedef page_entry ** | page_table [PAGE_L1_SIZE] |

Functions | |

| static int | ggc_allocated_p (const void *) |

| static page_entry * | lookup_page_table_entry (const void *) |

| static void | set_page_table_entry (void *, page_entry *) |

| static size_t | page_group_index (char *, char *) |

| static void | set_page_group_in_use (page_group *, char *) |

| static void | clear_page_group_in_use (page_group *, char *) |

| static struct page_entry * | alloc_page (unsigned) |

| static void | free_page (struct page_entry *) |

| static void | release_pages (void) |

| static void | clear_marks (void) |

| static void | sweep_pages (void) |

| static void | ggc_recalculate_in_use_p (page_entry *) |

| static void | compute_inverse (unsigned) |

| static void | adjust_depth (void) |

| static void | move_ptes_to_front (int, int) |

| void | debug_print_page_list (int) |

| static void | push_depth (unsigned int) |

| static void | push_by_depth (page_entry *, unsigned long *) |

| static void | push_depth () |

| static void | push_by_depth () |

| static int | ggc_allocated_p () |

| static page_entry * | lookup_page_table_entry () |

| static void | set_page_table_entry () |

| DEBUG_FUNCTION void | debug_print_page_list () |

| static size_t | page_group_index () |

| static void | set_page_group_in_use () |

| static void | clear_page_group_in_use () |

| static struct page_entry * | alloc_page () |

| static void | free_page () |

| static void | ggc_round_alloc_size_1 (size_t requested_size, size_t *size_order, size_t *alloced_size) |

| size_t | ggc_round_alloc_size () |

| void * | ggc_internal_alloc_stat () |

| void | gt_ggc_m_S () |

| void | gt_ggc_mx () |

| int | ggc_set_mark () |

| int | ggc_marked_p () |

| size_t | ggc_get_size () |

| void | ggc_free () |

| static void | compute_inverse () |

| void | init_ggc () |

| static void | ggc_recalculate_in_use_p () |

| void | ggc_collect () |

| void | ggc_print_statistics () |

| struct ggc_pch_data * | init_ggc_pch () |

| void | ggc_pch_count_object (struct ggc_pch_data *d, void *x, size_t size, bool is_string) |

| size_t | ggc_pch_total_size () |

| void | ggc_pch_this_base () |

| char * | ggc_pch_alloc_object (struct ggc_pch_data *d, void *x, size_t size, bool is_string) |

| void | ggc_pch_prepare_write (struct ggc_pch_data *d, FILE *f) |

| void | ggc_pch_write_object (struct ggc_pch_data *d, FILE *f, void *x, void *newx, size_t size, bool is_string) |

| void | ggc_pch_finish () |

| static void | move_ptes_to_front () |

| void | ggc_pch_read () |

Variables | |

| static const size_t | extra_order_size_table [] |

| static unsigned | objects_per_page_table [NUM_ORDERS] |

| static size_t | object_size_table [NUM_ORDERS] |

| struct { | |

| size_t mult | |

| unsigned int shift | |

| } | inverse_table [NUM_ORDERS] |

| static struct globals | G |

| static unsigned char | size_lookup [NUM_SIZE_LOOKUP] |

Macro Definition Documentation

| #define BITMAP_SIZE | ( | Num_objects | ) | (CEIL ((Num_objects), HOST_BITS_PER_LONG) * sizeof (long)) |

The size in bytes required to maintain a bitmap for the objects on a page-entry.

Referenced by compute_inverse(), and debug_print_page_list().

| #define DIV_MULT | ( | ORDER | ) | inverse_table[ORDER].mult |

For speed, we avoid doing a general integer divide to locate the offset in the allocation bitmap, by precalculating numbers M, S such that (O * M) >> S == O / Z (modulo 2^32), for any offset O within the page which is evenly divisible by the object size Z.

| #define DIV_SHIFT | ( | ORDER | ) | inverse_table[ORDER].shift |

| #define GGC_DEBUG_LEVEL (0) |

Strategy:

This garbage-collecting allocator allocates objects on one of a set of pages. Each page can allocate objects of a single size only; available sizes are powers of two starting at four bytes. The size of an allocation request is rounded up to the next power of two (`order'), and satisfied from the appropriate page.

Each page is recorded in a page-entry, which also maintains an in-use bitmap of object positions on the page. This allows the allocation state of a particular object to be flipped without touching the page itself.

Each page-entry also has a context depth, which is used to track pushing and popping of allocation contexts. Only objects allocated in the current (highest-numbered) context may be collected.

Page entries are arranged in an array of singly-linked lists. The array is indexed by the allocation size, in bits, of the pages on it; i.e. all pages on a list allocate objects of the same size. Pages are ordered on the list such that all non-full pages precede all full pages, with non-full pages arranged in order of decreasing context depth.

Empty pages (of all orders) are kept on a single page cache list, and are considered first when new pages are required; they are deallocated at the start of the next collection if they haven't been recycled by then. Define GGC_DEBUG_LEVEL to print debugging information. 0: No debugging output. 1: GC statistics only. 2: Page-entry allocations/deallocations as well. 3: Object allocations as well. 4: Object marks as well.

Referenced by gt_ggc_m_S().

| #define GGC_QUIRE_SIZE 16 |

Allocate pages in chunks of this size, to throttle calls to memory allocation routines. The first page is used, the rest go onto the free list. This cannot be larger than HOST_BITS_PER_INT for the in_use bitmask for page_group. Hosts that need a different value can override this by defining GGC_QUIRE_SIZE explicitly.

Referenced by debug_print_page_list().

| #define HOST_BITS_PER_PTR HOST_BITS_PER_LONG |

| #define INITIAL_PTE_COUNT 128 |

Initial guess as to how many page table entries we might need.

| #define LOOKUP_L1 | ( | p | ) | (((uintptr_t) (p) >> (32 - PAGE_L1_BITS)) & ((1 << PAGE_L1_BITS) - 1)) |

| #define LOOKUP_L2 | ( | p | ) | (((uintptr_t) (p) >> G.lg_pagesize) & ((1 << PAGE_L2_BITS) - 1)) |

| #define MAX_ALIGNMENT (offsetof (struct max_alignment, u)) |

The biggest alignment required.

| #define NUM_EXTRA_ORDERS ARRAY_SIZE (extra_order_size_table) |

The number of extra orders, not corresponding to power-of-two sized objects.

| #define NUM_ORDERS (HOST_BITS_PER_PTR + NUM_EXTRA_ORDERS) |

The total number of orders.

| #define NUM_SIZE_LOOKUP 512 |

This table provides a fast way to determine ceil(log_2(size)) for allocation requests. The minimum allocation size is eight bytes.

| #define OBJECT_SIZE | ( | ORDER | ) | object_size_table[ORDER] |

The size of an object on a page of the indicated ORDER.

Referenced by clear_marks(), debug_print_page_list(), and gt_ggc_m_S().

| #define OBJECTS_IN_PAGE | ( | P | ) | ((P)->bytes / OBJECT_SIZE ((P)->order)) |

The number of objects in P.

Referenced by clear_marks(), and compute_inverse().

| #define OBJECTS_PER_PAGE | ( | ORDER | ) | objects_per_page_table[ORDER] |

The number of objects per allocation page, for objects on a page of the indicated ORDER.

Referenced by debug_print_page_list().

Referenced by gt_ggc_m_S(), and sweep_pages().

| #define PAGE_ALIGN | ( | x | ) | (((x) + G.pagesize - 1) & ~(G.pagesize - 1)) |

Round X to next multiple of the page size

Referenced by debug_print_page_list(), and ggc_collect().

| #define PAGE_L1_BITS (8) |

A two-level tree is used to look up the page-entry for a given pointer. Two chunks of the pointer's bits are extracted to index the first and second levels of the tree, as follows:

HOST_PAGE_SIZE_BITS

32 | |

msb +—————-+—-+——+——+ lsb

| | |

PAGE_L1_BITS |

| |

PAGE_L2_BITS

The bottommost HOST_PAGE_SIZE_BITS are ignored, since page-entry pages are aligned on system page boundaries. The next most significant PAGE_L2_BITS and PAGE_L1_BITS are the second and first index values in the lookup table, respectively.

For 32-bit architectures and the settings below, there are no leftover bits. For architectures with wider pointers, the lookup tree points to a list of pages, which must be scanned to find the correct one.

| #define PAGE_L1_SIZE ((uintptr_t) 1 << PAGE_L1_BITS) |

| #define PAGE_L2_BITS (32 - PAGE_L1_BITS - G.lg_pagesize) |

| #define PAGE_L2_SIZE ((uintptr_t) 1 << PAGE_L2_BITS) |

| #define poison_pages | ( | ) |

| #define prefetch | ( | X | ) | ((void) X) |

| #define ROUND_UP | ( | x, | |

| f | |||

| ) | (CEIL (x, f) * (f)) |

Compute the smallest multiple of F that is >= X.

| #define ROUND_UP_VALUE | ( | x, | |

| f | |||

| ) | ((f) - 1 - ((f) - 1 + (x)) % (f)) |

Compute the smallest nonnegative number which when added to X gives a multiple of F.

| #define RTL_SIZE | ( | NSLOTS | ) | (RTX_HDR_SIZE + (NSLOTS) * sizeof (rtunion)) |

| #define save_in_use_p | ( | __p | ) | (save_in_use_p_i (__p->index_by_depth)) |

Referenced by compute_inverse().

| #define save_in_use_p_i | ( | __i | ) | (G.save_in_use[__i]) |

| #define SCALE | ( | x | ) |

Print allocation statistics.

| #define STAT_LABEL | ( | x | ) | ((x) < 1024*10 ? ' ' : ((x) < 1024*1024*10 ? 'k' : 'M')) |

| #define USING_MALLOC_PAGE_GROUPS |

"Bag-of-pages" garbage collector for the GNU compiler. Copyright (C) 1999-2013 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see http://www.gnu.org/licenses/. Prefer MAP_ANON(YMOUS) to /dev/zero, since we don't need to keep a file open. Prefer either to valloc.

| #define validate_free_objects | ( | ) |

Typedef Documentation

| typedef struct page_entry page_entry |

A page_entry records the status of an allocation page. This structure is dynamically sized to fit the bitmap in_use_p.

| typedef struct page_group page_group |

A page_group describes a large allocation from malloc, from which we parcel out aligned pages.

| typedef page_entry** page_table[PAGE_L1_SIZE] |

On 32-bit hosts, we use a two level page table, as pictured above.

Function Documentation

|

inlinestatic |

Adjust the size of G.depth so that no index greater than the one used by the top of the G.by_depth is used.

Peel back indices in depth that index into by_depth, so that as new elements are added to by_depth, we note the indices of those elements, if they are for new context depths.

|

staticread |

|

staticread |

Allocate a new page for allocating objects of size 2^ORDER, and return an entry for it. The entry is not added to the appropriate page_table list.

Check the list of free pages for one we can use.

Recycle the allocated memory from this page ...

... and, if possible, the page entry itself.

Allocate a large block of memory and serve out the aligned

pages therein. This results in much less memory wastage

than the traditional implementation of valloc. We allocated N pages, which are likely not aligned, leaving

us with N-1 usable pages. We plan to place the page_group

structure somewhere in the slop. We magically got an aligned allocation. Too bad, we have

to waste a page anyway. Remember that we allocated this memory.

If we allocated multiple pages, put the rest on the free list.

Set the one-past-the-end in-use bit. This acts as a sentry as we increment the hint.

|

static |

Unmark all objects.

The data should be page-aligned.

Pages that aren't in the topmost context are not collected;

nevertheless, we need their in-use bit vectors to store GC

marks. So, back them up first. Reset reset the number of free objects and clear the

in-use bits. These will be adjusted by mark_obj. Make sure the one-past-the-end bit is always set.

References page_entry::context_depth, globals::context_depth, G, HOST_BITS_PER_LONG, page_entry::in_use_p, page_entry::next, NULL, OBJECT_SIZE, OBJECTS_IN_PAGE, page_entry::page, globals::pages, and VALGRIND_DISCARD.

|

static |

|

inlinestatic |

|

static |

|

static |

Subroutine of init_ggc which computes the pair of numbers used to perform division by OBJECT_SIZE (order) and fills in inverse_table[].

This algorithm is taken from Granlund and Montgomery's paper "Division by Invariant Integers using Multiplication" (Proc. SIGPLAN PLDI, 1994), section 9 (Exact division by constants).

References BITMAP_SIZE, page_entry::context_depth, globals::context_depth, G, gcc_assert, page_entry::in_use_p, page_entry::num_free_objects, OBJECTS_IN_PAGE, page_entry::page, globals::pagesize, and save_in_use_p.

| void debug_print_page_list | ( | int | ) |

| DEBUG_FUNCTION void debug_print_page_list | ( | ) |

Prints the page-entry for object size ORDER, for debugging.

References BITMAP_SIZE, page_entry::bytes, globals::bytes_mapped, page_entry::discarded, globals::free_pages, G, GGC_QUIRE_SIZE, page_entry::group, page_entry::next, NULL, OBJECT_SIZE, OBJECTS_PER_PAGE, page_entry::order, page_entry::page, PAGE_ALIGN, and globals::pagesize.

|

static |

|

static |

For a page that is no longer needed, put it on the free page list.

Mark the page as inaccessible. Discard the handle to avoid handle leak.

We cannot free a page from a context deeper than the current

one. Put top element into freed slot.

|

static |

|

inlinestatic |

Returns nonzero if P was allocated in GC'able memory.

Extract the level 1 and 2 indices.

| void ggc_collect | ( | void | ) |

Top level mark-and-sweep routine.

Avoid frequent unnecessary work by skipping collection if the total allocations haven't expanded much since the last collection.

Zero the total allocated bytes. This will be recalculated in the sweep phase.

Release the pages we freed the last time we collected, but didn't reuse in the interim.

Indicate that we've seen collections at this context depth.

References ggc_pch_data::base, ggc_pch_data::d, PAGE_ALIGN, and ggc_pch_ondisk::totals.

Referenced by ggc_splay_alloc(), and gcc::pass_manager::pass_manager().

| void ggc_free | ( | ) |

Release the memory for object P.

Let valgrind know the object is free.

Mark the object not-in-use.

If the page is completely full, then it's supposed to

be after all pages that aren't. Since we've freed one

object from a page that was full, we need to move the

page to the head of the list.

PE is the node we want to move. Q is the previous node

and P is the next node in the list. If PE was at the end of the list, then Q becomes the

new end of the list. If PE was not the end of the

list, then we need to update the PREV field for P. Move PE to the head of the list.

Reset the hint bit to point to the only free object.

| size_t ggc_get_size | ( | ) |

Return the size of the gc-able object P.

| void* ggc_internal_alloc_stat | ( | ) |

Allocate a chunk of memory of SIZE bytes. Its contents are undefined.

If there are non-full pages for this size allocation, they are at the head of the list.

If there is no page for this object size, or all pages in this context are full, allocate a new page.

We can skip context depths, if we do, make sure we go all the

way to the new depth. If this is the only entry, it's also the tail. If it is not

the only entry, then we must update the PREV pointer of the

ENTRY (G.pages[order]) to point to our new page entry. Put new pages at the head of the page list. By definition the

entry at the head of the list always has a NULL pointer. For a new page, we know the word and bit positions (in the

in_use bitmap) of the first available object – they're zero. First try to use the hint left from the previous allocation

to locate a clear bit in the in-use bitmap. We've made sure

that the one-past-the-end bit is always set, so if the hint

has run over, this test will fail. If the hint didn't work, scan the bitmap from the beginning.

Next time, try the next bit.

Set the in-use bit.

Keep a running total of the number of free objects. If this page fills up, we may have to move it to the end of the list if the next page isn't full. If the next page is full, all subsequent pages are full, so there's no need to move it.

We have a new head for the list.

We are moving ENTRY to the end of the page table list.

The new page at the head of the list will have NULL in

its PREV field and ENTRY will have NULL in its NEXT field. Append ENTRY to the tail of the list.

Calculate the object's address.

Tell Valgrind that the memory is there, but its content isn't defined. The bytes at the end of the object are still marked unaccessible.

Keep track of how many bytes are being allocated. This information is used in deciding when to collect.

For timevar statistics.

| int ggc_marked_p | ( | ) |

Return 1 if P has been marked, zero otherwise. P must have been allocated by the GC allocator; it mustn't point to static objects, stack variables, or memory allocated with malloc.

Look up the page on which the object is alloced. If the object wasn't allocated by the collector, we'll probably die.

Calculate the index of the object on the page; this is its bit position in the in_use_p bitmap.

Referenced by stringpool_statistics().

| char* ggc_pch_alloc_object | ( | struct ggc_pch_data * | , |

| void * | , | ||

| size_t | , | ||

| bool | |||

| ) |

Assuming that the objects really do end up at the address passed to ggc_pch_this_base, return the address of this object. The bool argument should be true if the object is a string.

| void ggc_pch_count_object | ( | struct ggc_pch_data * | , |

| void * | , | ||

| size_t | , | ||

| bool | |||

| ) |

The second parameter and third parameters give the address and size of an object. Update the ggc_pch_data structure with as much of that information as is necessary. The bool argument should be true if the object is a string.

| void ggc_pch_finish | ( | ) |

| void ggc_pch_prepare_write | ( | struct ggc_pch_data * | , |

| FILE * | |||

| ) |

Write out any initial information required.

Nothing to do.

| void ggc_pch_read | ( | ) |

We've just read in a PCH file. So, every object that used to be allocated is now free.

Since we free all the allocated objects, the free list becomes useless. Validate it now, which will also clear it.

No object read from a PCH file should ever be freed. So, set the context depth to 1, and set the depth of all the currently-allocated pages to be 1 too. PCH pages will have depth 0.

Allocate the appropriate page-table entries for the pages read from the PCH file.

We start off by just adding all the new information to the

end of the varrays, later, we will move the new information

to the front of the varrays, as the PCH page tables are at

context 0. Now, we update the various data structures that speed page table handling.

Update the statistics.

| void ggc_pch_this_base | ( | ) |

| size_t ggc_pch_total_size | ( | ) |

| void ggc_pch_write_object | ( | struct ggc_pch_data * | , |

| FILE * | , | ||

| void * | , | ||

| void * | , | ||

| size_t | , | ||

| bool | |||

| ) |

Write out this object, including any padding. The last argument should be true if the object is a string.

If SIZE is not the same as OBJECT_SIZE(order), then we need to pad the object out to OBJECT_SIZE(order). This happens for strings.

To speed small writes, we use a nulled-out array that's larger

than most padding requests as the source for our null bytes. This

permits us to do the padding with fwrite() rather than fseek(), and

limits the chance the OS may try to flush any outstanding writes. Larger than our buffer? Just default to fseek.

| void ggc_print_statistics | ( | void | ) |

Statistics. Print allocation statistics.

Clear the statistics.

Make sure collection will really occur.

Collect and print the statistics common across collectors.

Release free pages so that we will not count the bytes allocated there as part of the total allocated memory.

Collect some information about the various sizes of allocation.

Skip empty entries.

Figure out the total number of bytes allocated for objects of

this size, and how many of them are actually in use. Also figure

out how much memory the page table is using.

References fatal_error().

|

static |

|

static |

Merge the SAVE_IN_USE_P and IN_USE_P arrays in P so that IN_USE_P reflects reality. Recalculate NUM_FREE_OBJECTS as well.

Because the past-the-end bit in in_use_p is always set, we pretend there is one additional object.

Reset the free object count.

Combine the IN_USE_P and SAVE_IN_USE_P arrays.

Something is in use if it is marked, or if it was in use in a

context further down the context stack. Decrement the free object count for every object allocated.

| size_t ggc_round_alloc_size | ( | ) |

For a given size of memory requested for allocation, return the actual size that is going to be allocated.

|

static |

For a given size of memory requested for allocation, return the actual size that is going to be allocated, as well as the size order.

| int ggc_set_mark | ( | ) |

If P is not marked, marks it and return false. Otherwise return true. P must have been allocated by the GC allocator; it mustn't point to static objects, stack variables, or memory allocated with malloc.

Look up the page on which the object is alloced. If the object wasn't allocated by the collector, we'll probably die.

Calculate the index of the object on the page; this is its bit position in the in_use_p bitmap.

If the bit was previously set, skip it.

Otherwise set it, and decrement the free object count.

Referenced by ggc_register_cache_tab().

| void gt_ggc_m_S | ( | ) |

Mark function for strings.

Look up the page on which the object is alloced. .

Calculate the index of the object on the page; this is its bit position in the in_use_p bitmap. Note that because a char* might point to the middle of an object, we need special code here to make sure P points to the start of an object.

Here we've seen a char* which does not point to the beginning

of an allocated object. We assume it points to the middle of

a STRING_CST. If the bit was previously set, skip it.

Otherwise set it, and decrement the free object count.

References globals::allocated, globals::debug_file, G, GGC_DEBUG_LEVEL, ggc_free_overhead(), HOST_BITS_PER_LONG, page_entry::in_use_p, lookup_page_table_entry(), page_entry::num_free_objects, OBJECT_SIZE, OFFSET_TO_BIT, page_entry::order, page_entry::page, page_entry::prev, and VALGRIND_DISCARD.

| void gt_ggc_mx | ( | ) |

User-callable entry points for marking string X.

Nothing to do. Vectors of atomic types wrt GC do not need to be traversed.

References G, page_entry::next, NULL, order, globals::page_tails, globals::pages, and page_entry::prev.

| void init_ggc | ( | void | ) |

Initialize the ggc-mmap allocator.

Initialize the object size table.

If S is not a multiple of the MAX_ALIGNMENT, then round it up

so that we're sure of getting aligned memory. Initialize the objects-per-page and inverse tables.

Reset the size_lookup array to put appropriately sized objects in the special orders. All objects bigger than the previous power of two, but no greater than the special size, should go in the new order.

|

read |

Return a new ggc_pch_data structure.

References page_entry::bytes.

|

static |

Referenced by gt_ggc_m_S(), and sweep_pages().

|

inlinestatic |

Traverse the page table and find the entry for a page. Die (probably) if the object wasn't allocated via GC.

Extract the level 1 and 2 indices.

References globals::bytes_mapped, FATAL_EXIT_CODE, G, MAP_FAILED, NULL, page_entry::page, and pref.

|

static |

|

static |

Move the PCH PTE entries just added to the end of by_depth, to the front.

First, we swap the new entries to the front of the varrays.

Now update all the index_by_depth fields.

And last, we update the depth pointers in G.depth. The first entry is already 0, and context 0 entries always start at index 0, so there is nothing to update in the first slot. We need a second slot, only if we have old ptes, and if we do, they start at index count_new_page_tables.

|

static |

|

inlinestatic |

Compute the index for this page into the page group.

References G, NULL, and globals::pagesize.

|

static |

|

inlinestatic |

Push an entry onto G.by_depth and G.save_in_use.

|

static |

|

inlinestatic |

Push an entry onto G.depth.

References G, and globals::lookup.

|

static |

Release the free page cache to the system.

Remove all pages from free page groups from the list.

Remove all free page groups, and release the storage.

|

static |

|

inlinestatic |

Set and clear the in_use bit for this page in the page group.

References page_entry::bytes, G, globals::lg_pagesize, page_entry::next, page_entry::order, order, page_entry::page, and globals::pagesize.

|

static |

|

static |

Set the page table entry for a page.

Extract the level 1 and 2 indices.

|

static |

Free all empty pages. Partially empty pages need no attention because the `mark' bit doubles as an `unused' bit.

The last page-entry to consider, regardless of entries

placed at the end of the list.

Loop until all entries have been examined.

Add all live objects on this page to the count of

allocated memory. Only objects on pages in the topmost context should get

collected. Remove the page if it's empty.

If P was the first page in the list, then NEXT

becomes the new first page in the list, otherwise

splice P out of the forward pointers. Splice P out of the back pointers too.

Are we removing the last element?

If the page is full, move it to the end.

Don't move it if it's already at the end.

Move p to the end of the list.

Update the tail pointer...

... and the head pointer, if necessary.

And update the backpointer in NEXT if necessary.

If we've fallen through to here, it's a page in the

topmost context that is neither full nor empty. Such a

page must precede pages at lesser context depth in the

list, so move it to the head. Update the backchain in the next node if it exists.

Move P to the head of the list.

Update the head pointer.

Are we moving the last element?

Now, restore the in_use_p vectors for any pages from contexts

other than the current one.

References page_entry::context_depth, globals::context_depth, G, gcc_assert, HOST_BITS_PER_LONG, page_entry::in_use_p, lookup_page_table_entry(), NULL, OFFSET_TO_BIT, page_entry::order, and page_entry::page.

Variable Documentation

|

static |

The Ith entry is the maximum size of an object to be stored in the Ith extra order. Adding a new entry to this array is the only thing you need to do to add a new special allocation size.

|

static |

| struct { ... } inverse_table[NUM_ORDERS] |

The Ith entry is a pair of numbers (mult, shift) such that ((k * mult) >> shift) mod 2^32 == (k / OBJECT_SIZE(I)) mod 2^32, for all k evenly divisible by OBJECT_SIZE(I).

| size_t mult |

Referenced by coalesce_cost_bb(), and update_costs_from_allocno().

|

static |

The Ith entry is the size of an object on a page of order I.

|

static |

The Ith entry is the number of objects on a page or order I.

| unsigned int shift |

|

static |