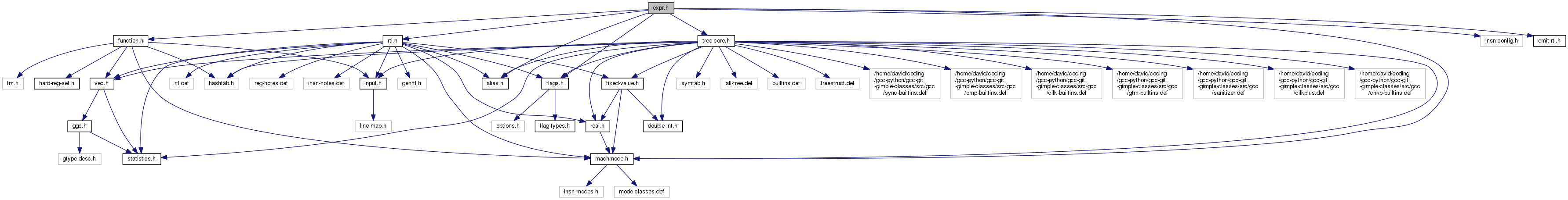

#include "function.h"

#include "rtl.h"

#include "flags.h"

#include "tree-core.h"

#include "machmode.h"

#include "insn-config.h"

#include "alias.h"

#include "emit-rtl.h"

Data Structures | |

| struct | args_size |

| struct | locate_and_pad_arg_data |

| struct | separate_ops |

Macros | |

| #define | NO_DEFER_POP (inhibit_defer_pop += 1) |

| #define | OK_DEFER_POP (inhibit_defer_pop -= 1) |

| #define | ADD_PARM_SIZE(TO, INC) |

| #define | SUB_PARM_SIZE(TO, DEC) |

| #define | ARGS_SIZE_TREE(SIZE) |

| #define | ARGS_SIZE_RTX(SIZE) |

| #define | memory_address(MODE, RTX) memory_address_addr_space ((MODE), (RTX), ADDR_SPACE_GENERIC) |

| #define | adjust_address(MEMREF, MODE, OFFSET) adjust_address_1 (MEMREF, MODE, OFFSET, 1, 1, 0, 0) |

| #define | adjust_address_nv(MEMREF, MODE, OFFSET) adjust_address_1 (MEMREF, MODE, OFFSET, 0, 1, 0, 0) |

| #define | adjust_bitfield_address(MEMREF, MODE, OFFSET) adjust_address_1 (MEMREF, MODE, OFFSET, 1, 1, 1, 0) |

| #define | adjust_bitfield_address_size(MEMREF, MODE, OFFSET, SIZE) adjust_address_1 (MEMREF, MODE, OFFSET, 1, 1, 1, SIZE) |

| #define | adjust_bitfield_address_nv(MEMREF, MODE, OFFSET) adjust_address_1 (MEMREF, MODE, OFFSET, 0, 1, 1, 0) |

| #define | adjust_automodify_address(MEMREF, MODE, ADDR, OFFSET) adjust_automodify_address_1 (MEMREF, MODE, ADDR, OFFSET, 1) |

| #define | adjust_automodify_address_nv(MEMREF, MODE, ADDR, OFFSET) adjust_automodify_address_1 (MEMREF, MODE, ADDR, OFFSET, 0) |

Typedefs | |

| typedef struct separate_ops * | sepops |

Enumerations | |

| enum | expand_modifier { EXPAND_NORMAL = 0, EXPAND_STACK_PARM, EXPAND_SUM, EXPAND_CONST_ADDRESS, EXPAND_INITIALIZER, EXPAND_WRITE, EXPAND_MEMORY } |

| enum | direction { none, upward, downward } |

| enum | optab_methods { OPTAB_DIRECT, OPTAB_LIB, OPTAB_WIDEN, OPTAB_LIB_WIDEN, OPTAB_MUST_WIDEN } |

| enum | block_op_methods { BLOCK_OP_NORMAL, BLOCK_OP_NO_LIBCALL, BLOCK_OP_CALL_PARM, BLOCK_OP_TAILCALL } |

| enum | save_level { SAVE_BLOCK, SAVE_FUNCTION, SAVE_NONLOCAL } |

Variables | |

| tree | block_clear_fn |

Macro Definition Documentation

| #define ADD_PARM_SIZE | ( | TO, | |

| INC | |||

| ) |

Add the value of the tree INC to the `struct args_size' TO.

Referenced by initialize_argument_information().

| #define adjust_address | ( | MEMREF, | |

| MODE, | |||

| OFFSET | |||

| ) | adjust_address_1 (MEMREF, MODE, OFFSET, 1, 1, 0, 0) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address offset by OFFSET bytes.

Referenced by apply_args_size(), move_by_pieces_1(), set_storage_via_setmem(), and sjlj_assign_call_site_values().

| #define adjust_address_nv | ( | MEMREF, | |

| MODE, | |||

| OFFSET | |||

| ) | adjust_address_1 (MEMREF, MODE, OFFSET, 0, 1, 0, 0) |

Likewise, but the reference is not required to be valid.

Referenced by assign_mem_slot(), assign_parm_setup_reg(), ceiling(), clear_storage(), distribute_and_simplify_rtx(), gen_lowpart_if_possible(), insert_restore(), replace_reg_with_saved_mem(), set_storage_via_setmem(), spill_failure(), and subreg_lowpart_offset().

| #define adjust_automodify_address | ( | MEMREF, | |

| MODE, | |||

| ADDR, | |||

| OFFSET | |||

| ) | adjust_automodify_address_1 (MEMREF, MODE, ADDR, OFFSET, 1) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address changed to ADDR, which is assumed to be increased by OFFSET bytes from MEMREF.

| #define adjust_automodify_address_nv | ( | MEMREF, | |

| MODE, | |||

| ADDR, | |||

| OFFSET | |||

| ) | adjust_automodify_address_1 (MEMREF, MODE, ADDR, OFFSET, 0) |

Likewise, but the reference is not required to be valid.

| #define adjust_bitfield_address | ( | MEMREF, | |

| MODE, | |||

| OFFSET | |||

| ) | adjust_address_1 (MEMREF, MODE, OFFSET, 1, 1, 1, 0) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address offset by OFFSET bytes. Assume that it's for a bitfield and conservatively drop the underlying object if we cannot be sure to stay within its bounds.

| #define adjust_bitfield_address_nv | ( | MEMREF, | |

| MODE, | |||

| OFFSET | |||

| ) | adjust_address_1 (MEMREF, MODE, OFFSET, 0, 1, 1, 0) |

Likewise, but the reference is not required to be valid.

| #define adjust_bitfield_address_size | ( | MEMREF, | |

| MODE, | |||

| OFFSET, | |||

| SIZE | |||

| ) | adjust_address_1 (MEMREF, MODE, OFFSET, 1, 1, 1, SIZE) |

As for adjust_bitfield_address, but specify that the width of BLKmode accesses is SIZE bytes.

| #define ARGS_SIZE_RTX | ( | SIZE | ) |

Convert the implicit sum in a `struct args_size' into an rtx.
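The "implicit sum" can be pictured as a constant part plus an optional variable part. The sketch below is a toy model, not GCC's definition: the type `args_size_model`, its field names, and both helper functions are hypothetical, and the variable part is modelled as a pointer to a run-time value rather than a tree.

```c
#include <stddef.h>

/* Toy model (hypothetical): a size is the sum of a compile-time
   constant and an optional variable part.  A NULL `var` means the
   size has no variable part. */
struct args_size_model {
    long constant;
    const long *var;
};

/* Add a constant increment; only the constant part changes. */
void add_constant_size(struct args_size_model *to, long inc)
{
    to->constant += inc;
}

/* Evaluate the implicit sum constant + var. */
long args_size_value(const struct args_size_model *size)
{
    return size->var ? size->constant + *size->var : size->constant;
}
```

Keeping the two parts separate lets purely constant sizes stay plain integers until a variable-sized argument appears.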

| #define ARGS_SIZE_TREE | ( | SIZE | ) |

| #define memory_address | ( | MODE, | |

| RTX | |||

| ) | memory_address_addr_space ((MODE), (RTX), ADDR_SPACE_GENERIC) |

Like memory_address_addr_space, except assume the memory address points to the generic named address space.

Referenced by expand_builtin_prefetch(), and expand_builtin_return_addr().

| #define NO_DEFER_POP (inhibit_defer_pop += 1) |

Prevent the compiler from deferring stack pops. See inhibit_defer_pop for more information.

Referenced by move_by_pieces_1().

| #define OK_DEFER_POP (inhibit_defer_pop -= 1) |

Allow the compiler to defer stack pops. See inhibit_defer_pop for more information.
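Because the two macros increment and decrement a counter, they nest: deferral is allowed again only once every NO_DEFER_POP has been matched by an OK_DEFER_POP. A minimal sketch, assuming a plain `int` stands in for inhibit_defer_pop; `defer_pop_allowed` and `allowed_inside_region` are hypothetical helpers for illustration.

```c
/* Stand-in for GCC's inhibit_defer_pop (assumption: a plain counter). */
int inhibit_defer_pop = 0;

#define NO_DEFER_POP (inhibit_defer_pop += 1)
#define OK_DEFER_POP (inhibit_defer_pop -= 1)

/* Hypothetical helper: pops may be deferred only when no enclosing
   region currently inhibits them. */
int defer_pop_allowed(void)
{
    return inhibit_defer_pop == 0;
}

/* Hypothetical caller: brackets a region that needs immediate pops and
   reports whether deferral was allowed inside it. */
int allowed_inside_region(void)
{
    int allowed;
    NO_DEFER_POP;
    allowed = defer_pop_allowed();
    OK_DEFER_POP;
    return allowed;
}
```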

| #define SUB_PARM_SIZE | ( | TO, | |

| DEC | |||

| ) |

Typedef Documentation

| typedef struct separate_ops * sepops |

This structure is used to pass around information about exploded unary, binary and trinary expressions between expand_expr_real_1 and friends.

Enumeration Type Documentation

| enum block_op_methods |

| enum direction |

| enum expand_modifier |

Definitions for code generation pass of GNU compiler. Copyright (C) 1987-2013 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see http://www.gnu.org/licenses/.

For inhibit_defer_pop

For XEXP, GEN_INT, rtx_code

For optimize_size

For host_integerp, tree_low_cst, fold_convert, size_binop, ssize_int, TREE_CODE, TYPE_SIZE, int_size_in_bytes,

For GET_MODE_BITSIZE, word_mode

This is the 4th arg to `expand_expr'. EXPAND_STACK_PARM means we are possibly expanding a call param onto the stack. EXPAND_SUM means it is ok to return a PLUS rtx or MULT rtx. EXPAND_INITIALIZER is similar but also record any labels on forced_labels. EXPAND_CONST_ADDRESS means it is ok to return a MEM whose address is a constant that is not a legitimate address. EXPAND_WRITE means we are only going to write to the resulting rtx. EXPAND_MEMORY means we are interested in a memory result, even if the memory is constant and we could have propagated a constant value.

| enum optab_methods |

Functions from optabs.c, commonly used, and without need for the optabs tables: Passed to expand_simple_binop and expand_binop to say which options to try to use if the requested operation can't be open-coded on the requisite mode. Either OPTAB_LIB or OPTAB_LIB_WIDEN says try using a library call. Either OPTAB_WIDEN or OPTAB_LIB_WIDEN says try using a wider mode. OPTAB_MUST_WIDEN says try widening and don't try anything else.

| enum save_level |

Function Documentation

| rtx adjust_address_1 | ( | rtx | memref, |

| enum machine_mode | mode, | ||

| HOST_WIDE_INT | offset, | ||

| int | validate, | ||

| int | adjust_address, | ||

| int | adjust_object, | ||

| HOST_WIDE_INT | size | ||

| ) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address offset by OFFSET bytes. If VALIDATE is nonzero, the memory address is forced to be valid. If ADJUST_ADDRESS is zero, OFFSET is only used to update MEM_ATTRS and the caller is responsible for adjusting MEMREF base register. If ADJUST_OBJECT is zero, the underlying object associated with the memory reference is left unchanged and the caller is responsible for dealing with it. Otherwise, if the new memory reference is outside the underlying object, even partially, then the object is dropped. SIZE, if nonzero, is the size of an access in cases where MODE has no inherent size.

VOIDmode means no mode change for change_address_1.

Take the size of non-BLKmode accesses from the mode.

If there are no changes, just return the original memory reference.

??? Prefer to create garbage instead of creating shared rtl. This may happen even if offset is nonzero – consider (plus (plus reg reg) const_int) – so do this always.

Convert a possibly large offset to a signed value within the range of the target address space.
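The conversion amounts to keeping the low bits of the offset and sign-extending them. A minimal sketch of that step, assuming the address width is passed in as a bit count; the function name `truncate_to_signed` is hypothetical and is not the helper GCC itself uses.

```c
#include <stdint.h>

/* Keep the low `bits` bits of `value` and sign-extend them
   (1 <= bits <= 64). */
int64_t truncate_to_signed(int64_t value, unsigned bits)
{
    if (bits >= 64)
        return value;
    uint64_t mask = (UINT64_C(1) << bits) - 1;
    uint64_t sign = UINT64_C(1) << (bits - 1);
    uint64_t low = (uint64_t)value & mask;
    /* XOR then subtract maps [0, 2^bits) onto
       [-2^(bits-1), 2^(bits-1)). */
    return (int64_t)((low ^ sign) - sign);
}
```

With a 16-bit address space, for example, an offset of 0xFFFF becomes -1 and 0x12345 becomes 0x2345.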

If MEMREF is a LO_SUM and the offset is within the alignment of the object, we can merge it into the LO_SUM.

If the address is a REG, change_address_1 rightfully returns memref, but this would destroy memref's MEM_ATTRS.

Conservatively drop the object if we don't know where we start from.

Compute the new values of the memory attributes due to this adjustment. We add the offsets and update the alignment.

Drop the object if the new left end is not within its bounds.

Compute the new alignment by taking the MIN of the alignment and the lowest-order set bit in OFFSET, but don't change the alignment if OFFSET is zero.
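The alignment rule can be sketched in a few lines. This assumes alignment is tracked in bits, OFFSET is in bytes, and a byte has 8 bits; the function name `adjusted_align_bits` is hypothetical.

```c
/* Alignment (in bits) known for an address that lies `offset` bytes
   away from an address aligned to `align_bits`.  Assumes 8 bits per
   byte. */
unsigned adjusted_align_bits(unsigned align_bits, long long offset)
{
    if (offset == 0)
        return align_bits;          /* zero offset: alignment unchanged */

    unsigned long long uoff = (unsigned long long)offset;
    /* Isolate the lowest-order set bit of the offset. */
    unsigned long long lowest = uoff & (~uoff + 1);

    /* Once lowest reaches align_bits, lowest * 8 cannot be the minimum;
       returning early also keeps the multiplication from overflowing. */
    if (lowest >= align_bits)
        return align_bits;

    unsigned long long offset_align = lowest * 8;
    return offset_align < align_bits ? (unsigned)offset_align : align_bits;
}
```

For a 64-bit aligned reference, an offset of 4 bytes leaves 32-bit alignment, while offsets of 8 or 16 bytes keep the full 64 bits.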

Drop the object if the new right end is not within its bounds.

??? The store_by_pieces machinery generates negative sizes, so don't assert for that here.

| rtx adjust_automodify_address_1 | ( | rtx | memref, |

| enum machine_mode | mode, | ||

| rtx | addr, | ||

| HOST_WIDE_INT | offset, | ||

| int | validate | ||

| ) |

Return a memory reference like MEMREF, but with its mode changed to MODE and its address changed to ADDR, which is assumed to be MEMREF offset by OFFSET bytes. If VALIDATE is nonzero, the memory address is forced to be valid.

| void adjust_stack | ( | rtx | ) |

Remove some bytes from the stack. An rtx says how many.

| rtx allocate_dynamic_stack_space | ( | rtx | size, |

| unsigned | size_align, | ||

| unsigned | required_align, | ||

| bool | cannot_accumulate | ||

| ) |

Allocate some space on the stack dynamically and return its address.

Return an rtx representing the address of an area of memory dynamically pushed on the stack.

Any required stack pointer alignment is preserved.

SIZE is an rtx representing the size of the area.

SIZE_ALIGN is the alignment (in bits) that we know SIZE has. This parameter may be zero. If so, a proper value will be extracted from SIZE if it is constant, otherwise BITS_PER_UNIT will be assumed.

REQUIRED_ALIGN is the alignment (in bits) required for the region of memory.

If CANNOT_ACCUMULATE is set to TRUE, the caller guarantees that the stack space allocated by the generated code cannot be added with itself in the course of the execution of the function. It is always safe to pass FALSE here and the following criterion is sufficient in order to pass TRUE: every path in the CFG that starts at the allocation point and loops to it executes the associated deallocation code.

If we're asking for zero bytes, it doesn't matter what we point to since we can't dereference it. But return a reasonable address anyway.

Otherwise, show we're calling alloca or equivalent.

If stack usage info is requested, look into the size we are passed. We need to do so this early to avoid the obfuscation that may be introduced later by the various alignment operations.

Look into the last emitted insn and see if we can deduce something for the register.

If the size is not constant, we can't say anything.

Ensure the size is in the proper mode.

Adjust SIZE_ALIGN, if needed.

Watch out for overflow truncating to "unsigned".

We can't attempt to minimize alignment necessary, because we don't know the final value of preferred_stack_boundary yet while executing this code.

We will need to ensure that the address we return is aligned to REQUIRED_ALIGN. If STACK_DYNAMIC_OFFSET is defined, we don't always know its final value at this point in the compilation (it might depend on the size of the outgoing parameter lists, for example), so we must align the value to be returned in that case. (Note that STACK_DYNAMIC_OFFSET will have a default nonzero value if STACK_POINTER_OFFSET or ACCUMULATE_OUTGOING_ARGS are defined). We must also do an alignment operation on the returned value if the stack pointer alignment is less strict than REQUIRED_ALIGN. If we have to align, we must leave space in SIZE for the hole that might result from the alignment operation.

??? STACK_POINTER_OFFSET is always defined now.

Round the size to a multiple of the required stack alignment. Since the stack is presumed to be rounded before this allocation, this will maintain the required alignment. If the stack grows downward, we could save an insn by subtracting SIZE from the stack pointer and then aligning the stack pointer. The problem with this is that the stack pointer may be unaligned between the execution of the subtraction and alignment insns and some machines do not allow this. Even on those that do, some signal handlers malfunction if a signal should occur between those insns. Since this is an extremely rare event, we have no reliable way of knowing which systems have this problem. So we avoid even momentarily mis-aligning the stack.
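Rounding a size up to a multiple of the alignment is a ceiling division followed by a multiplication. A minimal sketch in plain integer arithmetic; the name `round_up_size` is hypothetical, and the addition is assumed not to overflow.

```c
/* Round `size` up to the next multiple of `align` (align > 0).
   Adding align - 1 before the truncating division yields the ceiling
   of size / align; the caller must ensure the sum does not overflow. */
unsigned long round_up_size(unsigned long size, unsigned long align)
{
    return (size + align - 1) / align * align;
}
```

A request for 17 bytes with a 16-byte alignment is therefore padded to 32 bytes, while 16 bytes stays at 16.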

The size is supposed to be fully adjusted at this point so record it if stack usage info is requested.

??? This is gross but the only safe stance in the absence of stack usage oriented flow analysis.

If we are splitting the stack, we need to ask the backend whether there is enough room on the current stack. If there isn't, or if the backend doesn't know how to tell, then we need to call a function to allocate memory in some other way. This memory will be released when we release the current stack segment. The effect is that stack allocation becomes less efficient, but at least it doesn't cause a stack overflow.

The __morestack_allocate_stack_space function will allocate memory using malloc. If the alignment of the memory returned by malloc does not meet REQUIRED_ALIGN, we increase SIZE to make sure we allocate enough space.

We ought to be called always on the toplevel and stack ought to be aligned properly.

If needed, check that we have the required amount of stack. Take into account what has already been checked.

Don't let anti_adjust_stack emit notes.

Perform the required allocation from the stack. Some systems do this differently than simply incrementing/decrementing from the stack pointer, such as acquiring the space by calling malloc().

Check stack bounds if necessary.

Even if size is constant, don't modify stack_pointer_delta.

The constant size alloca should preserve crtl->preferred_stack_boundary alignment.

Finish up the split stack handling.

CEIL_DIV_EXPR needs to worry about the addition overflowing, but we know it can't. So add ourselves and then do TRUNC_DIV_EXPR.

Now that we've committed to a return value, mark its alignment.

Record the new stack level for nonlocal gotos.

References BITS_PER_UNIT, and PREFERRED_STACK_BOUNDARY.

Referenced by initialize_argument_information().

| void anti_adjust_stack | ( | rtx | ) |

Add some bytes to the stack. An rtx says how many.

Add some bytes to the stack while probing it. An rtx says how many.

| rtx assemble_static_space | ( | unsigned | HOST_WIDE_INT | ) |

Referenced by set_cfun().

| rtx assemble_trampoline_template | ( | void | ) |

Assemble the static constant template for function entry trampolines.

By default, put trampoline templates in read-only data section.

Write the assembler code to define one.

Record the rtl to refer to it.

| tree build_libfunc_function | ( | const char * | ) |

Build a decl for a libfunc named NAME.

| rtx builtin_strncpy_read_str | ( | void * | data, |

| HOST_WIDE_INT | offset, | ||

| enum machine_mode | mode | ||

| ) |

Callback routine for store_by_pieces. Read GET_MODE_BITSIZE (MODE) bytes from constant string DATA + OFFSET and return it as target constant.

| int can_store_by_pieces | ( | unsigned HOST_WIDE_INT | len, |

| rtx(*)(void *, HOST_WIDE_INT, enum machine_mode) | constfun, | ||

| void * | constfundata, | ||

| unsigned int | align, | ||

| bool | memsetp | ||

| ) |

Return nonzero if it is desirable to store LEN bytes generated by CONSTFUN with several move instructions by store_by_pieces function. CONSTFUNDATA is a pointer which will be passed as argument in every CONSTFUN call. ALIGN is maximum alignment we can assume. MEMSETP is true if this is a real memset/bzero, not a copy of a const string.

Determine whether the LEN bytes generated by CONSTFUN can be stored to memory using several move instructions. CONSTFUNDATA is a pointer which will be passed as argument in every CONSTFUN call. ALIGN is maximum alignment we can assume. MEMSETP is true if this is a memset operation and false if it's a copy of a constant string. Return nonzero if a call to store_by_pieces should succeed.

cst is set but not used if LEGITIMATE_CONSTANT doesn't use it.

We would first store what we can in the largest integer mode, then go to successively smaller modes.
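The largest-first strategy can be illustrated by counting how many moves a given length needs. The sketch assumes move widths of 8, 4, 2 and 1 bytes and a caller-supplied cap of at least 1 byte; the function name `count_piece_moves` and the width table are hypothetical, not taken from GCC.

```c
#include <stddef.h>

/* Number of moves needed to cover `len` bytes when each move is 8, 4,
   2 or 1 bytes wide and no wider than `max_width` (max_width >= 1). */
unsigned count_piece_moves(size_t len, size_t max_width)
{
    static const size_t widths[] = { 8, 4, 2, 1 };
    unsigned moves = 0;

    for (size_t i = 0; i < sizeof widths / sizeof widths[0]; i++) {
        if (widths[i] > max_width)
            continue;                    /* too wide for this target */
        moves += (unsigned)(len / widths[i]);
        len %= widths[i];                /* remainder goes to narrower moves */
    }
    return moves;
}
```

Fifteen bytes with 8-byte moves available take four moves (8 + 4 + 2 + 1); capping the width at 4 bytes raises that to five.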

The code above should have handled everything.

References const0_rtx.

Return a memory reference like MEMREF, but with its mode changed to MODE and its address changed to ADDR. (VOIDmode means don't change the mode. NULL for ADDR means don't change the address.)

| unsigned HOST_WIDE_INT choose_multiplier | ( | unsigned HOST_WIDE_INT | d, |

| int | n, | ||

| int | precision, | ||

| unsigned HOST_WIDE_INT * | multiplier_ptr, | ||

| int * | post_shift_ptr, | ||

| int * | lgup_ptr | ||

| ) |

Choose a minimal N + 1 bit approximation to 1/D that can be used to replace division by D, and put the least significant N bits of the result in *MULTIPLIER_PTR and return the most significant bit.

The width of operations is N (should be <= HOST_BITS_PER_WIDE_INT), the needed precision is in PRECISION (should be <= N).

PRECISION should be as small as possible so this function can choose multiplier more freely.

The rounded-up logarithm of D is placed in *lgup_ptr. A shift count that is to be used for a final right shift is placed in *POST_SHIFT_PTR.

Using this function, x/D will be equal to (x * m) >> (*POST_SHIFT_PTR), where m is the full HOST_BITS_PER_WIDE_INT + 1 bit multiplier.

lgup = ceil(log2(divisor));

We could handle this with some effort, but this case is much better handled directly with a scc insn, so rely on caller using that.

mlow = 2^(N + lgup)/d

mhigh = (2^(N + lgup) + 2^(N + lgup - precision))/d

Assert that mlow < mhigh.

If precision == N, then mlow, mhigh exceed 2^N (but they do not exceed 2^(N+1)).

Reduce to lowest terms.
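The steps above can be sketched for a fixed width. This is an illustration under stated assumptions, not GCC's implementation: N and PRECISION are both fixed at 16, D must be at least 1, the full 17-bit multiplier is returned in one integer instead of being split into low bits and a top bit, and all function names are hypothetical. The sketch shifts the product by 16 + post_shift, on the assumption that the post shift applies to the high half of the product.

```c
#include <stdint.h>

/* Smallest lg such that 2^lg >= d, for d >= 1. */
static int ceil_log2_u32(uint32_t d)
{
    int lg = 0;
    while ((UINT32_C(1) << lg) < d)
        lg++;
    return lg;
}

/* Returns the full 17-bit multiplier for dividing 16-bit values by d
   (d >= 1) and stores the final right-shift count in *post_shift. */
uint32_t choose_multiplier16(uint16_t d, int *post_shift)
{
    const int n = 16, precision = 16;
    int lgup = ceil_log2_u32(d);

    /* mlow = 2^(N + lgup) / d */
    uint64_t mlow = (UINT64_C(1) << (n + lgup)) / d;
    /* mhigh = (2^(N + lgup) + 2^(N + lgup - precision)) / d */
    uint64_t mhigh = ((UINT64_C(1) << (n + lgup))
                      + (UINT64_C(1) << (n + lgup - precision))) / d;

    /* Reduce to lowest terms. */
    int shift = lgup;
    while (shift > 0 && (mlow >> 1) < (mhigh >> 1)) {
        mlow >>= 1;
        mhigh >>= 1;
        shift--;
    }
    *post_shift = shift;
    return (uint32_t)mhigh;
}

/* x / d computed as one multiplication and one shift. */
uint16_t divide_by_multiply(uint16_t x, uint32_t m, int post_shift)
{
    return (uint16_t)(((uint64_t)x * m) >> (16 + post_shift));
}

/* Compare against the / operator for every 16-bit x. */
int multiplier_matches_division(uint16_t d)
{
    int post_shift;
    uint32_t m = choose_multiplier16(d, &post_shift);
    for (uint32_t x = 0; x <= UINT16_MAX; x++)
        if ((uint32_t)divide_by_multiply((uint16_t)x, m, post_shift) != x / d)
            return 0;
    return 1;
}
```

For d = 3 the sketch yields the multiplier 43691 with a post shift of 1; for d = 7 the multiplier is 74899, which needs the seventeenth bit.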

| void clear_pending_stack_adjust | ( | void | ) |

When exiting from function, if safe, clear out any pending stack adjust so the adjustment won't get done.

Note, if the current function calls alloca, then it must have a frame pointer regardless of the value of flag_omit_frame_pointer.

Write zeros through the storage of OBJECT. If OBJECT has BLKmode, SIZE is its length in bytes.

| rtx clear_storage_hints | ( | rtx | object, |

| rtx | size, | ||

| enum block_op_methods | method, | ||

| unsigned int | expected_align, | ||

| HOST_WIDE_INT | expected_size | ||

| ) |

Write zeros through the storage of OBJECT. If OBJECT has BLKmode, SIZE is its length in bytes.

If OBJECT is not BLKmode and SIZE is the same size as its mode, just move a zero. Otherwise, do this a piece at a time.

References byte_mode, create_convert_operand_from(), create_convert_operand_to(), create_fixed_operand(), create_integer_operand(), gcc_assert, insn_data, and maybe_expand_insn().

Convert an rtx to MODE from OLDMODE and return the result.

Emit some rtl insns to move data between rtx's, converting machine modes. Both modes must be floating or both fixed.

Convert an rtx to specified machine mode and return the result.

Like copy_to_reg but always make the reg the specified mode MODE.

Copy given rtx to given temp reg and return that.

| rtx default_expand_builtin | ( | tree | exp, |

| rtx | target, | ||

| rtx | subtarget, | ||

| enum machine_mode | mode, | ||

| int | ignore | ||

| ) |

Default target-specific builtin expander that does nothing.

References NULL, NULL_TREE, and validate_arg().

| void discard_pending_stack_adjust | ( | void | ) |

Discard any pending stack adjustment.

Discard any pending stack adjustment. This avoids relying on the RTL optimizers to remove useless adjustments when we know the stack pointer value is dead.

References cfun, discard_pending_stack_adjust(), and EXIT_IGNORE_STACK.

Referenced by discard_pending_stack_adjust().

| void do_compare_rtx_and_jump | ( | rtx | op0, |

| rtx | op1, | ||

| enum rtx_code | code, | ||

| int | unsignedp, | ||

| enum machine_mode | mode, | ||

| rtx | size, | ||

| rtx | if_false_label, | ||

| rtx | if_true_label, | ||

| int | prob | ||

| ) |

Like do_compare_and_jump but expects the values to compare as two rtx's. The decision as to signed or unsigned comparison must be made by the caller.

If MODE is BLKmode, SIZE is an RTX giving the size of the objects being compared.

Reverse the comparison if that is safe and we want to jump if it is false. Also convert to the reverse comparison if the target can implement it.

Canonicalize to UNORDERED for the libcall.

If one operand is constant, make it the second one. Only do this if the other operand is not constant as well.

Never split ORDERED and UNORDERED. These must be implemented.

Split a floating-point comparison if we can jump on other conditions... ... or if there is no libcall for it.

If there are no NaNs, the first comparison should always fall through.

If we only jump if true, just bypass the second jump.

References const0_rtx, CONST0_RTX, and emit_jump().

Referenced by do_jump_by_parts_zero_rtx(), and expand_return().

Generate code to evaluate EXP and jump to IF_FALSE_LABEL if the result is zero, or IF_TRUE_LABEL if the result is one.

| void do_jump_1 | ( | enum tree_code | code, |

| tree | op0, | ||

| tree | op1, | ||

| rtx | if_false_label, | ||

| rtx | if_true_label, | ||

| int | prob | ||

| ) |

Subroutine of do_jump, dealing with exploded comparisons of the type OP0 CODE OP1. IF_FALSE_LABEL and IF_TRUE_LABEL are as in do_jump. PROB is the probability of jumping to if_true_label, or -1 if unknown.

Spread the probability that the expression is false evenly between the two conditions. So the first condition is false half the total probability of being false. The second condition is false the other half of the total probability of being false, so its jump has a false probability of half the total, relative to the probability we reached it (i.e. the first condition was true).

Get the probability that each jump below is true.

Spread the probability evenly between the two conditions. So the first condition has half the total probability of being true. The second condition has the other half of the total probability, so its jump has a probability of half the total, relative to the probability we reached it (i.e. the first condition was false).
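The first of the two cases, where the "false" probability is split between two conditions that must both hold, can be worked through numerically. The sketch uses floating-point probabilities in [0, 1] rather than GCC's scaled integers; the struct and function names are hypothetical.

```c
/* Jump probabilities for an expression that is true only when two
   conditions both hold. */
struct jump_probs {
    double first_true;   /* probability that the first condition is true */
    double second_true;  /* probability that the second is true, given
                            that the first was true */
};

struct jump_probs split_and_probability(double prob_true)
{
    double false_total = 1.0 - prob_true;

    /* The first condition takes half of the total "false" probability. */
    double first_false = false_total / 2.0;

    /* The second jump is reached only when the first condition held, so
       its half is rescaled by the probability of reaching it. */
    double second_false = (false_total / 2.0) / (1.0 - first_false);

    struct jump_probs p = { 1.0 - first_false, 1.0 - second_false };
    return p;
}
```

With an overall probability of 0.5, the first condition is true with probability 0.75 and the second with probability 2/3 once reached; their product is again 0.5.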

| void do_pending_stack_adjust | ( | void | ) |

Pop any previously-pushed arguments that have not been popped yet.

Referenced by build_libfunc_function(), emit_jump(), expand_computed_goto(), expand_float(), and have_sub2_insn().

Return an rtx like arg but sans any constant terms. Returns the original rtx if it has no constant terms. The constant terms are added and stored via a second arg.

| rtx emit_block_move_hints | ( | rtx | x, |

| rtx | y, | ||

| rtx | size, | ||

| enum block_op_methods | method, | ||

| unsigned int | expected_align, | ||

| HOST_WIDE_INT | expected_size | ||

| ) |

Emit code to move a block Y to a block X. This may be done with string-move instructions, with multiple scalar move instructions, or with a library call.

Both X and Y must be MEM rtx's (perhaps inside VOLATILE) with mode BLKmode. SIZE is an rtx that says how long they are. ALIGN is the maximum alignment we can assume they have. METHOD describes what kind of copy this is, and what mechanisms may be used.

Return the address of the new block, if memcpy is called and returns it, 0 otherwise.

Make inhibit_defer_pop nonzero around the library call to force it to pop the arguments right away.

Make sure we've got BLKmode addresses; store_one_arg can decide that block copy is more efficient for other large modes, e.g. DCmode. Set MEM_SIZE as appropriate for this block copy. The main place this can be incorrect is coming from __builtin_memcpy.

Since x and y are passed to a libcall, mark the corresponding tree EXPR as addressable.

| void emit_cmp_and_jump_insns | ( | rtx | x, |

| rtx | y, | ||

| enum rtx_code | comparison, | ||

| rtx | size, | ||

| enum machine_mode | mode, | ||

| int | unsignedp, | ||

| rtx | label, | ||

| int | prob | ||

| ) |

Emit a pair of rtl insns to compare two rtx's and to jump to a label if the comparison is true.

Generate code to compare X with Y so that the condition codes are set and to jump to LABEL if the condition is true. If X is a constant and Y is not a constant, then the comparison is swapped to ensure that the comparison RTL has the canonical form.

UNSIGNEDP nonzero says that X and Y are unsigned; this matters if they need to be widened. UNSIGNEDP is also used to select the proper branch condition code.

If X and Y have mode BLKmode, then SIZE specifies the size of both X and Y.

MODE is the mode of the inputs (in case they are const_int).

COMPARISON is the rtl operator to compare with (EQ, NE, GT, etc.). It will be potentially converted into an unsigned variant based on UNSIGNEDP to select a proper jump instruction.

PROB is the probability of jumping to LABEL.

Swap operands and condition to ensure canonical RTL.

If OP0 is still a constant, then both X and Y must be constants or the opposite comparison is not supported. Force X into a register to create canonical RTL.
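Swapping the operands is only valid if the comparison code is swapped with them. A minimal sketch on plain integers, assuming a reduced set of comparison codes named after the rtl operators mentioned above; the enum and both functions are hypothetical stand-ins, not GCC's own definitions.

```c
/* A few comparison codes, named after the rtl operators in the text. */
enum cmp_code { EQ, NE, LT, GT, LE, GE };

/* Code to use after the two operands have been exchanged. */
enum cmp_code swap_condition_sketch(enum cmp_code code)
{
    switch (code) {
    case LT: return GT;
    case GT: return LT;
    case LE: return GE;
    case GE: return LE;
    default: return code;   /* EQ and NE are symmetric */
    }
}

/* Evaluate `x code y` on plain integers. */
int compare_values(long x, enum cmp_code code, long y)
{
    switch (code) {
    case EQ: return x == y;
    case NE: return x != y;
    case LT: return x < y;
    case GT: return x > y;
    case LE: return x <= y;
    case GE: return x >= y;
    }
    return 0;
}
```

A comparison such as `3 LT y` with a constant first operand can then be rewritten as `y GT 3` without changing its result.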

Referenced by expand_float(), have_sub2_insn(), and node_has_low_bound().

| rtx emit_conditional_add | ( | rtx | target, |

| enum rtx_code | code, | ||

| rtx | op0, | ||

| rtx | op1, | ||

| enum machine_mode | cmode, | ||

| rtx | op2, | ||

| rtx | op3, | ||

| enum machine_mode | mode, | ||

| int | unsignedp | ||

| ) |

Emit a conditional addition instruction if the machine supports one for that condition and machine mode.

OP0 and OP1 are the operands that should be compared using CODE. CMODE is the mode to use should they be constants. If it is VOIDmode, they cannot both be constants.

OP2 should be stored in TARGET if the comparison is false, otherwise OP2+OP3 should be stored there. MODE is the mode to use should they be constants. If it is VOIDmode, they cannot both be constants.

The result is either TARGET (perhaps modified) or NULL_RTX if the operation is not supported.

If one operand is constant, make it the second one. Only do this if the other operand is not constant as well.

get_condition will prefer to generate LT and GT even if the old comparison was against zero, so undo that canonicalization here since comparisons against zero are cheaper.

We can get const0_rtx or const_true_rtx in some circumstances. Just return NULL and let the caller figure out how best to deal with this situation.

Load a BLKmode value into non-consecutive registers represented by a PARALLEL.

Move a non-consecutive group of registers represented by a PARALLEL into a non-consecutive group of registers represented by a PARALLEL.

Move a group of registers represented by a PARALLEL into pseudos.

Store a BLKmode value from non-consecutive registers represented by a PARALLEL.

| void emit_indirect_jump | ( | rtx | ) |

Generate code to indirectly jump to a location given in the rtx LOC.

Referenced by emit_jump().

Emit code to make a call to a constant function or a library call.

| void emit_push_insn | ( | rtx | x, |

| enum machine_mode | mode, | ||

| tree | type, | ||

| rtx | size, | ||

| unsigned int | align, | ||

| int | partial, | ||

| rtx | reg, | ||

| int | extra, | ||

| rtx | args_addr, | ||

| rtx | args_so_far, | ||

| int | reg_parm_stack_space, | ||

| rtx | alignment_pad | ||

| ) |

Generate code to push something onto the stack, given its mode and type.

Generate code to push X onto the stack, assuming it has mode MODE and type TYPE. MODE is redundant except when X is a CONST_INT (since they don't carry mode info). SIZE is an rtx for the size of data to be copied (in bytes), needed only if X is BLKmode.

ALIGN (in bits) is maximum alignment we can assume.

If PARTIAL and REG are both nonzero, then copy that many of the first bytes of X into registers starting with REG, and push the rest of X. The amount of space pushed is decreased by PARTIAL bytes. REG must be a hard register in this case. If REG is zero but PARTIAL is not, take all other actions for an argument partially in registers, but do not actually load any registers.

EXTRA is the amount in bytes of extra space to leave next to this arg. This is ignored if an argument block has already been allocated.

On a machine that lacks real push insns, ARGS_ADDR is the address of the bottom of the argument block for this call. We use indexing off there to store the arg. On machines with push insns, ARGS_ADDR is 0 when an argument block has not been preallocated.

ARGS_SO_FAR is the size of args previously pushed for this call.

REG_PARM_STACK_SPACE is nonzero if functions require stack space for arguments passed in registers. If nonzero, it will be the number of bytes required.

Decide where to pad the argument: `downward' for below, `upward' for above, or `none' for don't pad it. Default is below for small data on big-endian machines; else above.
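The default rule can be written as a small decision function. The sketch reuses the `direction` enumerators listed on this page and assumes that "small" means narrower than the target's parameter boundary; the function name and both size parameters are hypothetical.

```c
#include <stdbool.h>

enum direction { none, upward, downward };

/* Default padding choice: pad below (downward) for small data on
   big-endian machines, otherwise pad above (upward).
   size_bits is the size of the argument; parm_boundary_bits is an
   assumed stand-in for the target's parameter boundary. */
enum direction default_arg_padding(bool big_endian,
                                   unsigned size_bits,
                                   unsigned parm_boundary_bits)
{
    if (big_endian && size_bits < parm_boundary_bits)
        return downward;
    return upward;
}
```

An 8-bit argument on a big-endian target with a 32-bit boundary is padded downward; the same argument on a little-endian target is padded upward.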

Invert direction if stack is post-decrement. FIXME: why?

Copy a block into the stack, entirely or partially.

A value is to be stored in an insufficiently aligned stack slot; copy via a suitably aligned slot if necessary.

USED is now the # of bytes we need not copy to the stack because registers will take care of them.

If the partial register-part of the arg counts in its stack size, skip the part of stack space corresponding to the registers. Otherwise, start copying to the beginning of the stack space, by setting SKIP to 0.

Otherwise make space on the stack and copy the data to the address of that space.

Deduct words put into registers from the size we must copy.

Get the address of the stack space.

In this case, we do not deal with EXTRA separately. A single stack adjust will do.

If the source is referenced relative to the stack pointer, copy it to another register to stabilize it. We do not need to do this if we know that we won't be changing sp.

We do *not* set_mem_attributes here, because incoming arguments may overlap with sibling call outgoing arguments and we cannot allow reordering of reads from function arguments with stores to outgoing arguments of sibling calls. We do, however, want to record the alignment of the stack slot.

ALIGN may well be better aligned than TYPE, e.g. due to PARM_BOUNDARY. Assume the caller isn't lying.

Scalar partly in registers.

Number of bytes at the start of the argument that we must make space for but need not store.

Push padding now if padding above and stack grows down, or if padding below and stack grows up. But if space already allocated, this has already been done.

If we make space by pushing it, we might as well push the real data. Otherwise, we can leave OFFSET nonzero and leave the space uninitialized.

Now NOT_STACK gets the number of words that we don't need to allocate on the stack. Convert OFFSET to words too.

If the partial register-part of the arg counts in its stack size, skip the part of stack space corresponding to the registers. Otherwise, start copying to the beginning of the stack space, by setting SKIP to 0.

If X is a hard register in a non-integer mode, copy it into a pseudo; SUBREGs of such registers are not allowed.

Loop over all the words allocated on the stack for this arg.

We can do it by words, because any scalar bigger than a word has a size a multiple of a word.

Push padding now if padding above and stack grows down, or if padding below and stack grows up. But if space already allocated, this has already been done.

We do *not* set_mem_attributes here, because incoming arguments may overlap with sibling call outgoing arguments and we cannot allow reordering of reads from function arguments with stores to outgoing arguments of sibling calls. We do, however, want to record the alignment of the stack slot.

ALIGN may well be better aligned than TYPE, e.g. due to PARM_BOUNDARY. Assume the caller isn't lying.

If part should go in registers, copy that part into the appropriate registers. Do this now, at the end, since mem-to-mem copies above may do function calls.

Handle calls that pass values in multiple non-contiguous locations. The Irix 6 ABI has examples of this.

References emit_group_load(), gcc_assert, GET_CODE, move_block_to_reg(), and REGNO.

| void emit_stack_probe | ( | rtx | ) |

Emit one stack probe at ADDRESS, an address within the stack.

| void emit_stack_restore | ( | enum | save_level, |

| rtx | |||

| ) |

Restore the stack pointer from a save area of the specified level.

| void emit_stack_save | ( | enum | save_level, |

| rtx * | |||

| ) |

Save the stack pointer at the specified level.

| rtx emit_store_flag | ( | rtx | target, |

| enum rtx_code | code, | ||

| rtx | op0, | ||

| rtx | op1, | ||

| enum machine_mode | mode, | ||

| int | unsignedp, | ||

| int | normalizep | ||

| ) |

Emit a store-flag operation.

Emit a store-flags instruction for comparison CODE on OP0 and OP1 and storing in TARGET. Normally return TARGET. Return 0 if that cannot be done.

MODE is the mode to use for OP0 and OP1 should they be CONST_INTs. If it is VOIDmode, they cannot both be CONST_INT.

UNSIGNEDP is for the case where we have to widen the operands to perform the operation. It says to use zero-extension.

NORMALIZEP is 1 if we should convert the result to be either zero or one. NORMALIZEP is -1 if we should convert the result to be either zero or -1. If NORMALIZEP is zero, the result will be left "raw" out of the scc insn.
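The three NORMALIZEP settings can be modelled in plain C. This is only an illustrative sketch: `normalize_flag` is a hypothetical helper, not a GCC function, and it assumes that a "raw" flag is any value that is nonzero when the comparison holds.

```c
#include <stdint.h>

/* Hypothetical helper (not part of GCC): models what the three
   NORMALIZEP settings mean for a raw flag value, where any nonzero
   value stands for "true".  */
static int32_t normalize_flag(int32_t raw, int normalizep)
{
    if (normalizep == 1)
        return raw != 0;        /* result is 0 or 1 */
    if (normalizep == -1)
        return -(raw != 0);     /* result is 0 or -1 */
    return raw;                 /* normalizep == 0: left as produced */
}
```

With a raw value of 0x80, the helper returns 1, -1, or 0x80 for NORMALIZEP equal to 1, -1, or 0 respectively.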

If we reached here, we can't do this with an scc insn; however, there are some comparisons that can be done in other ways. Don't do any of these cases if branches are very cheap.

See what we need to return. We can only return a 1, -1, or the sign bit.

If optimizing, use different pseudo registers for each insn, instead of reusing the same pseudo. This leads to better CSE, but slows down the compiler, since there are more pseudos.

For floating-point comparisons, try the reverse comparison or try changing the "orderedness" of the comparison.

For the reverse comparison, use either an addition or a XOR.

Cannot split ORDERED and UNORDERED, only try the above trick.

If there are no NaNs, the first comparison should always fall through.

Effectively change the comparison to the other one. The remaining tricks only apply to integer comparisons.

If this is an equality comparison of integers, we can try to exclusive-or (or subtract) the two operands and use a recursive call to try the comparison with zero. Don't do any of these cases if branches are very cheap.

For integer comparisons, try the reverse comparison. However, for small X and if we'd have anyway to extend, implementing "X != 0" as "-(int)X >> 31" is still cheaper than inverting "(int)X == 0".

Again, for the reverse comparison, use either an addition or a XOR.

Some other cases we can do are EQ, NE, LE, and GT comparisons with the constant zero. Reject all other comparisons at this point. Only do LE and GT if branches are expensive since they are expensive on 2-operand machines.

Try to put the result of the comparison in the sign bit. Assume we can't do the necessary operation below.

To see if A <= 0, compute (A | (A - 1)). A <= 0 iff that result has the sign bit set.

This is destructive, so SUBTARGET can't be OP0.
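The A <= 0 test can be checked in portable C. The sketch below assumes a 32-bit mode and uses unsigned arithmetic so that the subtraction wraps instead of overflowing; `le_zero` is a hypothetical name used only for illustration.

```c
#include <stdint.h>

/* Returns 1 when a <= 0, using only OR, subtract, and a shift.
   Unsigned arithmetic keeps the subtraction well defined for
   INT32_MIN and for 0 (where u - 1 wraps to all ones).  */
static int le_zero(int32_t a)
{
    uint32_t u = (uint32_t)a;
    uint32_t t = u | (u - 1u);   /* sign bit set iff a <= 0 */
    return (int)(t >> 31);       /* move the sign bit down to bit 0 */
}
```

For a == 0 the subtraction yields all ones, which sets the sign bit; for a > 0 neither operand of the OR has the sign bit set.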

To see if A > 0, compute (((signed) A) >> BITS) - A, where BITS is the number of bits in the mode of OP0, minus one. The result has the sign bit set iff A > 0.
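A small C sketch of the A > 0 test follows. It is written with an arithmetic right shift by BITS, which is the form for which the identity holds, and it assumes a 32-bit mode. Because right-shifting a negative value is implementation-defined in C, the shift is emulated with unsigned arithmetic; `gt_zero` is a hypothetical name.

```c
#include <stdint.h>

/* Returns 1 when a > 0.  'sign' emulates the arithmetic shift
   a >> 31: all ones when a is negative, zero otherwise.  */
static int gt_zero(int32_t a)
{
    uint32_t u = (uint32_t)a;
    uint32_t sign = 0u - (u >> 31);  /* 0xFFFFFFFF if a < 0, else 0 */
    uint32_t t = sign - u;           /* sign bit set iff a > 0 */
    return (int)(t >> 31);
}
```

For positive a the result is 0 - a, which is negative; for negative a it is -1 - a, which is non-negative; for zero it is zero.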

For EQ or NE, one way to do the comparison is to apply an operation that converts the operand into a positive number if it is nonzero or zero if it was originally zero. Then, for EQ, we subtract 1 and for NE we negate. This puts the result in the sign bit. Then we normalize with a shift, if needed.

Two operations that can do the above actions are ABS and FFS, so try them. If that doesn't work, and MODE is smaller than a full word, we can use zero-extension to the wider mode (an unsigned conversion) as the operation.

Note that ABS doesn't yield a positive number for INT_MIN, but that is compensated by the subsequent overflow when subtracting one / negating.

If we couldn't do it that way, for NE we can "or" the two's complement of the value with itself. For EQ, we take the one's complement of that "or", which is an extra insn, so we only handle EQ if branches are expensive.
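The EQ/NE tricks described above can be sketched in C for a 32-bit mode. The function names are hypothetical, and unsigned arithmetic stands in for the wrapping behaviour of the machine instructions, including the INT_MIN case for ABS.

```c
#include <stdint.h>

/* ABS-based EQ: |x| - 1 has the sign bit set only when x == 0.
   For INT32_MIN, the wrapped "ABS" is 0x80000000, and subtracting
   one clears the sign bit again, so the answer is still correct.  */
static int eq_zero_abs(int32_t x)
{
    uint32_t u = (uint32_t)x;
    uint32_t a = (x < 0) ? 0u - u : u;   /* ABS with wraparound */
    return (int)((a - 1u) >> 31);
}

/* NE: "or" the value with its two's complement; the sign bit of
   (x | -x) is set iff x != 0.  */
static int ne_zero_or(int32_t x)
{
    uint32_t u = (uint32_t)x;
    return (int)((u | (0u - u)) >> 31);
}

/* EQ: one's complement of the same "or" (one extra operation).  */
static int eq_zero_or(int32_t x)
{
    uint32_t u = (uint32_t)x;
    return (int)((~(u | (0u - u))) >> 31);
}
```

The final shift by 31 plays the role of the normalizing shift mentioned in the text.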

Referenced by expand_mult_highpart_adjust().

| rtx emit_store_flag_force | ( | rtx | target, |

| enum rtx_code | code, | ||

| rtx | op0, | ||

| rtx | op1, | ||

| enum machine_mode | mode, | ||

| int | unsignedp, | ||

| int | normalizep | ||

| ) |

Like emit_store_flag, but always succeeds.

First see if emit_store_flag can do the job.

If this failed, we have to do this with set/compare/jump/set code. For foo != 0, if foo is in OP0, just replace it with 1 if nonzero.
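As a rough C analogue of the set/compare/jump/set fallback, the sketch below hard-codes a signed less-than comparison. The function name is hypothetical, and the ordering of the steps (set the "true" value, jump over the second set when the comparison holds) is an assumption made for illustration rather than a statement about the exact RTL emitted.

```c
/* Hypothetical model of the fallback for CODE == LT.  */
static int store_flag_by_jump(int op0, int op1)
{
    int target = 1;          /* set: assume the comparison holds */
    if (op0 < op1)           /* compare */
        goto done;           /* jump over the second set */
    target = 0;              /* set: the comparison failed */
done:
    return target;
}
```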

Jump in the right direction if the target cannot implement CODE but can jump on its reverse condition.

Canonicalize to UNORDERED for the libcall.

Expand an assignment that stores the value of FROM into TO.

| rtx expand_atomic_fetch_op | ( | rtx | target, |

| rtx | mem, | ||

| rtx | val, | ||

| enum rtx_code | code, | ||

| enum memmodel | model, | ||

| bool | after | ||

| ) |

This function expands an atomic fetch_OP or OP_fetch operation: atomically fetch MEM, perform the operation with VAL, and store the result back to MEM.

TARGET is an optional place to stick the return value; const0_rtx indicates the result is unused. CODE is the operation being performed (OP). MODEL is the memory model variant to use. AFTER is true to return the result of the operation (OP_fetch). AFTER is false to return the value before the operation (fetch_OP).

Add/sub can be implemented by doing the reverse operation with -(val).
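The same idea can be illustrated with C11 atomics: a fetch-and-subtract built from fetch-and-add on the negated operand. This is a sketch under the assumption of an unsigned 32-bit object, so that negation wraps modulo 2^32; `fetch_sub_via_add` is a hypothetical helper.

```c
#include <stdatomic.h>
#include <stdint.h>

/* Hypothetical helper: subtract VAL from *MEM by adding its
   two's-complement negation; returns the value before the update.  */
static uint32_t fetch_sub_via_add(_Atomic uint32_t *mem, uint32_t val)
{
    return atomic_fetch_add(mem, 0u - val);
}
```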

PLUS worked so emit the insns and return.

PLUS did not work, so throw away the negation code and continue.

Try the __sync libcalls only if we can't do compare-and-swap inline.

We need the original code for any further attempts.

If nothing else has succeeded, default to a compare and swap loop.

If the result is used, get a register for it.

If fetch_before, copy the value now.

For after, copy the value now.
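The compare-and-swap fallback and the role of AFTER can be sketched with C11 atomics. The example uses NAND as the operation, since C11 has no direct atomic NAND; `fetch_nand` and its parameters are hypothetical and only model the behaviour described above.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: atomic NAND implemented as a compare-and-swap
   loop.  Returns the new value when AFTER is true (OP_fetch) and the
   old value when AFTER is false (fetch_OP).  */
static uint32_t fetch_nand(_Atomic uint32_t *mem, uint32_t val, bool after)
{
    uint32_t old = atomic_load(mem);
    uint32_t new_val;
    do {
        new_val = ~(old & val);
        /* On failure, the call below refreshes 'old' with the
           current contents of *mem and the loop retries.  */
    } while (!atomic_compare_exchange_weak(mem, &old, new_val));
    return after ? new_val : old;
}
```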

References insn_data.

| void expand_atomic_signal_fence | ( | enum | memmodel | ) |

Referenced by expand_builtin_compare_and_swap().

| void expand_atomic_thread_fence | ( | enum | memmodel | ) |

Functions from builtins.c:

Expand an expression EXP that calls a built-in function, with result going to TARGET if that's convenient (and in mode MODE if that's convenient). SUBTARGET may be used as the target for computing one of EXP's operands. IGNORE is nonzero if the value is to be ignored.

When not optimizing, generate calls to library functions for a certain set of builtins.

The built-in function expanders test for target == const0_rtx to determine whether the function's result will be ignored.

If the result of a pure or const built-in function is ignored, and none of its arguments are volatile, we can avoid expanding the built-in call and just evaluate the arguments for side-effects.

Just do a normal library call if we were unable to fold the values.

Treat these like sqrt only if unsafe math optimizations are allowed, because of possible accuracy problems.

__builtin_apply (FUNCTION, ARGUMENTS, ARGSIZE) invokes FUNCTION with a copy of the parameters described by ARGUMENTS, and ARGSIZE. It returns a block of memory allocated on the stack into which is stored all the registers that might possibly be used for returning the result of a function. ARGUMENTS is the value returned by __builtin_apply_args. ARGSIZE is the number of bytes of arguments that must be copied. ??? How should this value be computed? We'll also need a safe worst case value for varargs functions.

__builtin_return (RESULT) causes the function to return the value described by RESULT. RESULT is address of the block of memory returned by __builtin_apply.

All valid uses of __builtin_va_arg_pack () are removed during inlining.

All valid uses of __builtin_va_arg_pack_len () are removed during inlining.

Return the address of the first anonymous stack arg.

Returns the address of the area where the structure is returned. 0 otherwise.

If the allocation stems from the declaration of a variable-sized object, it cannot accumulate.

This should have been lowered to the builtins below.

__builtin_setjmp_setup is passed a pointer to an array of five words and the receiver label.

This is copied from the handling of non-local gotos.

??? Do not let expand_label treat us as such since we would not want to be both on the list of non-local labels and on the list of forced labels.

__builtin_setjmp_dispatcher is passed the dispatcher label.

Remove the dispatcher label from the list of non-local labels since the receiver labels have been added to it above.

__builtin_setjmp_receiver is passed the receiver label.

__builtin_longjmp is passed a pointer to an array of five words. It's similar to the C library longjmp function but works with __builtin_setjmp above.

This updates the setjmp buffer that is its argument with the value of the current stack pointer.

Various hooks for the DWARF 2 __throw routine.

If this is turned into an external library call, the weak parameter must be dropped to match the expected parameter list.

Skip the boolean weak parameter.

We allow user CHKP builtins if Pointer Bounds Checker is off.

FALLTHROUGH

Software implementation of pointers checker is NYI. Target support is required.

The switch statement above can drop through to cause the function to be called normally.

| rtx expand_builtin_saveregs | ( | void | ) |

Expand a call to __builtin_saveregs, generating the result in TARGET, if that's convenient.

Don't do __builtin_saveregs more than once in a function. Save the result of the first call and reuse it.

When this function is called, it means that registers must be saved on entry to this function. So we migrate the call to the first insn of this function.

Do whatever the machine needs done in this case.

Put the insns after the NOTE that starts the function. If this is inside a start_sequence, make the outer-level insn chain current, so the code is placed at the start of the function.

| void expand_builtin_setjmp_receiver | ( | rtx | ) |

| void expand_builtin_trap | ( | void | ) |

|

inline static |

Generate code for computing expression EXP. An rtx for the computed value is returned. The value is never null. In the case of a void EXP, const0_rtx is returned.

Referenced by dw2_output_call_site_table(), expand_builtin_expect(), expand_builtin_prefetch(), expand_builtin_sincos(), expand_builtin_strcpy_args(), expand_builtin_va_end(), expand_builtin_va_start(), expand_call(), expand_expr_real_1(), expand_value_return(), get_frame_arg(), init_block_clear_fn(), initializer_constant_valid_p_1(), and stabilize_va_list_loc().

| rtx expand_expr_real | ( | tree | exp, |

| rtx | target, | ||

| enum machine_mode | tmode, | ||

| enum expand_modifier | modifier, | ||

| rtx * | alt_rtl | ||

| ) |

Work horses for expand_expr.

expand_expr: generate code for computing expression EXP. An rtx for the computed value is returned. The value is never null. In the case of a void EXP, const0_rtx is returned.

The value may be stored in TARGET if TARGET is nonzero. TARGET is just a suggestion; callers must assume that the rtx returned may not be the same as TARGET.

If TARGET is CONST0_RTX, it means that the value will be ignored.

If TMODE is not VOIDmode, it suggests generating the result in mode TMODE. But this is done only when convenient. Otherwise, TMODE is ignored and the value generated in its natural mode. TMODE is just a suggestion; callers must assume that the rtx returned may not have mode TMODE.

Note that TARGET may have neither TMODE nor MODE. In that case, it probably will not be used.

If MODIFIER is EXPAND_SUM then when EXP is an addition we can return an rtx of the form (MULT (REG ...) (CONST_INT ...)) or a nest of (PLUS ...) and (MINUS ...) where the terms are products as above, or REG or MEM, or constant. Ordinarily in such cases we would output mul or add instructions and then return a pseudo reg containing the sum.

EXPAND_INITIALIZER is much like EXPAND_SUM except that it also marks a label as absolutely required (it can't be dead). It also makes a ZERO_EXTEND or SIGN_EXTEND instead of emitting extend insns. This is used for outputting expressions used in initializers.

EXPAND_CONST_ADDRESS says that it is okay to return a MEM with a constant address even if that address is not normally legitimate. EXPAND_INITIALIZER and EXPAND_SUM also have this effect.

EXPAND_STACK_PARM is used when expanding to a TARGET on the stack for a call parameter. Such targets require special care as we haven't yet marked TARGET so that it's safe from being trashed by libcalls. We don't want to use TARGET for anything but the final result; Intermediate values must go elsewhere. Additionally, calls to emit_block_move will be flagged with BLOCK_OP_CALL_PARM.

If EXP is a VAR_DECL whose DECL_RTL was a MEM with an invalid address, and ALT_RTL is non-NULL, then *ALT_RTL is set to the DECL_RTL of the VAR_DECL. *ALT_RTL is also set if EXP is a COMPOUND_EXPR whose second argument is such a VAR_DECL, and so on recursively.

Handle ERROR_MARK before anybody tries to access its type.

An operation in what may be a bit-field type needs the result to be reduced to the precision of the bit-field type, which is narrower than that of the type's mode.

If we are going to ignore this result, we need only do something if there is a side-effect somewhere in the expression. If there is, short-circuit the most common cases here. Note that we must not call expand_expr with anything but const0_rtx in case this is an initial expansion of a size that contains a PLACEHOLDER_EXPR.

Ensure we reference a volatile object even if value is ignored, but don't do this if all we are doing is taking its address.

Use subtarget as the target for operand 0 of a binary operation.

??? ivopts calls expander, without any preparation from out-of-ssa. So fake instructions as if this was an access to the base variable. This unnecessarily allocates a pseudo, see how we can reuse it, if partition base vars have it set already.

For EXPAND_INITIALIZER try harder to get something simpler.

If a static var's type was incomplete when the decl was written, but the type is complete now, lay out the decl now.

... fall through ...

Record writes to register variables.

Ensure variable marked as used even if it doesn't go through a parser. If it hasn't been used yet, write out an external definition.

Show we haven't gotten RTL for this yet.

Variables inherited from containing functions should have been lowered by this point.

??? C++ creates functions that are not TREE_STATIC.

This is the case of an array whose size is to be determined from its initializer, while the initializer is still being parsed. ??? We aren't parsing while expanding anymore.

If DECL_RTL is memory, we are in the normal case and the address is not valid, get the address into a register.

If we got something, return it. But first, set the alignment if the address is a register.

If the mode of DECL_RTL does not match that of the decl, there are two cases: we are dealing with a BLKmode value that is returned in a register, or we are dealing with a promoted value. In the latter case, return a SUBREG of the wanted mode, but mark it so that we know that it was already extended.

Get the signedness to be used for this variable. Ensure we get the same mode we got when the variable was declared.

If optimized, generate immediate CONST_DOUBLE which will be turned into memory by reload if necessary.

We used to force a register so that loop.c could see it. But this does not allow gen_* patterns to perform optimizations with the constants. It also produces two insns in cases like "x = 1.0;". On most machines, floating-point constants are not permitted in many insns, so we'd end up copying it to a register in any case.

Now, we do the copying in expand_binop, if appropriate.

Handle evaluating a complex constant in a CONCAT target.

Move the real and imaginary parts separately.

... fall through ...

temp contains a constant address. On RISC machines where a constant address isn't valid, make some insns to get that address into a register.

We can indeed still hit this case, typically via builtin expanders calling save_expr immediately before expanding something. Assume this means that we only have to deal with non-BLKmode values.

If we don't need the result, just ensure we evaluate any subexpressions.

If the target does not have special handling for unaligned loads of mode then it can use regular moves for them.

We've already validated the memory, and we're creating a new pseudo destination. The predicates really can't fail, nor can the generator.

Handle expansion of non-aliased memory with non-BLKmode. That might end up in a register.

We've already validated the memory, and we're creating a new pseudo destination. The predicates really can't fail, nor can the generator.

Fold an expression like: "foo"[2].

This is not done in fold so it won't happen inside &.

Don't fold if this is for wide characters since it's too difficult to do correctly and this is a very rare case.

If this is a constant index into a constant array, just get the value from the array. Handle both the cases when we have an explicit constructor and when our operand is a variable that was declared const.

If VALUE is a CONSTRUCTOR, this optimization is only useful if this doesn't store the CONSTRUCTOR into memory. If it does, it is more efficient to just load the data from the array directly.

Optimize the special case of a zero lower bound.

We convert the lower bound to sizetype to avoid problems with constant folding. E.g. suppose the lower bound is 1 and its mode is QI. Without the conversion (ARRAY + (INDEX - (unsigned char)1)) becomes (ARRAY + (-(unsigned char)1) + INDEX) which becomes (ARRAY + 255 + INDEX). Oops!

If the operand is a CONSTRUCTOR, we can just extract the appropriate field if it is present.

We can normally use the value of the field in the CONSTRUCTOR. However, if this is a bitfield in an integral mode that we can fit in a HOST_WIDE_INT, we must mask only the number of bits in the bitfield, since this is done implicitly by the constructor. If the bitfield does not meet either of those conditions, we can't do this optimization.

If we got back the original object, something is wrong. Perhaps we are evaluating an expression too early. In any event, don't infinitely recurse.

If TEM's type is a union of variable size, pass TARGET to the inner computation, since it will need a temporary and TARGET is known to have to do. This occurs in unchecked conversion in Ada.

If the bitfield is volatile, we want to access it in the field's mode, not the computed mode.
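The QImode lower-bound example earlier in this group of notes (a lower bound of 1 turning into an offset of 255) can be reproduced in C. In this sketch `uint8_t` stands in for QImode and `size_t` for sizetype; both function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Lower bound negated in the narrow 8-bit type: -(unsigned char)1
   wraps to 255, giving ARRAY + 255 + INDEX.  */
static size_t offset_narrow(uint8_t index)
{
    uint8_t neg_low = (uint8_t)(0u - 1u);   /* 255 */
    return (size_t)neg_low + index;
}

/* Lower bound converted to the wide type before the subtraction,
   giving the intended INDEX - 1.  */
static size_t offset_wide(uint8_t index)
{
    return (size_t)index - (size_t)1;
}
```

For an index of 3, the narrow computation yields 258 while the wide one yields 2, which is why the conversion is done first.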

If a MEM has VOIDmode (external with incomplete type), use BLKmode for it instead.

If we have either an offset, a BLKmode result, or a reference outside the underlying object, we must force it to memory. Such a case can occur in Ada if we have unchecked conversion of an expression from a scalar type to an aggregate type or for an ARRAY_RANGE_REF whose type is BLKmode, or if we were passed a partially uninitialized object or a view-conversion to a larger size.

Handle CONCAT first.

Otherwise force into memory.

If this is a constant, put it in a register if it is a legitimate constant and we don't need a memory reference.

Otherwise, if this is a constant, try to force it to the constant pool. Note that back-ends, e.g. MIPS, may refuse to do so if it is a legitimate constant.

Otherwise, if this is a constant or the object is not in memory and need be, put it there.

A constant address in OP0 can have VOIDmode, we must not try to call force_reg in that case.

If OFFSET is making OP0 more aligned than BIGGEST_ALIGNMENT, record its alignment as BIGGEST_ALIGNMENT.

Don't forget about volatility even if this is a bitfield.

In cases where an aligned union has an unaligned object as a field, we might be extracting a BLKmode value from an integer-mode (e.g., SImode) object. Handle this case by doing the extract into an object as wide as the field (which we know to be the width of a basic mode), then storing into memory, and changing the mode to BLKmode.

If the field is volatile, we always want an aligned access. Do this in following two situations:

1. the access is not already naturally aligned, otherwise "normal" (non-bitfield) volatile fields become non-addressable.

2. the bitsize is narrower than the access size. Need to extract bitfields from the access.

If the field isn't aligned enough to fetch as a memref, fetch it as a bit field.

If the type and the field are a constant size and the size of the type isn't the same size as the bitfield, we must use bitfield operations.

In this case, BITPOS must start at a byte boundary and TARGET, if specified, must be a MEM.

If the result is a record type and BITSIZE is narrower than the mode of OP0, an integral mode, and this is a big endian machine, we must put the field into the high-order bits.

If the result type is BLKmode, store the data into a temporary of the appropriate type, but with the mode corresponding to the mode for the data we have (op0's mode). It's tempting to make this a constant type, since we know it's only being stored once, but that can cause problems if we are taking the address of this COMPONENT_REF because the MEM of any reference via that address will have flags corresponding to the type, which will not necessarily be constant.

If the result is BLKmode, use that to access the object now as well.

Get a reference to just this component.

If op0 is a temporary because of forcing to memory, pass only the type to set_mem_attributes so that the original expression is never marked as ADDRESSABLE through MEM_EXPR of the temporary.

All valid uses of __builtin_va_arg_pack () are removed during inlining.

Check for a built-in function.

If we are converting to BLKmode, try to avoid an intermediate temporary by fetching an inner memory reference.

??? We should work harder and deal with non-zero offsets.

See the normal_inner_ref case for the rationale.

Get a reference to just this component.

If the input and output modes are both the same, we are done.

If neither mode is BLKmode, and both modes are the same size then we can use gen_lowpart.

If both types are integral, convert from one mode to the other.

As a last resort, spill op0 to memory, and reload it in a different mode.

If the operand is not a MEM, force it into memory. Since we are going to be changing the mode of the MEM, don't call force_const_mem for constants because we don't allow pool constants to change mode.

At this point, OP0 is in the correct mode. If the output type is such that the operand is known to be aligned, indicate that it is. Otherwise, we need only be concerned about alignment for non-BLKmode results.

??? Copying the MEM without substantially changing it might run afoul of the code handling volatile memory references in store_expr, which assumes that TARGET is returned unmodified if it has been used.

If the target does have special handling for unaligned loads of mode then use them.

We've already validated the memory, and we're creating a new pseudo destination. The predicates really can't fail. Nor can the insn generator.

Check for |= or &= of a bitfield of size one into another bitfield

of size 1. In this case, (unless we need the result of the

assignment) we can do this more efficiently with a

test followed by an assignment, if necessary.
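The one-bit |= and &= rewrite above can be illustrated in C. The struct and function names are hypothetical; the sketch only shows that a test followed by a conditional assignment gives the same result as the read-modify-write form when both fields are one bit wide.

```c
/* Two one-bit fields, as in the case described above.  */
struct flags {
    unsigned f : 1;
    unsigned g : 1;
};

/* Equivalent of "a->f |= b->g": only a set bit in g can change f.  */
static void or_assign(struct flags *a, const struct flags *b)
{
    if (b->g)
        a->f = 1;
}

/* Equivalent of "a->f &= b->g": only a clear bit in g can change f.  */
static void and_assign(struct flags *a, const struct flags *b)
{
    if (!b->g)
        a->f = 0;
}
```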

??? At this point, we can't get a BIT_FIELD_REF here. But if things change so we do, this code should be enhanced to support it.

Expanded in cfgexpand.c.

Lowered by tree-eh.c.

Lowered by gimplify.c.

Function descriptors are not valid except for as initialization constants, and should not be expanded.

WITH_SIZE_EXPR expands to its first argument. The caller should have pulled out the size to use in whatever context it needed.

References array_ref_low_bound(), compare_tree_int(), CONSTRUCTOR_ELTS, expand_constructor(), expand_expr(), fold(), fold_convert_loc(), FOR_EACH_CONSTRUCTOR_ELT, gen_int_mode(), GET_MODE_CLASS, integer_zerop(), NULL_RTX, size_diffop_loc(), sizetype, TREE_CODE, tree_int_cst_equal(), TREE_INT_CST_LOW, TREE_SIDE_EFFECTS, TREE_STRING_LENGTH, TREE_STRING_POINTER, TREE_TYPE, TYPE_MODE, and expand_operand::value.

We should be called only on simple (binary or unary) expressions, exactly those that are valid in gimple expressions that aren't GIMPLE_SINGLE_RHS (or invalid).

We should be called only if we need the result.

An operation in what may be a bit-field type needs the result to be reduced to the precision of the bit-field type, which is narrower than that of the type's mode.

Use subtarget as the target for operand 0 of a binary operation.

If both input and output are BLKmode, this conversion isn't doing anything except possibly changing memory attribute.

Store data into beginning of memory target.

Store this field into a union of the proper type.

Return the entire union.

If the signedness of the conversion differs and OP0 is a promoted SUBREG, clear that indication since we now have to do the proper extension.

If OP0 is a constant, just convert it into the proper mode.

Conversions between pointers to the same address space should have been implemented via CONVERT_EXPR / NOP_EXPR.

Ask target code to handle conversion between pointers to overlapping address spaces.

For disjoint address spaces, converting anything but a null pointer invokes undefined behaviour. We simply always return a null pointer here.

Even though the sizetype mode and the pointer's mode can be different expand is able to handle this correctly and get the correct result out of the PLUS_EXPR code.

Make sure to sign-extend the sizetype offset in a POINTER_PLUS_EXPR if sizetype precision is smaller than pointer precision.

If sizetype precision is larger than pointer precision, truncate the offset to have matching modes.

If we are adding a constant, a VAR_DECL that is sp, fp, or ap, and something else, make sure we add the register to the constant and then to the other thing. This case can occur during strength reduction and doing it this way will produce better code if the frame pointer or argument pointer is eliminated.

fold-const.c will ensure that the constant is always in the inner PLUS_EXPR, so the only case we need to do anything about is if sp, ap, or fp is our second argument, in which case we must swap the innermost first argument and our second argument.

If the result is to be ptr_mode and we are adding an integer to something, we might be forming a constant. So try to use plus_constant. If it produces a sum and we can't accept it, use force_operand. This allows P = &ARR[const] to generate efficient code on machines where a SYMBOL_REF is not a valid address.

If this is an EXPAND_SUM call, always return the sum.

Use immed_double_const to ensure that the constant is truncated according to the mode of OP1, then sign extended to a HOST_WIDE_INT. Using the constant directly can result in non-canonical RTL in a 64x32 cross compile.

Return a PLUS if modifier says it's OK.

Use immed_double_const to ensure that the constant is truncated according to the mode of OP1, then sign extended to a HOST_WIDE_INT. Using the constant directly can result in non-canonical RTL in a 64x32 cross compile.

Use TER to expand pointer addition of a negated value as pointer subtraction.

No sense saving up arithmetic to be done if it's all in the wrong mode to form part of an address. And force_operand won't know whether to sign-extend or zero-extend.

For initializers, we are allowed to return a MINUS of two symbolic constants. Here we handle all cases when both operands are constant.

Handle difference of two symbolic constants, for the sake of an initializer.

If the last operand is a CONST_INT, use plus_constant of the negated constant. Else make the MINUS.

No sense saving up arithmetic to be done if it's all in the wrong mode to form part of an address. And force_operand won't know whether to sign-extend or zero-extend.

Convert A - const to A + (-const).

If first operand is constant, swap them. Thus the following special case checks need only check the second operand.

First, check if we have a multiplication of one signed and one unsigned operand.

op0 and op1 might still be constant, despite the above != INTEGER_CST check. Handle it.

Check for a multiplication with matching signedness.

op0 and op1 might still be constant, despite the above != INTEGER_CST check. Handle it.

op0 and op1 might still be constant, despite the above != INTEGER_CST check. Handle it.

If there is no insn for FMA, emit it as __builtin_fma{,f,l} call.

If this is a fixed-point operation, then we cannot use the code below because "expand_mult" doesn't support sat/no-sat fixed-point multiplications.

If first operand is constant, swap them. Thus the following special case checks need only check the second operand.

Attempt to return something suitable for generating an indexed address, for machines that support that.

If this is a fixed-point operation, then we cannot use the code below because "expand_divmod" doesn't support sat/no-sat fixed-point divisions.

Possible optimization: compute the dividend with EXPAND_SUM then if the divisor is constant can optimize the case where some terms of the dividend have coeffs divisible by it.

expand_float can't figure out what to do if FROM has VOIDmode. So give it the correct mode. With -O, cse will optimize this.

ABS_EXPR is not valid for complex arguments.

Unsigned abs is simply the operand. Testing here means we don't risk generating incorrect code below.

First try to do it with a special MIN or MAX instruction. If that does not win, use a conditional jump to select the proper value.

At this point, a MEM target is no longer useful; we will get better code without it.

If op1 was placed in target, swap op0 and op1.

We generate better code and avoid problems with op1 mentioning target by forcing op1 into a pseudo if it isn't a constant.

Canonicalize to comparisons against 0.

Converting (a >= 1 ? a : 1) into (a > 0 ? a : 1) or (a != 0 ? a : 1) for unsigned. For MIN we are safe converting (a <= 1 ? a : 1) into (a <= 0 ? a : 1).

Converting (a >= -1 ? a : -1) into (a >= 0 ? a : -1) and (a <= -1 ? a : -1) into (a < 0 ? a : -1).

In case we have to reduce the result to bitfield precision for unsigned bitfield expand this as XOR with a proper constant instead.

??? Can optimize bitwise operations with one arg constant. Can optimize (a bitwise1 n) bitwise2 (a bitwise3 b) and (a bitwise1 b) bitwise2 b (etc) but that is probably not worth while.

fall through

If this is a fixed-point operation, then we cannot use the code below because "expand_shift" doesn't support sat/no-sat fixed-point shifts.

Could determine the answer when only additive constants differ. Also, the addition of one can be handled by changing the condition.

Use a compare and a jump for BLKmode comparisons, or for function type comparisons if HAVE_canonicalize_funcptr_for_compare.

Make sure we don't have a hard reg (such as function's return value) live across basic blocks, if not optimizing.

Get the rtx code of the operands.

If target overlaps with op1, then either we need to force op1 into a pseudo (if target also overlaps with op0), or write the complex parts in reverse order.

Move the imaginary (op1) and real (op0) parts to their location.

Move the real (op0) and imaginary (op1) parts to their location.

The signedness is determined from input operand.

Careful here: if the target doesn't support integral vector modes, a constant selection vector could wind up smooshed into a normal integral constant.

A COND_EXPR with its type being VOID_TYPE represents a conditional jump and is handled in expand_gimple_cond_expr.

Note that COND_EXPRs whose type is a structure or union are required to be constructed to contain assignments of a temporary variable, so that we can evaluate them here for side effect only. If type is void, we must do likewise.

If we are not to produce a result, we have no target. Otherwise, if a target was specified use it; it will not be used as an intermediate target unless it is safe. If no target, use a temporary.

Here to do an ordinary binary operator.

Bitwise operations do not need bitfield reduction as we expect their operands being properly truncated.

Perform a multiplication and return an rtx for the result. MODE is mode of value; OP0 and OP1 are what to multiply (rtx's); TARGET is a suggestion for where to store the result (an rtx).

We check specially for a constant integer as OP1. If you want this check for OP0 as well, then before calling you should swap the two operands if OP0 would be constant.

For vectors, there are several simplifications that can be made if all elements of the vector constant are identical.

These are the operations that are potentially turned into a sequence of shifts and additions.

synth_mult does an `unsigned int' multiply. As long as the mode is less than or equal in size to `unsigned int' this doesn't matter. If the mode is larger than `unsigned int', then synth_mult works only if the constant value exactly fits in an `unsigned int' without any truncation. This means that multiplying by negative values does not work; results are off by 2^32 on a 32 bit machine.

If we are multiplying in DImode, it may still be a win to try to work with shifts and adds.

We used to test optimize here, on the grounds that it's better to produce a smaller program when -O is not used. But this causes such a terrible slowdown sometimes that it seems better to always use synth_mult.

Special case powers of two.

Attempt to handle multiplication of DImode values by negative coefficients, by performing the multiplication by a positive multiplier and then inverting the result.

It's safe to use -coeff even for INT_MIN, as the result is interpreted as an unsigned coefficient.

Exclude cost of op0 from max_cost to match the cost calculation of the synth_mult.

Special case powers of two.

Exclude cost of op0 from max_cost to match the cost calculation of the synth_mult.

Expand x*2.0 as x+x.

This used to use umul_optab if unsigned, but for non-widening multiply there is no difference between signed and unsigned.

References choose_mult_variant(), CONST_INT_P, convert_modes(), convert_to_mode(), EXACT_POWER_OF_2_OR_ZERO_P, expand_binop(), expand_mult_const(), expand_shift(), floor_log2(), GET_MODE, HWI_COMPUTABLE_MODE_P, INTVAL, mul_widen_cost(), OPTAB_LIB_WIDEN, and optimize_insn_for_speed_p().
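The comments above mention three ideas: shifting for powers of two, building a constant multiply from shifts and additions, and handling a negative coefficient by multiplying by its positive counterpart and negating. The following is a minimal sketch of those ideas on plain 64-bit integers, not GCC's RTL code. All function names here are hypothetical, and the shift-and-add loop is the plain binary expansion of the coefficient, which is simpler than what synth_mult actually searches for.

```c
#include <assert.h>
#include <stdint.h>

/* Multiply by 2^k with a single shift. */
uint64_t mul_pow2(uint64_t x, unsigned k) {
    assert(k < 64);
    return x << k;
}

/* Multiply by a constant by adding one shifted copy of x
   for every set bit of the coefficient. */
uint64_t mul_shift_add(uint64_t x, uint64_t coeff) {
    uint64_t acc = 0;
    for (unsigned bit = 0; coeff != 0; bit++, coeff >>= 1)
        if (coeff & 1)
            acc += x << bit;
    return acc;
}

/* Multiply by a negative coefficient: multiply by -coeff, then negate.
   The negation is done in unsigned arithmetic, so it is well defined
   even when coeff is INT64_MIN. The final conversion back to int64_t
   assumes the usual two's complement behaviour. */
int64_t mul_negative(int64_t x, int64_t coeff) {
    uint64_t pos = 0 - (uint64_t)coeff;
    uint64_t prod = mul_shift_add((uint64_t)x, pos);
    return (int64_t)(0 - prod);
}
```

For example, `mul_shift_add(7, 10)` adds `7 << 1` and `7 << 3`, and `mul_negative(5, -3)` multiplies by 3 and negates the result.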

| rtx | expand_mult_highpart_adjust (enum machine_mode mode, rtx adj_operand, rtx op0, rtx op1, rtx target, int unsignedp) |

Emit code to adjust ADJ_OPERAND after multiplication of wrong signedness flavor of OP0 and OP1. ADJ_OPERAND is already the high half of the product OP0 x OP1. If UNSIGNEDP is nonzero, adjust the signed product to become unsigned; if UNSIGNEDP is zero, adjust the unsigned product to become signed.

The result is put in TARGET if that is convenient.

MODE is the mode of operation.

References const0_rtx, emit_store_flag(), floor_log2(), force_reg(), GEN_INT, gen_reg_rtx(), GET_MODE_BITSIZE, HOST_WIDE_INT, optimize_insn_for_speed_p(), and shift.

Referenced by expand_widening_mult().
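The adjustment described above rests on a standard identity: for N-bit operands, the unsigned high half equals the signed high half plus OP1 when OP0 is negative, plus OP0 when OP1 is negative, all modulo 2^N. The sketch below checks that identity for N = 32 using ordinary C integers. The function names are hypothetical and this is an illustration of the arithmetic, not the RTL that GCC emits.

```c
#include <assert.h>
#include <stdint.h>

/* High 32 bits of the signed 64-bit product. */
uint32_t signed_high(int32_t a, int32_t b) {
    int64_t p = (int64_t)a * (int64_t)b;
    return (uint32_t)((uint64_t)p >> 32);
}

/* High 32 bits of the unsigned 64-bit product. */
uint32_t unsigned_high(uint32_t a, uint32_t b) {
    return (uint32_t)(((uint64_t)a * b) >> 32);
}

/* All-ones when the top bit of v is set, zero otherwise. */
static uint32_t sign_mask(uint32_t v) {
    return 0u - (v >> 31);
}

/* Turn a signed high half into the unsigned one. */
uint32_t adjust_signed_to_unsigned(uint32_t high, uint32_t a, uint32_t b) {
    return high + (sign_mask(a) & b) + (sign_mask(b) & a);
}

/* Turn an unsigned high half into the signed one. */
uint32_t adjust_unsigned_to_signed(uint32_t high, uint32_t a, uint32_t b) {
    return high - (sign_mask(a) & b) - (sign_mask(b) & a);
}
```

Both directions use the same two masked terms; only the sign of the correction changes, which mirrors the role of UNSIGNEDP in the description.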

| rtx | expand_simple_binop (enum machine_mode mode, enum rtx_code code, rtx op0, rtx op1, rtx target, int unsignedp, enum optab_methods methods) |

Generate code for a simple binary or unary operation. "Simple" in this case means "can be unambiguously described by a (mode, code) pair and mapped to a single optab."

Wrapper around expand_binop which takes an rtx code to specify the operation to perform, not an optab pointer. All other arguments are the same.

Referenced by expand_builtin_sync_operation(), expand_mem_thread_fence(), noce_try_addcc(), noce_try_store_flag_constants(), and split_edge_and_insert().

| rtx | expand_simple_unop (enum machine_mode mode, enum rtx_code code, rtx op0, rtx target, int unsignedp) |

Wrapper around expand_unop which takes an rtx code to specify the operation to perform, not an optab pointer. All other arguments are the same.

Referenced by expand_atomic_load(), expand_builtin_sync_operation(), and expand_mem_thread_fence().

Like expand_case but special-case for SJLJ exception dispatching.

Expand the dispatch to a short decrement chain if there are few cases to dispatch to. Likewise if neither casesi nor tablejump is available, or if flag_jump_tables is set. Otherwise, expand as a casesi or a tablejump. The index mode is always the mode of integer_type_node. Trap if no case matches the index.

DISPATCH_INDEX is the index expression to switch on. It should be a memory or register operand.

DISPATCH_TABLE is a set of case labels. The set should be sorted in ascending order, be contiguous, starting with value 0, and contain only single-valued case labels.

Expand as a decrement-chain if there are 5 or fewer dispatch labels. This covers more than 98% of the cases in libjava, and seems to be a reasonable compromise between the "old way" of expanding as a decision tree or dispatch table vs. the "new way" with decrement chain or dispatch table.

Expand the dispatch as a decrement chain:

"switch(index) {case 0: do_0; case 1: do_1; ...; case N: do_N;}"

==>

if (index == 0) do_0; else index--;

if (index == 0) do_1; else index--;

...

if (index == 0) do_N; else index--;

This is more efficient than a dispatch table on most machines.

The last "index--" is redundant but the code is trivially dead and will be cleaned up by later passes.

Similar to expand_case, but much simpler.

Dispatching something not handled? Trap!
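To make the decrement chain concrete, here is a small sketch in plain C that compares it with an ordinary switch over a contiguous range starting at 0. The handler values and function names are hypothetical, and the "trap" is modelled as a sentinel return value rather than a real trap instruction.

```c
#include <assert.h>

/* Sentinel standing in for the trap taken when no case matches. */
enum { HANDLER_TRAP = -1 };

/* Reference form: a switch over the contiguous labels 0..2. */
int dispatch_switch(int index) {
    switch (index) {
    case 0: return 100;
    case 1: return 101;
    case 2: return 102;
    default: return HANDLER_TRAP;
    }
}

/* Decrement chain: compare against zero, otherwise decrement and
   fall to the next test. The final decrement is dead code. */
int dispatch_chain(int index) {
    if (index == 0) return 100; else index--;
    if (index == 0) return 101; else index--;
    if (index == 0) return 102; else index--;
    return HANDLER_TRAP;
}

/* Check that both forms agree for every index in [lo, hi]. */
void check_dispatch_equivalence(int lo, int hi) {
    for (int i = lo; i <= hi; i++)
        assert(dispatch_switch(i) == dispatch_chain(i));
}
```

Each step only needs a compare against zero and a decrement, which is why the chain stays cheap when there are few labels.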

| rtx | extract_bit_field (rtx str_rtx, unsigned HOST_WIDE_INT bitsize, unsigned HOST_WIDE_INT bitnum, int unsignedp, rtx target, enum machine_mode mode, enum machine_mode tmode) |

Generate code to extract a byte-field from STR_RTX containing BITSIZE bits, starting at BITNUM, and put it in TARGET if possible (if TARGET is nonzero). Regardless of TARGET, we return the rtx for where the value is placed.

STR_RTX is the structure containing the byte (a REG or MEM). UNSIGNEDP is nonzero if this is an unsigned bit field. MODE is the natural mode of the field value once extracted. TMODE is the mode the caller would like the value to have; but the value may be returned with type MODE instead.

If a TARGET is specified and we can store in it at no extra cost, we do so, and return TARGET. Otherwise, we return a REG of mode TMODE or MODE, with TMODE preferred if they are equally easy.

Referenced by emit_group_load_1().
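As a rough illustration of what the generated code has to compute, the sketch below extracts a field of BITSIZE bits starting at BITNUM from a 64-bit word and then zero- or sign-extends it depending on UNSIGNEDP. It is not the GCC implementation: the function name is hypothetical, the container is assumed to be a single 64-bit value, and bits are assumed to be numbered from the least significant end.

```c
#include <assert.h>
#include <stdint.h>

/* Extract `bitsize` bits starting at bit `bitnum` (counted from the
   least significant bit) and extend the result to 64 bits. */
int64_t extract_bits(uint64_t word, unsigned bitsize, unsigned bitnum,
                     int unsignedp) {
    assert(bitsize >= 1 && bitsize <= 64 && bitnum <= 64 - bitsize);

    /* Build a mask of `bitsize` ones without shifting by 64. */
    uint64_t mask = bitsize == 64 ? ~UINT64_C(0)
                                  : (UINT64_C(1) << bitsize) - 1;
    uint64_t field = (word >> bitnum) & mask;

    /* For a signed field, propagate its top bit upwards. */
    if (!unsignedp && bitsize < 64) {
        uint64_t sign = UINT64_C(1) << (bitsize - 1);
        field = (field ^ sign) - sign;
    }
    return (int64_t)field;
}
```

With `word = 0xABCD`, `bitsize = 8` and `bitnum = 4`, the raw field is `0xBC`; it reads as 188 when unsigned and as -68 when signed.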

| void | fixup_tail_calls (void) |

A sibling call sequence invalidates any REG_EQUIV notes made for this function's incoming arguments.

At the start of RTL generation we know the only REG_EQUIV notes in the rtl chain are those for incoming arguments, so we can look for REG_EQUIV notes between the start of the function and the NOTE_INSN_FUNCTION_BEG.

This is (slight) overkill. We could keep track of the highest argument we clobber and be more selective in removing notes, but it does not seem to be worth the effort.

There are never REG_EQUIV notes for the incoming arguments after the NOTE_INSN_FUNCTION_BEG note, so stop if we see it.

As label_rtx, but additionally the label is placed on the forced label list of its containing function (i.e. it is treated as reachable even if how is not obvious).

Given an rtx that may include add and multiply operations, generate them as insns and return a pseudo-reg containing the value. Useful after calling expand_expr with 1 as sum_ok.

Copy a value to a register if it isn't already a register. Args are mode (in case value is a constant) and the value.

Create but don't emit one rtl instruction to perform certain operations. Modes must match; operands must meet the operation's predicates. Likewise for subtraction and for just copying.

Referenced by do_output_reload(), and get_def_for_expr().

Generate a conditional trap instruction.

Referenced by cond_exec_find_if_block().

Referenced by find_invariants_to_move(), insert_insn_end_basic_block(), and reg_class_from_constraints().

| int | get_mem_align_offset (rtx, unsigned int) |

Return OFFSET if XEXP (MEM, 0) - OFFSET is known to be ALIGN bits aligned for 0 <= OFFSET < ALIGN / BITS_PER_UNIT, or -1 if not known.
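The contract above can be illustrated with a concrete numeric address, although the real function reasons about what the compiler knows statically about a MEM. In this sketch the function name, the use of `uintptr_t`, and the constant `SKETCH_BITS_PER_UNIT` (assumed to be 8) are all hypothetical stand-ins.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption for this sketch: one addressable unit is 8 bits. */
#define SKETCH_BITS_PER_UNIT 8

/* Return OFFSET such that addr - OFFSET is aligned to `align_bits`,
   with 0 <= OFFSET < align_bits / SKETCH_BITS_PER_UNIT, or -1 when
   the requested alignment is not a whole power-of-two byte count. */
int align_offset(uintptr_t addr, unsigned align_bits) {
    unsigned align_bytes = align_bits / SKETCH_BITS_PER_UNIT;

    if (align_bits % SKETCH_BITS_PER_UNIT != 0
        || align_bytes == 0
        || (align_bytes & (align_bytes - 1)) != 0)
        return -1;

    return (int)(addr & (uintptr_t)(align_bytes - 1));
}
```

For instance, address `0x1003` with a 32-bit alignment gives offset 3, because `0x1003 - 3` is a multiple of 4 bytes.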

| rtx | hard_function_value (const_tree valtype, const_tree func, const_tree fntype, int outgoing) |

Return an rtx that refers to the value returned by a function in its original home. This becomes invalid if any more code is emitted.