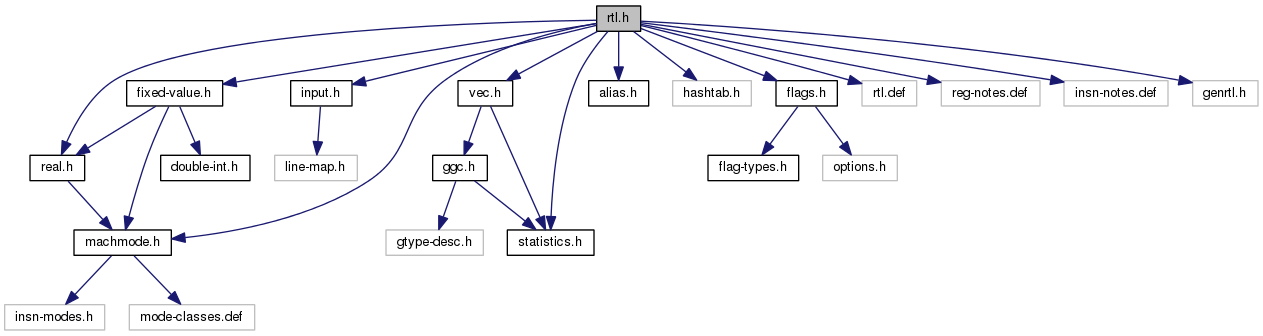

#include "statistics.h"
#include "machmode.h"
#include "input.h"
#include "real.h"
#include "vec.h"
#include "fixed-value.h"
#include "alias.h"
#include "hashtab.h"
#include "flags.h"
#include "rtl.def"
#include "reg-notes.def"
#include "insn-notes.def"
#include "genrtl.h"


Data Structures

| struct | addr_diff_vec_flags |

| struct | mem_attrs |

| struct | reg_attrs |

| union | rtunion_def |

| struct | block_symbol |

| struct | object_block |

| struct | rtx_def |

| union | rtx_def::u |

| struct | rtvec_def |

| struct | full_rtx_costs |

| struct | address_info |

| struct | replace_label_data |

| struct | subreg_info |

| struct | target_rtl |

| struct | rtl_hooks |

Macros

| #define | NOOP_MOVE_INSN_CODE INT_MAX |

| #define | RTX_CODE enum rtx_code |

| #define | DEF_RTL_EXPR(ENUM, NAME, FORMAT, CLASS) ENUM , |

| #define | NUM_RTX_CODE ((int) LAST_AND_UNUSED_RTX_CODE) |

| #define | RTX_OBJ_MASK (~1) |

| #define | RTX_OBJ_RESULT (RTX_OBJ & RTX_OBJ_MASK) |

| #define | RTX_COMPARE_MASK (~1) |

| #define | RTX_COMPARE_RESULT (RTX_COMPARE & RTX_COMPARE_MASK) |

| #define | RTX_ARITHMETIC_MASK (~1) |

| #define | RTX_ARITHMETIC_RESULT (RTX_COMM_ARITH & RTX_ARITHMETIC_MASK) |

| #define | RTX_BINARY_MASK (~3) |

| #define | RTX_BINARY_RESULT (RTX_COMPARE & RTX_BINARY_MASK) |

| #define | RTX_COMMUTATIVE_MASK (~2) |

| #define | RTX_COMMUTATIVE_RESULT (RTX_COMM_COMPARE & RTX_COMMUTATIVE_MASK) |

| #define | RTX_NON_COMMUTATIVE_RESULT (RTX_COMPARE & RTX_COMMUTATIVE_MASK) |

| #define | GET_RTX_LENGTH(CODE) (rtx_length[(int) (CODE)]) |

| #define | GET_RTX_NAME(CODE) (rtx_name[(int) (CODE)]) |

| #define | GET_RTX_FORMAT(CODE) (rtx_format[(int) (CODE)]) |

| #define | GET_RTX_CLASS(CODE) (rtx_class[(int) (CODE)]) |

| #define | RTX_HDR_SIZE offsetof (struct rtx_def, u) |

| #define | RTX_CODE_SIZE(CODE) rtx_code_size[CODE] |

| #define | NULL_RTX (rtx) 0 |

| #define | RTX_NEXT(X) |

| #define | RTX_PREV(X) |

| #define | GET_CODE(RTX) ((enum rtx_code) (RTX)->code) |

| #define | PUT_CODE(RTX, CODE) ((RTX)->code = (CODE)) |

| #define | GET_MODE(RTX) ((enum machine_mode) (RTX)->mode) |

| #define | PUT_MODE(RTX, MODE) ((RTX)->mode = (MODE)) |

| #define | NULL_RTVEC (rtvec) 0 |

| #define | GET_NUM_ELEM(RTVEC) ((RTVEC)->num_elem) |

| #define | PUT_NUM_ELEM(RTVEC, NUM) ((RTVEC)->num_elem = (NUM)) |

| #define | REG_P(X) (GET_CODE (X) == REG) |

| #define | MEM_P(X) (GET_CODE (X) == MEM) |

| #define | CASE_CONST_SCALAR_INT |

| #define | CASE_CONST_UNIQUE |

| #define | CASE_CONST_ANY |

| #define | CONST_INT_P(X) (GET_CODE (X) == CONST_INT) |

| #define | CONST_FIXED_P(X) (GET_CODE (X) == CONST_FIXED) |

| #define | CONST_DOUBLE_P(X) (GET_CODE (X) == CONST_DOUBLE) |

| #define | CONST_DOUBLE_AS_INT_P(X) (GET_CODE (X) == CONST_DOUBLE && GET_MODE (X) == VOIDmode) |

| #define | CONST_SCALAR_INT_P(X) (CONST_INT_P (X) || CONST_DOUBLE_AS_INT_P (X)) |

| #define | CONST_DOUBLE_AS_FLOAT_P(X) (GET_CODE (X) == CONST_DOUBLE && GET_MODE (X) != VOIDmode) |

| #define | LABEL_P(X) (GET_CODE (X) == CODE_LABEL) |

| #define | JUMP_P(X) (GET_CODE (X) == JUMP_INSN) |

| #define | CALL_P(X) (GET_CODE (X) == CALL_INSN) |

| #define | NONJUMP_INSN_P(X) (GET_CODE (X) == INSN) |

| #define | DEBUG_INSN_P(X) (GET_CODE (X) == DEBUG_INSN) |

| #define | NONDEBUG_INSN_P(X) (INSN_P (X) && !DEBUG_INSN_P (X)) |

| #define | MAY_HAVE_DEBUG_INSNS (flag_var_tracking_assignments) |

| #define | INSN_P(X) (NONJUMP_INSN_P (X) || DEBUG_INSN_P (X) || JUMP_P (X) || CALL_P (X)) |

| #define | NOTE_P(X) (GET_CODE (X) == NOTE) |

| #define | BARRIER_P(X) (GET_CODE (X) == BARRIER) |

| #define | JUMP_TABLE_DATA_P(INSN) (GET_CODE (INSN) == JUMP_TABLE_DATA) |

| #define | ANY_RETURN_P(X) (GET_CODE (X) == RETURN || GET_CODE (X) == SIMPLE_RETURN) |

| #define | UNARY_P(X) (GET_RTX_CLASS (GET_CODE (X)) == RTX_UNARY) |

| #define | BINARY_P(X) ((GET_RTX_CLASS (GET_CODE (X)) & RTX_BINARY_MASK) == RTX_BINARY_RESULT) |

| #define | ARITHMETIC_P(X) |

| #define | COMMUTATIVE_ARITH_P(X) (GET_RTX_CLASS (GET_CODE (X)) == RTX_COMM_ARITH) |

| #define | SWAPPABLE_OPERANDS_P(X) |

| #define | NON_COMMUTATIVE_P(X) |

| #define | COMMUTATIVE_P(X) |

| #define | COMPARISON_P(X) ((GET_RTX_CLASS (GET_CODE (X)) & RTX_COMPARE_MASK) == RTX_COMPARE_RESULT) |

| #define | CONSTANT_P(X) (GET_RTX_CLASS (GET_CODE (X)) == RTX_CONST_OBJ) |

| #define | OBJECT_P(X) ((GET_RTX_CLASS (GET_CODE (X)) & RTX_OBJ_MASK) == RTX_OBJ_RESULT) |

| #define | RTL_CHECK1(RTX, N, C1) ((RTX)->u.fld[N]) |

| #define | RTL_CHECK2(RTX, N, C1, C2) ((RTX)->u.fld[N]) |

| #define | RTL_CHECKC1(RTX, N, C) ((RTX)->u.fld[N]) |

| #define | RTL_CHECKC2(RTX, N, C1, C2) ((RTX)->u.fld[N]) |

| #define | RTVEC_ELT(RTVEC, I) ((RTVEC)->elem[I]) |

| #define | XWINT(RTX, N) ((RTX)->u.hwint[N]) |

| #define | XCWINT(RTX, N, C) ((RTX)->u.hwint[N]) |

| #define | XCMWINT(RTX, N, C, M) ((RTX)->u.hwint[N]) |

| #define | XCNMWINT(RTX, N, C, M) ((RTX)->u.hwint[N]) |

| #define | XCNMPRV(RTX, C, M) (&(RTX)->u.rv) |

| #define | XCNMPFV(RTX, C, M) (&(RTX)->u.fv) |

| #define | BLOCK_SYMBOL_CHECK(RTX) (&(RTX)->u.block_sym) |

| #define | RTX_FLAG(RTX, FLAG) ((RTX)->FLAG) |

| #define | RTL_FLAG_CHECK1(NAME, RTX, C1) (RTX) |

| #define | RTL_FLAG_CHECK2(NAME, RTX, C1, C2) (RTX) |

| #define | RTL_FLAG_CHECK3(NAME, RTX, C1, C2, C3) (RTX) |

| #define | RTL_FLAG_CHECK4(NAME, RTX, C1, C2, C3, C4) (RTX) |

| #define | RTL_FLAG_CHECK5(NAME, RTX, C1, C2, C3, C4, C5) (RTX) |

| #define | RTL_FLAG_CHECK6(NAME, RTX, C1, C2, C3, C4, C5, C6) (RTX) |

| #define | RTL_FLAG_CHECK7(NAME, RTX, C1, C2, C3, C4, C5, C6, C7) (RTX) |

| #define | RTL_FLAG_CHECK8(NAME, RTX, C1, C2, C3, C4, C5, C6, C7, C8) (RTX) |

| #define | XINT(RTX, N) (RTL_CHECK2 (RTX, N, 'i', 'n').rt_int) |

| #define | XUINT(RTX, N) (RTL_CHECK2 (RTX, N, 'i', 'n').rt_uint) |

| #define | XSTR(RTX, N) (RTL_CHECK2 (RTX, N, 's', 'S').rt_str) |

| #define | XEXP(RTX, N) (RTL_CHECK2 (RTX, N, 'e', 'u').rt_rtx) |

| #define | XVEC(RTX, N) (RTL_CHECK2 (RTX, N, 'E', 'V').rt_rtvec) |

| #define | XMODE(RTX, N) (RTL_CHECK1 (RTX, N, 'M').rt_type) |

| #define | XTREE(RTX, N) (RTL_CHECK1 (RTX, N, 't').rt_tree) |

| #define | XBBDEF(RTX, N) (RTL_CHECK1 (RTX, N, 'B').rt_bb) |

| #define | XTMPL(RTX, N) (RTL_CHECK1 (RTX, N, 'T').rt_str) |

| #define | XCFI(RTX, N) (RTL_CHECK1 (RTX, N, 'C').rt_cfi) |

| #define | XVECEXP(RTX, N, M) RTVEC_ELT (XVEC (RTX, N), M) |

| #define | XVECLEN(RTX, N) GET_NUM_ELEM (XVEC (RTX, N)) |

| #define | X0INT(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_int) |

| #define | X0UINT(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_uint) |

| #define | X0STR(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_str) |

| #define | X0EXP(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_rtx) |

| #define | X0VEC(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_rtvec) |

| #define | X0MODE(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_type) |

| #define | X0TREE(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_tree) |

| #define | X0BBDEF(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_bb) |

| #define | X0ADVFLAGS(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_addr_diff_vec_flags) |

| #define | X0CSELIB(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_cselib) |

| #define | X0MEMATTR(RTX, N) (RTL_CHECKC1 (RTX, N, MEM).rt_mem) |

| #define | X0REGATTR(RTX, N) (RTL_CHECKC1 (RTX, N, REG).rt_reg) |

| #define | X0CONSTANT(RTX, N) (RTL_CHECK1 (RTX, N, '0').rt_constant) |

| #define | X0ANY(RTX, N) RTL_CHECK1 (RTX, N, '0') |

| #define | XCINT(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_int) |

| #define | XCUINT(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_uint) |

| #define | XCSTR(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_str) |

| #define | XCEXP(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_rtx) |

| #define | XCVEC(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_rtvec) |

| #define | XCMODE(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_type) |

| #define | XCTREE(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_tree) |

| #define | XCBBDEF(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_bb) |

| #define | XCCFI(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_cfi) |

| #define | XCCSELIB(RTX, N, C) (RTL_CHECKC1 (RTX, N, C).rt_cselib) |

| #define | XCVECEXP(RTX, N, M, C) RTVEC_ELT (XCVEC (RTX, N, C), M) |

| #define | XCVECLEN(RTX, N, C) GET_NUM_ELEM (XCVEC (RTX, N, C)) |

| #define | XC2EXP(RTX, N, C1, C2) (RTL_CHECKC2 (RTX, N, C1, C2).rt_rtx) |

| #define | INSN_UID(INSN) XINT (INSN, 0) |

| #define | PREV_INSN(INSN) XEXP (INSN, 1) |

| #define | NEXT_INSN(INSN) XEXP (INSN, 2) |

| #define | BLOCK_FOR_INSN(INSN) XBBDEF (INSN, 3) |

| #define | PATTERN(INSN) XEXP (INSN, 4) |

| #define | INSN_LOCATION(INSN) XUINT (INSN, 5) |

| #define | INSN_HAS_LOCATION(INSN) |

| #define | RTL_LOCATION(X) |

| #define | INSN_CODE(INSN) XINT (INSN, 6) |

| #define | RTX_FRAME_RELATED_P(RTX) |

| #define | INSN_DELETED_P(RTX) |

| #define | RTL_CONST_CALL_P(RTX) (RTL_FLAG_CHECK1 ("RTL_CONST_CALL_P", (RTX), CALL_INSN)->unchanging) |

| #define | RTL_PURE_CALL_P(RTX) (RTL_FLAG_CHECK1 ("RTL_PURE_CALL_P", (RTX), CALL_INSN)->return_val) |

| #define | RTL_CONST_OR_PURE_CALL_P(RTX) (RTL_CONST_CALL_P (RTX) || RTL_PURE_CALL_P (RTX)) |

| #define | RTL_LOOPING_CONST_OR_PURE_CALL_P(RTX) (RTL_FLAG_CHECK1 ("CONST_OR_PURE_CALL_P", (RTX), CALL_INSN)->call) |

| #define | SIBLING_CALL_P(RTX) (RTL_FLAG_CHECK1 ("SIBLING_CALL_P", (RTX), CALL_INSN)->jump) |

| #define | INSN_ANNULLED_BRANCH_P(RTX) (RTL_FLAG_CHECK1 ("INSN_ANNULLED_BRANCH_P", (RTX), JUMP_INSN)->unchanging) |

| #define | INSN_FROM_TARGET_P(RTX) |

| #define | ADDR_DIFF_VEC_FLAGS(RTX) X0ADVFLAGS (RTX, 4) |

| #define | CSELIB_VAL_PTR(RTX) X0CSELIB (RTX, 0) |

| #define | REG_NOTES(INSN) XEXP(INSN, 7) |

| #define | ENTRY_VALUE_EXP(RTX) (RTL_CHECKC1 (RTX, 0, ENTRY_VALUE).rt_rtx) |

| #define | DEF_REG_NOTE(NAME) NAME, |

| #define | REG_NOTE_KIND(LINK) ((enum reg_note) GET_MODE (LINK)) |

| #define | PUT_REG_NOTE_KIND(LINK, KIND) PUT_MODE (LINK, (enum machine_mode) (KIND)) |

| #define | GET_REG_NOTE_NAME(MODE) (reg_note_name[(int) (MODE)]) |

| #define | CALL_INSN_FUNCTION_USAGE(INSN) XEXP(INSN, 8) |

| #define | CODE_LABEL_NUMBER(INSN) XINT (INSN, 6) |

| #define | NOTE_DATA(INSN) RTL_CHECKC1 (INSN, 4, NOTE) |

| #define | NOTE_DELETED_LABEL_NAME(INSN) XCSTR (INSN, 4, NOTE) |

| #define | SET_INSN_DELETED(INSN) set_insn_deleted (INSN); |

| #define | NOTE_BLOCK(INSN) XCTREE (INSN, 4, NOTE) |

| #define | NOTE_EH_HANDLER(INSN) XCINT (INSN, 4, NOTE) |

| #define | NOTE_BASIC_BLOCK(INSN) XCBBDEF (INSN, 4, NOTE) |

| #define | NOTE_VAR_LOCATION(INSN) XCEXP (INSN, 4, NOTE) |

| #define | NOTE_CFI(INSN) XCCFI (INSN, 4, NOTE) |

| #define | NOTE_LABEL_NUMBER(INSN) XCINT (INSN, 4, NOTE) |

| #define | NOTE_KIND(INSN) XCINT (INSN, 5, NOTE) |

| #define | NOTE_INSN_BASIC_BLOCK_P(INSN) (NOTE_P (INSN) && NOTE_KIND (INSN) == NOTE_INSN_BASIC_BLOCK) |

| #define | PAT_VAR_LOCATION_DECL(PAT) (XCTREE ((PAT), 0, VAR_LOCATION)) |

| #define | PAT_VAR_LOCATION_LOC(PAT) (XCEXP ((PAT), 1, VAR_LOCATION)) |

| #define | PAT_VAR_LOCATION_STATUS(PAT) ((enum var_init_status) (XCINT ((PAT), 2, VAR_LOCATION))) |

| #define | NOTE_VAR_LOCATION_DECL(NOTE) PAT_VAR_LOCATION_DECL (NOTE_VAR_LOCATION (NOTE)) |

| #define | NOTE_VAR_LOCATION_LOC(NOTE) PAT_VAR_LOCATION_LOC (NOTE_VAR_LOCATION (NOTE)) |

| #define | NOTE_VAR_LOCATION_STATUS(NOTE) PAT_VAR_LOCATION_STATUS (NOTE_VAR_LOCATION (NOTE)) |

| #define | INSN_VAR_LOCATION(INSN) PATTERN (INSN) |

| #define | INSN_VAR_LOCATION_DECL(INSN) PAT_VAR_LOCATION_DECL (INSN_VAR_LOCATION (INSN)) |

| #define | INSN_VAR_LOCATION_LOC(INSN) PAT_VAR_LOCATION_LOC (INSN_VAR_LOCATION (INSN)) |

| #define | INSN_VAR_LOCATION_STATUS(INSN) PAT_VAR_LOCATION_STATUS (INSN_VAR_LOCATION (INSN)) |

| #define | gen_rtx_UNKNOWN_VAR_LOC() (gen_rtx_CLOBBER (VOIDmode, const0_rtx)) |

| #define | VAR_LOC_UNKNOWN_P(X) (GET_CODE (X) == CLOBBER && XEXP ((X), 0) == const0_rtx) |

| #define | NOTE_DURING_CALL_P(RTX) (RTL_FLAG_CHECK1 ("NOTE_VAR_LOCATION_DURING_CALL_P", (RTX), NOTE)->call) |

| #define | DEBUG_EXPR_TREE_DECL(RTX) XCTREE (RTX, 0, DEBUG_EXPR) |

| #define | DEBUG_IMPLICIT_PTR_DECL(RTX) XCTREE (RTX, 0, DEBUG_IMPLICIT_PTR) |

| #define | DEBUG_PARAMETER_REF_DECL(RTX) XCTREE (RTX, 0, DEBUG_PARAMETER_REF) |

| #define | DEF_INSN_NOTE(NAME) NAME, |

| #define | GET_NOTE_INSN_NAME(NOTE_CODE) (note_insn_name[(NOTE_CODE)]) |

| #define | LABEL_NAME(RTX) XCSTR (RTX, 7, CODE_LABEL) |

| #define | LABEL_NUSES(RTX) XCINT (RTX, 5, CODE_LABEL) |

| #define | LABEL_KIND(LABEL) ((enum label_kind) (((LABEL)->jump << 1) | (LABEL)->call)) |

| #define | SET_LABEL_KIND(LABEL, KIND) |

| #define | LABEL_ALT_ENTRY_P(LABEL) (LABEL_KIND (LABEL) != LABEL_NORMAL) |

| #define | JUMP_LABEL(INSN) XCEXP (INSN, 8, JUMP_INSN) |

| #define | LABEL_REFS(LABEL) XCEXP (LABEL, 4, CODE_LABEL) |

| #define | REGNO(RTX) (rhs_regno(RTX)) |

| #define | SET_REGNO(RTX, N) (df_ref_change_reg_with_loc (REGNO (RTX), N, RTX), XCUINT (RTX, 0, REG) = N) |

| #define | SET_REGNO_RAW(RTX, N) (XCUINT (RTX, 0, REG) = N) |

| #define | ORIGINAL_REGNO(RTX) X0UINT (RTX, 1) |

| #define | REG_FUNCTION_VALUE_P(RTX) (RTL_FLAG_CHECK2 ("REG_FUNCTION_VALUE_P", (RTX), REG, PARALLEL)->return_val) |

| #define | REG_USERVAR_P(RTX) (RTL_FLAG_CHECK1 ("REG_USERVAR_P", (RTX), REG)->volatil) |

| #define | REG_POINTER(RTX) (RTL_FLAG_CHECK1 ("REG_POINTER", (RTX), REG)->frame_related) |

| #define | MEM_POINTER(RTX) (RTL_FLAG_CHECK1 ("MEM_POINTER", (RTX), MEM)->frame_related) |

| #define | HARD_REGISTER_P(REG) (HARD_REGISTER_NUM_P (REGNO (REG))) |

| #define | HARD_REGISTER_NUM_P(REG_NO) ((REG_NO) < FIRST_PSEUDO_REGISTER) |

| #define | INTVAL(RTX) XCWINT (RTX, 0, CONST_INT) |

| #define | UINTVAL(RTX) ((unsigned HOST_WIDE_INT) INTVAL (RTX)) |

| #define | CONST_DOUBLE_LOW(r) XCMWINT (r, 0, CONST_DOUBLE, VOIDmode) |

| #define | CONST_DOUBLE_HIGH(r) XCMWINT (r, 1, CONST_DOUBLE, VOIDmode) |

| #define | CONST_DOUBLE_REAL_VALUE(r) ((const struct real_value *) XCNMPRV (r, CONST_DOUBLE, VOIDmode)) |

| #define | CONST_FIXED_VALUE(r) ((const struct fixed_value *) XCNMPFV (r, CONST_FIXED, VOIDmode)) |

| #define | CONST_FIXED_VALUE_HIGH(r) ((HOST_WIDE_INT) (CONST_FIXED_VALUE (r)->data.high)) |

| #define | CONST_FIXED_VALUE_LOW(r) ((HOST_WIDE_INT) (CONST_FIXED_VALUE (r)->data.low)) |

| #define | CONST_VECTOR_ELT(RTX, N) XCVECEXP (RTX, 0, N, CONST_VECTOR) |

| #define | CONST_VECTOR_NUNITS(RTX) XCVECLEN (RTX, 0, CONST_VECTOR) |

| #define | SUBREG_REG(RTX) XCEXP (RTX, 0, SUBREG) |

| #define | SUBREG_BYTE(RTX) XCUINT (RTX, 1, SUBREG) |

| #define | COSTS_N_INSNS(N) ((N) * 4) |

| #define | MAX_COST INT_MAX |

| #define | SUBREG_PROMOTED_VAR_P(RTX) (RTL_FLAG_CHECK1 ("SUBREG_PROMOTED", (RTX), SUBREG)->in_struct) |

| #define | SUBREG_PROMOTED_UNSIGNED_SET(RTX, VAL) |

| #define | SUBREG_PROMOTED_UNSIGNED_P(RTX) |

| #define | LRA_SUBREG_P(RTX) (RTL_FLAG_CHECK1 ("LRA_SUBREG_P", (RTX), SUBREG)->jump) |

| #define | ASM_OPERANDS_TEMPLATE(RTX) XCSTR (RTX, 0, ASM_OPERANDS) |

| #define | ASM_OPERANDS_OUTPUT_CONSTRAINT(RTX) XCSTR (RTX, 1, ASM_OPERANDS) |

| #define | ASM_OPERANDS_OUTPUT_IDX(RTX) XCINT (RTX, 2, ASM_OPERANDS) |

| #define | ASM_OPERANDS_INPUT_VEC(RTX) XCVEC (RTX, 3, ASM_OPERANDS) |

| #define | ASM_OPERANDS_INPUT_CONSTRAINT_VEC(RTX) XCVEC (RTX, 4, ASM_OPERANDS) |

| #define | ASM_OPERANDS_INPUT(RTX, N) XCVECEXP (RTX, 3, N, ASM_OPERANDS) |

| #define | ASM_OPERANDS_INPUT_LENGTH(RTX) XCVECLEN (RTX, 3, ASM_OPERANDS) |

| #define | ASM_OPERANDS_INPUT_CONSTRAINT_EXP(RTX, N) XCVECEXP (RTX, 4, N, ASM_OPERANDS) |

| #define | ASM_OPERANDS_INPUT_CONSTRAINT(RTX, N) XSTR (XCVECEXP (RTX, 4, N, ASM_OPERANDS), 0) |

| #define | ASM_OPERANDS_INPUT_MODE(RTX, N) GET_MODE (XCVECEXP (RTX, 4, N, ASM_OPERANDS)) |

| #define | ASM_OPERANDS_LABEL_VEC(RTX) XCVEC (RTX, 5, ASM_OPERANDS) |

| #define | ASM_OPERANDS_LABEL_LENGTH(RTX) XCVECLEN (RTX, 5, ASM_OPERANDS) |

| #define | ASM_OPERANDS_LABEL(RTX, N) XCVECEXP (RTX, 5, N, ASM_OPERANDS) |

| #define | ASM_OPERANDS_SOURCE_LOCATION(RTX) XCUINT (RTX, 6, ASM_OPERANDS) |

| #define | ASM_INPUT_SOURCE_LOCATION(RTX) XCUINT (RTX, 1, ASM_INPUT) |

| #define | MEM_READONLY_P(RTX) (RTL_FLAG_CHECK1 ("MEM_READONLY_P", (RTX), MEM)->unchanging) |

| #define | MEM_KEEP_ALIAS_SET_P(RTX) (RTL_FLAG_CHECK1 ("MEM_KEEP_ALIAS_SET_P", (RTX), MEM)->jump) |

| #define | MEM_VOLATILE_P(RTX) |

| #define | MEM_NOTRAP_P(RTX) (RTL_FLAG_CHECK1 ("MEM_NOTRAP_P", (RTX), MEM)->call) |

| #define | MEM_ATTRS(RTX) X0MEMATTR (RTX, 1) |

| #define | REG_ATTRS(RTX) X0REGATTR (RTX, 2) |

| #define | MEM_ALIAS_SET(RTX) (get_mem_attrs (RTX)->alias) |

| #define | MEM_EXPR(RTX) (get_mem_attrs (RTX)->expr) |

| #define | MEM_OFFSET_KNOWN_P(RTX) (get_mem_attrs (RTX)->offset_known_p) |

| #define | MEM_OFFSET(RTX) (get_mem_attrs (RTX)->offset) |

| #define | MEM_ADDR_SPACE(RTX) (get_mem_attrs (RTX)->addrspace) |

| #define | MEM_SIZE_KNOWN_P(RTX) (get_mem_attrs (RTX)->size_known_p) |

| #define | MEM_SIZE(RTX) (get_mem_attrs (RTX)->size) |

| #define | MEM_ALIGN(RTX) (get_mem_attrs (RTX)->align) |

| #define | REG_EXPR(RTX) (REG_ATTRS (RTX) == 0 ? 0 : REG_ATTRS (RTX)->decl) |

| #define | REG_OFFSET(RTX) (REG_ATTRS (RTX) == 0 ? 0 : REG_ATTRS (RTX)->offset) |

| #define | MEM_COPY_ATTRIBUTES(LHS, RHS) |

| #define | LABEL_REF_NONLOCAL_P(RTX) (RTL_FLAG_CHECK1 ("LABEL_REF_NONLOCAL_P", (RTX), LABEL_REF)->volatil) |

| #define | LABEL_PRESERVE_P(RTX) (RTL_FLAG_CHECK2 ("LABEL_PRESERVE_P", (RTX), CODE_LABEL, NOTE)->in_struct) |

| #define | SCHED_GROUP_P(RTX) |

| #define | SET_DEST(RTX) XC2EXP (RTX, 0, SET, CLOBBER) |

| #define | SET_SRC(RTX) XCEXP (RTX, 1, SET) |

| #define | SET_IS_RETURN_P(RTX) (RTL_FLAG_CHECK1 ("SET_IS_RETURN_P", (RTX), SET)->jump) |

| #define | TRAP_CONDITION(RTX) XCEXP (RTX, 0, TRAP_IF) |

| #define | TRAP_CODE(RTX) XCEXP (RTX, 1, TRAP_IF) |

| #define | COND_EXEC_TEST(RTX) XCEXP (RTX, 0, COND_EXEC) |

| #define | COND_EXEC_CODE(RTX) XCEXP (RTX, 1, COND_EXEC) |

| #define | CONSTANT_POOL_ADDRESS_P(RTX) (RTL_FLAG_CHECK1 ("CONSTANT_POOL_ADDRESS_P", (RTX), SYMBOL_REF)->unchanging) |

| #define | TREE_CONSTANT_POOL_ADDRESS_P(RTX) |

| #define | SYMBOL_REF_FLAG(RTX) (RTL_FLAG_CHECK1 ("SYMBOL_REF_FLAG", (RTX), SYMBOL_REF)->volatil) |

| #define | SYMBOL_REF_USED(RTX) (RTL_FLAG_CHECK1 ("SYMBOL_REF_USED", (RTX), SYMBOL_REF)->used) |

| #define | SYMBOL_REF_WEAK(RTX) (RTL_FLAG_CHECK1 ("SYMBOL_REF_WEAK", (RTX), SYMBOL_REF)->return_val) |

| #define | SYMBOL_REF_DATA(RTX) X0ANY ((RTX), 2) |

| #define | SET_SYMBOL_REF_DECL(RTX, DECL) (gcc_assert (!CONSTANT_POOL_ADDRESS_P (RTX)), X0TREE ((RTX), 2) = (DECL)) |

| #define | SYMBOL_REF_DECL(RTX) (CONSTANT_POOL_ADDRESS_P (RTX) ? NULL : X0TREE ((RTX), 2)) |

| #define | SET_SYMBOL_REF_CONSTANT(RTX, C) (gcc_assert (CONSTANT_POOL_ADDRESS_P (RTX)), X0CONSTANT ((RTX), 2) = (C)) |

| #define | SYMBOL_REF_CONSTANT(RTX) (CONSTANT_POOL_ADDRESS_P (RTX) ? X0CONSTANT ((RTX), 2) : NULL) |

| #define | SYMBOL_REF_FLAGS(RTX) X0INT ((RTX), 1) |

| #define | SYMBOL_FLAG_FUNCTION (1 << 0) |

| #define | SYMBOL_REF_FUNCTION_P(RTX) ((SYMBOL_REF_FLAGS (RTX) & SYMBOL_FLAG_FUNCTION) != 0) |

| #define | SYMBOL_FLAG_LOCAL (1 << 1) |

| #define | SYMBOL_REF_LOCAL_P(RTX) ((SYMBOL_REF_FLAGS (RTX) & SYMBOL_FLAG_LOCAL) != 0) |

| #define | SYMBOL_FLAG_SMALL (1 << 2) |

| #define | SYMBOL_REF_SMALL_P(RTX) ((SYMBOL_REF_FLAGS (RTX) & SYMBOL_FLAG_SMALL) != 0) |

| #define | SYMBOL_FLAG_TLS_SHIFT 3 |

| #define | SYMBOL_REF_TLS_MODEL(RTX) ((enum tls_model) ((SYMBOL_REF_FLAGS (RTX) >> SYMBOL_FLAG_TLS_SHIFT) & 7)) |

| #define | SYMBOL_FLAG_EXTERNAL (1 << 6) |

| #define | SYMBOL_REF_EXTERNAL_P(RTX) ((SYMBOL_REF_FLAGS (RTX) & SYMBOL_FLAG_EXTERNAL) != 0) |

| #define | SYMBOL_FLAG_HAS_BLOCK_INFO (1 << 7) |

| #define | SYMBOL_REF_HAS_BLOCK_INFO_P(RTX) ((SYMBOL_REF_FLAGS (RTX) & SYMBOL_FLAG_HAS_BLOCK_INFO) != 0) |

| #define | SYMBOL_FLAG_ANCHOR (1 << 8) |

| #define | SYMBOL_REF_ANCHOR_P(RTX) ((SYMBOL_REF_FLAGS (RTX) & SYMBOL_FLAG_ANCHOR) != 0) |

| #define | SYMBOL_FLAG_MACH_DEP_SHIFT 9 |

| #define | SYMBOL_FLAG_MACH_DEP (1 << SYMBOL_FLAG_MACH_DEP_SHIFT) |

| #define | SYMBOL_REF_BLOCK(RTX) (BLOCK_SYMBOL_CHECK (RTX)->block) |

| #define | SYMBOL_REF_BLOCK_OFFSET(RTX) (BLOCK_SYMBOL_CHECK (RTX)->offset) |

| #define | PREFETCH_SCHEDULE_BARRIER_P(RTX) (RTL_FLAG_CHECK1 ("PREFETCH_SCHEDULE_BARRIER_P", (RTX), PREFETCH)->volatil) |

| #define | FIND_REG_INC_NOTE(INSN, REG) 0 |

| #define | HAVE_PRE_INCREMENT 0 |

| #define | HAVE_PRE_DECREMENT 0 |

| #define | HAVE_POST_INCREMENT 0 |

| #define | HAVE_POST_DECREMENT 0 |

| #define | HAVE_POST_MODIFY_DISP 0 |

| #define | HAVE_POST_MODIFY_REG 0 |

| #define | HAVE_PRE_MODIFY_DISP 0 |

| #define | HAVE_PRE_MODIFY_REG 0 |

| #define | USE_LOAD_POST_INCREMENT(MODE) HAVE_POST_INCREMENT |

| #define | USE_LOAD_POST_DECREMENT(MODE) HAVE_POST_DECREMENT |

| #define | USE_LOAD_PRE_INCREMENT(MODE) HAVE_PRE_INCREMENT |

| #define | USE_LOAD_PRE_DECREMENT(MODE) HAVE_PRE_DECREMENT |

| #define | USE_STORE_POST_INCREMENT(MODE) HAVE_POST_INCREMENT |

| #define | USE_STORE_POST_DECREMENT(MODE) HAVE_POST_DECREMENT |

| #define | USE_STORE_PRE_INCREMENT(MODE) HAVE_PRE_INCREMENT |

| #define | USE_STORE_PRE_DECREMENT(MODE) HAVE_PRE_DECREMENT |

| #define | rtx_alloc(c) rtx_alloc_stat (c MEM_STAT_INFO) |

| #define | shallow_copy_rtx(a) shallow_copy_rtx_stat (a MEM_STAT_INFO) |

| #define | convert_memory_address(to_mode, x) convert_memory_address_addr_space ((to_mode), (x), ADDR_SPACE_GENERIC) |

| #define | ASLK_REDUCE_ALIGN 1 |

| #define | ASLK_RECORD_PAD 2 |

| #define | single_set(I) |

| #define | single_set_1(I) single_set_2 (I, PATTERN (I)) |

| #define | MAX_SAVED_CONST_INT 64 |

| #define | const0_rtx (const_int_rtx[MAX_SAVED_CONST_INT]) |

| #define | const1_rtx (const_int_rtx[MAX_SAVED_CONST_INT+1]) |

| #define | const2_rtx (const_int_rtx[MAX_SAVED_CONST_INT+2]) |

| #define | constm1_rtx (const_int_rtx[MAX_SAVED_CONST_INT-1]) |

| #define | CONST0_RTX(MODE) (const_tiny_rtx[0][(int) (MODE)]) |

| #define | CONST1_RTX(MODE) (const_tiny_rtx[1][(int) (MODE)]) |

| #define | CONST2_RTX(MODE) (const_tiny_rtx[2][(int) (MODE)]) |

| #define | CONSTM1_RTX(MODE) (const_tiny_rtx[3][(int) (MODE)]) |

| #define | HARD_FRAME_POINTER_REGNUM FRAME_POINTER_REGNUM |

| #define | HARD_FRAME_POINTER_IS_FRAME_POINTER (HARD_FRAME_POINTER_REGNUM == FRAME_POINTER_REGNUM) |

| #define | HARD_FRAME_POINTER_IS_ARG_POINTER (HARD_FRAME_POINTER_REGNUM == ARG_POINTER_REGNUM) |

| #define | this_target_rtl (&default_target_rtl) |

| #define | global_rtl (this_target_rtl->x_global_rtl) |

| #define | pic_offset_table_rtx (this_target_rtl->x_pic_offset_table_rtx) |

| #define | return_address_pointer_rtx (this_target_rtl->x_return_address_pointer_rtx) |

| #define | top_of_stack (this_target_rtl->x_top_of_stack) |

| #define | mode_mem_attrs (this_target_rtl->x_mode_mem_attrs) |

| #define | stack_pointer_rtx (global_rtl[GR_STACK_POINTER]) |

| #define | frame_pointer_rtx (global_rtl[GR_FRAME_POINTER]) |

| #define | hard_frame_pointer_rtx (global_rtl[GR_HARD_FRAME_POINTER]) |

| #define | arg_pointer_rtx (global_rtl[GR_ARG_POINTER]) |

| #define | gen_rtx_ASM_INPUT(MODE, ARG0) gen_rtx_fmt_si (ASM_INPUT, (MODE), (ARG0), 0) |

| #define | gen_rtx_ASM_INPUT_loc(MODE, ARG0, LOC) gen_rtx_fmt_si (ASM_INPUT, (MODE), (ARG0), (LOC)) |

| #define | GEN_INT(N) gen_rtx_CONST_INT (VOIDmode, (N)) |

| #define | FIRST_VIRTUAL_REGISTER (FIRST_PSEUDO_REGISTER) |

| #define | virtual_incoming_args_rtx (global_rtl[GR_VIRTUAL_INCOMING_ARGS]) |

| #define | VIRTUAL_INCOMING_ARGS_REGNUM (FIRST_VIRTUAL_REGISTER) |

| #define | virtual_stack_vars_rtx (global_rtl[GR_VIRTUAL_STACK_ARGS]) |

| #define | VIRTUAL_STACK_VARS_REGNUM ((FIRST_VIRTUAL_REGISTER) + 1) |

| #define | virtual_stack_dynamic_rtx (global_rtl[GR_VIRTUAL_STACK_DYNAMIC]) |

| #define | VIRTUAL_STACK_DYNAMIC_REGNUM ((FIRST_VIRTUAL_REGISTER) + 2) |

| #define | virtual_outgoing_args_rtx (global_rtl[GR_VIRTUAL_OUTGOING_ARGS]) |

| #define | VIRTUAL_OUTGOING_ARGS_REGNUM ((FIRST_VIRTUAL_REGISTER) + 3) |

| #define | virtual_cfa_rtx (global_rtl[GR_VIRTUAL_CFA]) |

| #define | VIRTUAL_CFA_REGNUM ((FIRST_VIRTUAL_REGISTER) + 4) |

| #define | LAST_VIRTUAL_POINTER_REGISTER ((FIRST_VIRTUAL_REGISTER) + 4) |

| #define | virtual_preferred_stack_boundary_rtx (global_rtl[GR_VIRTUAL_PREFERRED_STACK_BOUNDARY]) |

| #define | VIRTUAL_PREFERRED_STACK_BOUNDARY_REGNUM ((FIRST_VIRTUAL_REGISTER) + 5) |

| #define | LAST_VIRTUAL_REGISTER ((FIRST_VIRTUAL_REGISTER) + 5) |

| #define | REGNO_PTR_FRAME_P(REGNUM) |

| #define | INVALID_REGNUM (~(unsigned int) 0) |

| #define | IGNORED_DWARF_REGNUM (INVALID_REGNUM - 1) |

| #define | can_create_pseudo_p() (!reload_in_progress && !reload_completed) |

| #define | gen_lowpart rtl_hooks.gen_lowpart |

| #define | fatal_insn(msgid, insn) _fatal_insn (msgid, insn, __FILE__, __LINE__, __FUNCTION__) |

| #define | fatal_insn_not_found(insn) _fatal_insn_not_found (insn, __FILE__, __LINE__, __FUNCTION__) |
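The SYMBOL_FLAG_* macros in the table above pack several per-symbol properties into the single int returned by SYMBOL_REF_FLAGS: single-bit flags for function, local, small, external, block-info and anchor symbols, a 3-bit TLS model field starting at SYMBOL_FLAG_TLS_SHIFT, and target-specific bits from SYMBOL_FLAG_MACH_DEP_SHIFT upward. The sketch below reproduces those constants and works on a plain int rather than a real SYMBOL_REF rtx; the helper names sym_flags_set_tls, sym_flags_tls and sym_flags_local_p are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Bit layout copied from the SYMBOL_FLAG_* macros in the table above. */
#define SYMBOL_FLAG_FUNCTION       (1 << 0)
#define SYMBOL_FLAG_LOCAL          (1 << 1)
#define SYMBOL_FLAG_SMALL          (1 << 2)
#define SYMBOL_FLAG_TLS_SHIFT      3          /* bits 3..5 hold the TLS model */
#define SYMBOL_FLAG_EXTERNAL       (1 << 6)
#define SYMBOL_FLAG_HAS_BLOCK_INFO (1 << 7)
#define SYMBOL_FLAG_ANCHOR         (1 << 8)
#define SYMBOL_FLAG_MACH_DEP_SHIFT 9          /* target-specific bits start here */

/* Hypothetical helper: store a 3-bit TLS model, keeping all other bits. */
static int sym_flags_set_tls (int flags, int model)
{
  assert (model >= 0 && model <= 7);
  flags &= ~(7 << SYMBOL_FLAG_TLS_SHIFT);     /* clear bits 3..5 first */
  return flags | (model << SYMBOL_FLAG_TLS_SHIFT);
}

/* Same extraction as SYMBOL_REF_TLS_MODEL, without the enum cast. */
static int sym_flags_tls (int flags)
{
  return (flags >> SYMBOL_FLAG_TLS_SHIFT) & 7;
}

/* Same test as SYMBOL_REF_LOCAL_P. */
static bool sym_flags_local_p (int flags)
{
  return (flags & SYMBOL_FLAG_LOCAL) != 0;
}
```

Because the TLS model occupies bits 3 to 5, replacing it requires clearing that field first; OR-ing a new model over an old one would mix the two values.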

Typedefs

| typedef struct mem_attrs | mem_attrs |

| typedef struct reg_attrs | reg_attrs |

| typedef union rtunion_def | rtunion |

| typedef struct replace_label_data | replace_label_data |

| typedef int(* | rtx_function )(rtx *, void *) |

| typedef int(* | for_each_inc_dec_fn )(rtx mem, rtx op, rtx dest, rtx src, rtx srcoff, void *arg) |

| typedef int(* | rtx_equal_p_callback_function )(const_rtx *, const_rtx *, rtx *, rtx *) |

| typedef int(* | hash_rtx_callback_function )(const_rtx, enum machine_mode, rtx *, enum machine_mode *) |

Enumerations

| enum | rtx_code { DEF_RTL_EXPR, DEF_RTL_EXPR } |

| enum | rtx_class { RTX_COMPARE, RTX_COMM_COMPARE, RTX_BIN_ARITH, RTX_COMM_ARITH, RTX_UNARY, RTX_EXTRA, RTX_MATCH, RTX_INSN, RTX_OBJ, RTX_CONST_OBJ, RTX_TERNARY, RTX_BITFIELD_OPS, RTX_AUTOINC } |

| enum | reg_note { REG_NOTE, REG_NOTE } |

| enum | insn_note { INSN_NOTE, INSN_NOTE } |

| enum | label_kind { LABEL_NORMAL = 0, LABEL_STATIC_ENTRY, LABEL_GLOBAL_ENTRY, LABEL_WEAK_ENTRY } |

| enum | global_rtl_index { GR_STACK_POINTER, GR_FRAME_POINTER, GR_ARG_POINTER = GR_FRAME_POINTER, GR_HARD_FRAME_POINTER = GR_FRAME_POINTER, GR_VIRTUAL_INCOMING_ARGS, GR_VIRTUAL_STACK_ARGS, GR_VIRTUAL_STACK_DYNAMIC, GR_VIRTUAL_OUTGOING_ARGS, GR_VIRTUAL_CFA, GR_VIRTUAL_PREFERRED_STACK_BOUNDARY, GR_MAX } |

| enum | libcall_type { LCT_NORMAL = 0, LCT_CONST = 1, LCT_PURE = 2, LCT_NORETURN = 3, LCT_THROW = 4, LCT_RETURNS_TWICE = 5 } |

Variables

| const unsigned char | rtx_length [NUM_RTX_CODE] |

| const char *const | rtx_name [NUM_RTX_CODE] |

| const char *const | rtx_format [NUM_RTX_CODE] |

| enum rtx_class | rtx_class [NUM_RTX_CODE] |

| const unsigned char | rtx_code_size [NUM_RTX_CODE] |

| const unsigned char | rtx_next [NUM_RTX_CODE] |

| const char *const | reg_note_name [] |

| const char *const | note_insn_name [NOTE_INSN_MAX] |

| int | generating_concat_p |

| int | currently_expanding_to_rtl |

| location_t | prologue_location |

| location_t | epilogue_location |

| int | split_branch_probability |

| rtx | const_int_rtx [MAX_SAVED_CONST_INT *2+1] |

| rtx | const_true_rtx |

| rtx | const_tiny_rtx [4][(int) MAX_MACHINE_MODE] |

| rtx | pc_rtx |

| rtx | cc0_rtx |

| rtx | ret_rtx |

| rtx | simple_return_rtx |

| struct target_rtl | default_target_rtl |

| int | reload_completed |

| int | epilogue_completed |

| int | reload_in_progress |

| int | lra_in_progress |

| int | cse_not_expected |

| const char * | print_rtx_head |

| rtx | stack_limit_rtx |

| struct rtl_hooks | rtl_hooks |

| struct rtl_hooks | general_rtl_hooks |

Macro Definition Documentation

| #define ADDR_DIFF_VEC_FLAGS | ( | RTX | ) | X0ADVFLAGS (RTX, 4) |

In an ADDR_DIFF_VEC, the flags for RTX for use by branch shortening. See the comments for ADDR_DIFF_VEC in rtl.def.

| #define ANY_RETURN_P | ( | X | ) | (GET_CODE (X) == RETURN || GET_CODE (X) == SIMPLE_RETURN) |

Predicate yielding nonzero iff X is a return or simple_return.

Referenced by replace_rtx(), and return_insn_p().

| #define arg_pointer_rtx (global_rtl[GR_ARG_POINTER]) |

| #define ARITHMETIC_P | ( | X | ) |

1 if X is an arithmetic operator.

| #define ASLK_RECORD_PAD 2 |

| #define ASLK_REDUCE_ALIGN 1 |

| #define ASM_INPUT_SOURCE_LOCATION | ( | RTX | ) | XCUINT (RTX, 1, ASM_INPUT) |

Referenced by find_comparison_args().

| #define ASM_OPERANDS_INPUT_CONSTRAINT_VEC | ( | RTX | ) | XCVEC (RTX, 4, ASM_OPERANDS) |

| #define ASM_OPERANDS_INPUT_LENGTH | ( | RTX | ) | XCVECLEN (RTX, 3, ASM_OPERANDS) |

Referenced by find_comparison_args().

| #define ASM_OPERANDS_INPUT_VEC | ( | RTX | ) | XCVEC (RTX, 3, ASM_OPERANDS) |

Referenced by ordered_comparison_operator().

Referenced by emit_barrier_after_bb().

| #define ASM_OPERANDS_LABEL_LENGTH | ( | RTX | ) | XCVECLEN (RTX, 5, ASM_OPERANDS) |

| #define ASM_OPERANDS_LABEL_VEC | ( | RTX | ) | XCVEC (RTX, 5, ASM_OPERANDS) |

| #define ASM_OPERANDS_OUTPUT_CONSTRAINT | ( | RTX | ) | XCSTR (RTX, 1, ASM_OPERANDS) |

Referenced by extract_asm_operands().

| #define ASM_OPERANDS_OUTPUT_IDX | ( | RTX | ) | XCINT (RTX, 2, ASM_OPERANDS) |

| #define ASM_OPERANDS_SOURCE_LOCATION | ( | RTX | ) | XCUINT (RTX, 6, ASM_OPERANDS) |

Referenced by diagnostic_for_asm().

| #define ASM_OPERANDS_TEMPLATE | ( | RTX | ) | XCSTR (RTX, 0, ASM_OPERANDS) |

Access various components of an ASM_OPERANDS rtx.
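With RTL checking disabled, the accessors in the macro table reduce to plain slot reads (RTL_CHECK2 expands to (RTX)->u.fld[N]), so each ASM_OPERANDS_* macro is just a named operand number plus a union member. The sketch below models that with a hypothetical toy_rtx type; only the slot numbers (0 for the template, 1 for the output constraint, 2 for the output index) come from the macros above, and the vector-valued operands are left out.

```c
#include <assert.h>

/* Toy stand-in for rtunion: one operand slot, read through one member. */
typedef union toy_rtunion {
  int rt_int;
  const char *rt_str;
} toy_rtunion;

/* Toy stand-in for rtx_def: a code plus operand slots u.fld[0..6]. */
typedef struct toy_rtx {
  int code;                          /* stands in for the rtx code */
  union { toy_rtunion fld[7]; } u;   /* operand slots, as in rtx_def::u */
} toy_rtx;

/* Non-checking accessors in the style of XINT/XSTR. */
#define TOY_XINT(RTX, N) ((RTX)->u.fld[N].rt_int)
#define TOY_XSTR(RTX, N) ((RTX)->u.fld[N].rt_str)

/* Slot numbers taken from ASM_OPERANDS_TEMPLATE, _OUTPUT_CONSTRAINT
   and _OUTPUT_IDX. */
#define TOY_ASM_TEMPLATE(RTX)          TOY_XSTR (RTX, 0)
#define TOY_ASM_OUTPUT_CONSTRAINT(RTX) TOY_XSTR (RTX, 1)
#define TOY_ASM_OUTPUT_IDX(RTX)        TOY_XINT (RTX, 2)

/* Hypothetical constructor: fill the scalar slots of a toy ASM_OPERANDS.
   The accessors are lvalues, so they can be assigned through. */
static void toy_init_asm (toy_rtx *x, const char *templ,
                          const char *out_constraint, int out_idx)
{
  assert (x != 0);
  TOY_ASM_TEMPLATE (x) = templ;
  TOY_ASM_OUTPUT_CONSTRAINT (x) = out_constraint;
  TOY_ASM_OUTPUT_IDX (x) = out_idx;
}
```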

| #define BARRIER_P | ( | X | ) | (GET_CODE (X) == BARRIER) |

Predicate yielding nonzero iff X is a barrier insn.

Referenced by alter_reg(), block_label(), create_cfi_notes(), make_pass_free_cfg(), merge_blocks_move_predecessor_nojumps(), rebuild_jump_labels_chain(), and rtl_verify_bb_pointers().

| #define BINARY_P | ( | X | ) | ((GET_RTX_CLASS (GET_CODE (X)) & RTX_BINARY_MASK) == RTX_BINARY_RESULT) |

1 if X is a binary operator.

| #define BLOCK_FOR_INSN | ( | INSN | ) | XBBDEF (INSN, 3) |

Referenced by alloc_cprop_mem(), check_dependency(), delete_insn(), df_dump_insn_bottom(), emit_call_insn_after_setloc(), find_moveable_store(), free_gcse_mem(), init_resource_info(), inner_loop_header_p(), insert_insn_end_basic_block(), insert_store(), ira_reassign_pseudos(), mark_label_nuses(), may_assign_reg_p(), move2add_use_add3_insn(), move2add_valid_value_p(), one_pre_gcse_pass(), pre_edge_insert(), process_reg_shuffles(), reload_combine_closest_single_use(), remove_predictions_associated_with_edge(), rtl_delete_block(), saved_hard_reg_compare_func(), store_killed_before(), and try_replace_reg().

| #define BLOCK_SYMBOL_CHECK | ( | RTX | ) | (&(RTX)->u.block_sym) |

| #define CALL_INSN_FUNCTION_USAGE | ( | INSN | ) | XEXP(INSN, 8) |

This field is only present on CALL_INSNs. It holds a chain of EXPR_LISTs whose elements are USE and CLOBBER expressions. USE expressions list the registers filled with arguments that are passed to the function. CLOBBER expressions document the registers explicitly clobbered by this CALL_INSN. Pseudo registers cannot be mentioned in this list.

Referenced by check_argument_store(), find_reg_note(), gen_const_vector(), make_note_raw(), merge_dir(), remove_pseudos(), and reverse_op().
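A chain like CALL_INSN_FUNCTION_USAGE is walked link by link: in GCC each EXPR_LIST link keeps its element in XEXP (link, 0) and the rest of the chain in XEXP (link, 1). The sketch below replaces the rtx types with a hypothetical toy_link struct and an assumed TOY_FIRST_PSEUDO_REGISTER of 16 (the real FIRST_PSEUDO_REGISTER is target-defined), counts the argument USEs, and asserts the rule that no pseudo register appears.

```c
#include <assert.h>
#include <stddef.h>

enum toy_code { TOY_USE, TOY_CLOBBER };

/* Assumed value for illustration; the real one depends on the target. */
#define TOY_FIRST_PSEUDO_REGISTER 16

/* One EXPR_LIST link: the USE/CLOBBER element plus the next link. */
struct toy_link {
  enum toy_code code;      /* USE or CLOBBER (element, XEXP (link, 0)) */
  unsigned regno;          /* register mentioned by that element */
  struct toy_link *next;   /* rest of the chain (XEXP (link, 1)) */
};

/* Count the argument registers (USEs); a pseudo register in the list
   violates the documented rule and trips the assertion. */
static int count_arg_regs (const struct toy_link *usage)
{
  int n = 0;
  for (const struct toy_link *l = usage; l != NULL; l = l->next)
    {
      assert (l->regno < TOY_FIRST_PSEUDO_REGISTER);
      if (l->code == TOY_USE)
        n++;
    }
  return n;
}
```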

| #define CALL_P | ( | X | ) | (GET_CODE (X) == CALL_INSN) |

Predicate yielding nonzero iff X is a call insn.

Referenced by can_throw_external(), cheap_bb_rtx_cost_p(), check_argument_store(), default_fixed_point_supported_p(), df_get_exit_block_use_set(), do_warn_unused_parameter(), emit_move_insn(), expand_builtin_longjmp(), expand_copysign_bit(), find_reg_note(), force_move_args_size_note(), fprint_whex(), get_eh_region_from_rtx(), get_last_insertion_point(), get_last_value_validate(), make_note_raw(), merge_identical_invariants(), next_active_insn(), process_bb_node_lives(), record_entry_value(), remove_pseudos(), remove_unreachable_eh_regions(), saved_hard_reg_compare_func(), sjlj_assign_call_site_values(), and store_killed_in_pat().
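The insn-chain predicates combine as follows: INSN_P accepts ordinary, debug, jump and call insns, NONDEBUG_INSN_P then drops debug insns, and labels, notes and barriers sit in the same chain without being insns in that sense. The sketch below reuses those macro definitions over a hypothetical toy_insn list linked through a next field, which stands in for NEXT_INSN; the enum values are arbitrary.

```c
#include <assert.h>
#include <stddef.h>

/* Toy subset of insn-chain codes; values are arbitrary. */
enum toy_code { INSN, JUMP_INSN, CALL_INSN, DEBUG_INSN,
                CODE_LABEL, NOTE, BARRIER };

struct toy_insn { enum toy_code code; struct toy_insn *next; };

/* Predicate definitions in the same shape as the macro table. */
#define GET_CODE(X)        ((X)->code)
#define NONJUMP_INSN_P(X)  (GET_CODE (X) == INSN)
#define JUMP_P(X)          (GET_CODE (X) == JUMP_INSN)
#define CALL_P(X)          (GET_CODE (X) == CALL_INSN)
#define DEBUG_INSN_P(X)    (GET_CODE (X) == DEBUG_INSN)
#define BARRIER_P(X)       (GET_CODE (X) == BARRIER)
#define INSN_P(X) \
  (NONJUMP_INSN_P (X) || DEBUG_INSN_P (X) || JUMP_P (X) || CALL_P (X))
#define NONDEBUG_INSN_P(X) (INSN_P (X) && !DEBUG_INSN_P (X))

/* Count real instructions: labels, notes, barriers and debug insns
   are skipped by the NONDEBUG_INSN_P filter. */
static int count_nondebug_insns (const struct toy_insn *first)
{
  int n = 0;
  for (const struct toy_insn *i = first; i != NULL; i = i->next)
    if (NONDEBUG_INSN_P (i))
      n++;
  return n;
}
```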

| #define can_create_pseudo_p | ( | ) | (!reload_in_progress && !reload_completed) |

This macro indicates whether you may create a new pseudo-register.
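Following the definition in the macro table, new pseudos are allowed only before reload starts. Expanders commonly branch on this check to pick either a fresh pseudo or a register they already have; the sketch below shows that pattern with stand-in globals and a hypothetical pick_temp_reg helper that returns register numbers instead of rtxes.

```c
#include <assert.h>

/* Stand-ins for the pass-state globals declared in rtl.h. */
static int reload_in_progress;
static int reload_completed;

/* Definition copied from the macro table. */
#define can_create_pseudo_p() (!reload_in_progress && !reload_completed)

/* Hypothetical helper: prefer a fresh pseudo while that is allowed,
   otherwise fall back to a register the caller already owns. */
static int pick_temp_reg (int next_pseudo, int fallback_reg)
{
  return can_create_pseudo_p () ? next_pseudo : fallback_reg;
}
```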

| #define CASE_CONST_ANY |

Match all CONST_* rtxes.

Referenced by equiv_init_varies_p(), find_comparison_args(), gen_clobber(), get_elimination(), invariant_for_use(), remove_note(), rtx_unstable_p(), rtx_varies_p(), set_label_offsets(), shared_const_p(), target_canonicalize_comparison(), and volatile_refs_p().

| #define CASE_CONST_SCALAR_INT |

Match CONST_*s that can represent compile-time constant integers.

Referenced by convert_memory_address_addr_space().

| #define CASE_CONST_UNIQUE |

Match CONST_*s for which pointer equality corresponds to value equality.

Referenced by hard_reg_set_here_p(), invert_jump(), and rtx_equal_p_cb().

| #define CODE_LABEL_NUMBER | ( | INSN | ) | XINT (INSN, 6) |

The label-number of a code-label. The assembler label is made from `L' and the label-number printed in decimal. Label numbers are unique in a compilation.

Referenced by alter_reg(), default_jump_align_max_skip(), default_loop_align_max_skip(), and premark_types_used_by_global_vars_helper().

| #define COMMUTATIVE_ARITH_P | ( | X | ) | (GET_RTX_CLASS (GET_CODE (X)) == RTX_COMM_ARITH) |

1 if X is a commutative arithmetic operator.

| #define COMMUTATIVE_P | ( | X | ) |

1 if X is a commutative operator, that is, its rtx class is RTX_COMM_COMPARE or RTX_COMM_ARITH.

Referenced by default_hidden_stack_protect_fail(), and validate_change().

| #define COMPARISON_P | ( | X | ) | ((GET_RTX_CLASS (GET_CODE (X)) & RTX_COMPARE_MASK) == RTX_COMPARE_RESULT) |

1 if X is a relational operator.

Referenced by canon_condition(), and simplify_set().

| #define COND_EXEC_CODE | ( | RTX | ) | XCEXP (RTX, 1, COND_EXEC) |

Referenced by adjust_mem_uses(), multiple_sets(), and reg_overlap_mentioned_p().

| #define COND_EXEC_TEST | ( | RTX | ) | XCEXP (RTX, 0, COND_EXEC) |

For a COND_EXEC rtx, COND_EXEC_TEST is the condition on which execution of the code depends, and COND_EXEC_CODE is the code to execute if that condition is true.

| #define const0_rtx (const_int_rtx[MAX_SAVED_CONST_INT]) |

Referenced by analyze_insn_to_expand_var(), can_store_by_pieces(), canon_condition(), cfg_layout_can_merge_blocks_p(), do_compare_rtx_and_jump(), do_jump_by_parts_zero_rtx(), emit_cmp_and_jump_insn_1(), end_ifcvt_sequence(), expand_atomic_compare_and_swap(), expand_builtin_longjmp(), expand_builtin_mempcpy_args(), expand_builtin_memset_args(), expand_builtin_strcpy_args(), expand_builtin_strncmp(), expand_call(), expand_copysign(), expand_gimple_stmt(), expand_mem_signal_fence(), expand_mult_highpart_adjust(), expand_sync_lock_test_and_set(), final_start_function(), find_shift_sequence(), get_frame_arg(), get_memmodel(), have_sub2_insn(), highest_pow2_factor_for_target(), maybe_emit_unop_insn(), move2add_use_add3_insn(), move2add_valid_value_p(), noce_try_addcc(), operands_match_p(), promote_decl_mode(), reg_num_sign_bit_copies_for_combine(), remove_eh_handler(), set_label_offsets(), simplify_relational_operation_1(), simplify_using_condition(), simplify_while_replacing(), and subst().

| #define CONST0_RTX | ( | MODE | ) | (const_tiny_rtx[0][(int) (MODE)]) |

Returns a constant 0 rtx in mode MODE. Integer modes are treated the same as VOIDmode.

Referenced by clear_by_pieces(), count_type_elements(), do_compare_rtx_and_jump(), expand_doubleword_shift(), expand_vector_broadcast(), fold_rtx(), highest_pow2_factor_for_target(), simplify_byte_swapping_operation(), and split_iv().

| #define const1_rtx (const_int_rtx[MAX_SAVED_CONST_INT+1]) |

| #define CONST1_RTX | ( | MODE | ) | (const_tiny_rtx[1][(int) (MODE)]) |

Likewise, for the constants 1, 2, and -1.

Referenced by split_iv().

| #define const2_rtx (const_int_rtx[MAX_SAVED_CONST_INT+2]) |

| #define CONST2_RTX | ( | MODE | ) | (const_tiny_rtx[2][(int) (MODE)]) |

| #define CONST_DOUBLE_AS_FLOAT_P | ( | X | ) |

Predicate yielding true iff X is an rtx for a floating-point constant.

Referenced by const_desc_rtx_eq(), decode_asm_operands(), process_alt_operands(), and simplify_relational_operation_1().

| #define CONST_DOUBLE_AS_INT_P | ( | X | ) |

Predicate yielding true iff X is an rtx for a double-int.

Referenced by simplify_relational_operation_1().

| #define CONST_DOUBLE_HIGH | ( | r | ) | XCMWINT (r, 1, CONST_DOUBLE, VOIDmode) |

Referenced by print_value(), and simplify_relational_operation_1().

| #define CONST_DOUBLE_LOW | ( | r | ) | XCMWINT (r, 0, CONST_DOUBLE, VOIDmode) |

For a CONST_DOUBLE in VOIDmode, there are two integers: CONST_DOUBLE_LOW is the low-order word and CONST_DOUBLE_HIGH the high-order word. For a floating-point CONST_DOUBLE, there is a REAL_VALUE_TYPE structure, and CONST_DOUBLE_REAL_VALUE (r) is a pointer to it.

Referenced by print_value(), and simplify_relational_operation_1().

| #define CONST_DOUBLE_P | ( | X | ) | (GET_CODE (X) == CONST_DOUBLE) |

Predicate yielding true iff X is an rtx for a double-int or floating point constant.

| #define CONST_DOUBLE_REAL_VALUE | ( | r | ) | ((const struct real_value *) XCNMPRV (r, CONST_DOUBLE, VOIDmode)) |

Referenced by print_value().

| #define CONST_FIXED_P | ( | X | ) | (GET_CODE (X) == CONST_FIXED) |

Predicate yielding nonzero iff X is an rtx for a constant fixed-point.

| #define CONST_FIXED_VALUE | ( | r | ) | ((const struct fixed_value *) XCNMPFV (r, CONST_FIXED, VOIDmode)) |

Referenced by const_double_htab_eq(), and print_value().

| #define CONST_FIXED_VALUE_HIGH | ( | r | ) | ((HOST_WIDE_INT) (CONST_FIXED_VALUE (r)->data.high)) |

| #define CONST_FIXED_VALUE_LOW | ( | r | ) | ((HOST_WIDE_INT) (CONST_FIXED_VALUE (r)->data.low)) |

| #define CONST_INT_P | ( | X | ) | (GET_CODE (X) == CONST_INT) |

Predicate yielding nonzero iff X is an rtx for a constant integer.

Referenced by compress_float_constant(), convert_memory_address_addr_space(), convert_modes(), end_ifcvt_sequence(), expand_mult(), find_call_stack_args(), find_shift_sequence(), find_single_use(), get_call_rtx_from(), make_extraction(), make_memloc(), move2add_use_add3_insn(), move_by_pieces_1(), offset_within_block_p(), operands_match_p(), output_asm_operand_names(), process_alt_operands(), promote_decl_mode(), register_operand(), remove_reg_equal_offset_note(), rtx_equal_for_memref_p(), set_label_offsets(), setup_elimination_map(), simplify_relational_operation_1(), simplify_set(), subst(), swap_commutative_operands_with_target(), and validate_simplify_insn().

| #define CONST_SCALAR_INT_P | ( | X | ) | (CONST_INT_P (X) || CONST_DOUBLE_AS_INT_P (X)) |

Predicate yielding true iff X is an rtx for an integer constant.

Referenced by process_alt_operands().

| #define CONST_VECTOR_ELT | ( | RTX, N | ) |

For a CONST_VECTOR, return element N.

| #define CONST_VECTOR_NUNITS | ( | RTX | ) | XCVECLEN (RTX, 0, CONST_VECTOR) |

For a CONST_VECTOR, return the number of elements in a vector.

| #define CONSTANT_P | ( | X | ) | (GET_RTX_CLASS (GET_CODE (X)) == RTX_CONST_OBJ) |

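1 if X is a constant value, that is, an rtx whose class is RTX_CONST_OBJ. This covers non-integer constants such as CONST_DOUBLE, SYMBOL_REF, and LABEL_REF as well as CONST_INT.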

Referenced by adjust_for_new_dest(), canon_condition(), check_cond_move_block(), copy_rtx_if_shared_1(), do_output_reload(), emit_group_load_1(), emit_move_change_mode(), equiv_constant(), expand_debug_parm_decl(), find_comparison_args(), find_shift_sequence(), init_num_sign_bit_copies_in_rep(), no_conflict_move_test(), noce_emit_store_flag(), ok_for_base_p_nonstrict(), operands_match_p(), pmode_register_operand(), process_alt_operands(), register_operand(), reload_combine_closest_single_use(), rtx_equal_for_memref_p(), scratch_operand(), set_label_offsets(), set_usage_bits(), setup_elimination_map(), subst_reloads(), unchain_one_elt_loc_list(), validate_simplify_insn(), and vt_stack_adjustments().

| #define CONSTANT_POOL_ADDRESS_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("CONSTANT_POOL_ADDRESS_P", (RTX), SYMBOL_REF)->unchanging) |

1 if RTX is a symbol_ref that addresses this function's rtl constants pool.

Referenced by default_binds_local_p(), get_integer_term(), and init_varasm_status().

| #define constm1_rtx (const_int_rtx[MAX_SAVED_CONST_INT-1]) |

| #define CONSTM1_RTX | ( | MODE | ) | (const_tiny_rtx[3][(int) (MODE)]) |

| #define convert_memory_address | ( | to_mode, x | ) | convert_memory_address_addr_space ((to_mode), (x), ADDR_SPACE_GENERIC) |

Referenced by emit_jump(), expand_null_return_1(), and rtx_equal_for_memref_p().

| #define COSTS_N_INSNS | ( | N | ) | ((N) * 4) |

Return the right cost to give to an operation to make the cost of the corresponding register-to-register instruction N times that of a fast register-to-register instruction.

Referenced by no_conflict_move_test().

| #define CSELIB_VAL_PTR | ( | RTX | ) | X0CSELIB (RTX, 0) |

In a VALUE, the value cselib has assigned to RTX. This is a "struct cselib_val_struct", see cselib.h.

Referenced by add_mem_for_addr(), attrs_list_mpdv_union(), drop_overlapping_mem_locs(), refs_newer_value_cb(), and rtx_equal_for_memref_p().

| #define DEBUG_EXPR_TREE_DECL | ( | RTX | ) | XCTREE (RTX, 0, DEBUG_EXPR) |

DEBUG_EXPR_DECL corresponding to a DEBUG_EXPR RTX.

Referenced by print_value().

| #define DEBUG_IMPLICIT_PTR_DECL | ( | RTX | ) | XCTREE (RTX, 0, DEBUG_IMPLICIT_PTR) |

The VAR_DECL or PARM_DECL whose address a DEBUG_IMPLICIT_PTR takes.

Referenced by rtx_equal_p_cb().

| #define DEBUG_INSN_P | ( | X | ) | (GET_CODE (X) == DEBUG_INSN) |

Predicate yielding nonzero iff X is a debug note/insn.

Referenced by bb_note(), df_word_lr_simulate_uses(), gate_ud_dce(), get_last_insn_anywhere(), insert_var_expansion_initialization(), note_reg_elim_costly(), note_sets_clobbers(), reload_combine_purge_reg_uses_after_ruid(), replace_equiv_address_nv(), set_label_offsets(), set_used_flags(), subst_reloads(), and try_crossjump_to_edge().

| #define DEBUG_PARAMETER_REF_DECL | ( | RTX | ) | XCTREE (RTX, 0, DEBUG_PARAMETER_REF) |

The PARM_DECL that a DEBUG_PARAMETER_REF references.

Referenced by convert_descriptor_to_mode(), gen_formal_parameter_die(), and rtx_equal_p_cb().

| #define DEF_RTL_EXPR | ( | ENUM, NAME, FORMAT, CLASS | ) | ENUM , |

| #define ENTRY_VALUE_EXP | ( | RTX | ) | (RTL_CHECKC1 (RTX, 0, ENTRY_VALUE).rt_rtx) |

In an ENTRY_VALUE this is the DECL_INCOMING_RTL of the argument in question.

Referenced by rtx_equal_p_cb().

| #define fatal_insn | ( | msgid, insn | ) | _fatal_insn (msgid, insn, __FILE__, __LINE__, __FUNCTION__) |

Referenced by rtl_verify_edges(), and rtl_verify_flow_info_1().

| #define fatal_insn_not_found | ( | insn | ) | _fatal_insn_not_found (insn, __FILE__, __LINE__, __FUNCTION__) |

| #define FIND_REG_INC_NOTE | ( | INSN, REG | ) | 0 |

Indicate whether the machine has any sort of auto increment addressing. If not, we can avoid checking for REG_INC notes. Define a macro to look for REG_INC notes, but save time on machines where they never exist.

| #define FIRST_VIRTUAL_REGISTER (FIRST_PSEUDO_REGISTER) |

Virtual registers are used during RTL generation to refer to locations in the stack frame when the actual location isn't known until RTL generation is complete. The routine instantiate_virtual_regs replaces these with the proper value, which is normally {frame,arg,stack}_pointer_rtx plus a constant.

| #define frame_pointer_rtx (global_rtl[GR_FRAME_POINTER]) |

| #define GEN_INT | ( | N | ) | gen_rtx_CONST_INT (VOIDmode, (N)) |

Referenced by assemble_static_space(), count_type_elements(), create_fixed_operand(), decide_peel_simple(), dw2_asm_output_data_sleb128(), emit_block_move_libcall_fn(), emit_block_move_via_loop(), emit_cstore(), emit_move_change_mode(), expand_builtin_memset_args(), expand_mult_highpart_adjust(), expand_vector_broadcast(), extract_low_bits(), fixup_args_size_notes(), maybe_emit_sync_lock_test_and_set(), output_constant(), output_constructor_regular_field(), remove_eh_handler(), remove_unreachable_eh_regions_worker(), simplify_and_const_int_1(), swap_commutative_operands_with_target(), try_widen_shift_mode(), and undo_commit().

| #define gen_lowpart rtl_hooks.gen_lowpart |

Keep this for the nonce.

Referenced by convert_modes(), expand_binop(), lookup_as_function(), make_compound_operation(), make_extraction(), simplify_set(), and undo_commit().

| #define gen_rtx_ASM_INPUT | ( | MODE, ARG0 | ) | gen_rtx_fmt_si (ASM_INPUT, (MODE), (ARG0), 0) |

Include the RTL generation functions.

Referenced by get_reg_attrs().

| #define gen_rtx_ASM_INPUT_loc | ( | MODE, ARG0, LOC | ) |

Referenced by n_occurrences().

| #define gen_rtx_UNKNOWN_VAR_LOC | ( | ) | (gen_rtx_CLOBBER (VOIDmode, const0_rtx)) |

Expand to the RTL that denotes an unknown variable location in a DEBUG_INSN.

| #define GET_CODE | ( | RTX | ) | ((enum rtx_code) (RTX)->code) |

Define macros to access the `code' field of the rtx.

Referenced by add_attr_value(), add_define_attr(), add_equal_note(), add_mem_for_addr(), add_mode_tests(), add_name_attribute(), addr_expr_of_non_mem_decl_p(), address_of_int_loc_descriptor(), address_operand(), adjust_mem_uses(), adjust_operands_numbers(), alter_reg(), apply_code_iterator(), asm_noperands(), assemble_name_raw(), assign_parm_setup_reg(), assign_stack_slot_num_and_sort_pseudos(), attr_alt_subset_of_compl_p(), attr_hash_add_string(), can_reload_into(), canon_condition(), canonicalize_change_group(), canonicalize_values_star(), change_cfi_row(), change_subst_attribute(), check_argument_store(), check_defs(), clobber_return_register(), collect_one_action_chain(), compress_float_constant(), compute_alternative_mask(), compute_const_anchors(), compute_local_properties(), cond_exec_find_if_block(), connect_traces(), contains_symbol_ref(), convert_memory_address_addr_space(), copy_rtx_if_shared_1(), count_alternatives(), count_reg_usage(), cse_prescan_path(), dead_debug_global_insert(), dead_or_set_regno_p(), decl_for_component_ref(), decls_for_scope(), decode_asm_operands(), decompose_register(), default_section_type_flags(), delete_slot_part(), df_bb_regno_first_def_find(), df_read_modify_subreg_p(), df_simulate_defs(), df_simulate_uses(), diagnostic_for_asm(), do_output_reload(), drop_overlapping_mem_locs(), dump_rtx_statistics(), dwarf2out_flush_queued_reg_saves(), dwarf2out_frame_debug_cfa_window_save(), emit_clobber(), emit_debug_insn(), emit_debug_insn_after_noloc(), emit_insn_at_entry(), emit_move_change_mode(), emit_move_insn(), emit_note_before(), emit_notes_for_differences_2(), emit_pattern_after(), emit_push_insn(), equiv_init_varies_p(), expand_copysign_bit(), extract_asm_operands(), find_call_stack_args(), find_comparison_args(), find_invariants_to_move(), find_loads(), find_reg_equal_equiv_note(), find_reg_note(), find_single_use(), for_each_rtx(), fprint_whex(), free_loop_data(), gcse_emit_move_after(), gen_attr(), 
gen_formal_parameter_die(), gen_insn(), gen_label_rtx(), gen_mnemonic_setattr(), gen_reg_rtx_offset(), gen_satfractuns_conv_libfunc(), get_attr_order(), get_biv_step_1(), get_call_rtx_from(), get_elimination(), get_final_hard_regno(), get_integer_term(), hash_scan_set(), init_num_sign_bit_copies_in_rep(), init_varasm_status(), initial_value_entry(), initialize_argument_information(), insert_insn_end_basic_block(), insert_var_expansion_initialization(), invert_exp_1(), iv_analysis_done(), kill_set_value(), lra_set_insn_deleted(), main(), make_extraction(), make_memloc(), mark_insn(), mark_pseudo_reg_dead(), maybe_memory_address_addr_space_p(), maybe_propagate_label_ref(), memory_operand(), merge_dir(), move2add_use_add3_insn(), multiple_sets(), new_decision(), noce_emit_store_flag(), note_outside_basic_block_p(), note_reg_elim_costly(), note_stores(), note_uses(), notice_source_line(), notice_stack_pointer_modification_1(), num_validated_changes(), offset_within_block_p(), ok_for_base_p_nonstrict(), one_code_hoisting_pass(), operands_match_p(), ordered_comparison_operator(), output_added_clobbers_hard_reg_p(), output_asm_insn(), output_get_insn_name(), preserve_value(), prev_nonnote_insn_bb(), previous_insn(), print_value(), process_alt_operands(), process_bb_node_lives(), push_insns(), record_component_aliases(), record_hard_reg_sets(), reg_class_from_constraints(), reg_overlap_mentioned_p(), reg_saved_in(), reload_as_needed(), reload_combine_closest_single_use(), remove_invalid_refs(), remove_note(), remove_reg_equal_offset_note(), remove_value_from_changed_variables(), replace_oldest_value_addr(), resolve_operand_name_1(), returnjump_p_1(), reverse_op(), rtl_verify_flow_info_1(), rtx_addr_varies_p(), rtx_equal_for_memref_p(), safe_insn_predicate(), save_call_clobbered_regs(), scompare_loc_descriptor(), scratch_operand(), set_dv_changed(), set_label_offsets(), set_nonzero_bits_and_sign_copies(), set_reg_attrs_from_value(), set_usage_bits(), 
setup_elimination_map(), setup_reg_equiv(), shallow_copy_rtvec(), shared_const_p(), simplejump_p(), simplify_byte_swapping_operation(), simplify_relational_operation_1(), simplify_replace_rtx(), simplify_set(), simplify_truncation(), single_set_2(), spill_hard_reg(), splay_tree_compare_strings(), store_killed_in_pat(), subst(), subst_reloads(), tablejump_p(), target_canonicalize_comparison(), try_back_substitute_reg(), unchain_one_elt_loc_list(), unroll_loop_stupid(), update_cfg_for_uncondjump(), uses_hard_regs_p(), valid_address_p(), validate_simplify_insn(), var_reg_decl_set(), var_reg_set(), var_regno_delete(), volatile_refs_p(), vt_canon_true_dep(), vt_stack_adjustments(), walk_attr_value(), write_const_num_delay_slots(), and write_header().

| #define GET_MODE | ( | RTX | ) | ((enum machine_mode) (RTX)->mode) |

Referenced by add_stores(), addr_expr_of_non_mem_decl_p_1(), address_of_int_loc_descriptor(), address_operand(), adjust_offset_for_component_ref(), anti_adjust_stack_and_probe(), asm_noperands(), asm_operand_ok(), assemble_name_raw(), assign_mem_slot(), assign_parm_setup_reg(), assign_temp(), build_def_use(), build_libfunc_function(), calculate_bb_reg_pressure(), can_compare_and_swap_p(), can_reload_into(), canonicalize_values_star(), combine_set_extension(), compress_float_constant(), compute_const_anchors(), cond_exec_find_if_block(), const_double_htab_eq(), convert_memory_address_addr_space(), copy_rtx_if_shared_1(), count_reg_usage(), count_type_elements(), cselib_invalidate_mem(), cselib_invalidate_regno(), cselib_reg_set_mode(), dataflow_set_destroy(), dead_debug_insert_temp(), decl_for_component_ref(), decode_asm_operands(), decompose_register(), delete_caller_save_insns(), distribute_and_simplify_rtx(), do_output_reload(), dump_case_nodes(), dump_insn_info(), dv_changed_p(), dwarf2out_frame_debug_adjust_cfa(), emit_cmp_and_jump_insn_1(), emit_cstore(), emit_group_load_1(), entry_register(), expand_builtin_memset_args(), expand_debug_parm_decl(), expand_mem_signal_fence(), expand_mult(), expand_naked_return(), expand_value_return(), extract_asm_operands(), extract_split_bit_field(), find_comparison_args(), find_reloads_toplev(), find_shift_sequence(), find_single_use(), fixed_base_plus_p(), gen_formal_parameter_die(), gen_group_rtx(), gen_highpart_mode(), gen_insn(), gen_lowpart_common(), gen_lowpart_if_possible(), get_biv_step_1(), get_inner_reference(), get_ivts_expr(), cselib_hasher::hash(), have_sub2_insn(), inherit_piecemeal_p(), init_reg_last(), insert_move_for_subreg(), insert_restore(), insert_save(), invariant_for_use(), invert_exp_1(), invert_jump_1(), kill_set_value(), mark_nonreg_stores_2(), mark_pseudo_regno_subword_dead(), match_reload(), mathfn_built_in_1(), may_trap_p(), maybe_memory_address_addr_space_p(), merge_overlapping_regs(), 
move2add_use_add3_insn(), move2add_valid_value_p(), move_block_to_reg(), no_conflict_move_test(), noce_emit_store_flag(), noce_try_addcc(), noce_try_cmove_arith(), nonimmediate_operand(), num_changes_pending(), operands_match_p(), print_value(), process_alt_operands(), push_insns(), push_secondary_reload(), record_component_aliases(), record_value_for_reg(), redirect_jump(), reg_class_from_constraints(), reg_loc_descriptor(), register_operand(), reload_as_needed(), reload_combine_closest_single_use(), reload_combine_note_store(), reload_combine_recognize_pattern(), remove_child_with_prev(), replace_reg_with_saved_mem(), reset_opr_set_tables(), resolve_reg_notes(), resolve_subreg_use(), reverse_op(), rtx_equal_for_memref_p(), scompare_loc_descriptor(), scratch_operand(), set_decl_origin_self(), set_dv_changed(), set_label_offsets(), set_mem_attributes(), set_of_1(), set_reg_attrs_from_value(), set_storage_via_setmem(), setup_elimination_map(), setup_incoming_promotions(), setup_reg_equiv(), shift_optab_p(), simplify_relational_operation_1(), simplify_replace_rtx(), simplify_set(), simplify_while_replacing(), sjlj_assign_call_site_values(), spill_hard_reg(), split_iv(), stabilize_va_list_loc(), store_bit_field(), store_killed_before(), subst_pattern_match(), subst_reloads(), unroll_loop_stupid(), update_auto_inc_notes(), uses_hard_regs_p(), val_bind(), validate_simplify_insn(), variable_part_different_p(), volatile_refs_p(), and vt_stack_adjustments().

| #define GET_NOTE_INSN_NAME | ( | NOTE_CODE | ) | (note_insn_name[(NOTE_CODE)]) |

| #define GET_NUM_ELEM | ( | RTVEC | ) | ((RTVEC)->num_elem) |

Referenced by change_subst_attribute(), and find_int().

| #define GET_REG_NOTE_NAME | ( | MODE | ) | (reg_note_name[(int) (MODE)]) |

| #define GET_RTX_CLASS | ( | CODE | ) | (rtx_class[(int) (CODE)]) |

| #define GET_RTX_FORMAT | ( | CODE | ) | (rtx_format[(int) (CODE)]) |

| #define GET_RTX_LENGTH | ( | CODE | ) | (rtx_length[(int) (CODE)]) |

| #define GET_RTX_NAME | ( | CODE | ) | (rtx_name[(int) (CODE)]) |

Referenced by add_map_value(), dump_rtx_statistics(), gen_satfractuns_conv_libfunc(), and print_value().

| #define global_rtl (this_target_rtl->x_global_rtl) |

| #define HARD_FRAME_POINTER_IS_ARG_POINTER (HARD_FRAME_POINTER_REGNUM == ARG_POINTER_REGNUM) |

| #define HARD_FRAME_POINTER_IS_FRAME_POINTER (HARD_FRAME_POINTER_REGNUM == FRAME_POINTER_REGNUM) |

Referenced by make_memloc().

| #define HARD_FRAME_POINTER_REGNUM FRAME_POINTER_REGNUM |

If HARD_FRAME_POINTER_REGNUM is defined, then a special dummy reg is used to represent the frame pointer. This is because the hard frame pointer and the automatic variables are separated by an amount that cannot be determined until after register allocation. We can assume that in this case ELIMINABLE_REGS will be defined, one action of which will be to eliminate FRAME_POINTER_REGNUM into HARD_FRAME_POINTER_REGNUM.

Referenced by add_mem_for_addr(), dwarf2out_frame_debug_cfa_window_save(), insn_contains_asm_1(), and remove_reg_equal_offset_note().

| #define hard_frame_pointer_rtx (global_rtl[GR_HARD_FRAME_POINTER]) |

Referenced by cselib_record_sets(), rtx_unstable_p(), rtx_varies_p(), set_usage_bits(), and target_char_cast().

| #define HARD_REGISTER_NUM_P | ( | REG_NO | ) | ((REG_NO) < FIRST_PSEUDO_REGISTER) |

1 if the given register number REG_NO corresponds to a hard register.

Referenced by df_chain_finalize(), insert_save(), resolve_reg_notes(), and variable_part_different_p().

| #define HARD_REGISTER_P | ( | REG | ) | (HARD_REGISTER_NUM_P (REGNO (REG))) |

1 if the given register REG corresponds to a hard register.

Referenced by bb_has_abnormal_call_pred(), dump_insn_info(), emit_move_change_mode(), iv_analysis_done(), record_jump_cond_subreg(), record_last_set_info(), save_call_clobbered_regs(), and split_double().

| #define HAVE_POST_DECREMENT 0 |

| #define HAVE_POST_INCREMENT 0 |

Referenced by get_def_for_expr().

| #define HAVE_POST_MODIFY_DISP 0 |

| #define HAVE_POST_MODIFY_REG 0 |

| #define HAVE_PRE_DECREMENT 0 |

| #define HAVE_PRE_INCREMENT 0 |

| #define HAVE_PRE_MODIFY_DISP 0 |

| #define HAVE_PRE_MODIFY_REG 0 |

| #define IGNORED_DWARF_REGNUM (INVALID_REGNUM - 1) |

REGNUM for which no debug information can be generated.

| #define INSN_ANNULLED_BRANCH_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("INSN_ANNULLED_BRANCH_P", (RTX), JUMP_INSN)->unchanging) |

1 if RTX is a jump_insn that is an annulling branch.

| #define INSN_CODE | ( | INSN | ) | XINT (INSN, 6) |

Code number of instruction, from when it was recognized. -1 means this instruction has not been recognized yet.

Referenced by init_elim_table(), mark_label_nuses(), maybe_fix_stack_asms(), multiple_sets(), and process_alt_operands().

| #define INSN_DELETED_P | ( | RTX | ) |

1 if RTX is an insn that has been deleted.

Referenced by pre_edge_insert(), premark_types_used_by_global_vars_helper(), and return_insn_p().

| #define INSN_FROM_TARGET_P | ( | RTX | ) |

1 if RTX is an insn in a delay slot and is from the target of the branch. If the branch insn has INSN_ANNULLED_BRANCH_P set, this insn should only be executed if the branch is taken. For annulled branches with this bit clear, the insn should be executed only if the branch is not taken.

| #define INSN_HAS_LOCATION | ( | INSN | ) |

Referenced by loop_latch_edge(), and rtl_split_block().

| #define INSN_LOCATION | ( | INSN | ) | XUINT (INSN, 5) |

| #define INSN_P | ( | X | ) | (NONJUMP_INSN_P (X) || DEBUG_INSN_P (X) || JUMP_P (X) || CALL_P (X)) |

Predicate yielding nonzero iff X is a real insn.

Referenced by check_for_label_ref(), collect_one_action_chain(), compute_out(), count_reg_usage(), covers_regno_no_parallel_p(), covers_regno_p(), cse_prescan_path(), dead_or_set_regno_p(), df_chain_remove_problem(), df_get_call_refs(), df_get_exit_block_use_set(), df_live_free_bb_info(), df_set_regs_ever_live(), do_clobber_return_reg(), dse_step3(), eh_returnjump_p_1(), find_dead_or_set_registers(), for_each_eh_label(), get_first_nonnote_insn(), hash_scan_set(), in_list_p(), inner_loop_header_p(), insert_var_expansion_initialization(), loop_latch_edge(), make_reg_eh_region_note(), maybe_fix_stack_asms(), memref_referenced_p(), notice_stack_pointer_modification(), prev_nonnote_nondebug_insn(), record_hard_reg_uses(), reload_cse_regs_1(), remove_unreachable_eh_regions(), reorder_basic_blocks(), rtx_addr_varies_p(), scan_stores_spill(), sjlj_assign_call_site_values(), sprint_ul(), too_high_register_pressure_p(), update_alignments(), and vt_get_decl_and_offset().

| #define INSN_UID | ( | INSN | ) | XINT (INSN, 0) |

ACCESS MACROS for particular fields of insns. Holds a unique number for each insn. These are not necessarily sequentially increasing.

Referenced by add_to_inherit(), btr_def_live_range(), change_cfi_row(), compute_out(), connect_traces(), cselib_process_insn(), df_bb_regno_first_def_find(), df_chain_remove_problem(), df_dump_insn_bottom(), df_dump_insn_top(), df_hard_reg_used_p(), df_live_free_bb_info(), df_whole_mw_reg_dead_p(), df_word_lr_transfer_function(), dse_step3(), dse_transfer_function(), dump_prediction(), emit_call_insn_after_setloc(), emit_jump_insn_after_setloc(), find_if_case_2(), find_removable_extensions(), insert_insn_end_basic_block(), insert_store(), insn_addresses_new(), invalidate_insn_data_regno_info(), lra_inheritance(), lra_push_insn_by_uid(), lra_set_regno_unique_value(), mark_label_nuses(), new_btr_user(), note_sets_clobbers(), print_value(), process_bb_node_lives(), profile_function(), regrename_chain_from_id(), remove_pseudos(), replace_equiv_address_nv(), return_insn_p(), rtl_verify_edges(), swap_operands(), unsuitable_loc(), and web_main().

| #define INSN_VAR_LOCATION | ( | INSN | ) | PATTERN (INSN) |

The VAR_LOCATION rtx in a DEBUG_INSN.

| #define INSN_VAR_LOCATION_DECL | ( | INSN | ) | PAT_VAR_LOCATION_DECL (INSN_VAR_LOCATION (INSN)) |

Accessors for a tree-expanded var location debug insn.

Referenced by insert_var_expansion_initialization(), and print_insn().

| #define INSN_VAR_LOCATION_LOC | ( | INSN | ) | PAT_VAR_LOCATION_LOC (INSN_VAR_LOCATION (INSN)) |

Referenced by print_insn(), and reload_combine_purge_reg_uses_after_ruid().

| #define INSN_VAR_LOCATION_STATUS | ( | INSN | ) | PAT_VAR_LOCATION_STATUS (INSN_VAR_LOCATION (INSN)) |

| #define INTVAL | ( | RTX | ) | XCWINT (RTX, 0, CONST_INT) |

For a CONST_INT rtx, INTVAL extracts the integer.

Referenced by builtin_memset_gen_str(), check_defs(), combine_set_extension(), compress_float_constant(), compute_const_anchors(), convert_modes(), dump_prediction(), dwarf2out_flush_queued_reg_saves(), emit_notes_for_differences_2(), end_ifcvt_sequence(), expand_builtin_update_setjmp_buf(), expand_mult(), expand_widening_mult(), extract_low_bits(), find_call_stack_args(), find_shift_sequence(), find_single_use(), fixup_args_size_notes(), force_reg(), free_csa_reflist(), get_eh_region_from_rtx(), get_pos_from_mask(), insert_regs(), insert_temp_slot_address(), make_extraction(), maybe_memory_address_addr_space_p(), move2add_use_add3_insn(), move2add_valid_value_p(), move_by_pieces_1(), noce_emit_store_flag(), note_reg_elim_costly(), operands_match_p(), output_asm_operand_names(), print_value(), process_alt_operands(), promote_decl_mode(), reg_saved_in(), register_operand(), rtx_equal_for_memref_p(), rtx_for_function_call(), set_label_offsets(), set_reg_attrs_from_value(), setup_elimination_map(), simplify_comparison(), simplify_plus_minus_op_data_cmp(), simplify_relational_operation_1(), simplify_set(), subst(), swap_commutative_operands_with_target(), try_widen_shift_mode(), validate_simplify_insn(), validize_mem(), var_reg_decl_set(), var_regno_delete(), and vt_get_canonicalize_base().

| #define INVALID_REGNUM (~(unsigned int) 0) |

A REGNUM that never actually appears in the insn stream.

Referenced by debug_value_data(), df_get_regular_block_artificial_uses(), dwarf2out_frame_debug_adjust_cfa(), fp_setter_insn(), and set_value_regno().

| #define JUMP_LABEL | ( | INSN | ) | XCEXP (INSN, 8, JUMP_INSN) |

In jump.c, each JUMP_INSN can point to a label that it can jump to, so that if the JUMP_INSN is deleted, the label's LABEL_NUSES can be decremented and possibly the label can be deleted.

Referenced by cond_exec_find_if_block(), find_cond_trap(), maybe_propagate_label_ref(), outof_cfg_layout_mode(), profile_function(), prologue_epilogue_contains(), record_truncated_values(), redirect_exp_1(), replace_rtx(), and sprint_ul_rev().

| #define JUMP_P | ( | X | ) | (GET_CODE (X) == JUMP_INSN) |

Predicate yielding nonzero iff X is a jump insn.

Referenced by add_labels_and_missing_jumps(), btr_def_live_range(), expand_builtin_longjmp(), find_cond_trap(), find_partition_fixes(), gcse_emit_move_after(), get_last_bb_insn(), get_last_insertion_point(), maybe_fix_stack_asms(), next_real_insn(), profile_function(), prologue_epilogue_contains(), remove_predictions_associated_with_edge(), replace_rtx(), and returnjump_p_1().

| #define JUMP_TABLE_DATA_P | ( | INSN | ) | (GET_CODE (INSN) == JUMP_TABLE_DATA) |

Predicate yielding nonzero iff INSN is data for a jump table.

Referenced by inside_basic_block_p(), make_pass_compute_alignments(), maybe_fix_stack_asms(), replace_rtx(), and rtl_verify_flow_info_1().

| #define LABEL_ALT_ENTRY_P | ( | LABEL | ) | (LABEL_KIND (LABEL) != LABEL_NORMAL) |

| #define LABEL_KIND | ( | LABEL | ) | ((enum label_kind) (((LABEL)->jump << 1) | (LABEL)->call)) |

Retrieve the kind of LABEL.

| #define LABEL_NAME | ( | RTX | ) | XCSTR (RTX, 7, CODE_LABEL) |

The name of a label, in case it corresponds to an explicit label in the input source code.

Referenced by delete_insn(), and expand_computed_goto().

| #define LABEL_NUSES | ( | RTX | ) | XCINT (RTX, 5, CODE_LABEL) |

In jump.c, each label contains a count of the number of LABEL_REFs that point at it, so unused labels can be deleted.

Referenced by cond_exec_find_if_block(), emit_barrier_after_bb(), gcse_emit_move_after(), make_pass_cleanup_barriers(), prev_nonnote_insn_bb(), profile_function(), and record_truncated_values().

| #define LABEL_P | ( | X | ) | (GET_CODE (X) == CODE_LABEL) |

Predicate yielding nonzero iff X is a label insn.

Referenced by block_label(), can_fallthru(), create_cfi_notes(), gcse_emit_move_after(), init_resource_info(), make_pass_cleanup_barriers(), maybe_fix_stack_asms(), prev_nonnote_insn_bb(), profile_function(), record_truncated_values(), rtl_split_edge(), rtl_verify_edges(), sjlj_assign_call_site_values(), subst_reloads(), thread_prologue_and_epilogue_insns(), try_crossjump_to_edge(), and update_alignments().

| #define LABEL_PRESERVE_P | ( | RTX | ) | (RTL_FLAG_CHECK2 ("LABEL_PRESERVE_P", (RTX), CODE_LABEL, NOTE)->in_struct) |

1 if RTX is a code_label that should always be considered to be needed.

Referenced by make_pass_cleanup_barriers().

| #define LABEL_REF_NONLOCAL_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("LABEL_REF_NONLOCAL_P", (RTX), LABEL_REF)->volatil) |

1 if RTX is a label_ref for a nonlocal label. Likewise in an expr_list for a REG_LABEL_OPERAND or REG_LABEL_TARGET note.

Referenced by alter_reg(), convert_memory_address_addr_space(), gcse_emit_move_after(), and invert_jump().

Once basic blocks are found, each CODE_LABEL starts a chain that goes through all the LABEL_REFs that jump to that label. The chain eventually winds up at the CODE_LABEL: it is circular.

Referenced by profile_function().
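The circular chain described above can be modelled as a singly linked ring whose head is the label. This sketch uses hypothetical types and only illustrates the traversal rule: follow the chain until it winds up back at the label.

```c
#include <stddef.h>

/* Hypothetical node: either the CODE_LABEL itself or one LABEL_REF.
   Each node's `chain` points at the next LABEL_REF; the last LABEL_REF
   points back at the label, closing the circle. */
struct toy_node {
  int is_label;
  struct toy_node *chain;
};

/* An empty chain is a label that points at itself. */
static void toy_init_label(struct toy_node *label) {
  label->is_label = 1;
  label->chain = label;
}

/* Splice REF in right after LABEL; the ring stays closed. */
static void toy_add_ref(struct toy_node *label, struct toy_node *ref) {
  ref->is_label = 0;
  ref->chain = label->chain;
  label->chain = ref;
}

/* Walk from the label until we arrive back at it, counting the refs. */
static size_t toy_count_refs(const struct toy_node *label) {
  size_t n = 0;
  for (const struct toy_node *p = label->chain; p != label; p = p->chain)
    n++;
  return n;
}
```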

| #define LAST_VIRTUAL_POINTER_REGISTER ((FIRST_VIRTUAL_REGISTER) + 4) |

| #define LAST_VIRTUAL_REGISTER ((FIRST_VIRTUAL_REGISTER) + 5) |

Referenced by determine_common_wider_type(), and gmalloc().

| #define LRA_SUBREG_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("LRA_SUBREG_P", (RTX), SUBREG)->jump) |

True if the subreg was generated by LRA for reload insns. Such subregs are valid only during LRA.

Referenced by reg_class_from_constraints().

| #define MAX_COST INT_MAX |

Maximum cost of an rtl expression. This value has the special meaning that an rtx with this cost must not be used under any circumstances.

Referenced by try_back_substitute_reg().
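A minimal sketch of treating MAX_COST as a "never use" sentinel when choosing among candidates. The cost array and `toy_pick_cheapest` are hypothetical. A candidate costing exactly MAX_COST is never selected, even when it is the only one.

```c
#include <limits.h>
#include <stddef.h>

/* Same value as the MAX_COST macro documented above. */
#define MAX_COST INT_MAX

/* Return the index of the cheapest usable candidate, or -1 if every
   candidate costs MAX_COST.  Because the comparison is strict, a cost
   equal to MAX_COST can never beat the initial best_cost. */
static int toy_pick_cheapest(const int *costs, size_t n) {
  int best = -1;
  int best_cost = MAX_COST;
  for (size_t i = 0; i < n; i++) {
    if (costs[i] < best_cost) {
      best_cost = costs[i];
      best = (int) i;
    }
  }
  return best;
}
```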

| #define MAX_SAVED_CONST_INT 64 |

| #define MAY_HAVE_DEBUG_INSNS (flag_var_tracking_assignments) |

Nonzero if DEBUG_INSN_P may possibly hold.

Referenced by find_src_set_src(), init_dce(), loc_exp_insert_dep(), and vt_get_decl_and_offset().

| #define MEM_ADDR_SPACE | ( | RTX | ) | (get_mem_attrs (RTX)->addrspace) |

For a MEM rtx, the address space.

Referenced by distribute_and_simplify_rtx(), gen_lowpart_if_possible(), move_by_pieces_1(), num_changes_pending(), reload_combine_closest_single_use(), and subreg_lowpart_offset().

| #define MEM_ALIAS_SET | ( | RTX | ) | (get_mem_attrs (RTX)->alias) |

For a MEM rtx, the alias set. If 0, this MEM is not in any alias set, and may alias anything. Otherwise, the MEM can only alias MEMs in a conflicting alias set. This value is set in a language-dependent manner in the front-end, and should not be altered in the back-end. These set numbers are tested with alias_sets_conflict_p.

Referenced by array_ref_element_size(), decl_for_component_ref(), and rtx_refs_may_alias_p().
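A simplified model of the conflict rule stated above. `toy_alias_sets_conflict_p` is hypothetical and covers only the cases this description spells out: set 0 may alias anything, and a nonzero set conflicts with itself. GCC's alias_sets_conflict_p also considers subset relationships between alias sets, which this sketch omits.

```c
#include <stdbool.h>

/* Hypothetical alias-set numbers; 0 means "not in any alias set". */
typedef int toy_alias_set;

/* Set 0 conflicts with everything; otherwise only identical sets
   conflict in this simplified model (subsets are not modelled). */
static bool toy_alias_sets_conflict_p(toy_alias_set a, toy_alias_set b) {
  if (a == 0 || b == 0)
    return true;
  return a == b;
}
```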

| #define MEM_ALIGN | ( | RTX | ) | (get_mem_attrs (RTX)->align) |

For a MEM rtx, the alignment in bits. When STRICT_ALIGNMENT is set, the alignment of the mode can serve as the default; otherwise it cannot.

Referenced by alignment_for_piecewise_move(), delete_caller_save_insns(), insert_restore(), lowpart_bit_field_p(), move_by_pieces_1(), replace_reg_with_saved_mem(), and store_bit_field().
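A sketch of the default rule, under two stated assumptions: bytes are 8 bits wide, and `mode_align_bits` stands in for the mode's natural alignment. Falling back to byte alignment on non-strict targets is likewise an assumption of this model. The helper names are hypothetical; the second helper is a reminder that MEM_ALIGN is measured in bits, not bytes.

```c
#include <stdbool.h>

/* Assumes 8-bit bytes (a BITS_PER_UNIT of 8). */
#define TOY_BITS_PER_UNIT 8u

/* Default alignment, in bits, for a MEM with no recorded alignment.
   With strict alignment the mode's alignment may be assumed; otherwise
   this model guarantees only byte alignment. */
static unsigned toy_default_mem_align(bool strict_alignment,
                                      unsigned mode_align_bits) {
  return strict_alignment ? mode_align_bits : TOY_BITS_PER_UNIT;
}

/* Alignment is in bits, so scale a byte count before comparing. */
static bool toy_aligned_for_bytes(unsigned align_bits, unsigned bytes) {
  return align_bits >= bytes * TOY_BITS_PER_UNIT;
}
```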

| #define MEM_ATTRS | ( | RTX | ) | X0MEMATTR (RTX, 1) |

The memory attribute block. We provide access macros for each value in the block and supply defaults if no block is specified.
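A sketch of the default lookup, assuming (as the mode_mem_attrs macro listed later in this section suggests) that the defaults come from a per-mode table. The types, modes, and table contents below are hypothetical; this is not GCC's get_mem_attrs.

```c
#include <stddef.h>

/* Hypothetical, cut-down attribute block. */
struct toy_mem_attrs {
  unsigned align;    /* bits */
  int size_known_p;
  long size;         /* bytes */
};

enum toy_mode { TOY_QImode, TOY_SImode, TOY_NUM_MODES };

struct toy_mem {
  enum toy_mode mode;
  const struct toy_mem_attrs *attrs;  /* NULL means "none specified" */
};

/* Per-mode defaults, playing the role of mode_mem_attrs. */
static const struct toy_mem_attrs toy_mode_mem_attrs[TOY_NUM_MODES] = {
  { 8, 1, 1 },  /* QImode: 1 byte, byte-aligned */
  { 8, 1, 4 },  /* SImode: 4 bytes, byte-aligned by default */
};

/* Return the MEM's own attribute block, or the defaults for its mode. */
static const struct toy_mem_attrs *toy_get_mem_attrs(const struct toy_mem *m) {
  return m->attrs ? m->attrs : &toy_mode_mem_attrs[m->mode];
}
```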

| #define MEM_COPY_ATTRIBUTES | ( | LHS, RHS | ) |

Copy the attributes that apply to memory locations from RHS to LHS.

Referenced by set_mem_alias_set(), and set_storage_via_setmem().
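A toy model of copying memory attributes between two MEMs. The struct and helper are hypothetical, and the exact set of fields the real macro copies is an assumption here. The sketch copies the flag bits documented in this section plus the attribute block pointer, and leaves the destination's own address untouched.

```c
#include <stddef.h>

/* Hypothetical shared attribute block. */
struct toy_attrs { long alias; };

/* Hypothetical MEM: a few flag bits, an attribute pointer, an address. */
struct toy_mem {
  unsigned volatil : 1;           /* cf. MEM_VOLATILE_P */
  unsigned notrap : 1;            /* cf. MEM_NOTRAP_P */
  unsigned readonly : 1;          /* cf. MEM_READONLY_P */
  unsigned pointer : 1;           /* cf. MEM_POINTER */
  const struct toy_attrs *attrs;  /* cf. MEM_ATTRS */
  long addr;                      /* the location itself, not an attribute */
};

/* Copy RHS's memory-location properties onto LHS.  The attribute block
   is shared by pointer rather than duplicated; LHS keeps its address. */
static void toy_mem_copy_attributes(struct toy_mem *lhs,
                                    const struct toy_mem *rhs) {
  lhs->volatil = rhs->volatil;
  lhs->notrap = rhs->notrap;
  lhs->readonly = rhs->readonly;
  lhs->pointer = rhs->pointer;
  lhs->attrs = rhs->attrs;
}
```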

| #define MEM_EXPR | ( | RTX | ) | (get_mem_attrs (RTX)->expr) |

For a MEM rtx, the decl it is known to refer to, if it is known to refer to part of a DECL. It may also be a COMPONENT_REF.

Referenced by assign_parms_setup_varargs(), move_by_pieces_1(), and reverse_op().

| #define MEM_KEEP_ALIAS_SET_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("MEM_KEEP_ALIAS_SET_P", (RTX), MEM)->jump) |

1 if RTX is a mem and we should keep the alias set for this mem unchanged when we access a component. Set to 1, for example, when we are already in a non-addressable component of an aggregate.

Referenced by all_zeros_p().

| #define MEM_NOTRAP_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("MEM_NOTRAP_P", (RTX), MEM)->call) |

1 if RTX is a mem that cannot trap.

Referenced by volatile_refs_p().
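Several flags in this section reuse the same header bit for different rtx codes (for example, `call` is MEM_NOTRAP_P on a MEM and NOTE_DURING_CALL_P on a NOTE), which is why each accessor goes through a code check. This standalone sketch imitates that pattern. `toy_flag_check1` is a hypothetical stand-in for RTL_FLAG_CHECK1, not its real definition.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical rtx codes and header bits. */
enum toy_code { TOY_MEM, TOY_NOTE, TOY_REG };

struct toy_rtx {
  enum toy_code code;
  unsigned call : 1;  /* MEM_NOTRAP_P on a MEM, NOTE_DURING_CALL_P on a NOTE */
  unsigned jump : 1;  /* MEM_KEEP_ALIAS_SET_P on a MEM */
};

/* Refuse to hand out the flag word if X does not have the expected code. */
static struct toy_rtx *toy_flag_check1(const char *name, struct toy_rtx *x,
                                       enum toy_code expected) {
  if (x->code != expected) {
    fprintf(stderr, "flag %s used with wrong rtx code\n", name);
    abort();
  }
  return x;
}

/* Accessors expand to lvalues, so they can be both read and assigned. */
#define TOY_MEM_NOTRAP_P(X) \
  (toy_flag_check1("MEM_NOTRAP_P", (X), TOY_MEM)->call)
#define TOY_MEM_KEEP_ALIAS_SET_P(X) \
  (toy_flag_check1("MEM_KEEP_ALIAS_SET_P", (X), TOY_MEM)->jump)
```

For example, `TOY_MEM_NOTRAP_P (&m) = 1;` sets the bit on a MEM, while the same accessor applied to a NOTE stops the program instead of silently reinterpreting the bit.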

| #define MEM_OFFSET | ( | RTX | ) | (get_mem_attrs (RTX)->offset) |

For a MEM rtx, the offset from the start of MEM_EXPR.

Referenced by assign_parms_setup_varargs().

| #define MEM_OFFSET_KNOWN_P | ( | RTX | ) | (get_mem_attrs (RTX)->offset_known_p) |

For a MEM rtx, true if its MEM_OFFSET is known.

Referenced by assign_parms_setup_varargs().

| #define MEM_P | ( | X | ) | (GET_CODE (X) == MEM) |

Predicate yielding nonzero iff X is an rtx for a memory location.

Referenced by add_equal_note(), add_name_attribute(), all_zeros_p(), allocate_struct_function(), array_ref_element_size(), can_compare_and_swap_p(), can_reload_into(), ceiling(), check_argument_store(), clear_storage_libcall_fn(), combine_stack_adjustments(), copy_rtx_if_shared_1(), count_type_elements(), cselib_invalidate_regno(), cselib_reg_set_mode(), decode_asm_operands(), df_simulate_defs(), df_simulate_uses(), discover_nonconstant_array_refs_r(), do_output_reload(), dv_changed_p(), dwarf2out_flush_queued_reg_saves(), dwarf2out_frame_debug_cfa_window_save(), emit_move_change_mode(), equiv_init_varies_p(), expand_reg_info(), find_loads(), gen_formal_parameter_die(), have_global_bss_p(), init_num_sign_bit_copies_in_rep(), initialize_argument_information(), lowpart_bit_field_p(), make_decl_rtl_for_debug(), move_by_pieces_1(), noce_emit_store_flag(), nonimmediate_operand(), note_stores(), notice_stack_pointer_modification_1(), one_code_hoisting_pass(), process_alt_operands(), reg_class_from_constraints(), reg_saved_in(), reg_truncated_to_mode_general(), reverse_op(), rtx_addr_varies_p(), set_dv_changed(), set_storage_via_libcall(), set_storage_via_setmem(), spill_hard_reg(), split_double(), store_killed_before(), subst_reloads(), valid_address_p(), validate_simplify_insn(), and write_dependence_p().

| #define MEM_POINTER | ( | RTX | ) | (RTL_FLAG_CHECK1 ("MEM_POINTER", (RTX), MEM)->frame_related) |

1 if RTX is a mem that holds a pointer value.

Referenced by split_double().

| #define MEM_READONLY_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("MEM_READONLY_P", (RTX), MEM)->unchanging) |

1 if RTX is a mem that is statically allocated in read-only memory.

Referenced by df_simulate_defs(), rtx_unstable_p(), and rtx_varies_p().

| #define MEM_SIZE | ( | RTX | ) | (get_mem_attrs (RTX)->size) |

For a MEM rtx, the size in bytes of the MEM.

Referenced by find_call_stack_args(), and volatile_refs_p().

| #define MEM_SIZE_KNOWN_P | ( | RTX | ) | (get_mem_attrs (RTX)->size_known_p) |

For a MEM rtx, true if its MEM_SIZE is known.

Referenced by find_call_stack_args(), and volatile_refs_p().
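The MEM_OFFSET_KNOWN_P and MEM_SIZE_KNOWN_P flags matter because an unknown offset or size must be treated conservatively. A minimal sketch, assuming two MEMs that share the same MEM_EXPR: only when all four values are known can the byte ranges be proved disjoint. The struct and function names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical view of the four fields documented above. */
struct toy_mem_range {
  bool offset_known_p;  /* cf. MEM_OFFSET_KNOWN_P */
  long offset;          /* cf. MEM_OFFSET, bytes from the start of MEM_EXPR */
  bool size_known_p;    /* cf. MEM_SIZE_KNOWN_P */
  long size;            /* cf. MEM_SIZE, bytes */
};

/* Conservative overlap test: if any offset or size is unknown, assume
   the accesses may overlap. */
static bool toy_may_overlap(const struct toy_mem_range *a,
                            const struct toy_mem_range *b) {
  if (!a->offset_known_p || !b->offset_known_p
      || !a->size_known_p || !b->size_known_p)
    return true;
  /* Half-open byte ranges [offset, offset + size) intersect. */
  return a->offset < b->offset + b->size
         && b->offset < a->offset + a->size;
}
```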

| #define MEM_VOLATILE_P | ( | RTX | ) |

1 if RTX is a mem or asm_operand for a volatile reference.

Referenced by calculate_bb_reg_pressure(), decl_for_component_ref(), df_simulate_defs(), df_simulate_uses(), distribute_and_simplify_rtx(), get_reg_attrs(), maybe_emit_atomic_exchange(), n_occurrences(), remove_note(), rtx_unstable_p(), rtx_varies_p(), store_bit_field(), store_killed_before(), subst_reloads(), and volatile_refs_p().

| #define mode_mem_attrs (this_target_rtl->x_mode_mem_attrs) |

Referenced by set_mem_attributes().

| #define NEXT_INSN | ( | INSN | ) | XEXP (INSN, 2) |

Referenced by alloc_use_cost_map(), bb_note(), block_label(), can_fallthru(), cfg_layout_split_block(), cheap_bb_rtx_cost_p(), check_for_label_ref(), collect_one_action_chain(), compute_out(), count_reg_usage(), debug_rtx_list(), delete_insn(), delete_trivially_dead_insns(), df_simulate_initialize_backwards(), do_clobber_return_reg(), emit_barrier_after(), emit_pattern_after_setloc(), expand_copysign(), expand_dummy_function_end(), for_each_eh_label(), fprint_whex(), get_first_nonnote_insn(), get_stored_val(), insert_store(), insert_var_expansion_initialization(), inside_basic_block_p(), insn_live_p(), label_for_bb(), link_insn_into_chain(), make_pass_cleanup_barriers(), make_pass_free_cfg(), memref_referenced_p(), merge_blocks_move_predecessor_nojumps(), note_outside_basic_block_p(), prev_nondebug_insn(), prev_nonnote_nondebug_insn(), profile_function(), rebuild_jump_labels_chain(), record_insns(), reemit_insn_block_notes(), reload_combine_purge_reg_uses_after_ruid(), replace_rtx(), restore_operands(), rtl_delete_block(), rtl_force_nonfallthru(), rtl_split_block(), rtl_split_edge(), rtl_verify_edges(), rtl_verify_flow_info_1(), set_first_insn(), set_used_flags(), sprint_ul(), too_high_register_pressure_p(), try_crossjump_to_edge(), update_alignments(), and vt_get_decl_and_offset().

| #define NON_COMMUTATIVE_P | ( | X | ) |

1 if X is a non-commutative operator.

| #define NONDEBUG_INSN_P | ( | X | ) | (INSN_P (X) && !DEBUG_INSN_P (X)) |

Predicate yielding nonzero iff X is an insn that is not a debug insn.

Referenced by add_to_inherit(), cfg_layout_redirect_edge_and_branch_force(), decls_for_scope(), df_simulate_initialize_backwards(), get_last_insertion_point(), insert_store(), lra_update_dups(), memref_referenced_p(), num_loop_insns(), one_code_hoisting_pass(), one_pre_gcse_pass(), process_bb_node_lives(), restore_operands(), rtl_split_block(), should_hoist_expr_to_dom(), store_killed_in_pat(), use_crosses_set_p(), and web_main().
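A standalone sketch of walking an insn chain through NEXT_INSN-style links and filtering with NONDEBUG_INSN_P. The codes and struct are hypothetical simplifications. The predicate mirrors the definition above, which implies that INSN_P also holds for debug insns.

```c
#include <stddef.h>

/* Hypothetical codes standing in for INSN, JUMP_INSN, CALL_INSN,
   DEBUG_INSN, NOTE and CODE_LABEL. */
enum toy_code { TOY_INSN, TOY_JUMP_INSN, TOY_CALL_INSN, TOY_DEBUG_INSN,
                TOY_NOTE, TOY_CODE_LABEL };

struct toy_insn {
  enum toy_code code;
  struct toy_insn *next;  /* cf. NEXT_INSN */
};

/* cf. INSN_P: a real instruction, including debug insns. */
static int toy_insn_p(const struct toy_insn *x) {
  return x->code == TOY_INSN || x->code == TOY_JUMP_INSN
         || x->code == TOY_CALL_INSN || x->code == TOY_DEBUG_INSN;
}

/* cf. NONDEBUG_INSN_P: INSN_P but not DEBUG_INSN_P. */
static int toy_nondebug_insn_p(const struct toy_insn *x) {
  return toy_insn_p(x) && x->code != TOY_DEBUG_INSN;
}

/* Follow the next links and count insns, skipping notes, labels and
   debug insns. */
static size_t toy_count_nondebug(const struct toy_insn *first) {
  size_t n = 0;
  for (const struct toy_insn *p = first; p != NULL; p = p->next)
    if (toy_nondebug_insn_p(p))
      n++;
  return n;
}
```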

| #define NONJUMP_INSN_P | ( | X | ) | (GET_CODE (X) == INSN) |

Predicate yielding nonzero iff X is an insn that cannot jump.

Referenced by cheap_bb_rtx_cost_p(), clobber_return_register(), collect_one_action_chain(), fprint_whex(), and previous_insn().

| #define NOOP_MOVE_INSN_CODE INT_MAX |

Register Transfer Language (RTL) definitions for GCC Copyright (C) 1987-2013 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see http://www.gnu.org/licenses/.

Value used by some passes to "recognize" noop moves as valid instructions.

Referenced by multiple_sets().

| #define NOTE_BASIC_BLOCK | ( | INSN | ) | XCBBDEF (INSN, 4, NOTE) |

Referenced by rtl_verify_edges().

| #define NOTE_BLOCK | ( | INSN | ) | XCTREE (INSN, 4, NOTE) |

Referenced by add_debug_prefix_map().

| #define NOTE_CFI | ( | INSN | ) | XCCFI (INSN, 4, NOTE) |

Referenced by new_cfi_row().

| #define NOTE_DATA | ( | INSN | ) | RTL_CHECKC1 (INSN, 4, NOTE) |

In a NOTE that is a line number, this is a string for the file name that the line is in. We use the same field to record block numbers temporarily in NOTE_INSN_BLOCK_BEG and NOTE_INSN_BLOCK_END notes. (We avoid lots of casts between ints and pointers if we use a different macro for the block number.)

Opaque data.

Referenced by emit_debug_insn_after().

| #define NOTE_DELETED_LABEL_NAME | ( | INSN | ) | XCSTR (INSN, 4, NOTE) |

Referenced by delete_insn().

| #define NOTE_DURING_CALL_P | ( | RTX | ) | (RTL_FLAG_CHECK1 ("NOTE_VAR_LOCATION_DURING_CALL_P", (RTX), NOTE)->call) |

1 if RTX is emitted after a call, but it should take effect before the call returns.

| #define NOTE_EH_HANDLER | ( | INSN | ) | XCINT (INSN, 4, NOTE) |

Referenced by collect_one_action_chain().

| #define NOTE_INSN_BASIC_BLOCK_P | ( | INSN | ) | (NOTE_P (INSN) && NOTE_KIND (INSN) == NOTE_INSN_BASIC_BLOCK) |

Nonzero if INSN is a note marking the beginning of a basic block.

Referenced by can_fallthru(), delete_insn(), inner_loop_header_p(), rtl_split_edge(), rtl_verify_bb_pointers(), rtl_verify_edges(), and sjlj_emit_function_enter().

| #define NOTE_KIND | ( | INSN | ) | XCINT (INSN, 5, NOTE) |

In a NOTE that is a line number, this is the line number. Other kinds of NOTEs are identified by negative numbers here.

Referenced by block_fallthru(), create_cfi_notes(), delete_insn(), emit_debug_insn_after(), reemit_insn_block_notes(), and sjlj_emit_function_enter().

| #define NOTE_LABEL_NUMBER | ( | INSN | ) | XCINT (INSN, 4, NOTE) |

| #define NOTE_P | ( | X | ) | (GET_CODE (X) == NOTE) |

Predicate yielding nonzero iff X is a note insn.

Referenced by block_fallthru(), create_cfi_notes(), delete_insn_and_edges(), get_last_insn_anywhere(), reemit_insn_block_notes(), spill_hard_reg(), and try_crossjump_to_edge().