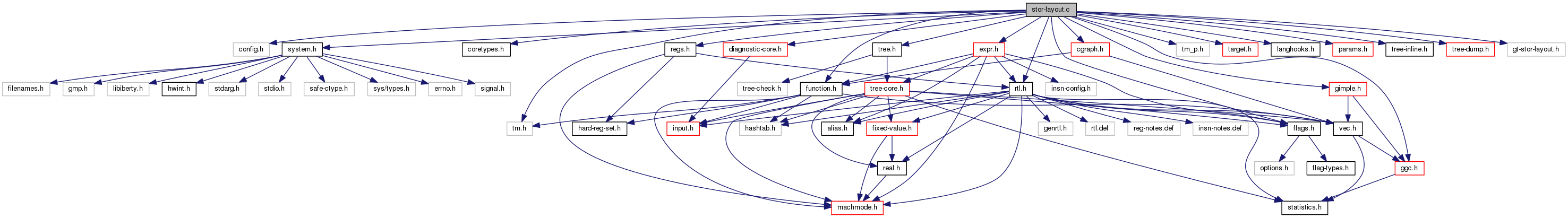

#include "config.h"
#include "system.h"
#include "coretypes.h"
#include "tm.h"
#include "tree.h"
#include "rtl.h"
#include "tm_p.h"
#include "flags.h"
#include "function.h"
#include "expr.h"
#include "diagnostic-core.h"
#include "ggc.h"
#include "target.h"
#include "langhooks.h"
#include "regs.h"
#include "params.h"
#include "cgraph.h"
#include "tree-inline.h"
#include "tree-dump.h"
#include "gimple.h"
#include "gt-stor-layout.h"

Functions | |

| static tree | self_referential_size (tree) |

| static void | finalize_record_size (record_layout_info) |

| static void | finalize_type_size (tree) |

| static void | place_union_field (record_layout_info, tree) |

| void | debug_rli (record_layout_info) |

| void | internal_reference_types () |

| tree | variable_size () |

| static tree | copy_self_referential_tree_r () |

| static tree | self_referential_size () |

| void | finalize_size_functions () |

| enum machine_mode | mode_for_size () |

| enum machine_mode | mode_for_size_tree () |

| enum machine_mode | smallest_mode_for_size () |

| enum machine_mode | int_mode_for_mode () |

| enum machine_mode | mode_for_vector () |

| unsigned int | get_mode_alignment () |

| unsigned int | element_precision () |

| static enum machine_mode | mode_for_array () |

| static void | do_type_align () |

| void | layout_decl () |

| void | relayout_decl () |

| record_layout_info | start_record_layout () |

| tree | bit_from_pos () |

| tree | byte_from_pos () |

| void | pos_from_bit (tree *poffset, tree *pbitpos, unsigned int off_align, tree pos) |

| void | normalize_offset () |

| DEBUG_FUNCTION void | debug_rli () |

| void | normalize_rli () |

| tree | rli_size_unit_so_far () |

| tree | rli_size_so_far () |

| unsigned int | update_alignment_for_field (record_layout_info rli, tree field, unsigned int known_align) |

| static void | place_union_field () |

| void | place_field () |

| static void | finalize_record_size () |

| void | compute_record_mode () |

| static void | finalize_type_size () |

| static tree | start_bitfield_representative () |

| static void | finish_bitfield_representative () |

| static void | finish_bitfield_layout () |

| void | finish_record_layout () |

| void | finish_builtin_struct (tree type, const char *name, tree fields, tree align_type) |

| void | layout_type () |

| enum machine_mode | vector_type_mode () |

| tree | make_signed_type () |

| tree | make_unsigned_type () |

| tree | make_fract_type () |

| tree | make_accum_type () |

| void | initialize_sizetypes () |

| void | set_min_and_max_values_for_integral_type (tree type, int precision, bool is_unsigned) |

| void | fixup_signed_type () |

| void | fixup_unsigned_type () |

| enum machine_mode | get_best_mode (int bitsize, int bitpos, unsigned HOST_WIDE_INT bitregion_start, unsigned HOST_WIDE_INT bitregion_end, unsigned int align, enum machine_mode largest_mode, bool volatilep) |

| void | get_mode_bounds (enum machine_mode mode, int sign, enum machine_mode target_mode, rtx *mmin, rtx *mmax) |

Variables | |

| tree | sizetype_tab [(int) stk_type_kind_last] |

| unsigned int | maximum_field_alignment = TARGET_DEFAULT_PACK_STRUCT * BITS_PER_UNIT |

| static int | reference_types_internal = 0 |

| static vec< tree, va_gc > * | size_functions |

Function Documentation

| tree bit_from_pos | ( | ) |

Return the combined bit position for the byte offset OFFSET and the bit position BITPOS.

These functions operate on byte and bit positions present in FIELD_DECLs and assume that these expressions result in no (intermediate) overflow. This assumption is necessary to fold the expressions as much as possible, so as to avoid creating artificially variable-sized types in languages supporting variable-sized types like Ada.

References print_node_brief(), and targetm.

| tree byte_from_pos | ( | ) |

Return the combined truncated byte position for the byte offset OFFSET and the bit position BITPOS.
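The two conversions above can be illustrated with plain integers. This is only a sketch: the real functions operate on `tree` size expressions and fold them, whereas the hypothetical helpers `sketch_bit_from_pos` and `sketch_byte_from_pos` below use `uint64_t`, and `SKETCH_BITS_PER_UNIT` is assumed to be 8.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption for this sketch: 8 bits per byte.  In GCC the real
   BITS_PER_UNIT is defined by the target.  */
#define SKETCH_BITS_PER_UNIT 8

/* Combined bit position for OFFSET bytes plus BITPOS bits.
   Like the documented functions, this assumes no overflow.  */
uint64_t sketch_bit_from_pos(uint64_t offset, uint64_t bitpos)
{
    assert(offset <= (UINT64_MAX - bitpos) / SKETCH_BITS_PER_UNIT);
    return offset * SKETCH_BITS_PER_UNIT + bitpos;
}

/* Combined byte position, truncated: whole bytes contained in BITPOS
   are added to OFFSET and any leftover bits are dropped.  */
uint64_t sketch_byte_from_pos(uint64_t offset, uint64_t bitpos)
{
    return offset + bitpos / SKETCH_BITS_PER_UNIT;
}
```

For instance, a byte offset of 4 with a bit position of 21 gives a combined bit position of 53 and a truncated byte position of 6.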

| void compute_record_mode | ( | ) |

Compute the TYPE_MODE for the TYPE (which is a RECORD_TYPE).

Most RECORD_TYPEs have BLKmode, so we start off assuming that. However, if possible, we use a mode that fits in a register instead, in order to allow for better optimization down the line.

A record which has any BLKmode members must itself be BLKmode; it can't go in a register. Unless the member is BLKmode only because it isn't aligned.

If this field is the whole struct, remember its mode so that, say, we can put a double in a class into a DF register instead of forcing it to live in the stack.

With some targets, it is sub-optimal to access an aligned BLKmode structure as a scalar.

If we only have one real field, use its mode if that mode's size matches the type's size. This only applies to RECORD_TYPE. This does not apply to unions.

If structure's known alignment is less than what the scalar mode would need, and it matters, then stick with BLKmode.

If this is the only reason this type is BLKmode, then don't force containing types to be BLKmode.

References SET_TYPE_MODE, TYPE_ALIGN, TYPE_SIZE, TYPE_SIZE_UNIT, and TYPE_USER_ALIGN.

| static tree copy_self_referential_tree_r | ( | ) |

Similar to copy_tree_r but do not copy component references involving PLACEHOLDER_EXPRs. These nodes are spotted in find_placeholder_in_expr and substituted in substitute_in_expr.

Stop at types, decls, constants like copy_tree_r.

This is the pattern built in ada/make_aligning_type.

Default case: the component reference.

We're not supposed to have them in self-referential size trees because we wouldn't properly control when they are evaluated. However, not creating superfluous SAVE_EXPRs requires accurate tracking of readonly-ness all the way down to here, which we cannot always guarantee in practice. So punt in this case.

| void debug_rli | ( | record_layout_info | ) |

| DEBUG_FUNCTION void debug_rli | ( | ) |

Print debugging information about the information in RLI.

The ms_struct code is the only code that uses this.

References DECL_BIT_FIELD_TYPE, DECL_PACKED, DECL_SIZE, integer_zerop(), MAX, maximum_field_alignment, MIN, NULL_TREE, targetm, and TYPE_ALIGN.

| static inline void do_type_align | ( | ) |

Subroutine of layout_decl: Force alignment required for the data type. But if the decl itself wants greater alignment, don't override that.

| unsigned int element_precision | ( | ) |

Return the precision of the mode, or for a complex or vector mode the precision of the mode of its elements.

| static void finalize_record_size | ( | record_layout_info | ) |

| static void finalize_record_size | ( | ) |

Assuming that all the fields have been laid out, this function uses RLI to compute the final TYPE_SIZE, TYPE_ALIGN, etc. for the type indicated by RLI.

Now we want just byte and bit offsets, so set the offset alignment to be a byte and then normalize.

Determine the desired alignment.

Compute the size so far. Be sure to allow for extra bits in the size in bytes. We have guaranteed above that it will be no more than a single byte.

Round the size up to be a multiple of the required alignment.
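The last three steps can be sketched with plain integers. This is a minimal illustration, not the GCC code: the real function works on `tree` expressions inside a `record_layout_info`, while the hypothetical `sketch_finalize_record_size` below takes an already byte-normalized position (so fewer than 8 leftover bits) and an alignment in bits, assuming 8-bit bytes.

```c
#include <assert.h>
#include <stdint.h>

struct sketch_record_size {
    uint64_t size_bits;   /* plays the role of TYPE_SIZE */
    uint64_t size_bytes;  /* plays the role of TYPE_SIZE_UNIT */
};

/* Round VALUE up to the next multiple of DIVISOR.  */
uint64_t sketch_round_up(uint64_t value, uint64_t divisor)
{
    assert(divisor > 0);
    return (value + divisor - 1) / divisor * divisor;
}

struct sketch_record_size
sketch_finalize_record_size(uint64_t offset_bytes, uint64_t bitpos,
                            uint64_t align_bits)
{
    struct sketch_record_size r;
    uint64_t unpadded_bits, unpadded_bytes;

    /* After normalizing to byte alignment at most 7 bits are left over.  */
    assert(bitpos < 8);
    assert(align_bits >= 8 && align_bits % 8 == 0);

    unpadded_bits = offset_bytes * 8 + bitpos;
    /* Allow for the extra bits: they occupy one more (partial) byte.  */
    unpadded_bytes = offset_bytes + (bitpos != 0 ? 1 : 0);

    /* Round both sizes up to a multiple of the required alignment.  */
    r.size_bits = sketch_round_up(unpadded_bits, align_bits);
    r.size_bytes = sketch_round_up(unpadded_bytes, align_bits / 8);
    return r;
}
```

With 5 bytes and 3 bits laid out so far and a 32-bit alignment, the unpadded size is 43 bits (6 bytes), which rounds up to 64 bits (8 bytes).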

| void finalize_size_functions | ( | void | ) |

Take, queue and compile all the size functions. It is essential that the size functions be gimplified at the very end of the compilation in order to guarantee transparent handling of self-referential sizes. Otherwise the GENERIC inliner would not be able to inline them back at each of their call sites, thus creating artificial non-constant size expressions which would trigger nasty problems later on.

| static void finalize_type_size | ( | tree | ) |

| static void finalize_type_size | ( | ) |

Compute TYPE_SIZE and TYPE_ALIGN for TYPE, once it has been laid out.

Normally, use the alignment corresponding to the mode chosen. However, where strict alignment is not required, avoid over-aligning structures, since most compilers do not do this alignment.

Don't override a larger alignment requirement coming from a user alignment of one of the fields.

Do machine-dependent extra alignment.

If we failed to find a simple way to calculate the unit size of the type, find it by division.

TYPE_SIZE (type) is computed in bitsizetype. After the division, the result will fit in sizetype. We will get more efficient code using sizetype, so we force a conversion.

Evaluate nonconstant sizes only once, either now or as soon as safe.

Also layout any other variants of the type.

Record layout info of this variant.

Copy it into all variants.

| static void finish_bitfield_layout | ( | ) |

Compute and set FIELD_DECLs for the underlying objects we should use for bitfield access for the structure laid out with RLI.

Unions would be special, for the ease of type-punning optimizations we could use the underlying type as hint for the representative if the bitfield would fit and the representative would not exceed the union in size.

In the C++ memory model, consecutive bit fields in a structure are considered one memory location and updating a memory location may not store into adjacent memory locations.

Start new representative.

Finish off new representative.

Zero-size bitfields finish off a representative and do not have a representative themselves. This is required by the C++ memory model.

We assume that either DECL_FIELD_OFFSET of the representative and each bitfield member is a constant or they are equal. This is because we need to be able to compute the bit-offset of each field relative to the representative in get_bit_range during RTL expansion. If these constraints are not met, simply force a new representative to be generated. That will at most generate worse code but still maintain correctness with respect to the C++ memory model.

References bitsize_int, error_mark_node, gcc_assert, gcc_unreachable, GET_MODE_ALIGNMENT, GET_MODE_BITSIZE, GET_MODE_SIZE, int_const_binop(), mode_for_size(), mode_for_vector(), SET_TYPE_MODE, size_int, smallest_mode_for_size(), targetm, TREE_CODE, TREE_TYPE, TYPE_ALIGN, TYPE_MODE, TYPE_PRECISION, TYPE_SATURATING, TYPE_SIZE, TYPE_SIZE_UNIT, TYPE_UNSIGNED, TYPE_USER_ALIGN, and TYPE_VECTOR_SUBPARTS.

| static void finish_bitfield_representative | ( | ) |

Finish up a bitfield group that was started by creating the underlying object REPR with the last field in the bitfield group FIELD.

Round up bitsize to multiples of BITS_PER_UNIT.

Now nothing tells us how to pad out bitsize ...

If there was an error, the field may be not laid out correctly. Don't bother to do anything.

If the group ends within a bitfield nextf does not need to be aligned to BITS_PER_UNIT. Thus round up.

??? If you consider that tail-padding of this struct might be re-used when deriving from it we cannot really do the following and thus need to set maxsize to bitsize? Also we cannot generally rely on maxsize to fold to an integer constant, so use bitsize as fallback for this case.

Only if we don't artificially break up the representative in the middle of a large bitfield with different possibly overlapping representatives. And all representatives start at byte offset.

Find the smallest nice mode to use.

We really want a BLKmode representative only as a last resort, considering the member b in

struct { int a : 7; int b : 17; int c; } __attribute__((packed));

Otherwise we simply want to split the representative up allowing for overlaps within the bitfield region as required for

struct { int a : 7; int b : 7; int c : 10; int d; } __attribute__((packed));

[0, 15] HImode for a and b, [8, 23] HImode for c.

Remember whether the bitfield group is at the end of the structure or not.

References start_bitfield_representative().

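| void finish_builtin_struct | ( | tree | type, |

| const char * | name, | ||

| tree | fields, | ||

| tree | align_type | ||

| ) |

Finish processing a builtin RECORD_TYPE type TYPE. Its name is NAME, its fields are chained in reverse on FIELDS.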

If ALIGN_TYPE is non-null, it is given the same alignment as ALIGN_TYPE.

References build_int_cst(), double_int_to_tree(), fold_convert, integer_zerop(), size_binop, size_zero_node, sizetype, ssizetype, TREE_CODE, tree_int_cst_lt(), tree_to_double_int(), TREE_TYPE, TYPE_MAX_VALUE, TYPE_MIN_VALUE, TYPE_PRECISION, TYPE_SIZE, and TYPE_UNSIGNED.

| void finish_record_layout | ( | ) |

Do all of the work required to layout the type indicated by RLI, once the fields have been laid out. This function will call `free' for RLI, unless FREE_P is false. Passing a value other than false for FREE_P is bad practice; this option only exists to support the G++ 3.2 ABI.

Compute the final size.

Compute the TYPE_MODE for the record.

Perform any last tweaks to the TYPE_SIZE, etc.

Compute bitfield representatives.

Propagate TYPE_PACKED to variants. With C++ templates, handle_packed_attribute is too early to do this.

Lay out any static members. This is done now because their type may use the record's type.

Clean up.

References targetm.

| void fixup_signed_type | ( | ) |

Set the extreme values of TYPE based on its precision in bits, then lay it out. Used when make_signed_type won't do because the tree code is not INTEGER_TYPE. E.g. for Pascal, when the -fsigned-char option is given.

We cannot properly represent constants greater than HOST_BITS_PER_DOUBLE_INT, but we still need the types, as they are used by i386 vector extensions and friends.

Lay out the type: set its alignment, size, etc.

References bit_field_mode_iterator::prefer_smaller_modes().

| void fixup_unsigned_type | ( | ) |

Set the extreme values of TYPE based on its precision in bits, then lay it out. This is used both in `make_unsigned_type' and for enumeral types.

We cannot properly represent constants greater than HOST_BITS_PER_DOUBLE_INT, but we still need the types, as they are used by i386 vector extensions and friends.

Lay out the type: set its alignment, size, etc.

| enum machine_mode get_best_mode | ( | int | bitsize, |

| int | bitpos, | ||

| unsigned HOST_WIDE_INT | bitregion_start, | ||

| unsigned HOST_WIDE_INT | bitregion_end, | ||

| unsigned int | align, | ||

| enum machine_mode | largest_mode, | ||

| bool | volatilep | ||

| ) |

Find the best machine mode to use when referencing a bit field of length BITSIZE bits starting at BITPOS.

BITREGION_START is the bit position of the first bit in this sequence of bit fields. BITREGION_END is the last bit in this sequence. If these two fields are non-zero, we should restrict the memory access to that range. Otherwise, we are allowed to touch any adjacent non bit-fields.

The underlying object is known to be aligned to a boundary of ALIGN bits. If LARGEST_MODE is not VOIDmode, it means that we should not use a mode larger than LARGEST_MODE (usually SImode).

If no mode meets all these conditions, we return VOIDmode.

If VOLATILEP is false and SLOW_BYTE_ACCESS is false, we return the smallest mode meeting these conditions.

If VOLATILEP is false and SLOW_BYTE_ACCESS is true, we return the largest mode (but a mode no wider than UNITS_PER_WORD) that meets all the conditions.

If VOLATILEP is true the narrow_volatile_bitfields target hook is used to decide which of the above modes should be used.
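The simplest of these cases (VOLATILEP false, SLOW_BYTE_ACCESS false) can be sketched as a search for the narrowest access width that contains the whole bit field. This is a simplified illustration, not the GCC implementation: the hypothetical `sketch_best_width` works on widths in bits rather than machine modes, ignores the bit region and target hooks, and assumes that a mode of width W also requires W-bit alignment. It returns 0 where the real function would return VOIDmode, and a `largest_width` of 0 stands for "no limit".

```c
#include <assert.h>

unsigned sketch_best_width(unsigned bitsize, unsigned bitpos,
                           unsigned align, unsigned largest_width)
{
    /* Hypothetical integer mode widths, narrowest first.  */
    static const unsigned widths[] = { 8, 16, 32, 64 };
    unsigned i;

    assert(bitsize > 0);
    for (i = 0; i < sizeof widths / sizeof widths[0]; i++) {
        unsigned w = widths[i];

        /* Wider candidates would need more alignment than is known.  */
        if (w > align)
            break;
        /* Do not exceed the caller's largest permitted mode.  */
        if (largest_width != 0 && w > largest_width)
            break;
        /* The field must lie entirely inside one aligned W-bit unit.  */
        if (bitpos % w + bitsize <= w)
            return w;
    }
    return 0; /* no width meets all the conditions */
}
```

Under these assumptions, a 3-bit field starting at bit 6 of a 32-bit-aligned object straddles a byte boundary, so the sketch picks a 16-bit access; the same kind of field in an object known only to be byte-aligned yields no usable width.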

??? For historical reasons, reject modes that would normally receive greater alignment, even if unaligned accesses are acceptable. This has both advantages and disadvantages. Removing this check means that something like:

struct s { unsigned int x; unsigned int y; };

int f (struct s *s) { return s->x == 0 && s->y == 0; }

can be implemented using a single load and compare on 64-bit machines that have no alignment restrictions. For example, on powerpc64-linux-gnu, we would generate:

ld 3,0(3)

cntlzd 3,3

srdi 3,3,6

blr

rather than:

lwz 9,0(3)

cmpwi 7,9,0

bne 7,.L3

lwz 3,4(3)

cntlzw 3,3

srwi 3,3,5

extsw 3,3

blr

.p2align 4,,15

.L3:

li 3,0

blr

However, accessing more than one field can make life harder for the gimple optimizers. For example, gcc.dg/vect/bb-slp-5.c has a series of unsigned short copies followed by a series of unsigned short comparisons. With this check, both the copies and comparisons remain 16-bit accesses and FRE is able to eliminate the latter. Without the check, the comparisons can be done using 2 64-bit operations, which FRE isn't able to handle in the same way.

Either way, it would probably be worth disabling this check during expand. One particular example where removing the check would help is the get_best_mode call in store_bit_field. If we are given a memory bitregion of 128 bits that is aligned to a 64-bit boundary, and the bitfield we want to modify is in the second half of the bitregion, this check causes store_bit_field to turn the memory into a 64-bit reference to the first half of the region. We later use adjust_bitfield_address to get a reference to the correct half, but doing so looks to adjust_bitfield_address as though we are moving past the end of the original object, so it drops the associated MEM_EXPR and MEM_OFFSET. Removing the check causes store_bit_field to keep a 128-bit memory reference, so that the final bitfield reference still has a MEM_EXPR and MEM_OFFSET.

Referenced by store_bit_field().

| unsigned int get_mode_alignment | ( | ) |

Return the alignment of MODE. This will be bounded by 1 and BIGGEST_ALIGNMENT.

References DECL_ALIGN, TREE_CODE, and TYPE_ALIGN.

| void get_mode_bounds | ( | enum machine_mode | mode, |

| int | sign, | ||

| enum machine_mode | target_mode, | ||

| rtx * | mmin, | ||

| rtx * | mmax | ||

| ) |

Gets minimal and maximal values for MODE (signed or unsigned depending on SIGN). The returned constants are made to be usable in TARGET_MODE.

Referenced by simplify_relational_operation_1().

| void initialize_sizetypes | ( | void | ) |

Initialize sizetypes so layout_type can use them.

Get sizetypes precision from the SIZE_TYPE target macro.

Create stubs for sizetype and bitsizetype so we can create constants.

Now layout both types manually.

Create the signed variants of *sizetype.

| enum machine_mode int_mode_for_mode | ( | ) |

Find an integer mode of the exact same size, or BLKmode on failure.

... fall through ...

References GET_MODE_BITSIZE, GET_MODE_CLASS, GET_MODE_INNER, GET_MODE_NUNITS, GET_MODE_WIDER_MODE, have_regs_of_mode, mode_for_size(), SCALAR_ACCUM_MODE_P, SCALAR_FLOAT_MODE_P, SCALAR_FRACT_MODE_P, SCALAR_UACCUM_MODE_P, and SCALAR_UFRACT_MODE_P.

| void internal_reference_types | ( | void | ) |

Show that REFERENCE_TYPES are internal and should use address_mode. Called only by front end.

References TREE_CONSTANT.

| void layout_decl | ( | ) |

Set the size, mode and alignment of a ..._DECL node. TYPE_DECL does need this for C++. Note that LABEL_DECL and CONST_DECL nodes do not need this, and FUNCTION_DECL nodes have them set up in a special (and simple) way. Don't call layout_decl for them.

KNOWN_ALIGN is the amount of alignment we can assume this decl has with no special effort. It is relevant only for FIELD_DECLs and depends on the previous fields. All that matters about KNOWN_ALIGN is which powers of 2 divide it. If KNOWN_ALIGN is 0, it means, "as much alignment as you like": the record will be aligned to suit.

Usually the size and mode come from the data type without change, however, the front-end may set the explicit width of the field, so its size may not be the same as the size of its type. This happens with bitfields, of course (an `int' bitfield may be only 2 bits, say), but it also happens with other fields. For example, the C++ front-end creates zero-sized fields corresponding to empty base classes, and depends on layout_type setting DECL_FIELD_BITPOS correctly for the field.

Set the size in bytes from the size in bits.

If we have already set the mode, don't set it again since we can be called twice for FIELD_DECLs.

For non-fields, update the alignment from the type.

For fields, it's a bit more complicated...

A zero-length bit-field affects the alignment of the next field. In essence such bit-fields are not influenced by any packing due to #pragma pack or attribute packed.

See if we can use an ordinary integer mode for a bit-field. Conditions are: a fixed size that is correct for another mode, occupying a complete byte or bytes on proper boundary.

Turn off DECL_BIT_FIELD if we won't need it set.

Don't touch DECL_ALIGN. For other packed fields, go ahead and round up; we'll reduce it again below. We want packing to supersede USER_ALIGN inherited from the type, but defer to alignment explicitly specified on the field decl.

If the field is packed and not explicitly aligned, give it the minimum alignment. Note that do_type_align may set DECL_USER_ALIGN, so we need to check old_user_align instead.

Some targets (e.g. i386, VMS) limit struct field alignment to a lower boundary than alignment of variables unless it was overridden by attribute aligned.

Should this be controlled by DECL_USER_ALIGN, too?

Evaluate nonconstant size only once, either now or as soon as safe.

If requested, warn about definitions of large data objects.

If the RTL was already set, update its mode and mem attributes.

References targetm.

Referenced by build2_stat(), emit_local(), normalize_offset(), remap_vla_decls(), and use_register_for_decl().

| void layout_type | ( | ) |

Calculate the mode, size, and alignment for TYPE. For an array type, calculate the element separation as well. Record TYPE on the chain of permanent or temporary types so that dbxout will find out about it.

TYPE_SIZE of a type is nonzero if the type has been laid out already. layout_type does nothing on such a type.

If the type is incomplete, its TYPE_SIZE remains zero.

Do nothing if type has been laid out before.

This kind of type is the responsibility of the language-specific code.

TYPE_MODE (type) has been set already.

Find an appropriate mode for the vector type.

For vector types, we do not default to the mode's alignment. Instead, query a target hook, defaulting to natural alignment. This prevents ABI changes depending on whether or not native vector modes are supported.

However, if the underlying mode requires a bigger alignment than what the target hook provides, we cannot use the mode. For now, simply reject that case.

This is an incomplete type and so doesn't have a size.

A pointer might be MODE_PARTIAL_INT, but ptrdiff_t must be integral.

It's hard to see what the mode and size of a function ought to be, but we do know the alignment is FUNCTION_BOUNDARY, so make it consistent with that.

We need to know both bounds in order to compute the size.

Make sure that an array of zero-sized element is zero-sized regardless of its extent.

The computation should happen in the original signedness so that (possible) negative values are handled appropriately when determining overflow.

??? When it is obvious that the range is signed represent it using ssizetype.

??? We have no way to distinguish a null-sized array from an array spanning the whole sizetype range, so we arbitrarily decide that [0, -1] is the only valid representation.

If we know the size of the element, calculate the total size directly, rather than do some division thing below. This optimization helps Fortran assumed-size arrays (where the size of the array is determined at runtime) substantially.

Now round the alignment and size, using machine-dependent criteria if any.

BLKmode elements force BLKmode aggregate; else extract/store fields may lose.

When the element size is constant, check that it is at least as large as the element alignment.

If TYPE_SIZE_UNIT overflowed, then it is certainly larger than TYPE_ALIGN_UNIT.

Initialize the layout information.

If this is a QUAL_UNION_TYPE, we want to process the fields in the reverse order in building the COND_EXPR that denotes its size. We reverse them again later.

Place all the fields.

Finish laying out the record.

Compute the final TYPE_SIZE, TYPE_ALIGN, etc. for TYPE. For records and unions, finish_record_layout already called this function.

We should never see alias sets on incomplete aggregates. And we should not call layout_type on not incomplete aggregates.

Referenced by finalize_task_copyfn(), find_combined_for(), remap_vla_decls(), simple_cst_equal(), and tree_map_base_eq().

| tree make_accum_type | ( | ) |

Create and return a type for accum of PRECISION bits, UNSIGNEDP, and SATP.

Lay out the type: set its alignment, size, etc.

| tree make_fract_type | ( | ) |

Create and return a type for fract of PRECISION bits, UNSIGNEDP, and SATP.

Lay out the type: set its alignment, size, etc.

| tree make_signed_type | ( | ) |

Create and return a type for signed integers of PRECISION bits.

| tree make_unsigned_type | ( | ) |

Create and return a type for unsigned integers of PRECISION bits.

References HOST_BITS_PER_DOUBLE_INT, set_min_and_max_values_for_integral_type(), TYPE_PRECISION, and TYPE_UNSIGNED.

| static enum machine_mode mode_for_array | ( | ) |

Return the natural mode of an array, given that it is SIZE bytes in total and has elements of type ELEM_TYPE.

One-element arrays get the component type's mode.

References DECL_RTL_IF_SET, DECL_SOURCE_LOCATION, error_mark_node, gcc_assert, NULL_RTX, TREE_CODE, TREE_TYPE, gdbhooks::TYPE_DECL, and void_type_node.

| enum machine_mode mode_for_size | ( | ) |

Return the machine mode to use for a nonscalar of SIZE bits. The mode must be in class MCLASS, and have exactly that many value bits; it may have padding as well. If LIMIT is nonzero, modes of wider than MAX_FIXED_MODE_SIZE will not be used.

Get the first mode which has this size, in the specified class.
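The lookup can be sketched over a small table. This is an illustration only: the mode table, the enum `sketch_mode`, and the value 64 for `SKETCH_MAX_FIXED_MODE_SIZE` are assumptions made for the example, whereas GCC walks the modes of the requested class using the target's real definitions. As in GCC, failure is reported as BLKmode.

```c
#include <assert.h>

enum sketch_mode { SK_BLK, SK_QI, SK_HI, SK_SI, SK_DI, SK_TI };

struct sketch_mode_info {
    enum sketch_mode mode;
    unsigned precision;   /* number of value bits */
};

/* Hypothetical integer-class modes, narrowest first.  */
static const struct sketch_mode_info sketch_int_modes[] = {
    { SK_QI, 8 }, { SK_HI, 16 }, { SK_SI, 32 }, { SK_DI, 64 }, { SK_TI, 128 },
};

/* Assumed limit for this sketch; the real value is target-specific.  */
#define SKETCH_MAX_FIXED_MODE_SIZE 64

enum sketch_mode sketch_mode_for_size(unsigned size, int limit)
{
    unsigned i;

    assert(size > 0);
    /* With LIMIT set, sizes beyond the fixed-mode limit are rejected.  */
    if (limit && size > SKETCH_MAX_FIXED_MODE_SIZE)
        return SK_BLK;

    /* Take the first mode with exactly SIZE value bits.  */
    for (i = 0; i < sizeof sketch_int_modes / sizeof sketch_int_modes[0]; i++)
        if (sketch_int_modes[i].precision == size)
            return sketch_int_modes[i].mode;

    return SK_BLK;
}
```

Note that the match is exact: a 24-bit request finds no mode in this table, even though a 32-bit mode could hold the value.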

References gcc_unreachable, GET_CLASS_NARROWEST_MODE, GET_MODE_PRECISION, and GET_MODE_WIDER_MODE.

| enum machine_mode mode_for_size_tree | ( | ) |

Similar, except passed a tree node.

| enum machine_mode mode_for_vector | ( | ) |

Find a mode that is suitable for representing a vector with NUNITS elements of mode INNERMODE. Returns BLKmode if there is no suitable mode.

First, look for a supported vector type.

Do not check vector_mode_supported_p here. We'll do that later in vector_type_mode.

For integers, try mapping it to a same-sized scalar mode.

References BITS_PER_UNIT, MAX, MIN, and mode_base_align.

| void normalize_offset | ( | ) |

Given a pointer to bit and byte offsets and an offset alignment, normalize the offsets so they are within the alignment.

If the bit position is now larger than it should be, adjust it downwards.
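A minimal integer sketch of this normalization follows. The real function updates two `tree` expressions through pointers; the hypothetical `sketch_normalize_offset` below returns a small struct instead and assumes 8-bit bytes and an `off_align` that is a whole number of bytes.

```c
#include <assert.h>
#include <stdint.h>

struct sketch_pos {
    uint64_t offset;  /* byte offset */
    uint64_t bitpos;  /* bit position relative to the byte offset */
};

struct sketch_pos
sketch_normalize_offset(uint64_t offset, uint64_t bitpos, unsigned off_align)
{
    struct sketch_pos p;

    assert(off_align >= 8 && off_align % 8 == 0);
    p.offset = offset;
    p.bitpos = bitpos;

    if (p.bitpos >= off_align) {
        /* Number of whole OFF_ALIGN-bit units contained in the bit position.  */
        uint64_t extra_aligns = p.bitpos / off_align;

        /* Move those units into the byte offset ...  */
        p.offset += extra_aligns * (off_align / 8);
        /* ... and keep only the remainder as the bit position.  */
        p.bitpos %= off_align;
    }
    return p;
}
```

For example, byte offset 4 with bit position 70 and a 32-bit offset alignment normalizes to byte offset 12 and bit position 6; the combined position (102 bits) is unchanged.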

References DECL_ALIGN, DECL_USER_ALIGN, layout_decl(), TREE_CODE, and TREE_TYPE.

Referenced by pos_from_bit().

| void normalize_rli | ( | ) |

Given an RLI with a possibly-incremented BITPOS, adjust OFFSET and BITPOS if necessary to keep BITPOS below OFFSET_ALIGN.

| void place_field | ( | ) |

RLI contains information about the layout of a RECORD_TYPE. FIELD is a FIELD_DECL to be added after those fields already present in T. (FIELD is not actually added to the TYPE_FIELDS list here; callers that desire that behavior must manually perform that step.)

The alignment required for FIELD.

The alignment FIELD would have if we just dropped it into the record as it presently stands.

The type of this field.

If FIELD is static, then treat it like a separate variable, not really like a structure field. If it is a FUNCTION_DECL, it's a method. In both cases, all we do is lay out the decl, and we do it *after* the record is laid out.

Enumerators and enum types which are local to this class need not be laid out. Likewise for initialized constant fields.

Unions are laid out very differently than records, so split that code off to another function.

Place this field at the current allocation position, so we maintain monotonicity.

Work out the known alignment so far. Note that A & (-A) is the value of the least-significant bit in A that is one.
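The A & (-A) idiom can be tried out in isolation. The sketch below is a simplification with hypothetical names: it handles only constant positions, assumes 8-bit bytes, and returns 0 for position zero, which layout_decl documents as "as much alignment as you like". The handling of non-constant offsets in the real code is not modeled.

```c
#include <assert.h>
#include <stdint.h>

/* Value of the least-significant set bit of A (0 if A is 0).
   Unary minus on an unsigned type wraps, so this is well defined.  */
uint64_t sketch_lowest_set_bit(uint64_t a)
{
    return a & -a;
}

/* Alignment, in bits, that a field placed at OFFSET_BYTES bytes plus
   BITPOS bits is already known to have.  */
uint64_t sketch_known_align(uint64_t offset_bytes, uint64_t bitpos)
{
    if (bitpos != 0)
        return sketch_lowest_set_bit(bitpos);
    if (offset_bytes != 0)
        return 8 * sketch_lowest_set_bit(offset_bytes);
    return 0; /* start of the record: no constraint from the position */
}
```

A field at byte offset 12 is known to be 32-bit aligned (12 = 4 * 3, and 4 bytes is 32 bits), while one at byte offset 12 plus 6 bits is only known to be 2-bit aligned.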

Don't warn if DECL_PACKED was set by the type.

Does this field automatically have alignment it needs by virtue of the fields that precede it and the record's own alignment?

No, we need to skip space before this field.

Bump the cumulative size to multiple of field alignment.

If the alignment is still within offset_align, just align the bit position.

First adjust OFFSET by the partial bits, then align.

Handle compatibility with PCC. Note that if the record has any variable-sized fields, we need not worry about compatibility.

See the docs for TARGET_MS_BITFIELD_LAYOUT_P for details.

A subtlety:

When a bit field is inserted into a packed record, the whole size of the underlying type is used by one or more same-size adjacent bitfields. (That is, if it's long:3, 32 bits is used in the record, and any additional adjacent long bitfields are packed into the same chunk of 32 bits. However, if the size changes, a new field of that size is allocated.) In an unpacked record, this is the same as using alignment, but not equivalent when packing.

Note: for compatibility, we use the type size, not the type alignment to determine alignment, since that matches the documentation.

This is a bitfield if it exists.

If both are bitfields, nonzero, and the same size, this is the middle of a run. Zero declared size fields are special and handled as "end of run". (Note: it's nonzero declared size, but equal type sizes!) (Since we know that both the current and previous fields are bitfields by the time we check it, DECL_SIZE must be present for both.)

We're in the middle of a run of equal type size fields; make sure we realign if we run out of bits. (Not decl size, type size!)

Out of bits; bump up to next 'word'.

End of a run: if leaving a run of bitfields of the same type size, we have to "use up" the rest of the bits of the type size.

Compute the new position as the sum of the size for the prior type and where we first started working on that type. Note: since the beginning of the field was aligned then of course the end will be too. No round needed.

We "use up" size zero fields; the code below should behave as if the prior field was not a bitfield.

Cause a new bitfield to be captured, either this time (if currently a bitfield) or next time we see one.

If we're starting a new run of same type size bitfields (or a run of non-bitfields), set up the "first of the run" fields.

That is, if the current field is not a bitfield, or if there was a prior bitfield the type sizes differ, or if there wasn't a prior bitfield the size of the current field is nonzero.

Note: we must be sure to test ONLY the type size if there was a prior bitfield and ONLY for the current field being zero if there wasn't.

Never smaller than a byte for compatibility.

(When not a bitfield), we could be seeing a flex array (with no DECL_SIZE). Since we won't be using remaining_in_alignment until we see a bitfield (and come by here again) we just skip calculating it.

Now align (conventionally) for the new type.

If we really aligned, don't allow subsequent bitfields to undo that.

Offset so far becomes the position of this field after normalizing.

If this field ended up more aligned than we thought it would be (we approximate this by seeing if its position changed), lay out the field again; perhaps we can use an integral mode for it now.

ACTUAL_ALIGN is still the actual alignment *within the record*. Store / extract bit field operations will check the alignment of the record against the mode of bit fields.

Now add size of this field to the size of the record. If the size is not constant, treat the field as being a multiple of bytes and just adjust the offset, resetting the bit position. Otherwise, apportion the size amongst the bit position and offset. First handle the case of an unspecified size, which can happen when we have an invalid nested struct definition, such as struct j { struct j { int i; } }. The error message is printed in finish_struct. Do nothing.

If we ended a bitfield before the full length of the type then pad the struct out to the full length of the last type.

| static void place_union_field | ( | record_layout_info | , | tree | ) |

| static void place_union_field | ( | ) |

Called from place_field to handle unions.

If this is an ERROR_MARK return after having set the field at the start of the union. This helps when parsing invalid fields.

We assume the union's size will be a multiple of a byte so we don't bother with BITPOS.

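| void pos_from_bit | ( | tree * | poffset, |

| tree * | pbitpos, | ||

| unsigned int | off_align, | ||

| tree | pos | ||

| ) |

Split the bit position POS into a byte offset *POFFSET and a bit position *PBITPOS with the byte offset aligned to OFF_ALIGN bits.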

References normalize_offset().

| void relayout_decl | ( | ) |

Given a VAR_DECL, PARM_DECL or RESULT_DECL, clears the results of a previous call to layout_decl and calls it again.

References bitsize_unit_node, bitsizetype, fold_convert, size_binop, TREE_CODE, and TREE_OPERAND.

| tree rli_size_unit_so_far | ( | ) |

Returns the size in bytes allocated so far.

References TYPE_ALIGN, and TYPE_USER_ALIGN.

Given a SIZE expression that is self-referential, return an equivalent expression to serve as the actual size expression for a type.

Do not factor out simple operations.

Collect the list of self-references in the expression.

Obtain a private copy of the expression.

Build the parameter and argument lists in parallel; also substitute the former for the latter in the expression.

We shouldn't have true variables here.

This is the pattern built in ada/make_aligning_type.

Default case: the component reference.

Append 'void' to indicate that the number of parameters is fixed.

The 3 lists have been created in reverse order.

Build the function type.

Build the function declaration.

The function has been created by the compiler and we don't want to emit debug info for it.

It is supposed to be "const" and never throw.

We want it to be inlined when this is deemed profitable, as well as discarded if every call has been integrated.

It is made up of a unique return statement.

Put it onto the list of size functions.

Replace the original expression with a call to the size function.

TYPE is an integral type, i.e., an INTEGRAL_TYPE, ENUMERAL_TYPE or BOOLEAN_TYPE. Set TYPE_MIN_VALUE and TYPE_MAX_VALUE for TYPE, based on the PRECISION and whether or not the TYPE IS_UNSIGNED. PRECISION need not correspond to a width supported natively by the hardware; for example, on a machine with 8-bit, 16-bit, and 32-bit register modes, PRECISION might be 7, 23, or

For bitfields with zero width we end up creating integer types with zero precision. Don't assign any minimum/maximum values to those types, they don't have any valid value.
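The bounds implied by PRECISION and signedness can be sketched with ordinary integers. This is only an illustration under stated assumptions: `struct int_range` and `integral_range` are hypothetical names, the sketch limits PRECISION to 63 bits so that both bounds fit in `int64_t`, and two's complement is assumed for the signed case.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical result type: VALID is false when the type has no values. */
struct int_range {
  bool valid;
  int64_t min;
  int64_t max;
};

struct int_range integral_range(unsigned int precision, bool is_unsigned)
{
  struct int_range r = { false, 0, 0 };

  assert(precision <= 63); /* limit of this sketch, not of GCC */

  /* Zero precision (zero-width bitfield): no minimum or maximum.  */
  if (precision == 0)
    return r;

  r.valid = true;
  if (is_unsigned) {
    r.min = 0;
    r.max = (int64_t)((UINT64_C(1) << precision) - 1);
  } else {
    r.max = (int64_t)((UINT64_C(1) << (precision - 1)) - 1);
    r.min = -r.max - 1;
  }
  return r;
}
```

A 7-bit unsigned type therefore ranges over 0 to 127, and a 7-bit signed type over -64 to 63, regardless of whether the hardware has a 7-bit mode.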

References bit_field_mode_iterator::next_mode().

Referenced by make_unsigned_type().

| enum machine_mode smallest_mode_for_size | ( | ) |

Similar, but never return BLKmode; return the narrowest mode that contains at least the requested number of value bits.

Get the first mode which has at least this size, in the specified class.
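The "first mode which has at least this size" search can be pictured as a scan over modes ordered from narrowest to widest. The sketch below assumes a hypothetical four-entry table (`int_modes`) and a hypothetical helper `smallest_int_mode_for_size`; real targets define their own set of modes, and the sketch reports "no mode is wide enough" by returning NULL, which is a choice made here for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical mode descriptor and table, narrowest mode first. */
struct mode_desc {
  const char *name;
  unsigned int precision; /* value bits */
};

static const struct mode_desc int_modes[] = {
  { "QI", 8 }, { "HI", 16 }, { "SI", 32 }, { "DI", 64 },
};

/* Return the narrowest mode with at least SIZE value bits, or NULL
   if the table has none.  */
const struct mode_desc *smallest_int_mode_for_size(unsigned int size)
{
  size_t i;

  for (i = 0; i < sizeof int_modes / sizeof int_modes[0]; i++)
    if (int_modes[i].precision >= size)
      return &int_modes[i];
  return NULL;
}
```

With this table, a request for 23 bits yields the 32-bit entry, since the 16-bit one is too narrow.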

Return a new underlying object for a bitfield started with FIELD.

Force the representative to begin at a BITS_PER_UNIT aligned boundary - C++ may use tail-padding of a base object to continue packing bits so the bitfield region does not start at bit zero (see g++.dg/abi/bitfield5.C for example). Unallocated bits may happen for other reasons as well, for example Ada which allows explicit bit-granular structure layout.
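Forcing the start onto a BITS_PER_UNIT boundary amounts to rounding the field's bit offset down to a multiple of the unit size. A minimal sketch with plain integers follows; `representative_start` is a hypothetical name and `BITS_PER_UNIT` is assumed to be 8.

```c
#include <assert.h>

#define BITS_PER_UNIT 8 /* assumption: 8-bit bytes */

/* Round a bit offset down to the enclosing byte boundary by clearing
   the low bits.  This relies on BITS_PER_UNIT being a power of two.  */
unsigned long representative_start(unsigned long field_bit_offset)
{
  return field_bit_offset & ~(unsigned long)(BITS_PER_UNIT - 1);
}
```

A bitfield that begins at bit 13, for instance in the tail padding of a base object, gets a representative that begins at bit 8.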

Referenced by finish_bitfield_representative().

| record_layout_info start_record_layout | ( | ) |

Begin laying out type T, which may be a RECORD_TYPE, UNION_TYPE, or QUAL_UNION_TYPE. Return a pointer to a struct record_layout_info which is to be passed to all other layout functions for this record. It is the responsibility of the caller to call `free' for the storage returned. Note that garbage collection is not permitted until we finish laying out the record.

If the type has a minimum specified alignment (via an attribute declaration, for example) use it – otherwise, start with a one-byte alignment.

| unsigned int update_alignment_for_field | ( | record_layout_info rli, tree field, unsigned int known_align | ) |

FIELD is about to be added to RLI->T. The alignment (in bits) of the next available location within the record is given by KNOWN_ALIGN. Update the variable alignment fields in RLI, and return the alignment to give the FIELD.

The alignment required for FIELD.

The type of this field.

True if the field was explicitly aligned by the user.

Do not attempt to align an ERROR_MARK node.

Lay out the field so we know what alignment it needs.

Record must have at least as much alignment as any field. Otherwise, the alignment of the field within the record is meaningless.
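The starting alignment and the "at least as much alignment as any field" rule can be sketched as a running maximum. The names `struct layout_state`, `start_layout`, and `note_field_alignment` are hypothetical, alignments are in bits, and `BITS_PER_UNIT` is assumed to be 8; bitfield and packing special cases are left out.

```c
#include <assert.h>

#define BITS_PER_UNIT 8 /* assumption: 8-bit bytes */

/* Hypothetical, stripped-down layout state: alignment in bits. */
struct layout_state {
  unsigned int record_align;
};

/* Start from the type's specified alignment, or one byte if it has
   none (modelled here as SPECIFIED_ALIGN of 0).  */
void start_layout(struct layout_state *s, unsigned int specified_align)
{
  s->record_align = specified_align > BITS_PER_UNIT ? specified_align
                                                    : BITS_PER_UNIT;
}

/* Raise the record alignment to cover FIELD_ALIGN and return the
   alignment the field will get.  */
unsigned int note_field_alignment(struct layout_state *s,
                                  unsigned int field_align)
{
  if (field_align > s->record_align)
    s->record_align = field_align;
  return field_align;
}
```

Adding a 32-bit-aligned field followed by a 16-bit-aligned one leaves the record at 32-bit alignment, because the record alignment never decreases.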

Here, the alignment of the underlying type of a bitfield can affect the alignment of a record; even a zero-sized field can do this. The alignment should be to the alignment of the type, except that for zero-size bitfields this only applies if there was an immediately prior, nonzero-size bitfield. (That's the way it is, experimentally.)

| tree variable_size | ( | ) |

Given a size SIZE that may not be a constant, return a SAVE_EXPR to serve as the actual size-expression for a type or decl.

Obviously, a size that is already constant is returned as is.

If the size is self-referential, we can't make a SAVE_EXPR (see save_expr for the rationale). But we can do something else.

If we are in the global binding level, we can't make a SAVE_EXPR since it may end up being shared across functions, so it is up to the front-end to deal with this case.

| enum machine_mode vector_type_mode | ( | ) |

Vector types need to re-check the target flags each time we report the machine mode. We need to do this because attribute target can change the result of vector_mode_supported_p and have_regs_of_mode on a per-function basis. Thus the TYPE_MODE of a VECTOR_TYPE can change on a per-function basis. ??? Possibly a better solution is to run through all the types referenced by a function and re-compute the TYPE_MODE once, rather than make the TYPE_MODE macro call a function.

For integers, try mapping it to a same-sized scalar mode.

Variable Documentation

| unsigned int maximum_field_alignment = TARGET_DEFAULT_PACK_STRUCT * BITS_PER_UNIT |

If nonzero, this is an upper limit on alignment of structure fields. The value is measured in bits.
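The effect of such a limit can be shown with a small helper. `cap_field_alignment` is a hypothetical name used only for this sketch; both arguments are in bits, and a limit of zero means "no limit", as described above.

```c
#include <assert.h>

/* Clamp DESIRED_ALIGN to MAX_FIELD_ALIGN when a limit is in force.  */
unsigned int cap_field_alignment(unsigned int desired_align,
                                 unsigned int max_field_align)
{
  if (max_field_align != 0 && desired_align > max_field_align)
    return max_field_align;
  return desired_align;
}
```

With a limit of 32 bits, a field that would normally want 64-bit alignment is placed on a 32-bit boundary, while a field wanting 16-bit alignment is unaffected.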

Referenced by debug_rli().

Nonzero if all REFERENCE_TYPEs are internal and hence should be allocated in the address spaces' address_mode, not pointer_mode. Set only by internal_reference_types, which is called only by a front end.

An array of functions used for self-referential size computation.

| tree sizetype_tab[(int) stk_type_kind_last] |

C-compiler utilities for types and variables storage layout.

Copyright (C) 1987-2013 Free Software Foundation, Inc.

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not, see http://www.gnu.org/licenses/.

Data type for the expressions representing sizes of data types. It is the first integer type laid out.