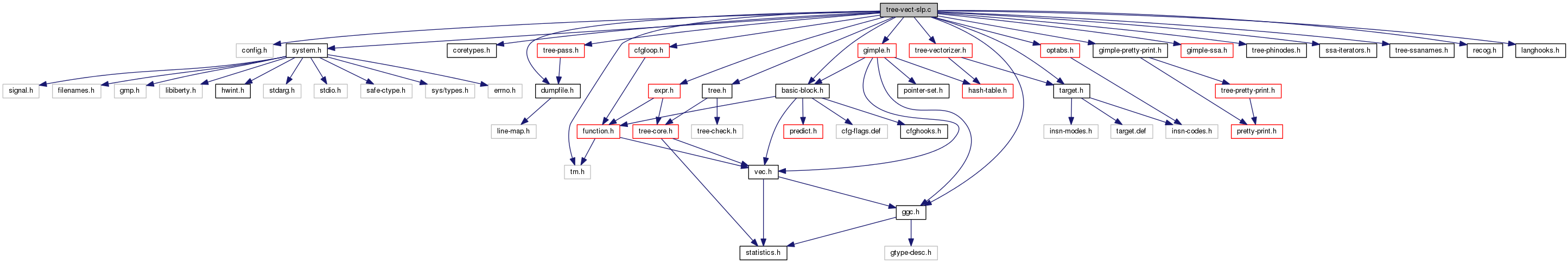

#include "config.h"

#include "system.h"

#include "coretypes.h"

#include "dumpfile.h"

#include "tm.h"

#include "ggc.h"

#include "tree.h"

#include "target.h"

#include "basic-block.h"

#include "gimple-pretty-print.h"

#include "gimple.h"

#include "gimple-ssa.h"

#include "tree-phinodes.h"

#include "ssa-iterators.h"

#include "tree-ssanames.h"

#include "tree-pass.h"

#include "cfgloop.h"

#include "expr.h"

#include "recog.h"

#include "optabs.h"

#include "tree-vectorizer.h"

#include "langhooks.h"

| Functions | |
| --- | --- |
| LOC | find_bb_location () |
| static void | vect_free_slp_tree () |
| void | vect_free_slp_instance () |
| static slp_tree | vect_create_new_slp_node () |
| static vec< slp_oprnd_info > | vect_create_oprnd_info () |
| static void | vect_free_oprnd_info () |
| static int | vect_get_place_in_interleaving_chain () |
| static bool | vect_get_and_check_slp_defs (loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, gimple stmt, bool first, vec< slp_oprnd_info > *oprnds_info) |
| static bool | vect_build_slp_tree_1 (loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, vec< gimple > stmts, unsigned int group_size, unsigned nops, unsigned int *max_nunits, unsigned int vectorization_factor, bool *matches) |
| static bool | vect_build_slp_tree (loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, slp_tree *node, unsigned int group_size, unsigned int *max_nunits, vec< slp_tree > *loads, unsigned int vectorization_factor, bool *matches, unsigned *npermutes) |
| static void | vect_print_slp_tree () |
| static void | vect_mark_slp_stmts () |
| static void | vect_mark_slp_stmts_relevant () |
| static void | vect_slp_rearrange_stmts (slp_tree node, unsigned int group_size, vec< unsigned > permutation) |
| static bool | vect_supported_load_permutation_p () |
| static gimple | vect_find_first_load_in_slp_instance () |
| static gimple | vect_find_last_store_in_slp_instance () |
| static void | vect_analyze_slp_cost_1 (loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, slp_instance instance, slp_tree node, stmt_vector_for_cost *prologue_cost_vec, unsigned ncopies_for_cost) |
| static void | vect_analyze_slp_cost (loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, slp_instance instance, unsigned nunits) |
| static bool | vect_analyze_slp_instance (loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, gimple stmt) |
| bool | vect_analyze_slp () |
| bool | vect_make_slp_decision () |
| static void | vect_detect_hybrid_slp_stmts () |
| void | vect_detect_hybrid_slp () |
| static bb_vec_info | new_bb_vec_info () |
| static void | destroy_bb_vec_info () |
| static bool | vect_slp_analyze_node_operations () |
| static bool | vect_slp_analyze_operations () |
| static unsigned | vect_bb_slp_scalar_cost (basic_block bb, slp_tree node, vec< bool, va_heap > *life) |
| static bool | vect_bb_vectorization_profitable_p () |
| static bb_vec_info | vect_slp_analyze_bb_1 () |
| bb_vec_info | vect_slp_analyze_bb () |
| void | vect_update_slp_costs_according_to_vf () |
| static void | vect_get_constant_vectors (tree op, slp_tree slp_node, vec< tree > *vec_oprnds, unsigned int op_num, unsigned int number_of_vectors, int reduc_index) |
| static void | vect_get_slp_vect_defs () |
| void | vect_get_slp_defs (vec< tree > ops, slp_tree slp_node, vec< vec< tree > > *vec_oprnds, int reduc_index) |
| static void | vect_create_mask_and_perm (gimple stmt, gimple next_scalar_stmt, tree mask, int first_vec_indx, int second_vec_indx, gimple_stmt_iterator *gsi, slp_tree node, tree vectype, vec< tree > dr_chain, int ncopies, int vect_stmts_counter) |
| static bool | vect_get_mask_element (gimple stmt, int first_mask_element, int m, int mask_nunits, bool only_one_vec, int index, unsigned char *mask, int *current_mask_element, bool *need_next_vector, int *number_of_mask_fixes, bool *mask_fixed, bool *needs_first_vector) |
| bool | vect_transform_slp_perm_load (slp_tree node, vec< tree > dr_chain, gimple_stmt_iterator *gsi, int vf, slp_instance slp_node_instance, bool analyze_only) |
| static bool | vect_schedule_slp_instance (slp_tree node, slp_instance instance, unsigned int vectorization_factor) |
| static void | vect_remove_slp_scalar_calls () |
| bool | vect_schedule_slp () |
| void | vect_slp_transform_bb () |

Function Documentation

| static void destroy_bb_vec_info | ( | ) |

Free BB_VINFO struct, as well as all the stmt_vec_info structs of all the stmts in the basic block.

Free stmt_vec_info.

References BREAK_FROM_IMM_USE_STMT, DEF_FROM_PTR, DR_IS_READ, FOR_EACH_IMM_USE_STMT, FOR_EACH_SSA_DEF_OPERAND, scalar_load, scalar_stmt, scalar_store, SSA_OP_DEF, stmt_cost(), STMT_VINFO_DATA_REF, STMT_VINFO_VECTORIZABLE, vect_get_stmt_cost(), and vinfo_for_stmt().

| LOC find_bb_location | ( | ) |

SLP - Basic Block Vectorization. Copyright (C) 2007-2013 Free Software Foundation, Inc. Contributed by Dorit Naishlos (dorit@il.ibm.com) and Ira Rosen (irar@il.ibm.com).

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not, see http://www.gnu.org/licenses/.

Extract the location of the basic block in the source code. Return the basic block location on success and NULL otherwise.

| static bb_vec_info new_bb_vec_info | ( | ) |

Create and initialize a new bb_vec_info struct for BB, as well as stmt_vec_info structs for all the stmts in it.

References vect_free_slp_instance().

| bool vect_analyze_slp | ( | ) |

Check if there are stmts in the loop that can be vectorized using SLP. Build SLP trees of packed scalar stmts if SLP is possible.

Find SLP sequences starting from groups of grouped stores.

Find SLP sequences starting from reduction chains.

Don't try to vectorize SLP reductions if a reduction chain was detected.

Find SLP sequences starting from groups of reductions.

References BB_VINFO_BB, flow_bb_inside_loop_p(), FOR_EACH_IMM_USE_STMT, FOR_EACH_VEC_ELT, gimple_op(), hybrid, LOOP_VINFO_LOOP, NULL, PURE_SLP_STMT, SLP_TREE_CHILDREN, SLP_TREE_SCALAR_STMTS, STMT_SLP_TYPE, STMT_VINFO_BB_VINFO, STMT_VINFO_DEF_TYPE, STMT_VINFO_LOOP_VINFO, STMT_VINFO_RELEVANT, TREE_CODE, vect_detect_hybrid_slp_stmts(), vect_mark_slp_stmts(), vect_reduction_def, VECTORIZABLE_CYCLE_DEF, and vinfo_for_stmt().

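| static void vect_analyze_slp_cost | ( | loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, slp_instance instance, unsigned nunits | ) |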

Compute the cost for the SLP instance INSTANCE.

Calculate the number of vector stmts to create based on the unrolling factor (the number of vectors is 1 if NUNITS >= GROUP_SIZE, and GROUP_SIZE / NUNITS otherwise).
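The rule above can be written as a one-line helper. This is only a sketch: the function name `ncopies_for_cost` is borrowed from the parameter of vect_analyze_slp_cost_1 for illustration and is not a function in the file.

```cpp
#include <cassert>

// Hypothetical helper: one vector stmt when a single vector holds the whole
// group, GROUP_SIZE / NUNITS vector stmts otherwise.
unsigned ncopies_for_cost(unsigned nunits, unsigned group_size) {
  assert(nunits > 0);
  return nunits >= group_size ? 1u : group_size / nunits;
}
```

For example, with NUNITS = 4 a group of two stmts needs one vector stmt, while a group of eight needs two.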

Record the prologue costs, which were delayed until we were sure that SLP was successful. Unlike the body costs, we know the final values now regardless of the loop vectorization factor.

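| static void vect_analyze_slp_cost_1 | ( | loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, slp_instance instance, slp_tree node, stmt_vector_for_cost *prologue_cost_vec, unsigned ncopies_for_cost | ) |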

Compute the cost for the SLP node NODE in the SLP instance INSTANCE.

Recurse down the SLP tree.

Look at the first scalar stmt to determine the cost.

If the load is permuted record the cost for the permutation.

??? Loads from multiple chains are let through here only for a single special case involving complex numbers where in the end no permutation is necessary.

Scan operands and account for prologue cost of constants/externals. ??? This over-estimates cost for multiple uses and should be re-engineered.

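| static bool vect_analyze_slp_instance | ( | loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, gimple stmt | ) |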

Analyze an SLP instance starting from a group of grouped stores. Call vect_build_slp_tree to build a tree of packed stmts if possible. Return FALSE if it's impossible to SLP any stmt in the loop.

Calculate the unrolling factor.

Create a node (a root of the SLP tree) for the packed grouped stores.

Collect the stores and store them in SLP_TREE_SCALAR_STMTS.

Collect reduction statements.

Build the tree for the SLP instance.

Calculate the unrolling factor based on the smallest type.

Create a new SLP instance.

Compute the load permutation.

Compute the costs of this SLP instance.

Failed to SLP.

Free the allocated memory.

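| static unsigned vect_bb_slp_scalar_cost | ( | basic_block bb, slp_tree node, vec< bool, va_heap > *life | ) |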

Compute the scalar cost of the SLP node NODE and its children and return it. Do not account defs that are marked in LIFE and update LIFE according to uses of NODE.

If there is a non-vectorized use of the defs then the scalar stmt is kept live in which case we do not account it or any required defs in the SLP children in the scalar cost. This way we make the vectorization more costly when compared to the scalar cost.

| static bool vect_bb_vectorization_profitable_p | ( | ) |

Check if vectorization of the basic block is profitable.

Calculate vector costs.

Calculate scalar cost.

Complete the target-specific cost calculation.

Vectorization is profitable if its cost is less than the cost of the scalar version.
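A minimal sketch of the comparison described above. The `BBCosts` struct and the split of the vector cost into prologue, body and epilogue parts are assumptions made for illustration; the page itself only states that the vector cost must be lower than the scalar cost.

```cpp
#include <cassert>

// Hypothetical cost summary for one basic block.
struct BBCosts {
  unsigned prologue;  // assumed: vector code emitted before the body
  unsigned inside;    // assumed: vector stmts replacing the scalar stmts
  unsigned epilogue;  // assumed: vector code emitted after the body
  unsigned scalar;    // cost of the scalar stmts being replaced
};

// Profitable only if the total vector cost is strictly below the scalar cost.
bool bb_vectorization_profitable(const BBCosts& c) {
  unsigned vec_cost = c.prologue + c.inside + c.epilogue;
  return vec_cost < c.scalar;
}
```

Note that equal costs are treated as not profitable, matching the "less than" wording.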

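| static bool vect_build_slp_tree | ( | loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, slp_tree *node, unsigned int group_size, unsigned int *max_nunits, vec< slp_tree > *loads, unsigned int vectorization_factor, bool *matches, unsigned *npermutes | ) |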

Recursively build an SLP tree starting from NODE. Fail (and return a value not equal to zero) if def-stmts are not isomorphic, require data permutation or are of unsupported types of operation. Otherwise, return 0. The value returned is the depth in the SLP tree where a mismatch was found.

If the SLP node is a load, terminate the recursion.

Get at the operands, verifying they are compatible.

Create SLP_TREE nodes for the definition node/s.

If the SLP build for operand zero failed and operands zero and one can be commuted, try that for the scalar stmts that failed the match.

A first scalar stmt mismatch signals a fatal mismatch.

??? For COND_EXPRs we can swap the comparison operands as well as the arms under some constraints.

Do so only if the number of unsuccessful permutes was no more than a cut-off, as re-trying the recursive match on possibly each level of the tree would expose exponential behavior.

Roll back.

Swap mismatched definition stmts.

And try again ...

References vect_free_oprnd_info().

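| static bool vect_build_slp_tree_1 | ( | loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, vec< gimple > stmts, unsigned int group_size, unsigned nops, unsigned int *max_nunits, unsigned int vectorization_factor, bool *matches | ) |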

Verify if the scalar stmts STMTS are isomorphic, require data permutation or are of unsupported types of operation. Return true if they are, otherwise return false and indicate in *MATCHES which stmts are not isomorphic to the first one. If MATCHES[0] is false then this indicates the comparison could not be carried out or the stmts will never be vectorized by SLP.
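The *MATCHES convention can be illustrated with a much-reduced model. This is a sketch under stated assumptions: `ScalarStmt` and `stmts_isomorphic` are hypothetical names, and "isomorphic" is reduced here to "same opcode as the first stmt", whereas the real check also covers types, shifts, calls and memory accesses.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a scalar stmt.
struct ScalarStmt {
  std::string opcode;
  bool vectorizable;
};

// Returns true when every stmt matches the first one. On failure, matches[i]
// tells which stmts did match; matches[0] == false marks a fatal mismatch.
bool stmts_isomorphic(const std::vector<ScalarStmt>& stmts,
                      std::vector<bool>& matches) {
  matches.assign(stmts.size(), false);
  if (stmts.empty())
    return false;
  for (const ScalarStmt& s : stmts)
    if (!s.vectorizable)
      return false;  // fatal mismatch: matches[0] stays false
  bool all = true;
  for (std::size_t i = 0; i < stmts.size(); ++i) {
    matches[i] = stmts[i].opcode == stmts[0].opcode;
    all = all && matches[i];
  }
  return all;
}
```

A caller can use a non-fatal result (matches[0] true, some other entry false) to decide which stmts to retry, for instance after swapping commutative operands.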

For every stmt in NODE find its def stmt/s.

Fail to vectorize statements marked as unvectorizable.

Fatal mismatch.

Fatal mismatch.

Fatal mismatch.

Fatal mismatch.

In case of multiple types we need to detect the smallest type.

Fatal mismatch.

Check the operation.

Shift arguments should be equal in all the packed stmts for a vector shift with scalar shift operand.

First see if we have a vector/vector shift.

No vector/vector shift, try for a vector/scalar shift.

Fatal mismatch.

Fatal mismatch.

Mismatch.

Mismatch.

Mismatch.

Grouped store or load.

Store.

Load.

FORNOW: Check that there is no gap between the loads and no gap between the groups when we need to load multiple groups at once. ??? We should enhance this to only disallow gaps inside vectors.

Fatal mismatch.

Check that the size of interleaved loads group is not greater than the SLP group size.

Fatal mismatch.

Check that there are no loads from different interleaving chains in the same node.

Mismatch.

In some cases a group of loads is just the same load repeated N times. Only analyze its cost once.

Fatal mismatch.

Not grouped load.

FORNOW: Not grouped loads are not supported.

Fatal mismatch.

Not memory operation.

Fatal mismatch.

Mismatch.

References COMPARISON_CLASS_P, dr_unaligned_unsupported, dump_enabled_p(), dump_generic_expr(), dump_gimple_stmt(), dump_printf(), dump_printf_loc(), get_vectype_for_scalar_type(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs_code(), gimple_call_chain(), gimple_call_fn(), gimple_call_fntype(), gimple_call_internal_p(), gimple_call_noreturn_p(), gimple_call_nothrow_p(), gimple_call_num_args(), gimple_call_tail_p(), gimple_get_lhs(), GROUP_FIRST_ELEMENT, GROUP_GAP, GROUP_SIZE, insn_data, is_gimple_assign(), is_gimple_call(), least_common_multiple(), MSG_MISSED_OPTIMIZATION, MSG_NOTE, NULL_TREE, insn_data_d::operand, operand_equal_p(), optab_for_tree_code(), optab_handler(), optab_scalar, optab_vector, REFERENCE_CLASS_P, STMT_VINFO_DATA_REF, STMT_VINFO_GROUPED_ACCESS, STMT_VINFO_VECTORIZABLE, tcc_binary, tcc_reference, tcc_unary, TDF_SLIM, TREE_CODE, TREE_CODE_CLASS, TYPE_MODE, TYPE_VECTOR_SUBPARTS, vect_get_smallest_scalar_type(), vect_location, vect_supportable_dr_alignment(), VECTOR_MODE_P, and vinfo_for_stmt().

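| static inline void vect_create_mask_and_perm | ( | gimple stmt, gimple next_scalar_stmt, tree mask, int first_vec_indx, int second_vec_indx, gimple_stmt_iterator *gsi, slp_tree node, tree vectype, vec< tree > dr_chain, int ncopies, int vect_stmts_counter | ) |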

Create NCOPIES permutation statements using the mask MASK_BYTES (by building a vector of type MASK_TYPE from it) and two input vectors placed in DR_CHAIN at FIRST_VEC_INDX and SECOND_VEC_INDX for the first copy and shifting by STRIDE elements of DR_CHAIN for every copy. (STRIDE is the number of vectorized stmts for NODE divided by the number of copies). VECT_STMTS_COUNTER specifies the index in the vectorized stmts of NODE, where the created stmts must be inserted.

Initialize the vect stmts of NODE to properly insert the generated stmts later.

Generate the permute statement.

Store the vector statement in NODE.

Mark the scalar stmt as vectorized.

Referenced by vect_get_mask_element().

| static slp_tree vect_create_new_slp_node | ( | ) |

Create an SLP node for SCALAR_STMTS.

| static vec< slp_oprnd_info > vect_create_oprnd_info | ( | ) |

Allocate operands info for NOPS operands, and GROUP_SIZE def-stmts for each operand.

References _slp_oprnd_info::def_stmts, _slp_oprnd_info::first_dt, _slp_oprnd_info::first_op_type, _slp_oprnd_info::first_pattern, NULL_TREE, and vect_uninitialized_def.

| void vect_detect_hybrid_slp | ( | ) |

Find stmts that must be both vectorized and SLPed.

| static void vect_detect_hybrid_slp_stmts | ( | ) |

Find stmts that must be both vectorized and SLPed (since they feed stmts that can't be SLPed) in the tree rooted at NODE. Mark such stmts as HYBRID.

Referenced by vect_analyze_slp().

| static gimple vect_find_first_load_in_slp_instance | ( | ) |

Find the first load in the loop that belongs to INSTANCE. When loads are in several SLP nodes, there can be a case in which the first load does not appear in the first SLP node to be transformed, causing incorrect order of statements. Since we generate all the loads together, they must be inserted before the first load of the SLP instance and not before the first load of the first node of the instance.

| static gimple vect_find_last_store_in_slp_instance | ( | ) |

Find the last store in SLP INSTANCE.

| static void vect_free_oprnd_info | ( | ) |

Free operands info.

Referenced by vect_build_slp_tree().

| void vect_free_slp_instance | ( | ) |

Free the memory allocated for the SLP instance.

Referenced by new_bb_vec_info().

| static void vect_free_slp_tree | ( | ) |

Recursively free the memory allocated for the SLP tree rooted at NODE.

References SLP_INSTANCE_LOADS, and SLP_INSTANCE_TREE.

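| static bool vect_get_and_check_slp_defs | ( | loop_vec_info loop_vinfo, bb_vec_info bb_vinfo, gimple stmt, bool first, vec< slp_oprnd_info > *oprnds_info | ) |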

Get the defs for the rhs of STMT (collect them in OPRNDS_INFO), check that they are of a valid type and that they match the defs of the first stmt of the SLP group (stored in OPRNDS_INFO).

Check if DEF_STMT is a part of a pattern in LOOP and get the def stmt from the pattern. Check that all the stmts of the node are in the pattern.

Not first stmt of the group, check that the def-stmt/s match the def-stmt/s of the first stmt. Allow different definition types for reduction chains: the first stmt must be a vect_reduction_def (a phi node), and the rest vect_internal_def.

Check the types of the definitions.

FORNOW: Not supported.

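| static void vect_get_constant_vectors | ( | tree op, slp_tree slp_node, vec< tree > *vec_oprnds, unsigned int op_num, unsigned int number_of_vectors, int reduc_index | ) |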

For constant and loop invariant defs of SLP_NODE this function returns (vector) defs (VEC_OPRNDS) that will be used in the vectorized stmts. OP_NUM determines if we gather defs for operand 0 or operand 1 of the RHS of scalar stmts. NUMBER_OF_VECTORS is the number of vector defs to create. REDUC_INDEX is the index of the reduction operand in the statements, unless it is -1.

For additional copies (see the explanation of NUMBER_OF_COPIES below) we need either neutral operands or the original operands. See get_initial_def_for_reduction() for details.

NUMBER_OF_COPIES is the number of times we need to use the same values in created vectors. It is greater than 1 if unrolling is performed.

For example, we have two scalar operands, s1 and s2 (e.g., group of strided accesses of size two), while NUNITS is four (i.e., four scalars of this type can be packed in a vector). The output vector will contain two copies of each scalar operand: {s1, s2, s1, s2}. (NUMBER_OF_COPIES will be 2).

If GROUP_SIZE > NUNITS, the scalars will be split into several vectors containing the operands.

For example, NUNITS is four as before, and the group size is 8 (s1, s2, ..., s8). We will create two vectors {s1, s2, s3, s4} and {s5, s6, s7, s8}.
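The two examples above can be reproduced with a small model of the packing step. This is a sketch, not the file's implementation: `pack_scalars` is a hypothetical name, plain `int` values stand in for operands, and it assumes GROUP_SIZE divides NUNITS or NUNITS divides GROUP_SIZE. It also builds the vectors in forward order rather than in reverse.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Pack a group of scalar operands into vectors of `nunits` elements.
// A short group is replicated to fill one vector; a long group is split.
std::vector<std::vector<int>> pack_scalars(const std::vector<int>& scalars,
                                           std::size_t nunits) {
  std::vector<std::vector<int>> vectors;
  if (scalars.empty() || nunits == 0)
    return vectors;
  const std::size_t group_size = scalars.size();
  const std::size_t nvectors =
      group_size > nunits ? (group_size + nunits - 1) / nunits : 1;
  for (std::size_t v = 0; v < nvectors; ++v) {
    std::vector<int> vec;
    for (std::size_t e = 0; e < nunits; ++e)
      vec.push_back(scalars[(v * nunits + e) % group_size]);  // wrap = copy
    vectors.push_back(vec);
  }
  return vectors;
}
```

With two operands and NUNITS = 4 this yields a single vector holding two copies of each operand; with eight operands it yields two vectors of four.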

Unlike the other binary operators, shifts/rotates have the shift count being int, instead of the same type as the lhs, so make sure the scalar is the right type if we are dealing with vectors of long long/long/short/char.

Get the def before the loop. In reduction chain we have only one initial value.

Create 'vect_ = {op0,op1,...,opn}'.

Since the vectors are created in the reverse order, we should invert them.

In case that VF is greater than the unrolling factor needed for the SLP group of stmts, NUMBER_OF_VECTORS to be created is greater than NUMBER_OF_SCALARS/NUNITS or NUNITS/NUMBER_OF_SCALARS, and hence we have to replicate the vectors.

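| static bool vect_get_mask_element | ( | gimple stmt, int first_mask_element, int m, int mask_nunits, bool only_one_vec, int index, unsigned char *mask, int *current_mask_element, bool *need_next_vector, int *number_of_mask_fixes, bool *mask_fixed, bool *needs_first_vector | ) |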

Given FIRST_MASK_ELEMENT - the mask element in element representation, return in CURRENT_MASK_ELEMENT its equivalent in target specific representation. Check that the mask is valid and return FALSE if not. Return TRUE in NEED_NEXT_VECTOR if the permutation requires to move to the next vector, i.e., the current first vector is not needed.

Convert to target specific representation.

Adjust the value in case it's a mask for second and third vectors.

We have only one input vector to permute but the mask accesses values in the next vector as well.

The mask requires the next vector.

We either need the first vector too or have already moved to the next vector. In both cases, this permutation needs three vectors.

We move to the next vector, dropping the first one and working with the second and the third - we need to adjust the values of the mask accordingly.

This was the last element of this mask. Start a new one.

References build_int_cst(), build_vector, can_vec_perm_p(), dump_enabled_p(), dump_printf(), dump_printf_loc(), MSG_MISSED_OPTIMIZATION, SLP_TREE_LOAD_PERMUTATION, SLP_TREE_SCALAR_STMTS, vect_create_mask_and_perm(), and vect_location.

| static int vect_get_place_in_interleaving_chain | ( | ) |

Find the place of the data-ref in STMT in the interleaving chain that starts from FIRST_STMT. Return -1 if the data-ref is not a part of the chain.
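A minimal sketch of such a chain walk. `ChainStmt` is a hypothetical type whose `next` pointer plays the role of GROUP_NEXT_ELEMENT; the real function works on gimple stmts and their stmt_vec_info.

```cpp
#include <cassert>

// Hypothetical node of an interleaving chain.
struct ChainStmt {
  const ChainStmt* next;  // stands in for GROUP_NEXT_ELEMENT
};

// Return the zero-based position of `stmt` in the chain starting at `first`,
// or -1 if `stmt` is not part of that chain.
int place_in_chain(const ChainStmt* stmt, const ChainStmt* first) {
  int pos = 0;
  for (const ChainStmt* s = first; s != nullptr; s = s->next, ++pos)
    if (s == stmt)
      return pos;
  return -1;
}
```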

References GROUP_NEXT_ELEMENT, and vinfo_for_stmt().

| void vect_get_slp_defs | ( | vec< tree > ops, slp_tree slp_node, vec< vec< tree > > *vec_oprnds, int reduc_index | ) |

Get vectorized definitions for SLP_NODE. If the scalar definitions are loop invariants or constants, collect them and call vect_get_constant_vectors() to create vector stmts. Otherwise, the def-stmts must be already vectorized and the vectorized stmts must be stored in the corresponding child of SLP_NODE, and we call vect_get_slp_vect_defs () to retrieve them.

For each operand we check if it has vectorized definitions in a child node or we need to create them (for invariants and constants). We check if the LHS of the first stmt of the next child matches OPRND. If it does, we found the correct child. Otherwise, we call vect_get_constant_vectors () and do not advance CHILD_INDEX, in order to check this child node for the next operand.

We have to check both pattern and original def, if available.

The number of vector defs is determined by the number of vector statements in the node from which we get those statements.

Number of vector stmts was calculated according to LHS in vect_schedule_slp_instance (), fix it by replacing LHS with RHS, if necessary. See vect_get_smallest_scalar_type () for details.

Allocate memory for vectorized defs.

For reduction defs we call vect_get_constant_vectors (), since we are looking for initial loop invariant values.

The defs are already vectorized.

Build vectors from scalar defs.

For reductions, we only need initial values.

References dump_enabled_p(), dump_gimple_stmt(), dump_printf(), dump_printf_loc(), MSG_MISSED_OPTIMIZATION, TDF_SLIM, and vect_location.

| static void vect_get_slp_vect_defs | ( | ) |

Get vectorized definitions from SLP_NODE that contains corresponding vectorized def-stmts.

| bool vect_make_slp_decision | ( | ) |

For each possible SLP instance decide whether to SLP it and calculate overall unrolling factor needed to SLP the loop. Return TRUE if decided to SLP at least one instance.

FORNOW: SLP if you can.

Mark all the stmts that belong to INSTANCE as PURE_SLP stmts. Later we call vect_detect_hybrid_slp () to find stmts that need hybrid SLP and loop-based vectorization. Such stmts will be marked as HYBRID.

References BB_VINFO_BB, BB_VINFO_GROUPED_STORES, BB_VINFO_SLP_INSTANCES, BB_VINFO_TARGET_COST_DATA, gimple_set_uid(), gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_stmt(), init_cost, new_stmt_vec_info(), NULL, and set_vinfo_for_stmt().

| static void vect_mark_slp_stmts | ( | ) |

Mark the tree rooted at NODE with MARK (PURE_SLP or HYBRID). If MARK is HYBRID, it refers to a specific stmt in NODE (the stmt at index J). Otherwise, MARK is PURE_SLP and J is -1, which indicates that all the stmts in NODE are to be marked.
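The marking rule can be sketched as a short recursive walk. The types `SlpType`, `Stmt` and `SlpNode` below are simplified stand-ins invented for this example, not the structures used in the file.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class SlpType { LoopVect, PureSlp, Hybrid };

// Hypothetical stand-ins for a scalar stmt and an SLP tree node.
struct Stmt {
  SlpType type = SlpType::LoopVect;
};
struct SlpNode {
  std::vector<Stmt*> stmts;
  std::vector<SlpNode*> children;
};

// Mark every stmt in the tree (j < 0) or only the stmt at index j of each
// node (j >= 0) with `mark`, then recurse into the children.
void mark_slp_stmts(SlpNode* node, SlpType mark, int j) {
  if (node == nullptr)
    return;
  for (std::size_t i = 0; i < node->stmts.size(); ++i)
    if (j < 0 || i == static_cast<std::size_t>(j))
      node->stmts[i]->type = mark;
  for (SlpNode* child : node->children)
    mark_slp_stmts(child, mark, j);
}
```

Calling it with PureSlp and j = -1 marks the whole tree; a later call with Hybrid and a specific j overrides only the stmts at that index.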

References SLP_INSTANCE_GROUP_SIZE, and data_reference::stmt.

Referenced by vect_analyze_slp().

| static void vect_mark_slp_stmts_relevant | ( | ) |

Mark the statements of the tree rooted at NODE as relevant (vect_used).

References dump_printf(), dump_printf_loc(), FOR_EACH_VEC_ELT, _slp_tree::load_permutation, MSG_NOTE, SLP_INSTANCE_LOADS, and vect_location.

| static void vect_print_slp_tree | ( | ) |

Dump an SLP tree NODE using flags specified in DUMP_KIND.

References gcc_assert, STMT_VINFO_RELEVANT, vect_used_in_scope, and vinfo_for_stmt().

| static void vect_remove_slp_scalar_calls | ( | ) |

Replace scalar calls from SLP node NODE with setting of their lhs to zero. For loop vectorization this is done in vectorizable_call, but for SLP it needs to be deferred until end of vect_schedule_slp, because multiple SLP instances may refer to the same scalar stmt.

| bool vect_schedule_slp | ( | ) |

Generate vector code for all SLP instances in the loop/basic block.

Schedule the tree of INSTANCE.

Remove scalar call stmts. Do not do this for basic-block vectorization as not all uses may be vectorized. ??? Why should this be necessary? DCE should be able to remove the stmts itself. ??? For BB vectorization we can as well remove scalar stmts starting from the SLP tree root if they have no uses.

Free the attached stmt_vec_info and remove the stmt.

Referenced by vect_transform_loop().

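| static bool vect_schedule_slp_instance | ( | slp_tree node, slp_instance instance, unsigned int vectorization_factor | ) |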

Vectorize SLP instance tree in postorder.

VECTYPE is the type of the destination.

For each SLP instance calculate number of vector stmts to be created for the scalar stmts in each node of the SLP tree. Number of vector elements in one vector iteration is the number of scalar elements in one scalar iteration (GROUP_SIZE) multiplied by VF divided by vector size.

Loads should be inserted before the first load.

Stores should be inserted just before the last store.

Mark the first element of the reduction chain as reduction to properly transform the node. In the analysis phase only the last element of the chain is marked as reduction.

| bb_vec_info vect_slp_analyze_bb | ( | ) |

Autodetect first vector size we try.

Try the next biggest vector size.

References build_int_cst(), build_real(), dconst0, dconst1, gimple_bb(), gimple_op(), loop_preheader_edge(), NULL, PHI_ARG_DEF_FROM_EDGE, SCALAR_FLOAT_TYPE_P, SSA_NAME_DEF_STMT, and TREE_TYPE.

| static bb_vec_info vect_slp_analyze_bb_1 | ( | ) |

Check if the basic block can be vectorized.

Check the SLP opportunities in the basic block, analyze and build SLP trees.

Mark all the statements that we want to vectorize as pure SLP and relevant.

Cost model: check if the vectorization is worthwhile.

| static bool vect_slp_analyze_node_operations | ( | ) |

Analyze statements contained in SLP tree node after recursively analyzing the subtree. Return TRUE if the operations are supported.

| static bool vect_slp_analyze_operations | ( | ) |

Analyze statements in SLP instances of the basic block. Return TRUE if the operations are supported.

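| static void vect_slp_rearrange_stmts | ( | slp_tree node, unsigned int group_size, vec< unsigned > permutation | ) |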

Rearrange the statements of NODE according to PERMUTATION.

References bitmap_clear(), FOR_EACH_VEC_ELT, _slp_tree::load_permutation, sbitmap_alloc(), and SLP_INSTANCE_LOADS.

| void vect_slp_transform_bb | ( | ) |

Vectorize the basic block.

Schedule all the SLP instances when the first SLP stmt is reached.

| static bool vect_supported_load_permutation_p | ( | ) |

Check if the required load permutations in the SLP instance SLP_INSTN are supported.

In case of reduction every load permutation is allowed, since the order of the reduction statements is not important (as opposed to the case of grouped stores). The only condition we need to check is that all the load nodes are of the same size and have the same permutation (and then rearrange all the nodes of the SLP instance according to this permutation).

Check that all the load nodes are of the same size.

??? Can't we assert this?

Reduction (there are no data-refs in the root). In reduction chain the order of the loads is important.

Compare all the permutation sequences to the first one. We know that at least one load is permuted.

Check that the loads in the first sequence are different and there are no gaps between them.

This permutation is valid for reduction. Since the order of the statements in the nodes is not important unless they are memory accesses, we can rearrange the statements in all the nodes according to the order of the loads.

We are done, no actual permutations need to be generated.

In basic block vectorization we allow any subchain of an interleaving chain. FORNOW: not supported in loop SLP because of realignment complications.

Check that for every node in the instance the loads form a subchain.

Check that the alignment of the first load in every subchain, i.e., the first statement in every load node, is supported. ??? This belongs in alignment checking.

We are done, no actual permutations need to be generated.

FORNOW: the only supported permutation is 0..01..1.. of length equal to GROUP_SIZE and where each sequence of same drs is of GROUP_SIZE length as well (unless it's reduction).

References bitmap_bit_p, bitmap_set_bit, and sbitmap_free().

| bool vect_transform_slp_perm_load | ( | slp_tree node, vec< tree > dr_chain, gimple_stmt_iterator *gsi, int vf, slp_instance slp_node_instance, bool analyze_only | ) |

Generate vector permute statements from a list of loads in DR_CHAIN. If ANALYZE_ONLY is TRUE, only check that it is possible to create valid permute statements for the SLP node NODE of the SLP instance SLP_NODE_INSTANCE.

The generic VEC_PERM_EXPR code always uses an integral type of the same size as the vector element being permuted.

The number of vector stmts to generate based only on SLP_NODE_INSTANCE unrolling factor.

Number of copies is determined by the final vectorization factor relative to the SLP_NODE_INSTANCE unrolling factor.

Generate permutation masks for every NODE. Number of masks for each NODE is equal to GROUP_SIZE.

E.g., we have a group of three nodes with three loads from the same location in each node, and the vector size is 4. I.e., we have an a0b0c0a1b1c1... sequence and we need to create the following vectors:

for a's: a0a0a0a1 a1a1a2a2 a2a3a3a3

for b's: b0b0b0b1 b1b1b2b2 b2b3b3b3

...

The masks for a's should be: {0,0,0,3} {3,3,6,6} {6,9,9,9}. The last mask is illegal since we assume two operands for permute operation, and the mask element values can't be outside that range. Hence, the last mask must be converted into {2,5,5,5}.

For the first two permutations we need the first and the second input vectors: {a0,b0,c0,a1} and {b1,c1,a2,b2}, and for the last permutation we need the second and the third vectors: {b1,c1,a2,b2} and {c2,a3,b3,c3}.
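The mask arithmetic in this example can be checked with a small model. This is a simplified sketch and not the incremental algorithm of vect_get_mask_element(): the helpers `raw_masks` and `rebase_mask` are hypothetical, and rebasing is done per whole mask by subtracting the offset of the first input vector it touches.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Element-index masks for load number k (0 = a, 1 = b, ...) of a group of
// `group_size` loads, each replicated `group_size` times, split into
// `group_size` masks of `nunits` elements.
std::vector<std::vector<int>> raw_masks(int group_size, int k, int nunits) {
  std::vector<std::vector<int>> masks(group_size);
  int pos = 0;  // position in the replicated sequence a0a0a0a1a1a1...
  for (int m = 0; m < group_size; ++m)
    for (int e = 0; e < nunits; ++e, ++pos)
      masks[m].push_back((pos / group_size) * group_size + k);
  return masks;
}

// Rebase a mask so that it indexes into two adjacent input vectors starting
// at `first_vec`. Returns false if the mask would need more than two vectors.
bool rebase_mask(std::vector<int>& mask, int nunits, int& first_vec) {
  if (mask.empty() || nunits <= 0)
    return false;
  const int lo = *std::min_element(mask.begin(), mask.end());
  const int hi = *std::max_element(mask.begin(), mask.end());
  first_vec = lo / nunits;
  if (hi / nunits - first_vec > 1)
    return false;
  for (int& v : mask)
    v -= first_vec * nunits;
  return true;
}
```

For the a's with GROUP_SIZE = 3 and a vector size of 4, the third raw mask {6,9,9,9} rebases to {2,5,5,5} with the second input vector as its first operand, in line with the description above.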

References SLP_TREE_NUMBER_OF_VEC_STMTS, and SLP_TREE_VEC_STMTS.

| void vect_update_slp_costs_according_to_vf | ( | ) |

SLP costs are calculated according to SLP instance unrolling factor (i.e., the number of created vector stmts depends on the unrolling factor). However, the actual number of vector stmts for every SLP node depends on VF which is set later in vect_analyze_operations (). Hence, SLP costs should be updated. In this function we assume that the inside costs calculated in vect_model_xxx_cost are linear in ncopies.

We assume that costs are linear in ncopies.

Record the instance's instructions in the target cost model. This was delayed until here because the count of instructions isn't known beforehand.
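The linearity assumption can be sketched as follows. The `CostEntry` type and `scaled_body_cost` function are hypothetical, and taking the number of copies as VF divided by the instance's unrolling factor is an assumption based on the description of copies given for vect_transform_slp_perm_load.

```cpp
#include <cassert>
#include <vector>

// Hypothetical body cost record: `count` stmts of cost `unit_cost` each.
struct CostEntry {
  unsigned count;
  unsigned unit_cost;
};

// Scale the per-instance body costs by the number of copies implied by the
// final vectorization factor, assuming costs are linear in ncopies.
unsigned scaled_body_cost(const std::vector<CostEntry>& body, unsigned vf,
                          unsigned unrolling_factor) {
  assert(unrolling_factor > 0);
  const unsigned ncopies = vf / unrolling_factor;  // assumed definition
  unsigned total = 0;
  for (const CostEntry& e : body)
    total += e.count * ncopies * e.unit_cost;
  return total;
}
```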