Functions

| Return type | Function |
| --- | --- |
| static void | add_stmt_info_to_vec (stmt_vector_for_cost *stmt_cost_vec, int count, enum vect_cost_for_stmt kind, gimple stmt, int misalign) |
| static loop_vec_info | loop_vec_info_for_loop () |
| static bool | nested_in_vect_loop_p () |
| static bb_vec_info | vec_info_for_bb () |
| void | init_stmt_vec_info_vec (void) |
| void | free_stmt_vec_info_vec (void) |
| static stmt_vec_info | vinfo_for_stmt () |
| static void | set_vinfo_for_stmt () |
| static gimple | get_earlier_stmt () |
| static gimple | get_later_stmt () |
| static bool | is_pattern_stmt_p () |
| static bool | is_loop_header_bb_p () |
| static int | vect_pow2 () |
| static int | builtin_vectorization_cost (enum vect_cost_for_stmt type_of_cost, tree vectype, int misalign) |
| static int | vect_get_stmt_cost (enum vect_cost_for_stmt type_of_cost) |
| static void * | init_cost () |
| static unsigned | add_stmt_cost (void *data, int count, enum vect_cost_for_stmt kind, stmt_vec_info stmt_info, int misalign, enum vect_cost_model_location where) |
| static void | finish_cost (void *data, unsigned *prologue_cost, unsigned *body_cost, unsigned *epilogue_cost) |
| static void | destroy_cost_data () |
| static bool | aligned_access_p () |
| static bool | known_alignment_for_access_p () |
| void | slpeel_make_loop_iterate_ntimes (struct loop *, tree) |
| bool | slpeel_can_duplicate_loop_p (const struct loop *, const_edge) |
| void | vect_loop_versioning (loop_vec_info, unsigned int, bool) |
| void | vect_do_peeling_for_loop_bound (loop_vec_info, tree *, unsigned int, bool) |
| void | vect_do_peeling_for_alignment (loop_vec_info, unsigned int, bool) |
| LOC | find_loop_location (struct loop *) |
| bool | vect_can_advance_ivs_p (loop_vec_info) |
| tree | get_vectype_for_scalar_type (tree) |
| tree | get_same_sized_vectype (tree, tree) |
| bool | vect_is_simple_use (tree, gimple, loop_vec_info, bb_vec_info, gimple *, tree *, enum vect_def_type *) |
| bool | vect_is_simple_use_1 (tree, gimple, loop_vec_info, bb_vec_info, gimple *, tree *, enum vect_def_type *, tree *) |
| bool | supportable_widening_operation (enum tree_code, gimple, tree, tree, enum tree_code *, enum tree_code *, int *, vec< tree > *) |
| bool | supportable_narrowing_operation (enum tree_code, tree, tree, enum tree_code *, int *, vec< tree > *) |
| stmt_vec_info | new_stmt_vec_info (gimple stmt, loop_vec_info, bb_vec_info) |
| void | free_stmt_vec_info (gimple stmt) |
| tree | vectorizable_function (gimple, tree, tree) |
| void | vect_model_simple_cost (stmt_vec_info, int, enum vect_def_type *, stmt_vector_for_cost *, stmt_vector_for_cost *) |
| void | vect_model_store_cost (stmt_vec_info, int, bool, enum vect_def_type, slp_tree, stmt_vector_for_cost *, stmt_vector_for_cost *) |
| void | vect_model_load_cost (stmt_vec_info, int, bool, slp_tree, stmt_vector_for_cost *, stmt_vector_for_cost *) |
| unsigned | record_stmt_cost (stmt_vector_for_cost *, int, enum vect_cost_for_stmt, stmt_vec_info, int, enum vect_cost_model_location) |
| void | vect_finish_stmt_generation (gimple, gimple, gimple_stmt_iterator *) |
| bool | vect_mark_stmts_to_be_vectorized (loop_vec_info) |
| tree | vect_get_vec_def_for_operand (tree, gimple, tree *) |
| tree | vect_init_vector (gimple, tree, tree, gimple_stmt_iterator *) |
| tree | vect_get_vec_def_for_stmt_copy (enum vect_def_type, tree) |
| bool | vect_transform_stmt (gimple, gimple_stmt_iterator *, bool *, slp_tree, slp_instance) |
| void | vect_remove_stores (gimple) |
| bool | vect_analyze_stmt (gimple, bool *, slp_tree) |
| bool | vectorizable_condition (gimple, gimple_stmt_iterator *, gimple *, tree, int, slp_tree) |
| void | vect_get_load_cost (struct data_reference *, int, bool, unsigned int *, unsigned int *, stmt_vector_for_cost *, stmt_vector_for_cost *, bool) |
| void | vect_get_store_cost (struct data_reference *, int, unsigned int *, stmt_vector_for_cost *) |
| bool | vect_supportable_shift (enum tree_code, tree) |
| void | vect_get_vec_defs (tree, tree, gimple, vec< tree > *, vec< tree > *, slp_tree, int) |
| tree | vect_gen_perm_mask (tree, unsigned char *) |
| bool | vect_can_force_dr_alignment_p (const_tree, unsigned int) |
| enum dr_alignment_support | vect_supportable_dr_alignment (struct data_reference *, bool) |
| tree | vect_get_smallest_scalar_type (gimple, HOST_WIDE_INT *, HOST_WIDE_INT *) |
| bool | vect_analyze_data_ref_dependences (loop_vec_info, int *) |
| bool | vect_slp_analyze_data_ref_dependences (bb_vec_info) |
| bool | vect_enhance_data_refs_alignment (loop_vec_info) |
| bool | vect_analyze_data_refs_alignment (loop_vec_info, bb_vec_info) |
| bool | vect_verify_datarefs_alignment (loop_vec_info, bb_vec_info) |
| bool | vect_analyze_data_ref_accesses (loop_vec_info, bb_vec_info) |
| bool | vect_prune_runtime_alias_test_list (loop_vec_info) |
| tree | vect_check_gather (gimple, loop_vec_info, tree *, tree *, int *) |
| bool | vect_analyze_data_refs (loop_vec_info, bb_vec_info, int *) |
| tree | vect_create_data_ref_ptr (gimple, tree, struct loop *, tree, tree *, gimple_stmt_iterator *, gimple *, bool, bool *) |
| tree | bump_vector_ptr (tree, gimple, gimple_stmt_iterator *, gimple, tree) |
| tree | vect_create_destination_var (tree, tree) |
| bool | vect_grouped_store_supported (tree, unsigned HOST_WIDE_INT) |
| bool | vect_store_lanes_supported (tree, unsigned HOST_WIDE_INT) |
| bool | vect_grouped_load_supported (tree, unsigned HOST_WIDE_INT) |
| bool | vect_load_lanes_supported (tree, unsigned HOST_WIDE_INT) |
| void | vect_permute_store_chain (vec< tree >, unsigned int, gimple, gimple_stmt_iterator *, vec< tree > *) |
| tree | vect_setup_realignment (gimple, gimple_stmt_iterator *, tree *, enum dr_alignment_support, tree, struct loop **) |
| void | vect_transform_grouped_load (gimple, vec< tree >, int, gimple_stmt_iterator *) |
| void | vect_record_grouped_load_vectors (gimple, vec< tree >) |
| tree | vect_get_new_vect_var (tree, enum vect_var_kind, const char *) |
| tree | vect_create_addr_base_for_vector_ref (gimple, gimple_seq *, tree, struct loop *) |
| void | destroy_loop_vec_info (loop_vec_info, bool) |
| gimple | vect_force_simple_reduction (loop_vec_info, gimple, bool, bool *) |
| loop_vec_info | vect_analyze_loop (struct loop *) |
| void | vect_transform_loop (loop_vec_info) |
| loop_vec_info | vect_analyze_loop_form (struct loop *) |
| bool | vectorizable_live_operation (gimple, gimple_stmt_iterator *, gimple *) |
| bool | vectorizable_reduction (gimple, gimple_stmt_iterator *, gimple *, slp_tree) |
| bool | vectorizable_induction (gimple, gimple_stmt_iterator *, gimple *) |
| tree | get_initial_def_for_reduction (gimple, tree, tree *) |
| int | vect_min_worthwhile_factor (enum tree_code) |
| int | vect_get_known_peeling_cost (loop_vec_info, int, int *, int, stmt_vector_for_cost *, stmt_vector_for_cost *) |
| int | vect_get_single_scalar_iteration_cost (loop_vec_info) |
| void | vect_free_slp_instance (slp_instance) |
| bool | vect_transform_slp_perm_load (slp_tree, vec< tree >, gimple_stmt_iterator *, int, slp_instance, bool) |
| bool | vect_schedule_slp (loop_vec_info, bb_vec_info) |
| void | vect_update_slp_costs_according_to_vf (loop_vec_info) |
| bool | vect_analyze_slp (loop_vec_info, bb_vec_info) |
| bool | vect_make_slp_decision (loop_vec_info) |
| void | vect_detect_hybrid_slp (loop_vec_info) |
| void | vect_get_slp_defs (vec< tree >, slp_tree, vec< vec< tree > > *, int) |
| LOC | find_bb_location (basic_block) |
| bb_vec_info | vect_slp_analyze_bb (basic_block) |
| void | vect_slp_transform_bb (basic_block) |
| void | vect_pattern_recog (loop_vec_info, bb_vec_info) |
| unsigned | vectorize_loops (void) |

Function bump_vector_ptr

Increment a pointer (to a vector type) by vector-size. If requested, i.e. if PTR_INCR is given, then also connect the new increment stmt to the existing def-use update-chain of the pointer, by modifying the PTR_INCR as illustrated below:

The pointer def-use update-chain before this function:

    DATAREF_PTR = phi (p_0, p_2)
    ....
    PTR_INCR: p_2 = DATAREF_PTR + step

The pointer def-use update-chain after this function:

    DATAREF_PTR = phi (p_0, p_2)
    ....
    NEW_DATAREF_PTR = DATAREF_PTR + BUMP
    ....
    PTR_INCR: p_2 = NEW_DATAREF_PTR + step

Input:

DATAREF_PTR - ssa_name of a pointer (to vector type) that is being updated in the loop.

PTR_INCR - optional. The stmt that updates the pointer in each iteration of the loop. The increment amount across iterations is expected to be vector_size.

BSI - location where the new update stmt is to be placed.

STMT - the original scalar memory-access stmt that is being vectorized.

BUMP - optional. The offset by which to bump the pointer. If not given, the offset is assumed to be vector_size.

Output: Return NEW_DATAREF_PTR as illustrated above.

References copy_ssa_name(), DR_PTR_INFO, duplicate_ssa_name_ptr_info(), gimple_build_assign_with_ops(), mark_ptr_info_alignment_unknown(), tree_int_cst_compare(), vect_finish_stmt_generation(), and vinfo_for_stmt().

Referenced by vectorizable_load(), and vectorizable_store().
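The chain rewrite above can be mimicked with ordinary C pointers. The minimal sketch below is an illustration only: VS, load_and_sum, and sum_two_copies are hypothetical names, and a "vector" is modeled as VS consecutive ints. Each iteration loads two vectors; the second load goes through a pointer bumped by one vector, and the per-iteration increment is then applied to the bumped pointer, mirroring how PTR_INCR is rewritten to start from NEW_DATAREF_PTR.

```c
#include <assert.h>

#define VS 4  /* elements per "vector" (assumed for illustration) */

/* Sum of one vector: VS consecutive ints starting at P.  */
static int load_and_sum (const int *p)
{
  int s = 0;
  for (int i = 0; i < VS; i++)
    s += p[i];
  return s;
}

/* Sum N ints, loading two vectors per iteration (hypothetical helper).  */
static int sum_two_copies (const int *a, int n)
{
  const int *dataref_ptr = a;            /* DATAREF_PTR = phi (p_0, p_2) */
  int total = 0;
  assert (n % (2 * VS) == 0);
  for (int i = 0; i < n; i += 2 * VS)
    {
      total += load_and_sum (dataref_ptr);
      /* NEW_DATAREF_PTR = DATAREF_PTR + BUMP (BUMP = one vector).  */
      const int *new_dataref_ptr = dataref_ptr + VS;
      total += load_and_sum (new_dataref_ptr);
      /* PTR_INCR: p_2 = NEW_DATAREF_PTR + step (step = one vector).  */
      dataref_ptr = new_dataref_ptr + VS;
    }
  return total;
}
```

Because the increment starts from the bumped pointer, the pointer advances by BUMP + step per iteration, so every element is visited exactly once.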

Function get_initial_def_for_reduction

Input:

STMT - a stmt that performs a reduction operation in the loop.

INIT_VAL - the initial value of the reduction variable.

Output:

ADJUSTMENT_DEF - a tree that holds a value to be added to the final result of the reduction (used for adjusting the epilog - see below).

Return a vector variable, initialized according to the operation that STMT performs. This vector will be used as the initial value of the vector of partial results.

Option1 (adjust in epilog): Initialize the vector as follows:

    add/bit or/xor:    [0,0,...,0,0]
    mult/bit and:      [1,1,...,1,1]
    min/max/cond_expr: [init_val,init_val,...,init_val,init_val]

and when necessary (e.g. add/mult case) let the caller know that it needs to adjust the result by init_val.

Option2: Initialize the vector as follows:

    add/bit or/xor:    [init_val,0,0,...,0]
    mult/bit and:      [init_val,1,1,...,1]
    min/max/cond_expr: [init_val,init_val,...,init_val]

and no adjustments are needed.

For example, for the following code:

    s = init_val;
    for (i=0;i<n;i++)
      s = s + a[i];

STMT is 's = s + a[i]', and the reduction variable is 's'. For a vector of 4 units, we want to return either [0,0,0,init_val], or [0,0,0,0] and let the caller know that it needs to adjust the result at the end by 'init_val'.

FORNOW, we are using the 'adjust in epilog' scheme, because this way the initialization vector is simpler (same element in all entries), if ADJUSTMENT_DEF is not NULL, and Option2 otherwise.

A cost model should help decide between these two schemes.

References build_constructor(), build_int_cst(), build_real(), build_vector_from_val(), dconst0, dconst1, flow_bb_inside_loop_p(), get_vectype_for_scalar_type(), gimple_assign_rhs_code(), nested_in_vect_loop_p(), vec_alloc(), vect_create_destination_var(), vect_double_reduction_def, vect_get_vec_def_for_operand(), and vinfo_for_stmt().

Referenced by vect_create_epilog_for_reduction(), and vect_get_vec_def_for_operand().
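A small sketch of the two initialization schemes for an add reduction, assuming a 4-lane vector modeled as an int array (NUNITS and reduce_add are hypothetical names, not GCC code). With option 1 the partial-result vector starts as all zeros and init_val is added back in the epilog; with option 2 init_val is placed in one lane and no adjustment is needed. Both yield the same scalar result.

```c
#include <assert.h>

#define NUNITS 4  /* lanes per vector (assumed) */

/* s = init_val + a[0] + ... + a[n-1], computed with NUNITS partial sums.
   OPTION 1: start from [0,...,0] and add init_val in the epilog.
   OPTION 2: start from [init_val,0,...,0]; no adjustment needed.  */
static int reduce_add (const int *a, int n, int init_val, int option)
{
  int partial[NUNITS];
  int adjustment = 0;                  /* plays the role of ADJUSTMENT_DEF */
  assert (n % NUNITS == 0 && (option == 1 || option == 2));
  for (int lane = 0; lane < NUNITS; lane++)
    partial[lane] = 0;                 /* neutral element of addition */
  if (option == 1)
    adjustment = init_val;
  else
    partial[0] = init_val;

  for (int i = 0; i < n; i += NUNITS)  /* vector loop */
    for (int lane = 0; lane < NUNITS; lane++)
      partial[lane] += a[i + lane];

  int s = 0;                           /* epilog: reduce the partial sums */
  for (int lane = 0; lane < NUNITS; lane++)
    s += partial[lane];
  return s + adjustment;
}
```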

Function supportable_widening_operation

Check whether an operation represented by the code CODE is a widening operation that is supported by the target platform in vector form (i.e., when operating on arguments of type VECTYPE_IN producing a result of type VECTYPE_OUT).

Widening operations we currently support are NOP (CONVERT), FLOAT and WIDEN_MULT. This function checks if these operations are supported by the target platform either directly (via vector tree-codes), or via target builtins.

Output:

- CODE1 and CODE2 are codes of vector operations to be used when vectorizing the operation, if available.

- MULTI_STEP_CVT determines the number of required intermediate steps in case of multi-step conversion (like char->short->int - in that case MULTI_STEP_CVT will be 1).

- INTERM_TYPES contains the intermediate type required to perform the widening operation (short in the above example).

References insn_data, nested_in_vect_loop_p(), insn_data_d::operand, optab_default, optab_for_tree_code(), optab_handler(), supportable_widening_operation(), lang_hooks_for_types::type_for_mode, lang_hooks::types, vect_used_by_reduction, and vinfo_for_stmt().

Referenced by supportable_widening_operation(), vect_recog_widen_mult_pattern(), vect_recog_widen_shift_pattern(), and vectorizable_conversion().
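Assuming that every widening step doubles the element width, the number of intermediate steps can be counted as sketched below. This is an illustrative helper (count_widening_steps is a hypothetical name); the real function additionally checks, through the optab queries listed in the References above, that the target supports each step.

```c
#include <assert.h>

/* Hypothetical helper: count the intermediate types needed to widen from
   IN_BITS to OUT_BITS when each conversion step doubles the width.
   Returns the step count (cf. MULTI_STEP_CVT) and stores the width of
   each intermediate type in INTERM_BITS (cf. INTERM_TYPES).  */
static int count_widening_steps (int in_bits, int out_bits,
                                 int interm_bits[], int max_interm)
{
  int ratio, steps = 0;
  assert (in_bits > 0 && out_bits > in_bits && out_bits % in_bits == 0);
  ratio = out_bits / in_bits;
  assert ((ratio & (ratio - 1)) == 0);   /* widths must differ by 2^k */
  for (int w = in_bits * 2; w < out_bits; w *= 2)
    {
      assert (steps < max_interm);
      interm_bits[steps++] = w;
    }
  return steps;
}
```

For char->int (8 to 32 bits) it returns 1 with a single 16-bit (short) intermediate, matching the example in the text.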

Function vect_analyze_data_refs.

Find all the data references in the loop or basic block.

The general structure of the analysis of data refs in the vectorizer is as follows:

1- vect_analyze_data_refs(loop/bb): call compute_data_dependences_for_loop/bb to find and analyze all data-refs in the loop/bb and their dependences.

2- vect_analyze_data_ref_dependences(): apply dependence testing using ddrs.

3- vect_analyze_data_refs_alignment(): check that ref_stmt.alignment is ok.

4- vect_analyze_data_ref_accesses(): check that ref_stmt.step is ok.

References affine_iv::base, create_data_ref(), DR_BASE_ADDRESS, DR_INIT, DR_IS_READ, DR_OFFSET, DR_REF, DR_STEP, DR_STMT, dump_enabled_p(), dump_generic_expr(), dump_gimple_stmt(), dump_printf(), dump_printf_loc(), find_data_references_in_loop(), find_data_references_in_stmt(), find_loop_nest(), free_data_ref(), get_inner_reference(), get_vectype_for_scalar_type(), gimple_call_arg(), gimple_call_internal_fn(), gimple_call_internal_p(), gimple_clobber_p(), gsi_end_p(), gsi_next(), gsi_start_bb(), gsi_stmt(), highest_pow2_factor(), host_integerp(), HOST_WIDE_INT, integer_zerop(), is_gimple_call(), loop_containing_stmt(), nested_in_vect_loop_p(), offset, loop::simduid, simple_iv(), split_constant_offset(), affine_iv::step, data_reference::stmt, stmt_can_throw_internal(), targetm, tree_int_cst_equal(), unshare_expr(), vect_check_gather(), vect_location, and vinfo_for_stmt().

Referenced by vect_analyze_loop_2(), and vect_slp_analyze_bb_1().
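The last step of the analysis outlined above checks each data reference's step. The sketch below is a deliberately simplified, hypothetical model of that kind of check (enum access_kind and classify_step are not GCC names): it classifies a constant byte step relative to the scalar element size. The real analysis is more involved; for example, it also considers groups of interleaved accesses.

```c
#include <assert.h>

/* Hypothetical access kinds for a constant step (in bytes).  */
enum access_kind
{
  ACCESS_INVARIANT,    /* step 0: same address every iteration */
  ACCESS_CONSECUTIVE,  /* step == element size, e.g. a[i] */
  ACCESS_REVERSED,     /* step == -element size, e.g. a[n - i] */
  ACCESS_STRIDED,      /* other multiples, e.g. a[2*i] */
  ACCESS_UNSUPPORTED   /* step not a multiple of the element size */
};

static enum access_kind classify_step (long step, long elem_size)
{
  assert (elem_size > 0);
  if (step == 0)
    return ACCESS_INVARIANT;
  if (step == elem_size)
    return ACCESS_CONSECUTIVE;
  if (step == -elem_size)
    return ACCESS_REVERSED;
  if (step % elem_size == 0)
    return ACCESS_STRIDED;
  return ACCESS_UNSUPPORTED;
}
```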

Function vect_create_addr_base_for_vector_ref.

Create an expression that computes the address of the first memory location that will be accessed for a data reference.

Input:

STMT: The statement containing the data reference.

NEW_STMT_LIST: Must be initialized to NULL_TREE or a statement list.

OFFSET: Optional. If supplied, it is added to the initial address.

LOOP: Specifies the loop nest relative to which the address should be computed. For example, when the dataref is in an inner-loop nested in an outer-loop that is now being vectorized, LOOP can be either the outer-loop, or the inner-loop. The first memory location accessed by the following dataref ('in' points to short):

    for (i=0; i<N; i++)
      for (j=0; j<M; j++)
        s += in[i+j]

is as follows:

    if LOOP=i_loop: &in         (relative to i_loop)
    if LOOP=j_loop: &in+i*2B    (relative to j_loop)

Output:

1. Return an SSA_NAME whose value is the address of the memory location of the first vector of the data reference.

2. If new_stmt_list is not NULL_TREE after return then the caller must insert these statement(s) which define the returned SSA_NAME.

FORNOW: We are only handling array accesses with step 1.

References build_pointer_type(), DR_BASE_ADDRESS, DR_INIT, DR_OFFSET, DR_PTR_INFO, DR_REF, dump_enabled_p(), dump_generic_expr(), dump_printf_loc(), duplicate_ssa_name_ptr_info(), force_gimple_operand(), get_name(), gimple_seq_add_seq(), mark_ptr_info_alignment_unknown(), nested_in_vect_loop_p(), unshare_expr(), vect_get_new_vect_var(), vect_location, vect_pointer_var, and vinfo_for_stmt().

Referenced by vect_create_cond_for_align_checks(), vect_create_data_ref_ptr(), vect_gen_niters_for_prolog_loop(), and vect_setup_realignment().
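The two answers for the in[i+j] example can be written out as a tiny C helper. This is a hypothetical illustration (first_access_addr and enum loop_level are not GCC names): relative to the outer loop the first access is in[0], and relative to the inner loop, for a fixed outer iteration i, it is in[i], which lies i * sizeof (short) bytes past &in (the "2B" above assumes a 2-byte short).

```c
#include <assert.h>
#include <stddef.h>

enum loop_level { I_LOOP, J_LOOP };   /* hypothetical: which loop LOOP names */

/* First address accessed by in[i+j], relative to the chosen loop.  */
static const short *
first_access_addr (const short *in, size_t i, enum loop_level loop)
{
  assert (in != NULL);
  if (loop == I_LOOP)
    return in;   /* &in: the first access of the whole nest (i = j = 0) */
  /* Relative to the j-loop, i is fixed, so the first access is in[i + 0],
     i.e. &in advanced by i * sizeof (short) bytes.  */
  return (const short *) ((const char *) in + i * sizeof (short));
}
```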

Function vect_create_data_ref_ptr.

Create a new pointer-to-AGGR_TYPE variable (ap), that points to the first location accessed in the loop by STMT, along with the def-use update chain to appropriately advance the pointer through the loop iterations. Also set aliasing information for the pointer. This pointer is used by the callers to this function to create a memory reference expression for vector load/store access.

Input:

1. STMT: a stmt that references memory. Expected to be of the form GIMPLE_ASSIGN <name, data-ref> or GIMPLE_ASSIGN <data-ref, name>.

2. AGGR_TYPE: the type of the reference, which should be either a vector or an array.

3. AT_LOOP: the loop where the vector memref is to be created.

4. OFFSET (optional): an offset to be added to the initial address accessed by the data-ref in STMT.

5. BSI: location where the new stmts are to be placed if there is no loop.

6. ONLY_INIT: indicates whether ap is to be updated in the loop, or remain pointing to the initial address.

Output:

1. Declare a new ptr to vector_type, and have it point to the base of the data reference (initial address accessed by the data reference). For example, for vector of type V8HI, the following code is generated:

    v8hi *ap;
    ap = (v8hi *)initial_address;

where, if OFFSET is not supplied:

    initial_address = &a[init];

and if OFFSET is supplied:

    initial_address = &a[init + OFFSET];

Return the initial_address in INITIAL_ADDRESS.

2. If ONLY_INIT is true, just return the initial pointer. Otherwise, also update the pointer in each iteration of the loop. Return the increment stmt that updates the pointer in PTR_INCR.

3. Set INV_P to true if the access pattern of the data reference in the vectorized loop is invariant. Set it to false otherwise.

4. Return the pointer.

References alias_sets_conflict_p(), build_pointer_type_for_mode(), create_iv(), DR_BASE_ADDRESS, DR_BASE_OBJECT, DR_PTR_INFO, DR_REF, DR_STEP, dump_enabled_p(), dump_generic_expr(), dump_printf(), dump_printf_loc(), duplicate_ssa_name_ptr_info(), get_alias_set(), get_name(), gimple_assign_set_lhs(), gimple_bb(), gsi_insert_before(), gsi_insert_on_edge_immediate(), gsi_insert_seq_before(), gsi_insert_seq_on_edge_immediate(), GSI_SAME_STMT, gsi_stmt(), integer_zerop(), loop_preheader_edge(), make_ssa_name(), nested_in_vect_loop_p(), new_stmt_vec_info(), ptr_mode, set_vinfo_for_stmt(), standard_iv_increment_position(), tree_code_name, tree_int_cst_sgn(), useless_type_conversion_p(), vect_create_addr_base_for_vector_ref(), vect_get_new_vect_var(), vect_location, vect_pointer_var, and vinfo_for_stmt().

Referenced by vect_setup_realignment(), vectorizable_load(), and vectorizable_store().
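What the generated code does at run time can be sketched with GCC's vector extension (`vector_size (16)`, also accepted by Clang), which gives an 8 x short type matching the V8HI example. The function below is hypothetical: it computes initial_address as &a[init + OFFSET], then walks it one vector per iteration, as the in-loop pointer update would when ONLY_INIT is false. To stay within C's alignment and aliasing rules, the sketch keeps the pointer as `const short *` and copies each vector with memcpy instead of dereferencing a `v8hi *`.

```c
#include <assert.h>
#include <string.h>

typedef short v8hi __attribute__ ((vector_size (16)));  /* 8 x short */

/* Sum all lanes of NVEC vectors read from &a[init + offset] onward.  */
static int sum_vectors (const short *a, int init, int offset, int nvec)
{
  /* initial_address = &a[init + OFFSET]; pass offset == 0 when OFFSET is
     not supplied.  */
  const short *ap = &a[init + offset];
  int total = 0;
  assert (nvec >= 0);
  for (int k = 0; k < nvec; k++)
    {
      v8hi v;
      memcpy (&v, ap, sizeof v);      /* vector load through ap */
      for (int lane = 0; lane < 8; lane++)
        total += v[lane];
      ap += 8;                        /* advance ap by one vector */
    }
  return total;
}
```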

Function vect_get_smallest_scalar_type.

Return the smallest scalar part of STMT. This is used to determine the vectype of the stmt. We generally set the vectype according to the type of the result (lhs). For stmts whose result-type is different from the type of the arguments (e.g., demotion, promotion), vectype will be reset appropriately (later). Note that we have to visit the smallest datatype in this function, because that determines the VF. If the smallest datatype in the loop is present only as the rhs of a promotion operation - we'd miss it.

Such a case, where a variable of this datatype does not appear in the lhs anywhere in the loop, can only occur if it's an invariant, e.g. 'int_x = (int) short_inv', which we'd expect to have been optimized away by invariant motion. However, we cannot rely on invariant motion to always take invariants out of the loop, and so in the case of promotion we also have to check the rhs.

LHS_SIZE_UNIT and RHS_SIZE_UNIT contain the sizes of the corresponding types.

References gimple_assign_cast_p(), gimple_assign_rhs1(), gimple_assign_rhs_code(), gimple_expr_type(), HOST_WIDE_INT, and is_gimple_assign().

Referenced by vect_build_slp_tree_1(), vect_determine_vectorization_factor(), and vect_get_slp_defs().
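A minimal sketch of why the rhs matters for the vectorization factor, assuming 16-byte vectors, a 2-byte short and a 4-byte int (all target-dependent), and assuming the VF is the vector size divided by the smallest element size. The helper names are hypothetical. For 'int_x = (int) short_inv', looking only at the lhs would give a VF of 4 instead of 8.

```c
#include <assert.h>

/* Hypothetical: the smaller of the lhs and rhs scalar sizes (in bytes).  */
static unsigned smallest_size (unsigned lhs_size_unit, unsigned rhs_size_unit)
{
  return rhs_size_unit < lhs_size_unit ? rhs_size_unit : lhs_size_unit;
}

/* Hypothetical: lanes per vector for the smallest scalar type.  */
static unsigned vectorization_factor (unsigned vector_bytes, unsigned smallest)
{
  assert (smallest > 0 && vector_bytes % smallest == 0);
  return vector_bytes / smallest;
}
```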

Function vect_is_simple_use.

Input:

LOOP_VINFO - the vect info of the loop that is being vectorized.

BB_VINFO - the vect info of the basic block that is being vectorized.

OPERAND - operand of STMT in the loop or bb.

DEF - the defining stmt in case OPERAND is an SSA_NAME.

Returns whether a stmt with OPERAND can be vectorized. For loops, supportable operands are constants, loop invariants, and operands that are defined by the current iteration of the loop. Unsupportable operands are those that are defined by a previous iteration of the loop (as is the case in reduction/induction computations). For basic blocks, supportable operands are constants and bb invariants. For now, operands defined outside the basic block are not supported.

References dump_enabled_p(), dump_generic_expr(), dump_gimple_stmt(), dump_printf_loc(), flow_bb_inside_loop_p(), gimple_assign_lhs(), gimple_bb(), gimple_call_lhs(), gimple_nop_p(), gimple_phi_result(), is_gimple_min_invariant(), vect_constant_def, vect_double_reduction_def, vect_external_def, vect_location, vect_unknown_def_type, and vinfo_for_stmt().

Referenced by check_bool_pattern(), process_use(), type_conversion_p(), vect_analyze_slp_cost_1(), vect_get_and_check_slp_defs(), vect_get_vec_def_for_operand(), vect_is_simple_use_1(), vect_recog_rotate_pattern(), vect_recog_vector_vector_shift_pattern(), vectorizable_condition(), vectorizable_conversion(), vectorizable_live_operation(), vectorizable_operation(), vectorizable_reduction(), and vectorizable_store().
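The loop classification described above can be modeled with a few flags. This is a simplified, hypothetical model (struct operand, enum operand_kind and the helpers are not GCC's types). For example, in `for (i...) { t = a[i] * k; s = s + t; }`, k is loop invariant, t is defined by the current iteration, and s flows in from the previous iteration through the loop-header phi.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical operand kinds, loosely following the text above.  */
enum operand_kind
{
  OP_CONSTANT,            /* a literal such as 3 */
  OP_INVARIANT,           /* defined outside the loop */
  OP_CURRENT_ITERATION,   /* defined earlier in the same iteration */
  OP_PREVIOUS_ITERATION   /* carried around the loop (reduction/induction) */
};

struct operand
{
  int is_constant;
  int defined_in_loop;        /* defining stmt is inside the loop */
  int defined_by_header_phi;  /* value reaches the use via a loop-header phi */
};

static enum operand_kind classify_operand (const struct operand *op)
{
  assert (op != NULL);
  if (op->is_constant)
    return OP_CONSTANT;
  if (!op->defined_in_loop)
    return OP_INVARIANT;
  if (op->defined_by_header_phi)
    return OP_PREVIOUS_ITERATION;
  return OP_CURRENT_ITERATION;
}

/* Per the description above, only loop-carried operands are unsupportable.  */
static int is_supportable (enum operand_kind kind)
{
  return kind != OP_PREVIOUS_ITERATION;
}
```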

void vect_loop_versioning (loop_vec_info loop_vinfo, unsigned int th, bool check_profitability)

Function vect_loop_versioning.

If the loop has data references that may or may not be aligned and/or has data reference relations whose independence was not proven, then two versions of the loop need to be generated, one which is vectorized and one which isn't. A test is then generated to control which of the loops is executed. The test checks for the alignment of all of the data references that may or may not be aligned. An additional sequence of runtime tests is generated for each pair of DDRs whose independence was not proven. The vectorized version of the loop is executed only if both the alias and alignment tests pass.

The test generated to check which version of the loop is executed is modified to also check for profitability, as indicated by the cost model initially.

The versioning precondition(s) are placed in *COND_EXPR and *COND_EXPR_STMT_LIST.

References add_phi_arg(), adjust_phi_and_debug_stmts(), build_int_cst(), copy_ssa_name(), create_phi_node(), edge_def::dest, force_gimple_operand_1(), free_original_copy_tables(), gimple_phi_arg_location_from_edge(), gimple_seq_add_seq(), gsi_end_p(), gsi_insert_seq_before(), gsi_last_bb(), gsi_next(), GSI_SAME_STMT, gsi_start_phis(), gsi_stmt(), initialize_original_copy_tables(), is_gimple_condexpr(), loop_version(), basic_block_def::preds, prob, single_exit(), split_edge(), update_ssa(), vect_create_cond_for_alias_checks(), and vect_create_cond_for_align_checks().

Referenced by vect_transform_loop().
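The runtime dispatch produced by versioning can be pictured as the hand-written C sketch below. All names are hypothetical, the 16-byte alignment requirement and the 4-wide vector body are assumptions, and the threshold th stands in for the cost-model check. The vectorized version runs only when both pointers are aligned, the two accessed ranges do not overlap, and the trip count exceeds the threshold; otherwise the original scalar loop runs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for the vectorized loop: 4 elements per step, scalar tail.  */
static void add_vector_version (float *restrict dst,
                                const float *restrict src, size_t n)
{
  size_t i = 0;
  for (; i + 4 <= n; i += 4)
    for (size_t k = 0; k < 4; k++)
      dst[i + k] += src[i + k];
  for (; i < n; i++)
    dst[i] += src[i];
}

/* The original, unvectorized loop.  */
static void add_scalar_version (float *dst, const float *src, size_t n)
{
  for (size_t i = 0; i < n; i++)
    dst[i] += src[i];
}

/* Returns 1 if the vectorized version was executed, 0 otherwise.  */
static int add_versioned (float *dst, const float *src, size_t n, size_t th)
{
  assert (dst != NULL && src != NULL);
  uintptr_t d = (uintptr_t) dst, s = (uintptr_t) src;
  uintptr_t bytes = n * sizeof (float);
  int aligned = d % 16 == 0 && s % 16 == 0;            /* alignment test */
  int independent = d + bytes <= s || s + bytes <= d;  /* alias test */
  int profitable = n > th;                             /* cost-model test */
  if (aligned && independent && profitable)
    {
      add_vector_version (dst, src, n);
      return 1;
    }
  add_scalar_version (dst, src, n);
  return 0;
}
```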

Function vect_permute_store_chain.

Given a chain of interleaved stores in DR_CHAIN of LENGTH that must be a power of 2, generate interleave_high/low stmts to reorder the data correctly for the stores. Return the final references for stores in RESULT_CHAIN.

E.g., LENGTH is 4 and the scalar type is short, i.e., VF is 8. The input is 4 vectors each containing 8 elements. We assign a number to each element; the input sequence is:

    1st vec:  0  1  2  3  4  5  6  7
    2nd vec:  8  9 10 11 12 13 14 15
    3rd vec: 16 17 18 19 20 21 22 23
    4th vec: 24 25 26 27 28 29 30 31

The output sequence should be:

    1st vec:  0  8 16 24  1  9 17 25
    2nd vec:  2 10 18 26  3 11 19 27
    3rd vec:  4 12 20 28  5 13 21 29
    4th vec:  6 14 22 30  7 15 23 31

i.e., we interleave the contents of the four vectors in their order.

We use interleave_high/low instructions to create such output. The input of each interleave_high/low operation is two vectors:

    1st vec    2nd vec
    0 1 2 3    4 5 6 7

The even elements of the result vector are obtained left-to-right from the high/low elements of the first vector. The odd elements of the result are obtained left-to-right from the high/low elements of the second vector. The output of interleave_high will be 0 4 1 5, and that of interleave_low will be 2 6 3 7.

The permutation is done in log2(LENGTH) stages. In each stage interleave_high and interleave_low stmts are created for each pair of vectors in DR_CHAIN, where the first argument is taken from the first half of DR_CHAIN and the second argument from its second half. In our example:

    I1: interleave_high (1st vec, 3rd vec)
    I2: interleave_low  (1st vec, 3rd vec)
    I3: interleave_high (2nd vec, 4th vec)
    I4: interleave_low  (2nd vec, 4th vec)

The output for the first stage is:

    I1:  0 16  1 17  2 18  3 19
    I2:  4 20  5 21  6 22  7 23
    I3:  8 24  9 25 10 26 11 27
    I4: 12 28 13 29 14 30 15 31

The output of the second stage, i.e. the final result, is:

    I1:  0  8 16 24  1  9 17 25
    I2:  2 10 18 26  3 11 19 27
    I3:  4 12 20 28  5 13 21 29
    I4:  6 14 22 30  7 15 23 31

References exact_log2(), gimple_build_assign_with_ops(), make_temp_ssa_name(), memcpy(), vect_finish_stmt_generation(), vect_gen_perm_mask(), and vinfo_for_stmt().

Referenced by vectorizable_store().
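The two-stage permutation can be checked with a plain C model. In the sketch below (hypothetical names; NELT = 8 elements per vector, int elements, and MAX_LEN are assumptions), interleave_high takes the first half of each input, matching the convention in the example where interleave_high of 0 1 2 3 and 4 5 6 7 gives 0 4 1 5. Running it on the 4-vector example yields 4 12 20 28 5 13 21 29 for the third vector.

```c
#include <assert.h>
#include <string.h>

#define NELT 8      /* elements per vector (assumed) */
#define MAX_LEN 8   /* largest chain length this sketch supports (assumed) */

/* interleave_high: alternate elements from the first halves of A and B.  */
static void interleave_high (const int *a, const int *b, int *out)
{
  for (int i = 0; i < NELT / 2; i++)
    {
      out[2 * i] = a[i];
      out[2 * i + 1] = b[i];
    }
}

/* interleave_low: alternate elements from the second halves of A and B.  */
static void interleave_low (const int *a, const int *b, int *out)
{
  for (int i = 0; i < NELT / 2; i++)
    {
      out[2 * i] = a[NELT / 2 + i];
      out[2 * i + 1] = b[NELT / 2 + i];
    }
}

/* Permute CHAIN (LENGTH vectors, LENGTH a power of 2) in place.  */
static void permute_store_chain (int chain[][NELT], int length)
{
  int result[MAX_LEN][NELT];
  assert (length > 0 && length <= MAX_LEN && (length & (length - 1)) == 0);
  for (int stage = 1; stage < length; stage *= 2)   /* log2(length) stages */
    {
      for (int j = 0; j < length / 2; j++)
        {
          /* First argument from the first half, second from the second.  */
          interleave_high (chain[j], chain[j + length / 2], result[2 * j]);
          interleave_low (chain[j], chain[j + length / 2], result[2 * j + 1]);
        }
      memcpy (chain, result, sizeof (int[NELT]) * length);
    }
}
```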

Function vect_setup_realignment

This function is called when vectorizing an unaligned load using the dr_explicit_realign[_optimized] scheme. This function generates the following code at the loop prolog:

       p = initial_addr;
    x  msq_init = *(floor(p));   # prolog load
       realignment_token = call target_builtin;
     loop:
    x  msq = phi (msq_init, ---)

The stmts marked with x are generated only for the case of dr_explicit_realign_optimized.

The code above sets up a new (vector) pointer, pointing to the first location accessed by STMT, and a "floor-aligned" load using that pointer. It also generates code to compute the "realignment-token" (if the relevant target hook was defined), and creates a phi-node at the loop-header bb whose arguments are the result of the prolog-load (created by this function) and the result of a load that takes place in the loop (to be created by the caller to this function).

For the case of dr_explicit_realign_optimized: the caller to this function uses the phi-result (msq) to create the realignment code inside the loop, and sets up the missing phi argument, as follows:

     loop:
       msq = phi (msq_init, lsq)
       lsq = *(floor(p'));        # load in loop
       result = realign_load (msq, lsq, realignment_token);

For the case of dr_explicit_realign:

     loop:
       msq = *(floor(p));         # load in loop
       p' = p + (VS-1);
       lsq = *(floor(p'));        # load in loop
       result = realign_load (msq, lsq, realignment_token);

Input:

STMT - (scalar) load stmt to be vectorized. This load accesses a memory location that may be unaligned.

BSI - place where new code is to be inserted.

ALIGNMENT_SUPPORT_SCHEME - which of the two misalignment handling schemes is used.

Output:

REALIGNMENT_TOKEN - the result of a call to the builtin_mask_for_load target hook, if defined.

Return value - the result of the loop-header phi node.

References add_phi_arg(), build_int_cst(), copy_ssa_name(), create_phi_node(), dr_explicit_realign, dr_explicit_realign_optimized, DR_REF, gimple_assign_lhs(), gimple_assign_set_lhs(), gimple_bb(), gimple_build_assign_with_ops(), gimple_build_call(), gimple_call_lhs(), gimple_call_return_type(), gimple_call_set_lhs(), gsi_insert_before(), gsi_insert_on_edge_immediate(), gsi_insert_seq_before(), gsi_insert_seq_on_edge_immediate(), GSI_SAME_STMT, loop::header, HOST_WIDE_INT, loop::inner, loop_preheader_edge(), make_ssa_name(), nested_in_vect_loop_p(), reference_alias_ptr_type(), targetm, tree_int_cst_compare(), vect_create_addr_base_for_vector_ref(), vect_create_data_ref_ptr(), vect_create_destination_var(), and vinfo_for_stmt().

Referenced by vectorizable_load().
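The dr_explicit_realign_optimized data flow can be simulated on int arrays. This is a sketch under stated assumptions: VS, floor_load, realign_load and copy_realigned are hypothetical names, and the realignment token is modeled as a plain element shift count, whereas real targets obtain it from the builtin_mask_for_load hook and perform the combine in hardware. Note how each in-loop load is reused as msq in the next iteration, which is what the phi node provides.

```c
#include <assert.h>

#define VS 4  /* elements per vector (assumed) */

/* "floor" load: the aligned VS-element chunk that contains element IDX.  */
static void floor_load (const int *base, int idx, int out[VS])
{
  int start = idx - idx % VS;
  for (int i = 0; i < VS; i++)
    out[i] = base[start + i];
}

/* realign_load: drop the first SHIFT elements of MSQ, fill from LSQ.  */
static void realign_load (const int msq[VS], const int lsq[VS], int shift,
                          int out[VS])
{
  for (int i = 0; i < VS; i++)
    out[i] = shift + i < VS ? msq[shift + i] : lsq[shift + i - VS];
}

/* Copy NVEC vectors starting at the possibly misaligned index START into
   DST.  BASE must be readable up to the end of the aligned chunk that
   follows the last element copied.  */
static void copy_realigned (const int *base, int start, int nvec, int *dst)
{
  int token = start % VS;            /* stand-in for realignment_token */
  int msq[VS], lsq[VS];
  assert (start >= 0 && nvec >= 0);
  floor_load (base, start, msq);     /* prolog load: msq_init */
  for (int k = 0; k < nvec; k++)
    {
      floor_load (base, start + (k + 1) * VS, lsq);   /* load in loop */
      realign_load (msq, lsq, token, &dst[k * VS]);
      for (int i = 0; i < VS; i++)                    /* msq = phi (msq_init, lsq) */
        msq[i] = lsq[i];
    }
}
```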

Function vectorizable_condition.

Check if STMT is a conditional modify expression that can be vectorized. If VEC_STMT is also passed, vectorize the STMT: create a vectorized stmt using VEC_COND_EXPR to replace it, put it in VEC_STMT, and insert it at GSI.

When STMT is vectorized as a nested cycle, REDUC_DEF is the vector variable to be used at REDUC_INDEX (in the then clause if REDUC_INDEX is 1, and in the else clause if it is 2).

Return FALSE if not a vectorizable STMT, TRUE otherwise.

References build_nonstandard_integer_type(), condition_vec_info_type, dump_enabled_p(), dump_printf_loc(), expand_vec_cond_expr_p(), get_same_sized_vectype(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs3(), gimple_assign_rhs_code(), gimple_assign_set_lhs(), is_gimple_assign(), make_ssa_name(), vect_create_destination_var(), vect_finish_stmt_generation(), vect_get_slp_defs(), vect_get_vec_def_for_operand(), vect_get_vec_def_for_stmt_copy(), vect_internal_def, vect_is_simple_cond(), vect_is_simple_use(), vect_location, vect_nested_cycle, vinfo_for_stmt(), and vNULL.

Referenced by vect_analyze_stmt(), vect_transform_stmt(), and vectorizable_reduction().
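For a scalar statement such as `x = a < b ? c : d`, the vectorized form selects lanes with a mask. The sketch below uses GCC's vector extension (also accepted by Clang), where comparing two vectors yields -1 in lanes where the comparison holds and 0 elsewhere. vec_cond_lt is a hypothetical name, and the bitwise select only illustrates the semantics of VEC_COND_EXPR, not how GCC expands it for a given target.

```c
#include <assert.h>

typedef int v4si __attribute__ ((vector_size (16)));  /* 4 x int */

/* Lane-wise r[k] = a[k] < b[k] ? c[k] : d[k].  */
static v4si vec_cond_lt (v4si a, v4si b, v4si c, v4si d)
{
  v4si mask = a < b;              /* -1 where a < b holds, 0 elsewhere */
  return (mask & c) | (~mask & d);
}
```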

Function vectorizable_reduction.

Check if STMT performs a reduction operation that can be vectorized. If VEC_STMT is also passed, vectorize the STMT: create a vectorized stmt to replace it, put it in VEC_STMT, and insert it at GSI. Return FALSE if not a vectorizable STMT, TRUE otherwise.

This function also handles reduction idioms (patterns) that have been recognized in advance during vect_pattern_recog. In this case, STMT may be of this form:

    X = pattern_expr (arg0, arg1, ..., X)

and its STMT_VINFO_RELATED_STMT points to the last stmt in the original sequence that had been detected and replaced by the pattern-stmt (STMT).

In some cases of reduction patterns, the type of the reduction variable X is different from the type of the other arguments of STMT. In such cases, the vectype that is used when transforming STMT into a vector stmt is different from the vectype that is used to determine the vectorization factor, because it consists of a different number of elements than the actual number of elements that are being operated upon in parallel.

For example, consider an accumulation of shorts into an int accumulator. On some targets it's possible to vectorize this pattern operating on 8 shorts at a time (hence, the vectype for purposes of determining the vectorization factor should be V8HI); on the other hand, the vectype that is used to create the vector form is actually V4SI (the type of the result).

Upon entry to this function, STMT_VINFO_VECTYPE records the vectype that indicates the actual level of parallelism (V8HI in the example), so that the right vectorization factor would be derived. This vectype corresponds to the type of arguments to the reduction stmt, and should *NOT* be used to create the vectorized stmt. The right vectype for the vectorized stmt is obtained from the type of the result X:

    get_vectype_for_scalar_type (TREE_TYPE (X))

This means that, contrary to "regular" reductions (or "regular" stmts in general), the following equation:

    STMT_VINFO_VECTYPE == get_vectype_for_scalar_type (TREE_TYPE (X))

does *NOT* necessarily hold for reduction patterns.

References binary_op, create_phi_node(), dump_enabled_p(), dump_printf(), dump_printf_loc(), flow_bb_inside_loop_p(), get_gimple_rhs_class(), gimple_assign_lhs(), gimple_assign_rhs1(), gimple_assign_rhs2(), gimple_assign_rhs3(), gimple_assign_rhs_code(), gimple_assign_set_lhs(), gimple_bb(), GIMPLE_BINARY_RHS, GIMPLE_SINGLE_RHS, GIMPLE_TERNARY_RHS, GIMPLE_UNARY_RHS, loop::header, loop::inner, is_gimple_assign(), basic_block_def::loop_father, loop_preheader_edge(), make_ssa_name(), nested_in_vect_loop_p(), new_stmt_vec_info(), optab_default, optab_for_tree_code(), optab_handler(), phis, reduc_vec_info_type, reduction_code_for_scalar_code(), set_vinfo_for_stmt(), ternary_op, types_compatible_p(), vect_constant_def, vect_create_destination_var(), vect_create_epilog_for_reduction(), vect_double_reduction_def, vect_external_def, vect_finish_stmt_generation(), vect_get_vec_def_for_operand(), vect_get_vec_def_for_stmt_copy(), vect_get_vec_defs(), vect_induction_def, vect_internal_def, vect_is_simple_reduction(), vect_is_simple_use(), vect_is_simple_use_1(), vect_location, vect_min_worthwhile_factor(), vect_model_reduction_cost(), vect_nested_cycle, vect_reduction_def, vect_unused_in_scope, vect_used_in_outer, vectorizable_condition(), vinfo_for_stmt(), and vNULL.

Referenced by vect_analyze_stmt(), and vect_transform_stmt().

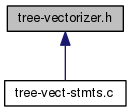

Source location

Vectorizer

Copyright (C) 2003-2013 Free Software Foundation, Inc. Contributed by Dorit Naishlos <dorit@il.ibm.com>

This file is part of GCC.

GCC is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3, or (at your option) any later version.

GCC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with GCC; see the file COPYING3. If not see http://www.gnu.org/licenses/.

Loop and basic block vectorizer.

This file contains drivers for the three vectorizers:

(1) loop vectorizer (inter-iteration parallelism),

(2) loop-aware SLP (intra-iteration parallelism) (invoked by the loop vectorizer)

(3) BB vectorizer (out-of-loops), aka SLP
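The three kinds of parallelism can be illustrated with small C kernels of the shape each driver targets. This is a sketch only: the function names are hypothetical, and whether GCC actually vectorizes a given kernel (and which driver handles it) depends on the GCC version, the target, and options such as -O3 or -ftree-vectorize.

```c
#include <assert.h>
#include <stddef.h>

/* (1) Loop vectorizer: the iterations are independent, so consecutive
   iterations can be packed into the lanes of one vector statement
   (inter-iteration parallelism).  */
void
add_arrays (int *restrict a, const int *restrict b,
            const int *restrict c, size_t n)
{
  /* restrict promises that A does not overlap B or C; this only checks
     the most obvious violation in debug builds.  */
  assert (a != b && a != c);
  for (size_t i = 0; i < n; i++)
    a[i] = b[i] + c[i];
}

/* (2) Loop-aware SLP: the two statements in each iteration are
   isomorphic and access adjacent elements, so they can be grouped into
   one vector operation (intra-iteration parallelism) inside the
   vectorized loop.  */
void
add_pairs (int *restrict a, const int *restrict b, size_t npairs)
{
  for (size_t i = 0; i < npairs; i++)
    {
      a[2 * i] = b[2 * i] + 1;
      a[2 * i + 1] = b[2 * i + 1] + 2;
    }
}

/* (3) BB vectorizer (SLP outside loops): straight-line, isomorphic
   statements on adjacent memory, with no loop at all.  */
void
add_four (int *restrict a, const int *restrict b, const int *restrict c)
{
  a[0] = b[0] + c[0];
  a[1] = b[1] + c[1];
  a[2] = b[2] + c[2];
  a[3] = b[3] + c[3];
}
```

The kernels compute the same results whether or not they are vectorized; only the generated code differs, which is why the vectorizer must prove independence (here helped by `restrict`) before packing statements together.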

The rest of the vectorizer's code is organized as follows:

- tree-vect-loop.c - loop-specific parts such as reductions, etc. These are used by drivers (1) and (2).

- tree-vect-loop-manip.c - the vectorizer's loop control-flow utilities, used by drivers (1) and (2).

- tree-vect-slp.c - analysis and transformation specific to BB vectorization, used by drivers (2) and (3).

- tree-vect-stmts.c - statement analysis and transformation (used by all).

- tree-vect-data-refs.c - vectorizer-specific data-ref analysis and manipulation (used by all).

- tree-vect-patterns.c - detector of vectorizable code patterns (used by all).

Here's a poor attempt at illustrating that:

    tree-vectorizer.c:
    loop_vect()  loop_aware_slp()  slp_vect()
           |        /        \         /
           |       /          \       /
         tree-vect-loop.c  tree-vect-slp.c
            |  \       \  /       /   |
            |   \       \/       /    |
            |    \      /\      /     |
            |     \    /  \    /      |
      tree-vect-stmts.c  tree-vect-data-refs.c
                    \      /
              tree-vect-patterns.c

Loop or bb location.

Referenced by execute_vect_slp(), get_initial_def_for_induction(), increase_alignment(), process_use(), report_vect_op(), vect_analyze_data_ref_access(), vect_analyze_data_ref_accesses(), vect_analyze_data_ref_dependence(), vect_analyze_data_ref_dependences(), vect_analyze_data_refs(), vect_analyze_data_refs_alignment(), vect_analyze_group_access(), vect_analyze_loop(), vect_analyze_loop_1(), vect_analyze_loop_2(), vect_analyze_loop_form(), vect_analyze_loop_operations(), vect_analyze_scalar_cycles_1(), vect_analyze_slp(), vect_analyze_slp_instance(), vect_analyze_stmt(), vect_bb_vectorization_profitable_p(), vect_build_slp_tree_1(), vect_can_advance_ivs_p(), vect_compute_data_ref_alignment(), vect_create_addr_base_for_vector_ref(), vect_create_cond_for_alias_checks(), vect_create_data_ref_ptr(), vect_create_epilog_for_reduction(), vect_detect_hybrid_slp(), vect_determine_vectorization_factor(), vect_do_peeling_for_alignment(), vect_do_peeling_for_loop_bound(), vect_enhance_data_refs_alignment(), vect_estimate_min_profitable_iters(), vect_find_same_alignment_drs(), vect_finish_stmt_generation(), vect_gen_niters_for_prolog_loop(), vect_get_and_check_slp_defs(), vect_get_data_access_cost(), vect_get_known_peeling_cost(), vect_get_load_cost(), vect_get_loop_niters(), vect_get_mask_element(), vect_get_store_cost(), vect_get_vec_def_for_operand(), vect_grouped_load_supported(), vect_grouped_store_supported(), vect_init_vector_1(), vect_is_simple_iv_evolution(), vect_is_simple_reduction_1(), vect_is_simple_use(), vect_is_slp_reduction(), vect_lanes_optab_supported_p(), vect_loop_kill_debug_uses(), vect_make_slp_decision(), vect_mark_for_runtime_alias_test(), vect_mark_relevant(), vect_mark_stmts_to_be_vectorized(), vect_model_induction_cost(), vect_model_load_cost(), vect_model_promotion_demotion_cost(), vect_model_reduction_cost(), vect_model_simple_cost(), vect_model_store_cost(), vect_pattern_recog(), vect_pattern_recog_1(), vect_prune_runtime_alias_test_list(), 
vect_recog_bool_pattern(), vect_recog_divmod_pattern(), vect_recog_dot_prod_pattern(), vect_recog_mixed_size_cond_pattern(), vect_recog_over_widening_pattern(), vect_recog_rotate_pattern(), vect_recog_vector_vector_shift_pattern(), vect_recog_widen_mult_pattern(), vect_recog_widen_shift_pattern(), vect_recog_widen_sum_pattern(), vect_schedule_slp(), vect_schedule_slp_instance(), vect_slp_analyze_bb(), vect_slp_analyze_bb_1(), vect_slp_analyze_data_ref_dependence(), vect_slp_analyze_data_ref_dependences(), vect_slp_transform_bb(), vect_stmt_relevant_p(), vect_supported_load_permutation_p(), vect_transform_loop(), vect_transform_slp_perm_load(), vect_transform_stmt(), vect_update_inits_of_drs(), vect_update_ivs_after_vectorizer(), vect_update_misalignment_for_peel(), vect_update_slp_costs_according_to_vf(), vect_verify_datarefs_alignment(), vector_alignment_reachable_p(), vectorizable_assignment(), vectorizable_call(), vectorizable_condition(), vectorizable_conversion(), vectorizable_induction(), vectorizable_live_operation(), vectorizable_load(), vectorizable_operation(), vectorizable_reduction(), vectorizable_shift(), vectorizable_store(), and vectorize_loops().